✨ Voice simulation, Flexi evals, Adaptive load balancing, and more

🎙️ Feature spotlight

🤖 Voice simulation and evals are live on Maxim!

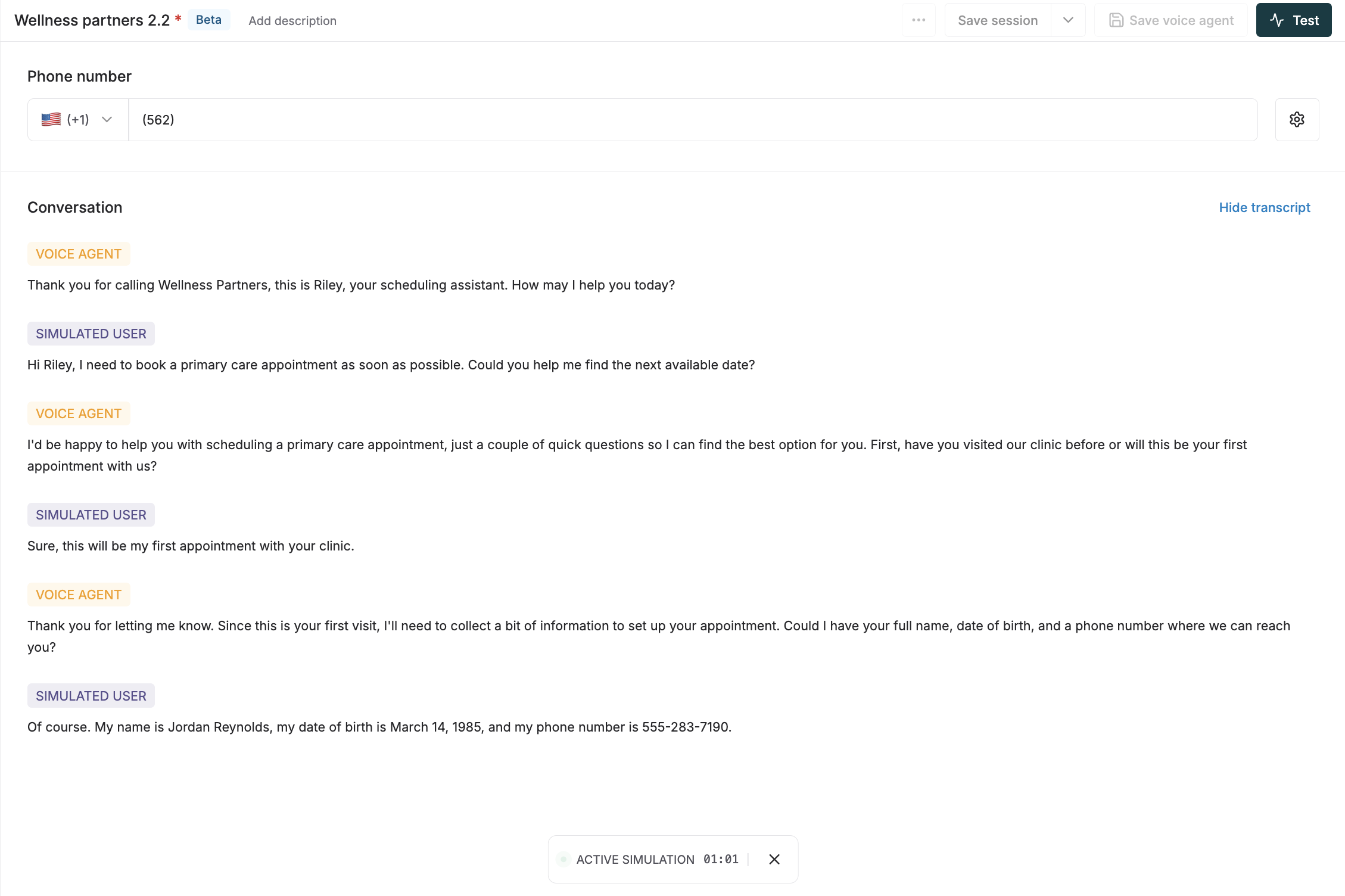

Teams can now simulate multi-turn conversations with their voice agents and monitor performance across hundreds of scenarios and user personas – in a fraction of the time and effort required for manual testing.

You can simply bring your voice agents onto the Maxim platform via phone number and interact with them through manual test calls or by running full-scale automated simulations powered by Maxim’s simulation agent. This enables realistic, scenario-driven testing that mirrors real-world customer interactions, resulting in improved coverage of edge cases and failure modes for your agents.

We’ve also introduced a comprehensive set of voice evals that measure key quality metrics, including AI interruptions, user satisfaction, sentiment, and signal-to-noise ratio. These evals can be applied to both simulated and manual interactions, as well as directly to session recordings, giving teams deep, actionable insights into their voice agent’s performance.

⚙️ Flexi evals

We’ve made evaluations on Maxim logs fully configurable. Instead of being limited to predefined parameters such as input, output, and retrieval, you can now decide exactly which value in your trace or session should serve as the “input,” “output,” or any other field for your evaluators. Key highlights of Flexi evals:

- Custom mapping: Configure any element of a trace/session to serve as evaluator fields, such as inputs, outputs, etc.

- Programmatic flexibility: Create custom code blocks (in JS) to extract or combine fields and map them to any evaluator parameter. You can pull values from JSON, perform string manipulations, or apply validations to shape evaluations however you need.

This gives teams greater control over how evaluations are run on Maxim – allowing them to focus on specific areas of LLM interactions, eliminate noise from evaluation parameters, and generate more precise, actionable insights.
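As a rough illustration of the kind of mapping logic a custom code block might hold, here is a minimal sketch. On Maxim these blocks are written in JS; the Python below only shows the idea, and the trace shape, field names, and function name are all hypothetical rather than Maxim's actual schema.

```python
import json


def map_trace_to_evaluator_fields(trace: dict) -> dict:
    """Pick the values an evaluator should see from a trace-like dict.

    The trace layout used here is an assumption made for illustration.
    """
    # Use the most recent user message as the evaluator "input".
    user_messages = [m["content"] for m in trace["messages"] if m["role"] == "user"]

    # The tool output is stored as a JSON string: parse it and keep one field.
    tool_payload = json.loads(trace["tool_output"])
    answer = tool_payload.get("answer", "").strip()

    # Simple validation so that empty outputs are never evaluated.
    if not answer:
        raise ValueError("trace has no answer to evaluate")

    return {
        "input": user_messages[-1],
        "output": answer,
        # Combine several retrieved chunks into a single "context" field.
        "context": " ".join(trace.get("retrieved_chunks", [])),
    }


example_trace = {
    "messages": [
        {"role": "system", "content": "You are a support agent."},
        {"role": "user", "content": "Where is my order?"},
    ],
    "tool_output": '{"answer": "  It ships tomorrow. ", "debug": "ignored"}',
    "retrieved_chunks": ["Orders ship in 2 days.", "Tracking is emailed."],
}
fields = map_trace_to_evaluator_fields(example_trace)
```

Only the three mapped values reach the evaluator here; the system prompt and the `debug` field are left out, which is the "eliminate noise" idea described above.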

🗂️ Workspace duplication

Teams can now duplicate an entire Maxim workspace, making it easier to set up new workspaces by reusing the workflows and assets of an existing one. Key highlights:

- What’s duplicated: Prompts, agents (via HTTP endpoint), voice agents, and no-code agents are duplicated along with their session and version history. Prompt tools, datasets, prompt partials, and evaluators can also be duplicated.

- What’s not duplicated: Log repositories, context sources, evaluation runs, and dashboards.

- Access control: You can decide whether the users of the original workspace should also gain access to the duplicated one.

This gives you full flexibility to select which components you want to carry over into the new workspace.

📈 Custom metric support

We’ve introduced custom metric support, giving teams full flexibility to log and track the KPIs that matter most beyond the default metrics that are already logged. You can now push any metric as part of your traces, generations, or retrievals via the Maxim SDK. These metrics can be plotted on Maxim’s built-in or custom dashboards, used in evaluators, and even tied to alerts – providing instant visibility into the signals that matter.

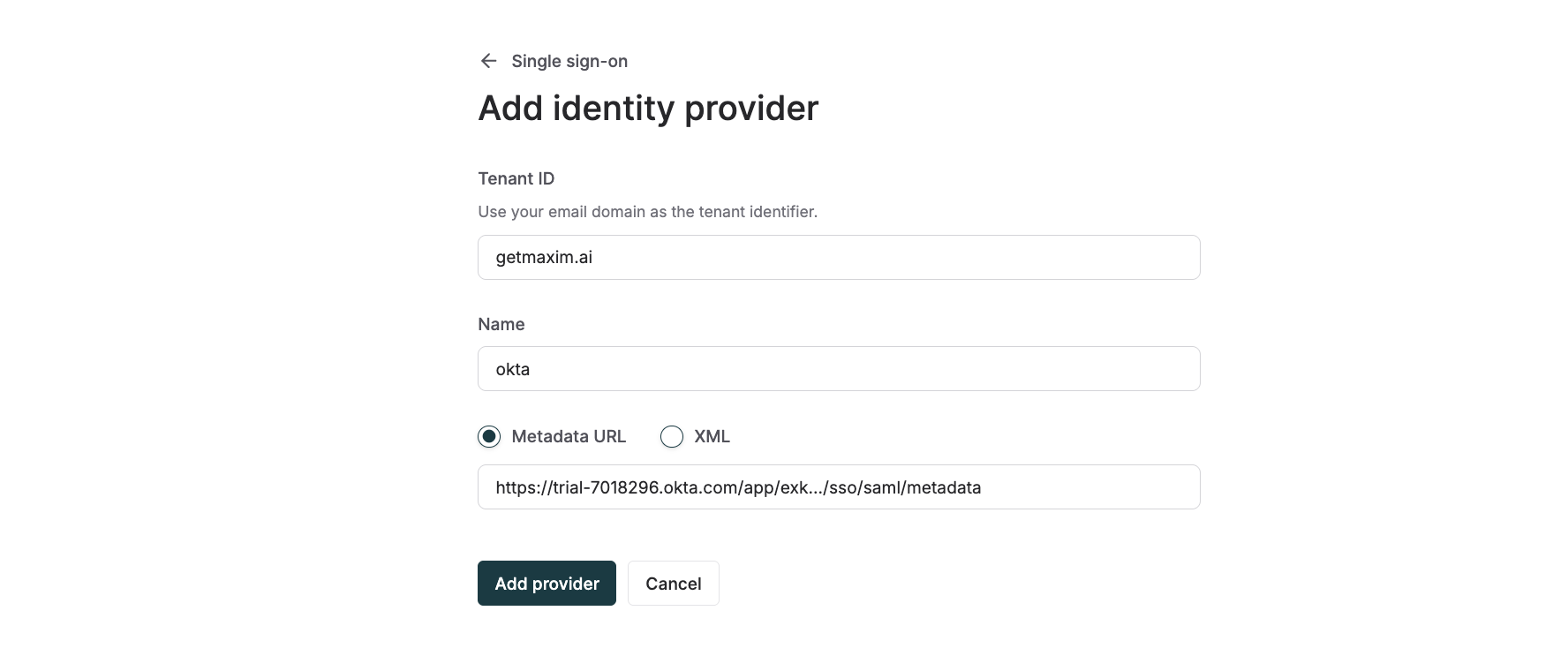
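To make the idea concrete, here is a minimal sketch of attaching custom metrics to a trace-like record and checking them against an alert threshold. It does not use the Maxim SDK: `TraceRecord`, `add_metric`, `breaching_traces`, and the metric names are hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TraceRecord:
    """Hypothetical stand-in for a trace that carries custom metrics."""
    trace_id: str
    metrics: dict = field(default_factory=dict)

    def add_metric(self, name: str, value: float) -> None:
        # Store each metric as a float keyed by its name.
        self.metrics[name] = float(value)


def breaching_traces(records, metric_name: str, threshold: float) -> list:
    """Return ids of records whose metric value exceeds the threshold."""
    return [
        r.trace_id
        for r in records
        if metric_name in r.metrics and r.metrics[metric_name] > threshold
    ]


fast = TraceRecord("trace-1")
fast.add_metric("time_to_first_token_ms", 180)
slow = TraceRecord("trace-2")
slow.add_metric("time_to_first_token_ms", 950)

# An alert rule in this sketch is a metric name plus a threshold.
alerts = breaching_traces([fast, slow], "time_to_first_token_ms", 500)
```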

👨‍💻 SAML-based Single Sign-On (SSO)

We’ve added support for SAML-based Single Sign-On (SSO) in Maxim, starting with integrations for Okta and Google Workspace. This enables teams to connect Maxim to their Identity Provider (IdP) and manage access centrally. Users who are granted access to Maxim within Okta or Google Workspace can log in through SSO, which keeps onboarding to the platform secure and simple.

🚀 Added support for new models and providers

OpenAI's GPT-5 is now available on Maxim. Use the latest GPT-5 model, offering stronger reasoning, enhanced multi-turn dialogue, expanded context, and multimodal support to power your experimentation and evaluation workflows.

Maxim now supports two more providers – OpenRouter and Cerebras. OpenRouter gives you the flexibility to connect with a wide range of popular open-source and hosted models, while Cerebras enables running large-scale models with low latency and efficient compute.

⚡ Meet Bifrost!

Built for speed and scale, Bifrost is the fastest open-source LLM gateway. Here are some of its key features:

⚖️ Dynamic load balancing and clustering support

Bifrost supports dynamic load balancing across keys and clusters, optimized for the lowest latency and minimal errors. Traffic distribution is dynamically managed based on latency, error rate, TPM limits, and fatal errors (5xx). Our starvation algorithm prevents overuse of any single key, while recovery logic ensures underperforming keys are reintroduced once stable. No need to define fallback setup manually – Bifrost handles it all dynamically, penalizing weaker keys in favor of better-performing ones.
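One way to picture this kind of key selection is the sketch below. It is not Bifrost's actual algorithm: the scoring formula, the `error_penalty` value, and the names `KeyStats`, `key_weight`, and `pick_key` are assumptions made for illustration. Keys that have hit their TPM limit or are cooling down after a fatal error get zero weight, and the remaining keys are sampled in proportion to a latency and error-rate score.

```python
import random
from dataclasses import dataclass


@dataclass
class KeyStats:
    name: str
    avg_latency_ms: float       # must be > 0
    error_rate: float           # fraction of failed requests, 0.0 to 1.0
    tpm_used: int
    tpm_limit: int
    cooling_down: bool = False  # set after a fatal (5xx) error until the key recovers


def key_weight(key: KeyStats, error_penalty: float = 10.0) -> float:
    """Higher weight means the key should receive more traffic."""
    if key.cooling_down or key.tpm_used >= key.tpm_limit:
        return 0.0
    # Lower latency and lower error rate both raise the weight.
    return 1.0 / (key.avg_latency_ms * (1.0 + error_penalty * key.error_rate))


def pick_key(keys, rng: random.Random) -> KeyStats:
    """Sample a key in proportion to its weight."""
    weights = [key_weight(k) for k in keys]
    if sum(weights) == 0:
        raise RuntimeError("no healthy key available")
    return rng.choices(keys, weights=weights, k=1)[0]


keys = [
    KeyStats("fast", avg_latency_ms=200, error_rate=0.0, tpm_used=1_000, tpm_limit=10_000),
    KeyStats("flaky", avg_latency_ms=400, error_rate=0.1, tpm_used=1_000, tpm_limit=10_000),
    KeyStats("exhausted", avg_latency_ms=100, error_rate=0.0, tpm_used=10_000, tpm_limit=10_000),
]
chosen = pick_key(keys, random.Random(0))
```

Because selection is weighted rather than winner-takes-all, the slower key still receives some traffic, so its statistics stay fresh and it can regain weight once it stabilizes. Clearing `cooling_down` after a recovery period reintroduces a key in the same way.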

🧠 Semantic caching

Bifrost supports a fully configurable semantic caching plugin to cut costs on your most frequently asked queries. Backed by TTL-based cache control, it currently integrates with Weaviate and RediSearch (Pinecone support coming soon). Best of all, there’s no token size limit for embeddings, giving you full flexibility to cache and reuse even large documents without truncation or loss of context.
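The general technique can be sketched in a few lines. This is an in-memory illustration, not Bifrost's plugin: the `SemanticCache` class, the 0.9 similarity threshold, and the tiny hand-written vectors are assumptions, and a real deployment would get embeddings from a model and store them in a vector store such as Weaviate or RediSearch.

```python
import math
import time


def cosine(a, b) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.9, ttl_seconds=300.0, clock=time.monotonic):
        self.threshold = threshold
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries = []  # list of (embedding, response, expires_at)

    def put(self, embedding, response) -> None:
        self.entries.append((embedding, response, self.clock() + self.ttl))

    def get(self, embedding):
        now = self.clock()
        # TTL-based control: drop entries whose lifetime has elapsed.
        self.entries = [e for e in self.entries if e[2] > now]
        # Find the stored query that is closest in meaning to the new one.
        best = max(self.entries, key=lambda e: cosine(e[0], embedding), default=None)
        if best is not None and cosine(best[0], embedding) >= self.threshold:
            return best[1]
        return None  # cache miss: the caller falls through to the LLM


cache = SemanticCache(threshold=0.9, ttl_seconds=300.0)
cache.put([1.0, 0.0], "Our refund window is 30 days.")
hit = cache.get([0.99, 0.05])   # a near-identical query vector
miss = cache.get([0.0, 1.0])    # an unrelated query vector
```

A lower threshold raises the hit rate at the cost of occasionally returning an answer to a question that only looks similar, so the threshold and TTL are the two settings worth tuning first.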

🛡️ Governance

Bifrost provides fine-grained governance to help teams control and monitor costs, TPM, total requests, and model access at the user, team, or customer level. You can define policies, set budgets, and maintain a complete audit log of team activity – ensuring tighter control over usage, spending, and compliance.
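A budget and model-access check of this kind can be sketched as follows. This is a simplified illustration rather than Bifrost's implementation or configuration format: `Policy`, `Governor`, `authorize`, the team name, and the dollar amounts are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    budget_usd: float
    allowed_models: set
    spent_usd: float = 0.0


@dataclass
class Governor:
    policies: dict = field(default_factory=dict)   # team name -> Policy
    audit_log: list = field(default_factory=list)  # one entry per decision

    def authorize(self, team: str, model: str, cost_usd: float) -> bool:
        policy = self.policies.get(team)
        allowed = (
            policy is not None
            and model in policy.allowed_models
            and policy.spent_usd + cost_usd <= policy.budget_usd
        )
        if allowed:
            # Only approved requests count against the budget.
            policy.spent_usd += cost_usd
        # Every decision is recorded, whether approved or denied.
        self.audit_log.append((team, model, cost_usd, allowed))
        return allowed


governor = Governor({"search-team": Policy(budget_usd=1.0, allowed_models={"gpt-5"})})
first = governor.authorize("search-team", "gpt-5", 0.6)       # within budget
second = governor.authorize("search-team", "gpt-5", 0.6)      # would exceed budget
third = governor.authorize("search-team", "other-model", 0.1)  # model not allowed
```

The same pattern extends to per-user or per-customer policies by changing what the policy key represents, and to TPM or request-count limits by adding further counters to `Policy`.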

🎁 Upcoming releases

🔍 Insights on logs

We’re introducing Insights to Maxim logs, making it easy to analyze user queries and model generations without manually combing through raw logs. With Insights, you can quickly see which queries are asked most often, which generations vary the most, and other key patterns, saving hours of manual effort.

📣 Google Cloud Marketplace x Maxim AI

We’re excited to share that Maxim is now available on the Google Cloud Marketplace. This makes it even easier for our customers, especially enterprises already on Google Cloud, to integrate Maxim into their AI development workflows.

Through the Marketplace, customers gain access to Maxim’s powerful simulation, evaluation, and observability infrastructure to ship reliable AI applications with the speed and quality required for real-world use – while benefitting from centralized GCP billing. For customers who prefer full control over their data, the Maxim platform is also available as a self-hosted deployment within their own Google Cloud environment.

Check out Maxim on Google Cloud Marketplace.