Logging and observability overhaul, MCP gateway, Evals on file attachments, and more

🎙️ Feature spotlight

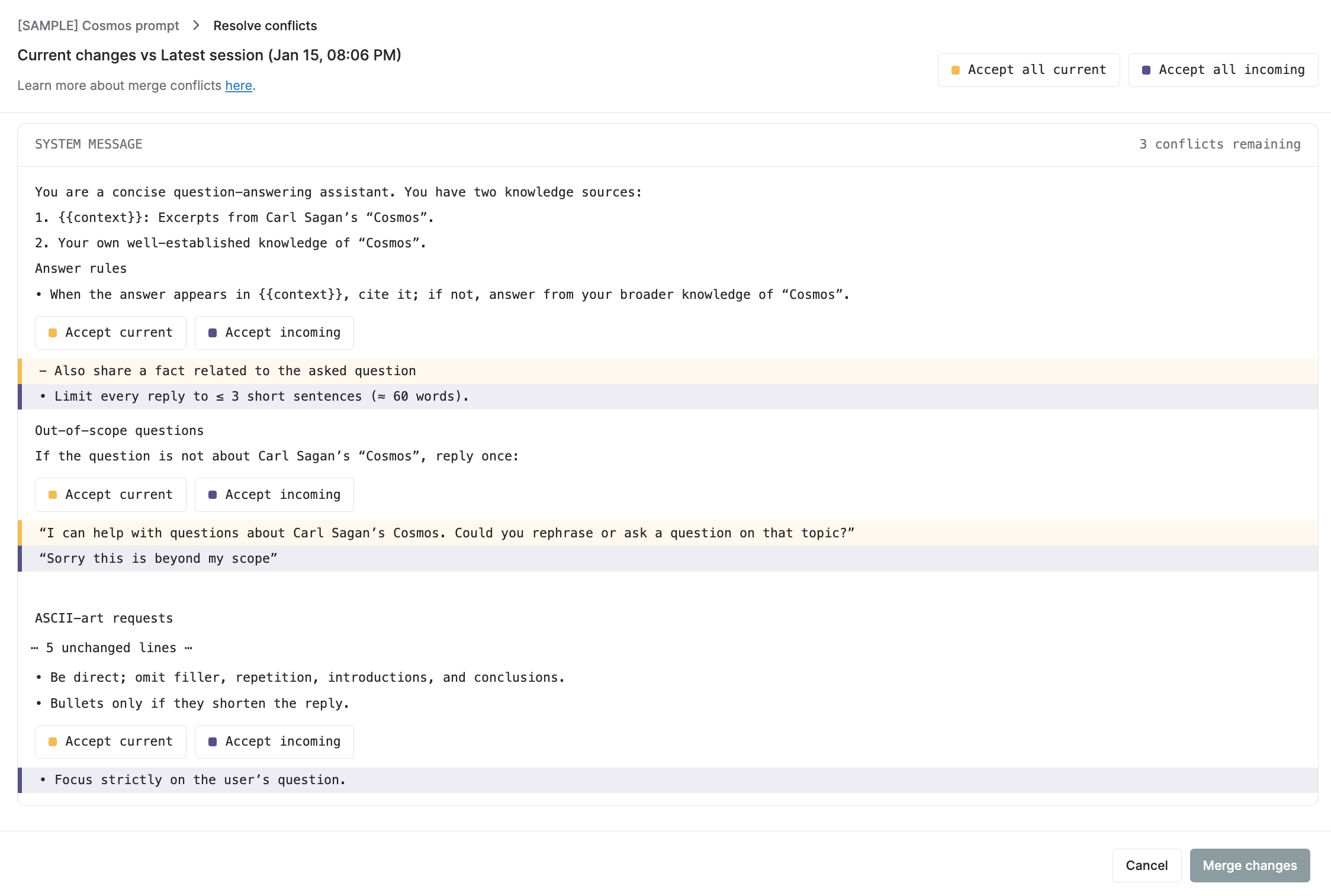

🔀 Collaborative conflict resolution for Prompt changes

To help teams collaborate on prompts without accidentally overwriting each other’s work, we’ve introduced session conflict resolution in the prompt playground. Here’s what’s new:

- You’ll now land on your last active session instead of the prompt’s latest session.

- If there are newer sessions with changes that differ from yours, you’ll see an indicator next to the prompt name in the header, allowing you to review and merge updates.

- The Merge Changes page allows you to inspect each conflicting change and decide whether to keep your version or accept incoming updates.

Conflicts can be resolved immediately or deferred until a prompt version is published, ensuring the final prompt includes all relevant changes from you and your teammates. (Read docs)
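Conflict detection like this can be modeled as a three-way merge. Each session is compared with the version both sessions started from. Edits made on only one side are applied automatically, and only fields changed on both sides need a decision. The sketch below illustrates that general idea. The field-level prompt model and the `resolve` callback are hypothetical, and this is not Maxim's implementation.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical prompt model: field name -> value (e.g. "system", "temperature").
Prompt = Dict[str, str]
# resolve(field, my_value, their_value) -> chosen value (None drops the field).
Resolver = Callable[[str, Optional[str], Optional[str]], Optional[str]]


def find_conflicts(base: Prompt, mine: Prompt, theirs: Prompt) -> List[str]:
    """Fields that both sessions changed relative to base, to different values."""
    keys = set(base) | set(mine) | set(theirs)
    return sorted(
        k for k in keys
        if mine.get(k) != base.get(k)
        and theirs.get(k) != base.get(k)
        and mine.get(k) != theirs.get(k)
    )


def merge(base: Prompt, mine: Prompt, theirs: Prompt, resolve: Resolver) -> Prompt:
    """Three-way merge: one-sided edits are applied automatically,
    and only true conflicts are handed to `resolve`."""
    conflicts = set(find_conflicts(base, mine, theirs))
    merged: Prompt = {}
    for key in set(base) | set(mine) | set(theirs):
        if key in conflicts:
            value = resolve(key, mine.get(key), theirs.get(key))
        elif mine.get(key) != base.get(key):
            value = mine.get(key)    # my session changed it (theirs didn't, or made the same change)
        else:
            value = theirs.get(key)  # I left it untouched, so take the incoming value
        if value is not None:        # None means the field was deleted
            merged[key] = value
    return merged


base = {"system": "You are helpful.", "temperature": "0.7"}
mine = {"system": "You are concise.", "temperature": "0.7"}
theirs = {"system": "You are friendly.", "temperature": "0.2"}

print(find_conflicts(base, mine, theirs))  # ['system']
print(merge(base, mine, theirs, lambda field, m, t: m))  # keep my version of each conflict
```

In this example, the teammate's temperature change merges cleanly. Only the system message needs an explicit keep-or-accept decision.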

📈 Logging and observability infrastructure overhaul

We’ve made significant improvements to the core infrastructure behind logging and observability, removing major latency bottlenecks.

With the new log ingestion system, ingestion is substantially faster and more stable. It eliminates backlogs during high-throughput spikes, even when logs arrive out of order. Logs are now available for analysis much sooner, including during peak traffic.
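Out-of-order arrival is a classic stream-processing problem. One common technique is a reorder buffer with a watermark. Events are held briefly and then released in timestamp order once the newest timestamp seen is more than an assumed `allowed_lateness` ahead of them. The sketch below is a generic illustration of that technique, not a description of Maxim's pipeline.

```python
import heapq
from typing import List, Tuple


class ReorderBuffer:
    """Buffers (timestamp, payload) events and emits them in timestamp order
    once they fall behind the watermark: max timestamp seen - allowed_lateness."""

    def __init__(self, allowed_lateness: float) -> None:
        self.allowed_lateness = allowed_lateness
        self._heap: List[Tuple[float, int, str]] = []
        self._max_seen = float("-inf")
        self._seq = 0  # tie-breaker so equal timestamps keep arrival order

    def push(self, ts: float, payload: str) -> List[Tuple[float, str]]:
        heapq.heappush(self._heap, (ts, self._seq, payload))
        self._seq += 1
        self._max_seen = max(self._max_seen, ts)
        return self._release(self._max_seen - self.allowed_lateness)

    def flush(self) -> List[Tuple[float, str]]:
        """Emit everything still buffered (e.g. at shutdown)."""
        return self._release(float("inf"))

    def _release(self, watermark: float) -> List[Tuple[float, str]]:
        out: List[Tuple[float, str]] = []
        while self._heap and self._heap[0][0] <= watermark:
            ts, _, payload = heapq.heappop(self._heap)
            out.append((ts, payload))
        return out


buf = ReorderBuffer(allowed_lateness=2.0)
emitted: List[Tuple[float, str]] = []
for ts, payload in [(1.0, "a"), (3.0, "c"), (2.0, "b"), (6.0, "d")]:  # arrives out of order
    emitted.extend(buf.push(ts, payload))
emitted.extend(buf.flush())
print([p for _, p in emitted])  # ['a', 'b', 'c', 'd']
```

An event that arrives later than `allowed_lateness` would be emitted after newer ones. The bound is therefore a trade-off between latency and strict ordering.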

We’ve also improved how the system scales and processes work under heavy load. Now, log processing scales automatically with demand and isolates workloads from one another. The result is more consistent processing times, better reliability at scale, and no impact from one customer’s heavy load on another – keeping your observability data timely and dependable as usage grows.
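Workload isolation between customers is often achieved with per-tenant queues served round-robin. With this approach, a tenant with a large backlog cannot starve the others. The sketch below shows that generic technique with hypothetical tenant names. It does not describe Maxim's actual scheduling or autoscaling.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class FairScheduler:
    """Per-tenant FIFO queues, served one job per tenant in round-robin order."""

    def __init__(self) -> None:
        self._queues: Dict[str, Deque[str]] = {}
        self._ring: Deque[str] = deque()  # tenants with pending work, in service order

    def submit(self, tenant: str, job: str) -> None:
        if tenant not in self._queues:
            self._queues[tenant] = deque()
            self._ring.append(tenant)
        self._queues[tenant].append(job)

    def next_job(self) -> Optional[Tuple[str, str]]:
        if not self._ring:
            return None
        tenant = self._ring.popleft()
        queue = self._queues[tenant]
        job = queue.popleft()
        if queue:
            self._ring.append(tenant)  # still has work: go to the back of the line
        else:
            del self._queues[tenant]   # drained: drop until it submits again
        return tenant, job


sched = FairScheduler()
for i in range(100):
    sched.submit("acme", f"acme-{i}")  # heavy tenant with a large backlog
sched.submit("globex", "globex-0")     # light tenant arrives afterwards
first_three = [sched.next_job() for _ in range(3)]
print(first_three)  # [('acme', 'acme-0'), ('globex', 'globex-0'), ('acme', 'acme-1')]
```

The light tenant's job runs second rather than 101st, which is the isolation property the paragraph above describes.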

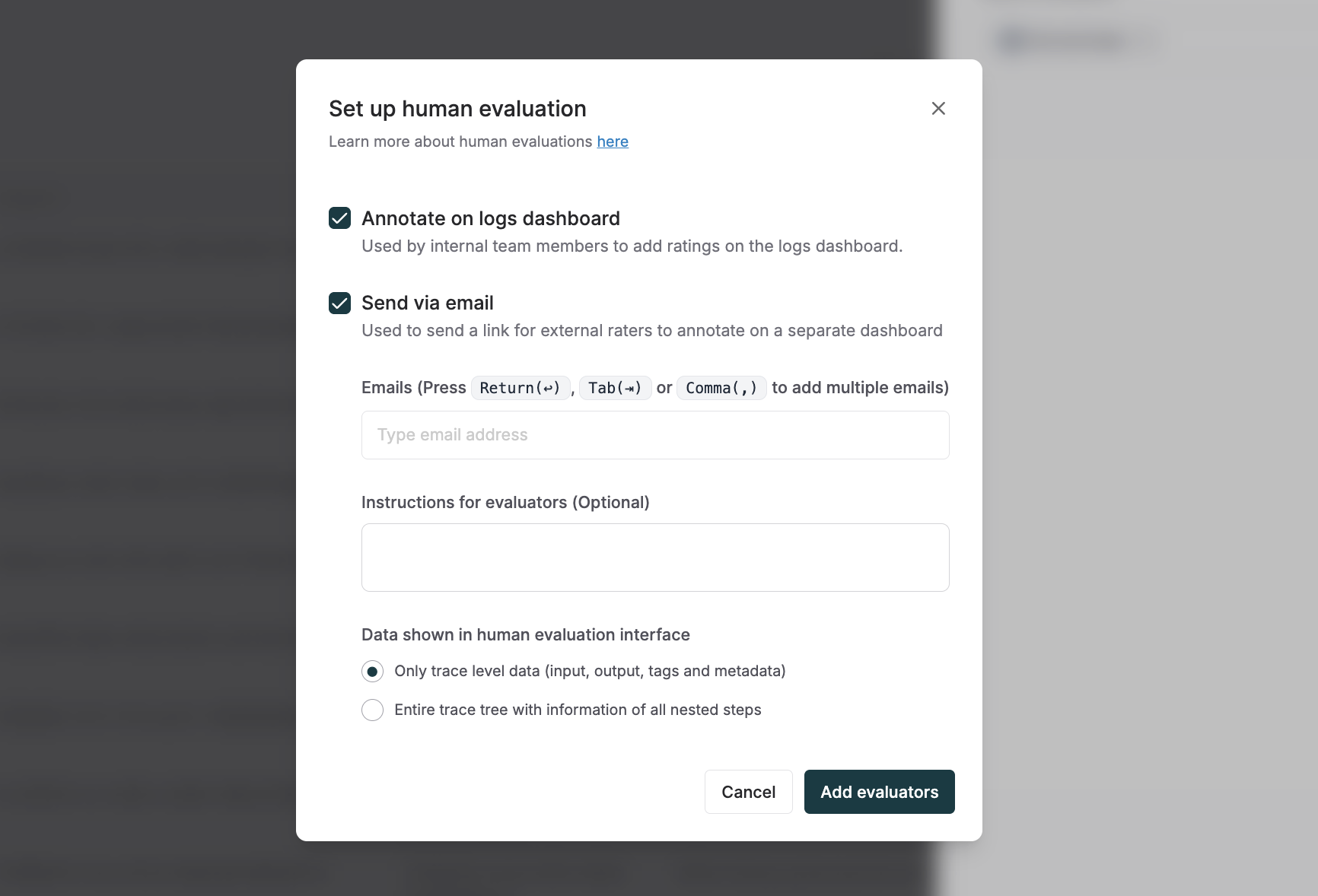

🧑‍⚖️ External human annotation of logs

You can now send production logs to external human raters via email for annotation. With this release, you can filter and select specific traces or sessions from production, attach evaluators, and route them to external domain experts for review.

In the dedicated external annotator dashboard, raters get a focused interface for adding scores, comments, and rewritten outputs, while teams control whether to share full traces or only top-level inputs and outputs. All annotations flow back into Maxim, making it easy to analyze human feedback, scale human-in-the-loop evaluation on real production data, and reuse annotated logs for test data curation and agent improvement.
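Choosing between full traces and top-level inputs and outputs amounts to deciding which fields leave your workspace. The sketch below models that choice with a hypothetical trace dictionary. It also uses a hypothetical `Annotation` record for the scores, comments, and rewritten outputs that raters send back. Neither is Maxim's data model or API.

```python
import copy
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class Annotation:
    """Hypothetical record of what an external rater returns."""
    trace_id: str
    rater_email: str
    score: int
    comment: str = ""
    rewritten_output: Optional[str] = None


def rater_view(trace: Dict[str, Any], share_full_trace: bool) -> Dict[str, Any]:
    """Return the part of a trace an external rater is allowed to see."""
    if share_full_trace:
        return copy.deepcopy(trace)  # copy so the shared view can't alter the source
    # Top-level I/O only: nested spans (retrievals, tool calls, ...) are withheld.
    return {k: trace[k] for k in ("id", "input", "output")}


trace = {
    "id": "t-1",
    "input": "Where is my order?",
    "output": "It ships tomorrow.",
    "spans": [{"name": "db_lookup", "output": "status=packed"}],
}
view = rater_view(trace, share_full_trace=False)
ann = Annotation(trace_id=view["id"], rater_email="expert@example.com", score=3,
                 rewritten_output="Your order is packed and ships tomorrow.")
print(sorted(view))  # ['id', 'input', 'output']
```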

📎 Run evaluations on attachments

You can now run evals directly on file attachments across Maxim. This extends evaluator capabilities beyond text-only parameters (inputs, outputs, etc.), allowing evaluators to consume raw attachments – such as PDFs, images, or audio files – as part of the evaluation input, enabling multimodal evaluation workflows.

This lets you validate outputs such as summaries against their source PDFs, or evaluate classifications generated from image inputs, using the corresponding files in production logs or test datasets, without additional extraction or preprocessing steps.
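To make the idea concrete, here is a sketch of an LLM-as-judge evaluator that passes a raw image attachment to the judge model rather than a text description of it. `Attachment` and `judge_messages` are hypothetical names, not Maxim's evaluator interface. The message layout follows the OpenAI Chat Completions convention for image content parts: an `image_url` part carrying a base64 data URL. Only images are handled in this sketch.

```python
import base64
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class Attachment:
    """Hypothetical: a raw file attached to a log entry or dataset row."""
    name: str
    mime_type: str
    data: bytes


def judge_messages(output: str, attachments: List[Attachment]) -> List[Dict[str, Any]]:
    """Build a judge request that grades `output` against the raw image files."""
    parts: List[Dict[str, Any]] = [{
        "type": "text",
        "text": "Does this classification match the attached image? "
                f"Answer PASS or FAIL.\nClassification: {output}",
    }]
    for att in attachments:
        if not att.mime_type.startswith("image/"):
            raise ValueError(f"unsupported attachment type in this sketch: {att.mime_type}")
        b64 = base64.b64encode(att.data).decode("ascii")
        parts.append({"type": "image_url",
                      "image_url": {"url": f"data:{att.mime_type};base64,{b64}"}})
    return [{"role": "user", "content": parts}]


msgs = judge_messages("cat", [Attachment("photo.png", "image/png", b"abc")])
print(msgs[0]["content"][1]["image_url"]["url"])  # data:image/png;base64,YWJj
```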

⚡ Bifrost: The fastest LLM gateway

🔌 MCP Gateway

We are extending Bifrost into a unified LLM + MCP gateway that lets you connect and manage all your MCP servers in one place. Instead of configuring multiple MCP endpoints, you can route traffic through a single Bifrost MCP Gateway URL, secured with virtual key authentication, making it easy to govern, monitor, and control requests across 15+ connected MCP servers from one control plane. This single endpoint can be integrated with LLM tools and IDEs (such as Cursor) to simplify MCP adoption at scale.
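MCP clients talk to servers with JSON-RPC 2.0 messages such as `tools/list` and `tools/call`. Pointing a client at a gateway therefore mostly means changing the URL and adding credentials. The sketch below builds such requests with Python's standard library but does not send them. The gateway URL, the virtual key value, the `search_issues` tool name, and passing the key as a Bearer token are all assumptions for illustration. Check the Bifrost docs for the actual endpoint and header.

```python
import itertools
import json
import urllib.request
from typing import Any, Dict

GATEWAY_URL = "http://localhost:8080/mcp"  # hypothetical gateway endpoint
VIRTUAL_KEY = "vk-example"                  # hypothetical virtual key

_ids = itertools.count(1)  # JSON-RPC request ids


def mcp_request(method: str, params: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 MCP request to the gateway."""
    body = {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
            "Authorization": f"Bearer {VIRTUAL_KEY}",  # assumed auth scheme
        },
    )


list_req = mcp_request("tools/list", {})
call_req = mcp_request("tools/call", {"name": "search_issues",  # hypothetical tool
                                      "arguments": {"query": "timeout"}})
```

A real MCP session also starts with an `initialize` handshake, omitted here for brevity. Every server behind the gateway is reached through this one URL and key.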

Use Code mode and Agent mode for more efficient and autonomous MCP workflows. Code mode allows AI to write TypeScript to orchestrate tools in a secure sandbox, exposing only a small set of generic tools and reducing token usage by 50%+ when working with multiple MCP servers. Agent mode enables automatic execution of configured tool calls without explicit execution APIs, evolving Bifrost from a gateway into an autonomous agent that can reason and act across connected MCP servers.
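Agent mode can be pictured as a tool-calling loop. Calls to pre-approved tools run automatically, and their results are fed back to the model until it produces an answer. The sketch below is a generic version of that loop with a stubbed model. `run_agent`, the turn format, and the `auto_execute` allowlist are hypothetical, and this is not Bifrost's implementation.

```python
from typing import Any, Callable, Dict, List, Set

# A model turn is either {"tool": name, "args": {...}} or {"final": text}.
Model = Callable[[List[Dict[str, Any]]], Dict[str, Any]]


def run_agent(model: Model, tools: Dict[str, Callable[..., Any]],
              auto_execute: Set[str], user_msg: str, max_steps: int = 5) -> Dict[str, Any]:
    history: List[Dict[str, Any]] = [{"role": "user", "content": user_msg}]
    for _ in range(max_steps):
        turn = model(history)
        if "final" in turn:
            return {"status": "done", "answer": turn["final"], "history": history}
        name = turn["tool"]
        if name not in auto_execute:
            # Not configured for auto-execution: hand the call back to the caller.
            return {"status": "needs_approval", "call": turn, "history": history}
        result = tools[name](**turn["args"])  # run the tool without an explicit execute call
        history.append({"role": "tool", "name": name, "content": result})
    return {"status": "max_steps", "history": history}


def fake_model(history: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Stub standing in for an LLM: call the tool once, then answer."""
    if history[-1]["role"] == "tool":
        return {"final": f"It is {history[-1]['content']} in Paris."}
    return {"tool": "get_weather", "args": {"city": "Paris"}}


tools = {"get_weather": lambda city: "sunny"}
result = run_agent(fake_model, tools, {"get_weather"}, "Weather in Paris?")
print(result["answer"])  # It is sunny in Paris.
```

The allowlist in this sketch keeps autonomy opt-in per tool. Any call outside it stops the loop for review.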

🖼️ Image generation support

We’ve added image generation support to Bifrost, enabling unified multimodal handling for image generation across major providers, including OpenAI, Azure, Gemini, Vertex, Nebius, HuggingFace, and xAI – all through Bifrost’s single, OpenAI-compatible API. Developers can now send vision-capable requests (such as image creation and analysis) alongside text and audio workflows, which simplifies multimodal application development and lets them switch providers without changing code.
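Because the API is OpenAI-compatible, an image request has the same shape as a call to OpenAI's `/v1/images/generations` endpoint. Switching providers should then only require a different model string. The sketch below builds such requests without sending them. The base URL and the `provider/model` naming are assumptions, and the Azure deployment name is a placeholder.

```python
import json
import urllib.request

BIFROST_BASE_URL = "http://localhost:8080"  # hypothetical local gateway address


def image_request(model: str, prompt: str, size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style image generation request."""
    body = {"model": model, "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        f"{BIFROST_BASE_URL}/v1/images/generations",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


# Same endpoint and body shape; only the (assumed "provider/model") string changes.
MODELS = ("openai/gpt-image-1", "azure/my-image-deployment")  # second one is a placeholder
reqs = [image_request(m, "A watercolor fox") for m in MODELS]
```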

🏆 Scaling personalized sleep coaching with Maxim

Rise Science is a sleep management platform that helps people understand and address the root causes of low energy through personalized guidance grounded in sleep debt and circadian rhythm science.

When Rise Science launched their AI Expert coaching tier to deliver daily, tailored recommendations at scale, their development velocity slowed – LLM outputs required manual review, prompt updates depended on engineering support, and there was little visibility into production performance.

With Maxim, Rise Science centralized prompt development, testing, and evaluation. Product and design teams can now reuse sleep science knowledge across prompts, test outputs against real-world scenarios, and monitor production behavior – enabling faster iteration and confident scaling of high-quality, personalized coaching. (Customer story)

🎁 Upcoming releases

📞 Outbound call simulation for voice agents

In addition to inbound voice agent testing, you will soon be able to simulate outbound calls, where your agent initiates the call and Maxim’s agent acts as the receiver (a customer or prospect). This lets you evaluate your voice agent’s interactions end to end.

🧠 Knowledge nuggets

🤖 Build a reliable CS agent using AWS Bedrock and Maxim AI

Customer support is becoming a high-impact use case for AI agents, but how do you know your agent is ready before it ever talks to real customers?

This blog walks through building ShopBot, a production-ready customer support agent using AWS Bedrock, and evaluating its performance across 50–100 real-world scenarios with Maxim's simulation suite. Learn how to integrate core tools like order tracking and returns, deploy the agent as an HTTP endpoint, and uncover failure patterns through AI-powered simulations. Simulating edge cases and complex multi-turn scenarios helps you measure reliability before shipping any change to production.
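The core loop of such a simulation run fits in a few lines. It replays each scenario's user turns against the agent, judges the replies, and counts failures by category. Everything here is hypothetical. A keyword-matching stub stands in for the Bedrock-backed ShopBot, and simple checks stand in for evaluators. This is not Maxim's simulation API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List, Tuple

ORDERS = {"A100": "shipped"}  # hypothetical order-tracking backend


def shopbot(history: List[str]) -> str:
    """Stub agent: only looks at the latest user message."""
    last = history[-1]
    if "order" in last.lower():
        for word in last.split():
            order_id = word.strip("?.")
            if order_id in ORDERS:
                return f"Order {order_id} is {ORDERS[order_id]}."
        return "Could you share your order number?"
    if "return" in last.lower():
        return "I've started a return for you."
    return "Sorry, I can't help with that."


@dataclass
class Scenario:
    name: str
    category: str
    turns: List[str]                    # simulated user messages
    check: Callable[[List[str]], bool]  # judges the agent's replies


def run_suite(agent: Callable[[List[str]], str],
              scenarios: List[Scenario]) -> Tuple[float, Counter]:
    failures: Counter = Counter()
    passed = 0
    for s in scenarios:
        history: List[str] = []
        replies: List[str] = []
        for turn in s.turns:
            history.append(turn)
            reply = agent(history)
            history.append(reply)
            replies.append(reply)
        if s.check(replies):
            passed += 1
        else:
            failures[s.category] += 1
    return passed / len(scenarios), failures


SCENARIOS = [
    Scenario("track known order", "tracking", ["Where is order A100?"],
             lambda r: "shipped" in r[-1]),
    Scenario("order id in follow-up", "multi_turn", ["Where is my order?", "It's A100"],
             lambda r: "shipped" in r[-1]),
    Scenario("start a return", "returns", ["I want to return my shoes"],
             lambda r: "return" in r[-1].lower()),
]
rate, failures = run_suite(shopbot, SCENARIOS)
print(f"pass rate {rate:.2f}, failures {dict(failures)}")
```

The multi-turn scenario fails because the stub forgets that the user was asking about an order. Single-turn tests miss this kind of failure pattern.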