Top 5 Prompt Management Tools for 2026

TL;DR

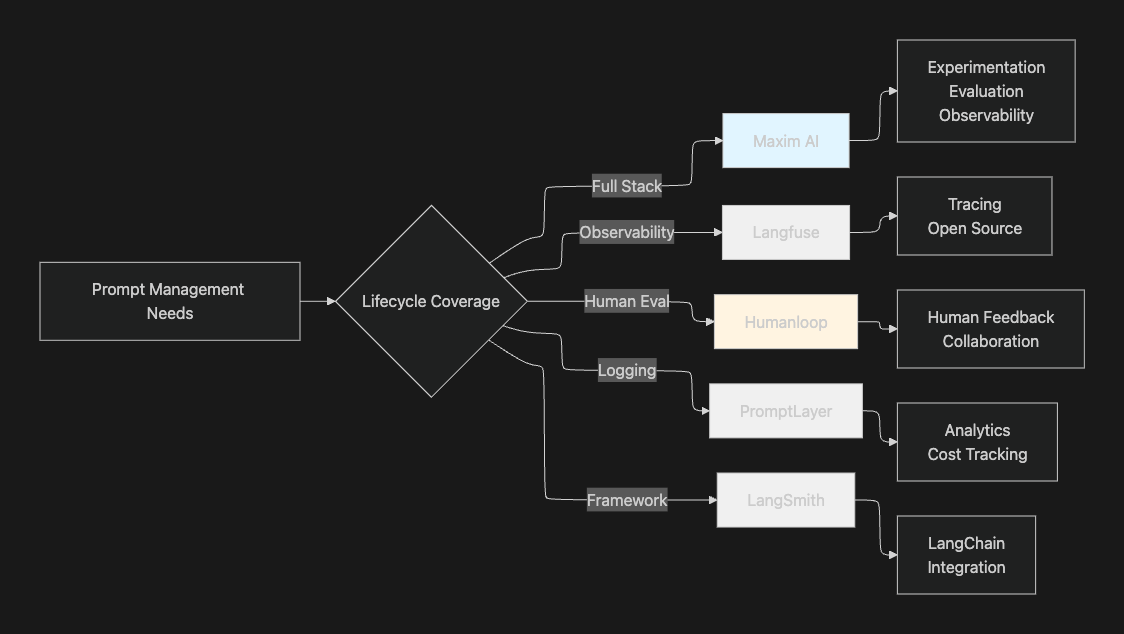

As we close out 2025 and look ahead to 2026, prompt management platforms have become essential for teams building AI applications. The top five platforms leading this space are Maxim AI (end-to-end experimentation, evaluation, and observability), Langfuse (open-source observability with versioning), Humanloop (human-centered evaluation workflows), PromptLayer (lightweight logging and analytics), and LangSmith (LangChain-native integration). Organizations should select platforms based on their lifecycle coverage needs, evaluation sophistication requirements, team collaboration patterns, and production monitoring demands.

Introduction

As 2025 draws to a close, AI teams preparing their roadmaps for 2026 face a transformed landscape. What began as simple experimentation with prompts has evolved into managing hundreds of production prompts across multiple models, providers, and deployment environments. According to recent industry analysis, organizations with mature prompt management practices ship AI features 3-4x faster than those relying on ad-hoc approaches.

The stakes have never been higher. Production AI systems now power customer support, sales enablement, content generation, and even mission-critical decision-making workflows. A single poorly tested prompt can degrade user experience, inflate costs, or create compliance risks. Meanwhile, complexity continues to grow as teams adopt multi-agent architectures, integrate with RAG systems, and orchestrate workflows across multiple AI providers.

This comprehensive guide examines the five prompt management platforms positioned to lead in 2026, analyzing their architectures, unique capabilities, and ideal use cases to help you make informed infrastructure decisions for the year ahead.

Understanding Prompt Management in 2026

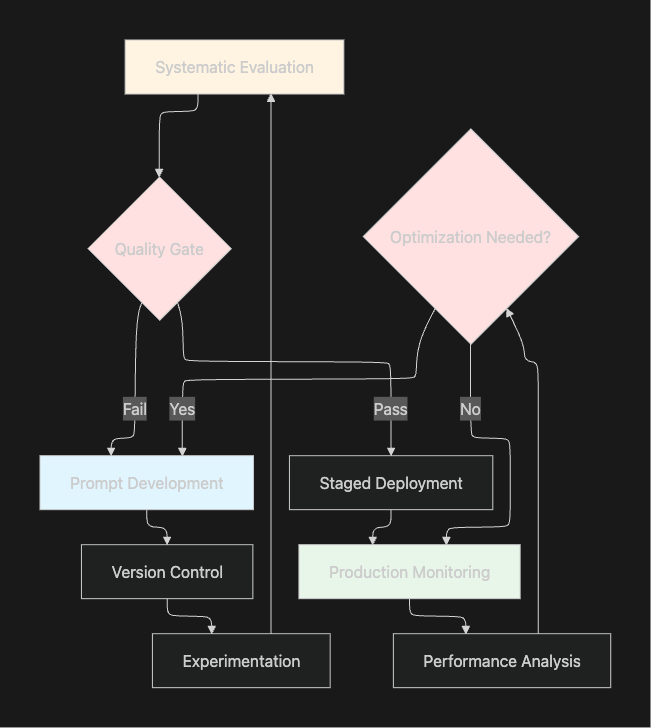

Prompt management encompasses the complete lifecycle of prompts from initial development through production monitoring and continuous optimization. Modern prompt management platforms must address several interconnected challenges that define enterprise AI development in 2026:

Version Control and Governance: Teams need Git-like capabilities for prompts with complete change tracking, branch management, and rollback mechanisms. Regulatory compliance increasingly demands audit trails showing exactly which prompt versions were active during specific time periods.

Experimentation at Scale: Development teams require environments where they can rapidly test prompt variations across different models, compare outputs side-by-side, and measure quality systematically before committing to production deployments.

Systematic Evaluation: AI evaluation frameworks have matured significantly, with platforms now supporting automated evaluators, LLM-as-a-judge approaches, and human review workflows that run at multiple granularity levels from individual spans to complete conversational sessions.

Cross-Functional Collaboration: Product managers, domain experts, and QA teams increasingly participate directly in prompt optimization. Modern platforms must enable non-technical stakeholders to contribute meaningfully without creating engineering bottlenecks.

Production Observability: Real-time monitoring connects prompt versions to quality metrics, cost data, latency patterns, and user feedback. Teams need visibility into how prompt changes impact production systems before issues cascade to users.

Research from Stanford's Center for Research on Foundation Models indicates that organizations implementing comprehensive prompt management see 40-60% faster iteration cycles and 35-50% fewer production incidents compared to teams managing prompts through code repositories or spreadsheets alone.

Top 5 Prompt Management Tools for 2026

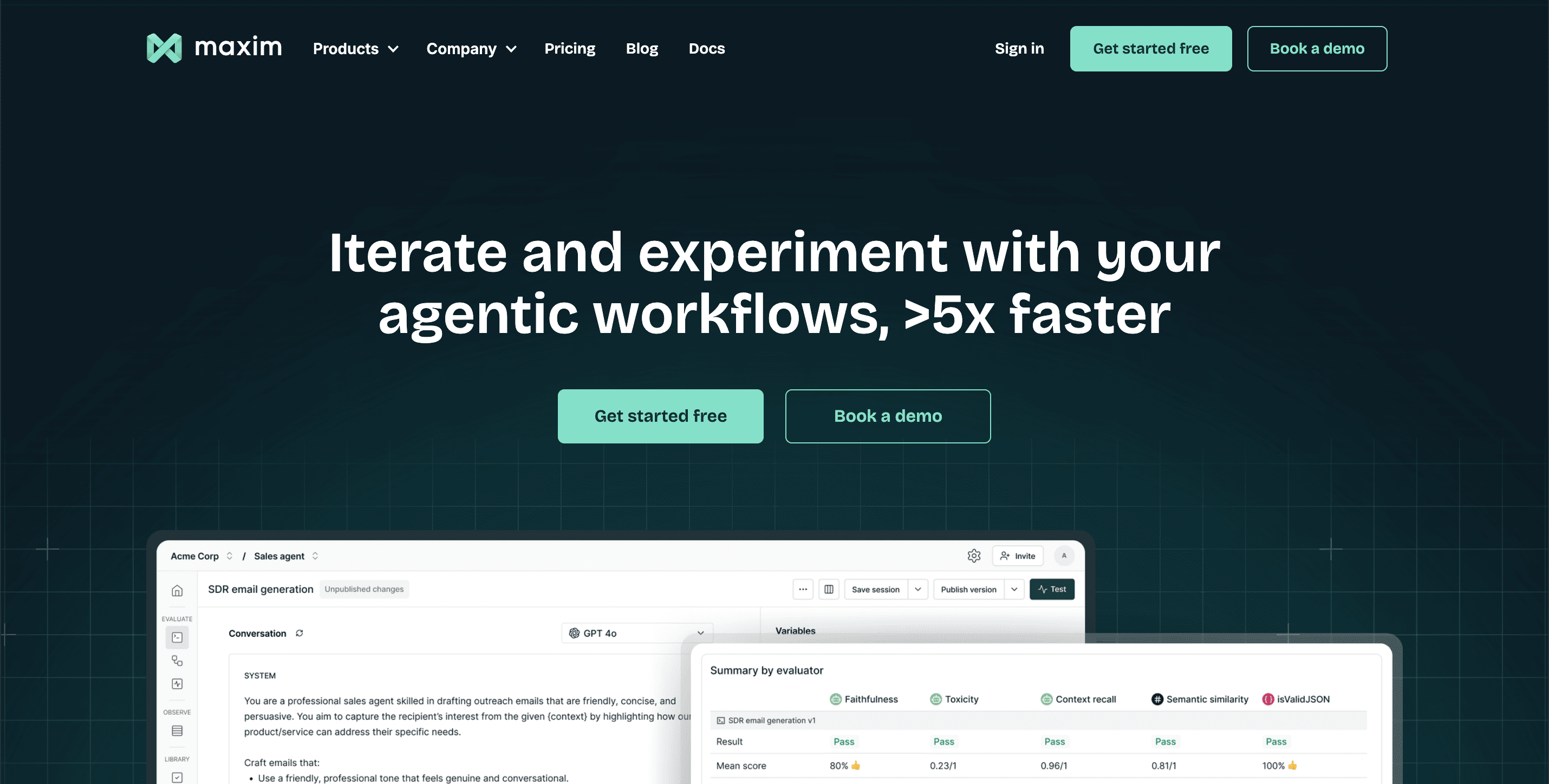

1. Maxim AI: Comprehensive AI Lifecycle Platform

Maxim AI delivers the industry's most integrated approach to prompt management, embedding it within a full-stack platform spanning experimentation, simulation, evaluation, and observability.

Experimentation Suite

Maxim's Playground++ enables advanced prompt engineering workflows that go beyond basic testing environments. Teams organize and version prompts directly through an intuitive UI, enabling rapid iteration without touching code. The platform supports deploying prompts with different strategies including A/B testing, canary releases, and gradual rollouts, all configurable through the interface.
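To make the deployment strategies above concrete, here is a minimal sketch of how an A/B test or canary release can route users to prompt versions. It is not Maxim's API; the function names, the salt, and the version labels are assumptions for illustration. Hashing the user ID keeps each user on the same version across requests.

```python
import hashlib


def bucket(user_id: str, salt: str = "prompt-rollout") -> float:
    """Map a user ID to a stable number in [0, 1) using a hash."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x100000000


def choose_version(user_id: str, rollout: dict[str, float]) -> str:
    """Pick a prompt version given traffic shares that sum to 1.0."""
    total = sum(rollout.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"traffic shares must sum to 1.0, got {total}")
    point = bucket(user_id)
    cumulative = 0.0
    for version, share in rollout.items():
        cumulative += share
        if point < cumulative:
            return version
    return version  # guard against floating-point rounding at the top edge


# Canary: 5% of users get the candidate prompt (hypothetical version labels).
canary = {"v1": 0.95, "v2-candidate": 0.05}
# A/B test: an even split between two variants.
ab_test = {"variant-a": 0.5, "variant-b": 0.5}

assignments = [choose_version(f"user-{i}", canary) for i in range(10_000)]
print(assignments.count("v2-candidate") / len(assignments))
```

A gradual rollout is the same mechanism with the candidate's share raised in steps, for example from 0.05 to 0.25 to 1.0, as quality metrics hold up.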

What distinguishes Maxim is the seamless integration with databases, RAG pipelines, and external tools. Engineers can connect data sources and test prompts against realistic contexts without building custom integration layers. The side-by-side comparison functionality enables teams to evaluate prompt performance across multiple models and parameters simultaneously, accelerating decision-making during optimization cycles.

Simulation and Testing

The simulation capabilities represent a breakthrough for teams building agentic systems. Maxim enables testing prompts across hundreds of scenarios and user personas, analyzing agent behavior at every conversational step. This proactive testing uncovers edge cases and failure modes before production exposure.

Teams can re-run simulations from any point to reproduce issues systematically, dramatically reducing debugging time for complex multi-agent interactions. This capability is particularly valuable when working with multi-agent AI systems where understanding conversational flow is essential for quality assurance.

Evaluation Framework

Maxim's unified evaluation framework combines automated and human evaluation in a flexible architecture. Teams can draw on an evaluator store of pre-built evaluators and also build custom evaluators tailored to specific application requirements, using deterministic logic, statistical methods, or LLM-as-a-judge approaches.

The flexibility to configure evaluations at session, trace, or span level enables nuanced quality assessment across different granularities. Product teams can define evaluation criteria through the UI without engineering dependencies, accelerating iteration cycles significantly. Human evaluation workflows provide the last-mile quality checks that automated systems cannot capture, ensuring alignment with human preferences.
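The idea of scoring at span, trace, and session level can be illustrated with a small sketch. The data classes, the `non_empty` evaluator, and the aggregation choices (mean per trace, minimum per session) are assumptions made for this example, not Maxim's data model.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass
class Span:
    name: str
    output: str


@dataclass
class Trace:
    spans: list[Span] = field(default_factory=list)


@dataclass
class Session:
    traces: list[Trace] = field(default_factory=list)


# A span-level evaluator maps one span to a score in [0, 1].
SpanEvaluator = Callable[[Span], float]


def non_empty(span: Span) -> float:
    """Deterministic check: did the step produce any output?"""
    return 1.0 if span.output.strip() else 0.0


def score_trace(trace: Trace, evaluator: SpanEvaluator) -> float:
    """Trace-level score: the average over the spans in the trace."""
    return mean(evaluator(s) for s in trace.spans)


def score_session(session: Session, evaluator: SpanEvaluator) -> float:
    """Session-level score: the weakest trace, so one bad turn stays visible."""
    return min(score_trace(t, evaluator) for t in session.traces)


session = Session(traces=[
    Trace(spans=[Span("retrieve", "3 documents"), Span("answer", "Refunds take 5 days.")]),
    Trace(spans=[Span("retrieve", ""), Span("answer", "I am not sure.")]),
])
print([non_empty(s) for s in session.traces[1].spans])  # span level
print(score_trace(session.traces[1], non_empty))        # trace level
print(score_session(session, non_empty))                # session level
```

An LLM-as-a-judge evaluator would fit the same `SpanEvaluator` signature, with the model call replacing the deterministic check.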

Production Observability

Maxim's observability suite provides real-time visibility into production prompt performance with distributed tracing for complex agent architectures. Teams track quality metrics, monitor costs, and receive alerts when issues emerge, minimizing user impact through rapid response.

The platform enables in-production quality measurement through automated evaluations based on custom rules, creating continuous feedback loops between production performance and development workflows.
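As a rough illustration of rule-based quality checks feeding alerts, the sketch below tracks a rolling pass rate over recent production outputs. The `QualityMonitor` class, the rule, and the thresholds are all hypothetical and do not reflect any platform's actual configuration.

```python
from collections import deque


def passes_rule(output: str) -> bool:
    """Hypothetical custom rule: replies must be non-empty and at most 400 characters."""
    return 0 < len(output.strip()) <= 400


class QualityMonitor:
    """Track a rolling pass rate for a rule and flag when it drops too low."""

    def __init__(self, window: int, min_pass_rate: float) -> None:
        self.results: deque[bool] = deque(maxlen=window)
        self.min_pass_rate = min_pass_rate

    def record(self, output: str) -> bool:
        """Apply the rule to one output; return True if an alert should fire."""
        self.results.append(passes_rule(output))
        window_full = len(self.results) == self.results.maxlen
        pass_rate = sum(self.results) / len(self.results)
        # Only alert once the window is full, to avoid noise on the first few requests.
        return window_full and pass_rate < self.min_pass_rate


monitor = QualityMonitor(window=5, min_pass_rate=0.8)
outputs = ["Your order shipped.", "", "Refund issued.", "", "Thanks!"]
alerts = [monitor.record(o) for o in outputs]
print(alerts)
```

Failing outputs collected this way are natural candidates for the evaluation datasets used during development, which is the feedback loop the paragraph above describes.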

Data Management

The integrated Data Engine addresses a critical gap in most prompt management platforms by providing comprehensive multi-modal dataset management. Teams curate datasets from production logs, collect human feedback, and enrich data through managed labeling workflows. This creates a virtuous cycle where production insights improve evaluation datasets, which in turn drive better prompts.

Ideal For: Organizations building production AI agents requiring comprehensive lifecycle management from experimentation through observability. Particularly strong for teams needing cross-functional collaboration between engineering and product organizations. Companies like Comm100 and Atomicwork demonstrate how Maxim's integrated approach accelerates production AI development.

2. Langfuse: Open-Source Observability with Prompt Versioning

Langfuse approaches prompt management through the lens of observability, providing an open-source platform that prioritizes transparency and developer control.

Core Capabilities

Langfuse's prompt management system integrates tightly with its tracing infrastructure, enabling teams to version prompts centrally while maintaining complete visibility into production usage patterns. The platform captures detailed metadata for every LLM interaction including model parameters, token counts, costs, and execution traces.

The open-source foundation provides significant advantages for organizations with strict data residency requirements or teams preferring self-hosted deployments. Community contributions drive continuous feature additions while giving teams complete transparency into platform behavior.

Observability Focus

Langfuse particularly excels at comprehensive tracing for LLM applications. The platform provides granular visibility into execution flows, making it straightforward to identify performance bottlenecks and debug production issues. Integration with frameworks like LangChain and LlamaIndex simplifies implementation for teams already invested in these ecosystems.

Evaluation Support

Langfuse supports running prompts against test datasets with result comparison across versions. While its built-in evaluation is less comprehensive than that of dedicated evaluation platforms, integration with external evaluation frameworks lets teams incorporate custom scoring functions for domain-specific metrics.
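The workflow of running prompt versions against a test dataset with a custom scoring function looks roughly like the sketch below. It does not use the Langfuse SDK; the dataset, prompt texts, `fake_model` stub, and `keyword_score` function are assumptions chosen so the example runs offline.

```python
from typing import Callable

# Hypothetical test dataset: questions paired with a keyword the answer should contain.
DATASET = [
    {"question": "What is the refund window?", "expected_keyword": "30 days"},
    {"question": "Which plan includes SSO?", "expected_keyword": "Enterprise"},
]

PROMPT_VERSIONS = {
    "v1": "Answer briefly: {question}",
    "v2": "Answer using only the policy document. Question: {question}",
}


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if "policy document" in prompt:
        return "Refunds are accepted within 30 days. SSO is on the Enterprise plan."
    return "Refunds are accepted within 30 days."


def keyword_score(output: str, expected_keyword: str) -> float:
    """Custom scoring function: 1.0 if the expected keyword appears, else 0.0."""
    return 1.0 if expected_keyword.lower() in output.lower() else 0.0


def run_dataset(template: str, model: Callable[[str], str]) -> float:
    """Run one prompt version over the dataset and return its mean score."""
    scores = [
        keyword_score(model(template.format(question=row["question"])), row["expected_keyword"])
        for row in DATASET
    ]
    return sum(scores) / len(scores)


results = {name: run_dataset(tpl, fake_model) for name, tpl in PROMPT_VERSIONS.items()}
print(results)
```

In a real setup the stub would be replaced by an actual model call, and the per-version scores would be stored next to the traces they came from.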

Ideal For: Engineering-focused teams prioritizing open-source solutions with full infrastructure control. Best suited for organizations needing strong observability capabilities with prompt versioning as a complementary feature rather than the primary focus.

3. Humanloop: Human-Centered Evaluation

Humanloop distinguishes itself through its emphasis on human-in-the-loop workflows for prompt optimization, making it straightforward to collect expert feedback and incorporate qualitative assessments into development cycles.

Evaluation Workflows

The platform's strength lies in evaluation infrastructure designed specifically for collecting and analyzing human feedback. Teams can set up evaluation tasks, distribute them to subject matter experts, and aggregate results for systematic prompt comparison. This human-centered approach complements automated metrics, addressing use cases where quality depends on nuanced judgment rather than purely computational evaluation.

Prompt Management

Humanloop provides solid version control and collaborative editing features with strong support for template variables and dynamic prompts. The platform tracks complete change history, enabling teams to understand prompt evolution and roll back when necessary.
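Version control with template variables and rollback can be sketched in a few lines. This is a generic illustration, not Humanloop's API: `PromptRecord`, `PromptVersion`, and the sample prompts are hypothetical. Rolling back by re-committing an old version keeps the change history append-only.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    author: str
    note: str


@dataclass
class PromptRecord:
    name: str
    history: list[PromptVersion] = field(default_factory=list)

    def commit(self, text: str, author: str, note: str) -> PromptVersion:
        """Append a new immutable version; earlier versions are never edited."""
        version = PromptVersion(len(self.history) + 1, text, author, note)
        self.history.append(version)
        return version

    @property
    def current(self) -> PromptVersion:
        return self.history[-1]

    def rollback(self, number: int, author: str) -> PromptVersion:
        """Roll back by re-committing an old version, preserving the full history."""
        if not 1 <= number <= len(self.history):
            raise ValueError(f"no such version: {number}")
        old = self.history[number - 1]
        return self.commit(old.text, author, f"rollback to v{number}")

    def render(self, **variables: str) -> str:
        """Fill template variables; a missing variable raises KeyError."""
        return Template(self.current.text).substitute(**variables)


record = PromptRecord("support-reply")
record.commit("Reply to $customer about $topic.", "dana", "initial draft")
record.commit("Reply politely to $customer about $topic in under 50 words.", "lee", "add tone and length")
record.rollback(1, "dana")
print(record.render(customer="Sam", topic="billing"))
print([(v.number, v.note) for v in record.history])
```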

Collaboration Features

The clean, intuitive interface facilitates cross-functional participation in prompt development. Product managers and domain experts can contribute meaningfully without requiring deep technical expertise, reducing dependencies on engineering resources.

Ideal For: Teams where prompt quality assessment relies heavily on expert human judgment. Particularly valuable for applications in domains like content creation, customer communication, or specialized professional services where automated metrics provide insufficient signal.

4. PromptLayer: Analytics-First Logging

PromptLayer takes a middleware approach to prompt management, focusing primarily on comprehensive logging and analytics for understanding existing prompt usage patterns.

Logging Infrastructure

The platform acts as a logging layer that captures all LLM requests with detailed metadata including prompts, completions, parameters, costs, and latency. This creates an audit trail enabling teams to analyze usage patterns and identify optimization opportunities.

Version Tracking

PromptLayer links logged requests to specific prompt versions, facilitating analysis of how changes impact real-world performance. Teams compare metrics across versions to validate that modifications actually improve outcomes.
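A logging layer that ties each request to a prompt version can be approximated as a thin wrapper around the model call. The sketch below is not PromptLayer's SDK; `logged_call`, `RequestLog`, the `echo_model` stub, and the word-count token estimate are assumptions for illustration.

```python
import time
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class RequestLog:
    prompt_version: str
    prompt: str
    completion: str
    latency_s: float
    total_tokens: int


LOGS: list[RequestLog] = []


def logged_call(model: Callable[[str], str], prompt_version: str, prompt: str) -> str:
    """Call the model and record the request alongside its prompt version."""
    start = time.perf_counter()
    completion = model(prompt)
    latency = time.perf_counter() - start
    # Whitespace word count is a crude stand-in for a real tokenizer.
    tokens = len(prompt.split()) + len(completion.split())
    LOGS.append(RequestLog(prompt_version, prompt, completion, latency, tokens))
    return completion


def tokens_by_version(logs: list[RequestLog]) -> dict[str, float]:
    """Average token usage per prompt version, a rough proxy for cost."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for entry in logs:
        grouped[entry.prompt_version].append(entry.total_tokens)
    return {version: sum(vals) / len(vals) for version, vals in grouped.items()}


def echo_model(prompt: str) -> str:
    """Hypothetical model stub so the example runs without network access."""
    return "ok " + prompt.upper()


logged_call(echo_model, "v1", "Summarize the following ticket in one long detailed paragraph")
logged_call(echo_model, "v2", "Summarize the ticket")
print(tokens_by_version(LOGS))
```

Because every log entry carries its prompt version, the same grouping works for latency, error rate, or any other metric a team wants to compare before and after a prompt change.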

Analytics Dashboard

The analytics capabilities provide insights into cost patterns, popular prompts, error rates, and performance trends. Tagging and filtering support enables segmented analysis by user cohort, feature area, or other relevant dimensions.

Ideal For: Teams needing comprehensive logging for existing AI applications where the primary goal is understanding usage patterns and costs rather than building new experimentation workflows. Works well as a complementary tool alongside platforms focused on prompt development.

5. LangSmith: LangChain-Native Integration

Built by the creators of LangChain, LangSmith provides deeply integrated prompt management for teams heavily invested in the LangChain ecosystem.

Framework Integration

The tight coupling with LangChain enables prompt management with full awareness of chains, agents, and other LangChain constructs. Teams manage prompts within the context of their broader application architecture rather than as isolated artifacts.

Evaluation Capabilities

LangSmith includes evaluation features supporting both automated evaluators and human review. Teams create evaluation datasets, run prompts systematically, and compare results across versions using built-in evaluation patterns designed for LangChain workflows.

Tracing Features

The platform's tracing capabilities provide visibility into complex LangChain applications, showing how prompts interact with retrieval systems, tools, and multi-step reasoning processes. This is particularly valuable when monitoring LLMs in production environments.

Ideal For: Organizations deeply committed to LangChain who want native integration between their framework and prompt management infrastructure. Teams should consider whether framework-specific tooling aligns with long-term architecture flexibility goals.

Comparative Analysis

Key Differentiators

Lifecycle Coverage: Maxim AI provides the most comprehensive approach, integrating experimentation, simulation, evaluation, and observability in a unified platform. This reduces tool sprawl and enables seamless workflows across development stages. Other platforms focus on specific segments, requiring teams to integrate multiple tools for complete coverage.

Evaluation Sophistication: The depth of evaluation frameworks varies dramatically across platforms. Maxim offers the most flexible architecture with support for custom evaluators at multiple granularity levels combined with sophisticated human review workflows. This flexibility proves critical when building complex agentic systems requiring nuanced quality assessment.

Cross-Functional Design: Maxim and Humanloop lead in enabling non-technical stakeholders to participate meaningfully in prompt optimization. Platforms oriented primarily toward engineering teams limit participation from product managers and domain experts, creating bottlenecks in organizations where these stakeholders drive quality requirements.

Production Monitoring: While Langfuse and PromptLayer provide strong observability capabilities, Maxim delivers the most comprehensive production monitoring with real-time quality checks, distributed tracing for multi-agent systems, and integrated alerting. This matters most for teams operating production AI systems where reliability is critical.

Selecting the Right Platform for 2026

Choosing the optimal prompt management platform depends on several organizational factors:

For Comprehensive AI Development: Teams building production AI agents benefit most from Maxim AI's full-stack approach covering experimentation, evaluation, and observability. The integrated platform eliminates friction between development stages and accelerates time-to-production through seamless workflows.

For Open-Source Requirements: Organizations with strict data governance requirements or those preferring self-hosted infrastructure should evaluate Langfuse. The open-source model provides complete transparency and control while maintaining strong observability capabilities.

For Human Evaluation Emphasis: Applications where quality depends heavily on expert judgment rather than automated metrics benefit from Humanloop's evaluation workflows. The platform makes it straightforward to collect and analyze qualitative feedback from domain experts.

For Usage Analytics: When the primary need is understanding existing prompt patterns and optimizing costs, PromptLayer's logging and analytics capabilities provide comprehensive visibility without requiring major application changes.

For Framework-Specific Needs: Teams deeply committed to LangChain benefit from LangSmith's native integration, though this creates framework coupling that may limit architectural flexibility over time.

Critical Decision Factors

- Team Structure: Do product managers and domain experts need to participate actively in prompt optimization, or will engineering handle everything?

- Production Requirements: How critical is real-time monitoring and AI reliability for your application?

- Evaluation Complexity: Do you need simple metrics or sophisticated multi-dimensional evaluation frameworks?

- Scale Considerations: How many prompts will you manage and how many team members require access?

- Integration Requirements: Does your architecture benefit from framework-specific tooling or require provider-agnostic solutions?

Conclusion

The five platforms examined here represent different philosophies for solving prompt lifecycle challenges, each with distinct strengths aligned to specific organizational needs.

Maxim AI stands out for teams requiring comprehensive lifecycle management with strong cross-functional collaboration features. Langfuse provides open-source flexibility with robust observability, Humanloop emphasizes human evaluation, PromptLayer delivers detailed analytics, and LangSmith offers deep framework integration for LangChain users.

The decision ultimately depends on your team's specific requirements, existing infrastructure, and production constraints. Most importantly, adopting any systematic approach to prompt management dramatically improves outcomes compared to ad-hoc methods using spreadsheets or scattered code repositories.

As AI applications grow in complexity and business importance throughout 2026, investing in proper prompt management infrastructure will increasingly differentiate organizations that ship reliable AI systems quickly from those struggling with quality issues and slow iteration cycles. The platforms discussed here provide proven approaches to these challenges, enabling teams to build trustworthy AI applications at scale.

Ready to explore how comprehensive prompt management can transform your AI development workflow in 2026? Consider booking a demo with Maxim to see how integrated experimentation, evaluation, and observability accelerate production AI development.