Top 5 LLM Observability Platforms in 2026

TL;DR

Production LLM applications demand comprehensive observability beyond traditional monitoring. This guide examines five leading platforms that track, debug, and optimize AI systems in production:

- Maxim AI provides end-to-end observability integrated with simulation, evaluation, and experimentation for cross-functional teams

- Langfuse offers open-source flexibility with detailed tracing and prompt management for teams requiring self-hosting

- Arize AI extends enterprise ML observability to LLMs with proven production-scale performance

- LangSmith delivers native LangChain integration for teams building within that ecosystem

- Helicone combines lightweight observability with AI gateway features for fast deployment

As LLM applications become mission-critical infrastructure, observability platforms have evolved from optional monitoring tools to essential components for maintaining reliability, controlling costs, and ensuring quality.

Why LLM Observability Matters

Traditional application monitoring falls short for LLM-powered systems. When your AI application serves thousands of users daily, tracking uptime and latency provides an incomplete picture. You need visibility into prompt quality, response accuracy, token usage, and user satisfaction across every interaction.

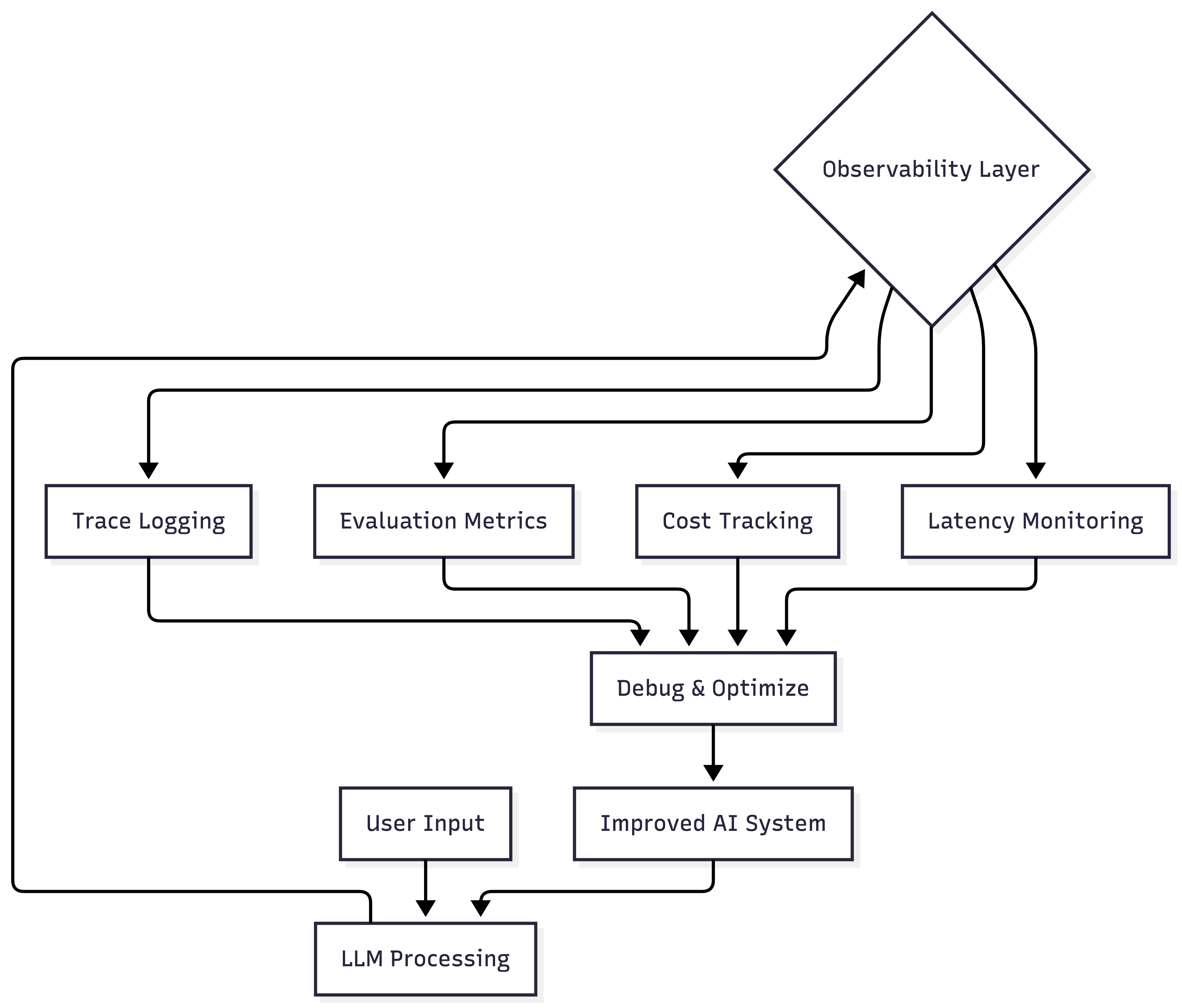

LLM observability captures the complete lifecycle of AI interactions: from initial user input through model inference, retrieval operations, tool calls, and final response delivery. This comprehensive view helps teams identify issues, optimize performance, and ensure production reliability.

The Unique LLM Observability Challenge

LLM systems present challenges distinct from traditional software:

Non-deterministic outputs mean the same prompt can yield different responses across runs, which complicates reproducibility and traditional testing. Teams cannot rely on the exact-match test suites that work for conventional software.

Silent failures occur when models generate plausible but incorrect responses. Unlike application crashes that trigger alerts, hallucinations and factual errors reach users without obvious signals. This makes proactive quality monitoring essential rather than reactive incident response.

Multi-step complexity in agentic systems requires tracking orchestration across tool calls, retrieval operations, and multi-turn conversations where failures cascade unpredictably. A single broken component can compromise entire workflows.

Cost variability stems from token usage patterns that shift based on prompt complexity, response length, and context windows. Without granular visibility, unexpected usage spikes can dramatically exceed budgets.
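
To make the cost point concrete, here is a minimal sketch of per-request cost attribution from token counts. The model names and per-million-token prices are hypothetical placeholders, not real provider pricing, and `cost_by_feature` is an illustrative helper rather than any platform's API.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per million tokens; real prices vary by provider and model.
PRICES_PER_MILLION = {
    "example-small": {"input": 0.50, "output": 1.50},
    "example-large": {"input": 5.00, "output": 15.00},
}


@dataclass
class Usage:
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(usage: Usage) -> float:
    """Cost of one request: input and output tokens are priced separately."""
    price = PRICES_PER_MILLION[usage.model]
    total = usage.input_tokens * price["input"] + usage.output_tokens * price["output"]
    return total / 1_000_000


def cost_by_feature(records):
    """Sum request costs per product feature from (feature, Usage) pairs."""
    totals = {}
    for feature, usage in records:
        totals[feature] = totals.get(feature, 0.0) + request_cost(usage)
    return totals
```

Grouping by feature (or by user segment) is what turns a raw invoice into something a team can act on: a spike shows up against the feature that caused it.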

Quality drift emerges gradually as user behavior evolves, edge cases surface, or model versions change, degrading performance without clear warning signs. Regular monitoring catches these trends before they impact user satisfaction.

1. Maxim AI: End-to-End Observability Platform

Maxim AI integrates observability within a comprehensive platform spanning simulation, evaluation, experimentation, and production monitoring. This approach serves teams shipping mission-critical AI agents requiring visibility across the entire development lifecycle.

Platform Overview

Maxim's observability suite delivers real-time visibility into production AI systems with debugging capabilities, automated quality checks, and cross-functional collaboration features. The platform connects observability data to evaluation workflows and dataset curation, creating feedback loops for continuous improvement.

Distributed tracing captures complete execution paths through multi-agent systems, showing tool calls, retrieval operations, and decision points with full context. Teams can track requests at session, trace, and span levels for granular debugging across complex workflows.

Key Features

Real-Time Monitoring provides instant visibility through live trace streaming, quality score tracking across automated evaluations, cost analysis by feature or user segment, latency metrics identifying bottlenecks, and error tracking with complete context. Product teams can monitor AI quality without engineering dependency.

Distributed Tracing follows requests across multi-step workflows with session-level analysis, span-level granularity, and error propagation visualization. This implementation transforms opaque behaviors into explainable execution paths.

Automated Evaluations catch regressions through 50+ pre-built evaluators and custom evaluation logic. Alert configuration notifies teams when quality scores, costs, or latency thresholds are breached.

Custom Dashboards enable no-code insight creation with custom filtering and visualization, democratizing observability across teams.

Data Curation transforms production logs into evaluation datasets with human-in-the-loop enrichment.

Integration & Deployment

Maxim provides robust SDKs in Python, TypeScript, Java, and Go for seamless integration across diverse tech stacks. The platform supports all major LLM providers (OpenAI, Anthropic, AWS Bedrock, Google Vertex) and frameworks (LangChain, LlamaIndex, CrewAI) through native integrations and OpenTelemetry compatibility.

For high-throughput deployments, Bifrost, Maxim's LLM gateway, adds ultra-low latency routing with automatic failover, load balancing, and semantic caching that reduces costs by up to 30% while maintaining reliability.
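
As a rough illustration of how semantic caching works in general, the sketch below returns a stored response when a new prompt is similar enough to an earlier one. It does not reflect Bifrost's implementation: `toy_embed` is a bag-of-words stand-in for a real embedding model, and the class name and the 0.8 threshold are assumptions chosen for the example.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, embed=toy_embed, threshold=0.8):
        self.embed = embed
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs

    def get(self, prompt):
        """Return the most similar cached response, or None below the threshold."""
        query = self.embed(prompt)
        best_score, best_response = 0.0, None
        for embedding, response in self.entries:
            score = cosine(query, embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt, response):
        self.entries.append((self.embed(prompt), response))


def answer(prompt, cache, call_model):
    """Serve from cache when possible; otherwise call the model and store the result."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached, True
    response = call_model(prompt)
    cache.put(prompt, response)
    return response, False
```

The threshold is the main design choice: set it too low and users receive answers to questions they did not ask, too high and the cache rarely hits.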

Best For

Maxim excels for cross-functional teams requiring collaboration between engineering, product, and QA on AI quality. The platform serves organizations needing end-to-end lifecycle coverage, connecting observability to evaluation and experimentation. Companies deploying complex multi-agent systems with session-level quality requirements benefit from comprehensive tracing capabilities. Teams prioritizing both powerful SDKs for engineers and intuitive UI for non-technical stakeholders find Maxim's balanced approach particularly effective.

Success stories from Comm100, Thoughtful, Mindtickle, and Atomicwork demonstrate how Maxim's observability drives production reliability while accelerating development cycles.

2. Langfuse: Open-Source Engineering Platform

Langfuse provides open-source observability with self-hosting capabilities. The platform's transparency, active community, and flexible deployment options appeal to teams requiring complete infrastructure control.

Key Features

Langfuse delivers detailed trace logging capturing LLM calls, retrieval operations, embeddings, and tool usage across production systems. Session tracking enables multi-turn conversation analysis with user-level attribution. Prompt management includes version control, A/B testing, and deployment workflows. Evaluation support covers LLM-as-a-judge approaches, user feedback collection, manual annotations, and custom metrics with comprehensive score analytics for comparing results across experiments.

Best For

Langfuse fits teams that require self-hosting for data residency or compliance, organizations building primarily on LangChain or LlamaIndex, development teams that prioritize open-source control and community-driven development, and projects where observability and tracing are the primary needs. For a direct comparison, see Maxim vs Langfuse.

3. Arize AI: Enterprise ML Observability

Arize AI extends proven ML observability capabilities into LLM monitoring, processing over 1 trillion inferences monthly. Arize Phoenix (open-source) and Arize AX (enterprise platform) serve different deployment scales and organizational needs.

Key Features

Built on OpenTelemetry standards, Arize provides vendor-agnostic, framework-independent observability:

- Performance tracing that identifies problematic predictions and feature-level issues

- Drift detection across prediction, data, and concept dimensions to catch quality degradation early

- Embeddings analysis for visualizing and clustering model behaviors

- Agent visibility for frameworks such as CrewAI, AutoGen, and LangGraph

- Enterprise infrastructure with proven performance at high production volumes

Best For

Arize fits enterprises extending existing ML infrastructure to LLM applications, teams that need proven production-scale performance, organizations that want unified observability across traditional ML and GenAI workloads, and companies that prioritize OpenTelemetry-based vendor neutrality. For a direct comparison, see Maxim vs Arize.

4. LangSmith: Native LangChain Integration

LangSmith, from the LangChain team, provides deep framework integration. Setup takes one line and requires only an environment variable. Framework-aware tracing shows chain execution with full context, a prompt playground supports rapid iteration and testing, dataset-based evaluation includes LLM-as-a-judge support, and thread views connect multi-step operations.

Best For

LangSmith fits teams building exclusively with LangChain or LangGraph who want framework-native observability without additional integration work, and organizations that prefer to stay within the LangChain ecosystem. For a direct comparison, see Maxim vs LangSmith.

5. Helicone: Lightweight Gateway

Helicone combines AI gateway capabilities with observability through a proxy-based architecture. The distributed system adds only 50-80 ms of latency and has processed over 2 billion LLM interactions.

Key Features

Helicone offers one-line integration via a simple base URL change, built-in semantic caching that reduces API costs by 20-30%, comprehensive cost tracking across providers and models, session tracing for multi-step workflows, and flexible self-hosting via Docker or Kubernetes.

Best For

Helicone fits teams that want lightweight observability with minimal engineering investment, built-in cost optimization through caching, and deployment flexibility, including self-hosting.

Platform Comparison

| Capability | Maxim AI | Langfuse | Arize AI | LangSmith | Helicone |
|---|---|---|---|---|---|
| Deployment | Cloud, Self-hosted | Cloud, Self-hosted | Cloud, Self-hosted | Cloud | Cloud, Self-hosted |
| Integration | SDK + OpenTelemetry | SDK-based | OpenTelemetry | Native LangChain | Proxy-based |
| Tracing Depth | Multi-agent, session | Detailed | Comprehensive | LangChain-optimized | Basic-moderate |
| Dashboards | Custom builder | Standard | Enterprise | Built-in | Standard |
| Evaluations | Multi-granularity | Flexible | Production-focused | Dataset-based | Limited |
| Cost Tracking | Detailed | Basic | Comprehensive | Basic | Advanced |
| Prompt Mgmt | Versioning + A/B | Full-featured | Manual | Integrated | Not included |
| Human-in-Loop | Native workflows | Annotation queues | Manual | Manual | Not included |
| Best For | End-to-end lifecycle | Open-source control | Enterprise ML/LLM | LangChain teams | Fast setup |

Observability Workflow

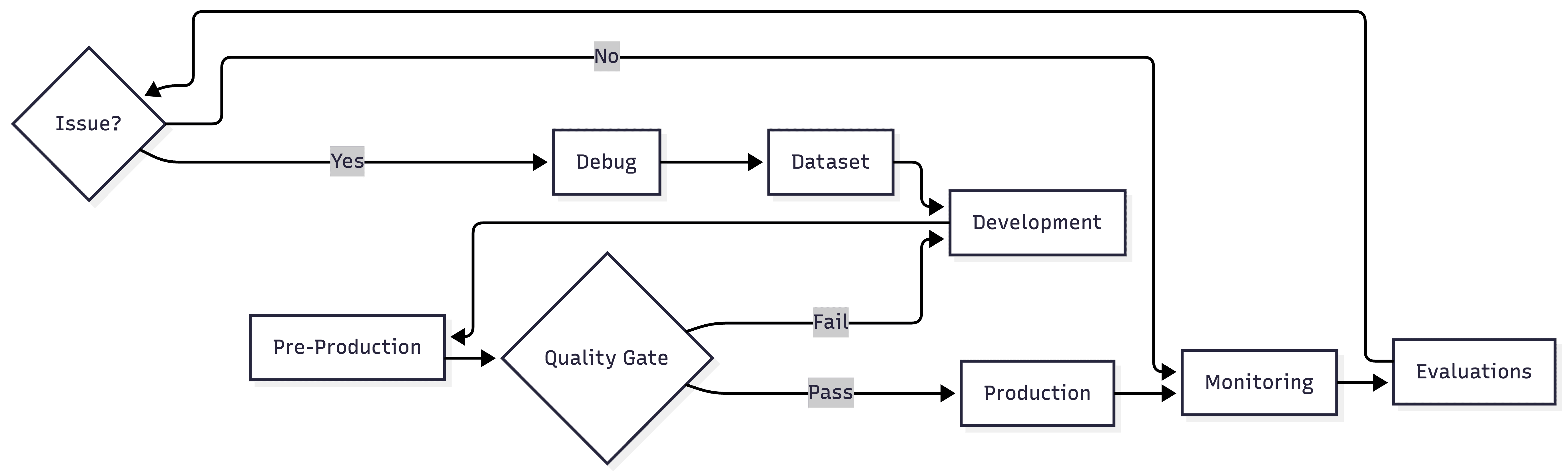

Effective observability connects development to production deployment, real-time monitoring, automated evaluation, systematic debugging, and data curation in a single cycle. Closing that cycle enables continuous improvement, because production insights feed directly into development decisions.

Platforms that close this loop, particularly Maxim's integrated approach, accelerate continuous improvement by transforming production issues into evaluation datasets that drive better prompts, improved workflows, and more reliable AI systems. The feedback mechanism ensures teams learn from production behavior systematically rather than reactively fixing individual issues as they arise.

Platform Selection Guide

Choose Maxim AI for:

- Comprehensive lifecycle coverage connecting observability, evaluation, and experimentation in one platform

- Cross-functional teams needing seamless engineering-product-QA collaboration

- Complex multi-agent systems with session-level and conversation-flow quality requirements

- Organizations wanting powerful SDKs combined with no-code capabilities for non-technical stakeholders

Schedule a demo to explore how Maxim's integrated workflows accelerate shipping reliable AI agents.

Choose Langfuse for:

- Self-hosting requirements for data residency or compliance

- Development primarily on LangChain or LlamaIndex frameworks

- Open-source control and community-driven development priorities

Choose Arize for:

- Existing ML infrastructure extending to LLM applications

- Proven enterprise-scale production performance requirements

- Unified observability across traditional ML and GenAI workloads

Choose LangSmith for:

- Exclusive LangChain or LangGraph development

- Native integration without additional setup overhead

- Preference for staying within the LangChain ecosystem

Choose Helicone for:

- Minimal engineering investment for fast deployment

- Cost optimization priorities through built-in caching

- Lightweight observability without evaluation complexity

Implementation Best Practices

Effective LLM observability requires strategic implementation across several dimensions.

Start with Core Metrics by tracking latency (p50, p95, p99 percentiles), token usage (input/output costs), error rates (failures and timeouts), and quality scores from automated evaluations.
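
As one way to compute these core metrics from raw request logs, the sketch below uses the nearest-rank percentile method. The record fields (`latency_ms`, `input_tokens`, `output_tokens`, `error`) are assumed names for illustration; real logs will use whatever schema your platform emits.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(requests):
    """Aggregate latency percentiles, token totals, and error rate for a batch of requests."""
    latencies = [r["latency_ms"] for r in requests]
    errors = sum(1 for r in requests if r["error"])
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
        "input_tokens": sum(r["input_tokens"] for r in requests),
        "output_tokens": sum(r["output_tokens"] for r in requests),
        "error_rate": errors / len(requests),
    }
```

Percentiles matter more than averages here because a handful of very slow requests can leave the mean looking healthy while the p99 shows what the slowest users actually experience.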

Implement Distributed Tracing using consistent trace IDs linking user input, retrieval operations, LLM calls, tool executions, and final responses.
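
The idea of a consistent trace ID can be sketched with a small in-memory tracer. This is a simplified illustration, not the OpenTelemetry API or any vendor SDK: the `Tracer` class, span fields, and placeholder retrieval, model, and tool steps are all assumptions, and the parent stack is not safe for concurrent requests.

```python
import time
import uuid
from contextlib import contextmanager


class Tracer:
    """Collects finished spans in memory; a real tracer would export them."""

    def __init__(self):
        self.spans = []
        self._stack = []  # span IDs of currently open spans (single-threaded only)

    @contextmanager
    def span(self, name, trace_id, **attributes):
        record = {
            "trace_id": trace_id,
            "span_id": uuid.uuid4().hex[:16],
            "parent_id": self._stack[-1] if self._stack else None,
            "name": name,
            "attributes": attributes,
            "status": "ok",
        }
        self._stack.append(record["span_id"])
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            # Record the failure on the span, then let it propagate to the parent.
            record["status"] = "error"
            record["attributes"]["error"] = repr(exc)
            raise
        finally:
            record["duration_ms"] = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(record)


def handle_request(tracer, user_input):
    """One trace ID links the request, retrieval, LLM call, and tool call."""
    trace_id = uuid.uuid4().hex
    with tracer.span("request", trace_id, user_input=user_input):
        with tracer.span("retrieval", trace_id, query=user_input) as retrieval:
            docs = ["doc-1", "doc-2"]  # placeholder for a real retrieval step
            retrieval["attributes"]["num_docs"] = len(docs)
        with tracer.span("llm_call", trace_id, model="example-model"):
            answer = f"answer based on {len(docs)} documents"  # placeholder for a model call
        with tracer.span("tool_call", trace_id, tool="example_tool"):
            pass  # placeholder for a tool execution
    return trace_id, answer
```

Because every span carries the same `trace_id` and a `parent_id`, a failure in any step can be traced back to the user input that triggered it.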

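Configure Meaningful Alerts with thresholds such as quality degradation (>10%), cost spikes (>20%), latency increases (>15%), and error rate changes (>5%). Treat these as starting points and refine them based on signal quality, adjusting any threshold that fires often without pointing to a real issue.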
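
One way to encode such thresholds is shown below. It assumes the percentages are relative changes against a baseline window, which is an interpretation rather than something stated above, and the metric names are hypothetical.

```python
# Metric -> (direction that counts as bad, relative change that triggers an alert).
# Values mirror the example thresholds; metric names are illustrative.
THRESHOLDS = {
    "quality_score": ("drop", 0.10),
    "cost_usd": ("rise", 0.20),
    "latency_p95_ms": ("rise", 0.15),
    "error_rate": ("rise", 0.05),
}


def relative_change(baseline, current):
    """Fractional change from baseline; a zero baseline with any increase counts as infinite."""
    if baseline == 0:
        return float("inf") if current > 0 else 0.0
    return (current - baseline) / baseline


def check_alerts(baseline, current, thresholds=THRESHOLDS):
    """Return (metric, change) pairs for every metric that moved past its threshold."""
    alerts = []
    for metric, (direction, limit) in thresholds.items():
        change = relative_change(baseline[metric], current[metric])
        breached = change < -limit if direction == "drop" else change > limit
        if breached:
            alerts.append((metric, round(change, 3)))
    return alerts
```

Keeping the thresholds in one table makes the later refinement step cheap: tuning an alert is a one-line change rather than a code edit scattered across checks.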

Build Feedback Loops where production issues become evaluation test cases, low-quality traces trigger reviews, edge cases expand coverage, and insights drive improvements.
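
A feedback loop of this kind can start as a simple filter over production traces. The sketch below assumes each trace is a dict with `trace_id`, `input`, `output`, and an optional `quality_score`; those field names and the 0.7 cutoff are illustrative, not a platform schema.

```python
def curate_eval_cases(traces, min_score=0.7):
    """Turn low-scoring production traces into evaluation cases awaiting human review."""
    cases = []
    seen_inputs = set()
    for trace in traces:
        score = trace.get("quality_score")
        if score is None or score >= min_score:
            continue  # unscored or acceptable traces are not candidates
        key = trace["input"].strip().lower()
        if key in seen_inputs:
            continue  # skip near-identical inputs to keep the dataset small
        seen_inputs.add(key)
        cases.append({
            "input": trace["input"],
            "observed_output": trace["output"],
            "expected_output": None,  # filled in by a reviewer
            "source_trace_id": trace["trace_id"],
            "needs_review": True,
        })
    return cases
```

Leaving `expected_output` empty is deliberate: the trace shows what went wrong, but a person still has to decide what the right answer should have been.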

Enable Cross-Functional Visibility through executive dashboards, product views by feature, engineering debugging tools, and QA validation interfaces.

Further Reading

External Resources

- OpenTelemetry for distributed tracing standards

- LangChain Documentation for framework integration

Conclusion

Production LLM applications require observability platforms that capture the complete AI interaction lifecycle. Maxim AI leads with comprehensive coverage, integrating observability, evaluation, experimentation, and simulation. The cross-functional collaboration features and agent tracing serve teams shipping mission-critical AI agents. Langfuse provides open-source flexibility for teams prioritizing infrastructure control. Arize extends enterprise ML observability with proven production-scale performance. LangSmith offers tight LangChain integration. Helicone delivers lightweight observability for fast deployment.

Schedule a demo to explore how Maxim's integrated observability accelerates shipping reliable AI agents.