Top 5 AI Prompt Management Tools of 2026

TL;DR

Managing prompts at scale has evolved from basic version tracking into comprehensive development infrastructure. This guide analyzes the five leading prompt management platforms:

- Maxim AI: Full-stack platform combining prompt management with evaluation, simulation, and production monitoring for end-to-end AI lifecycle coverage

- PromptLayer: Lightweight solution with Git-inspired version control, empowering domain experts through visual workflows

- Langfuse: Open-source engineering platform with comprehensive observability and zero-latency prompt caching

- Vellum: Enterprise-grade workspace with intuitive GUI for cross-team collaboration and rapid prototyping

- Humanloop: Environment-based deployment platform connecting versioning to evaluation infrastructure

Bottom Line: Choose Maxim AI for comprehensive lifecycle management, PromptLayer for team collaboration, Langfuse for open-source flexibility, Vellum for enterprise polish, and Humanloop for evaluation-driven development.

Why Prompt Management Matters in 2026

Prompt engineering has evolved into a systematic discipline that requires purpose-built infrastructure. Teams deploying AI applications face critical challenges: maintaining hundreds of prompt variations, ensuring deployment consistency, tracking performance metrics, and enabling cross-functional collaboration without bottlenecks.

The cost of poor prompt management compounds quickly. A single poorly managed prompt change can degrade user experience, increase operational costs by 30-50% through inefficient token usage, or introduce compliance risks that threaten entire deployments. Organizations without structured prompt management face three recurring problems:

Deployment Bottlenecks

When prompts live in application code, simple text changes require engineering involvement, code review, and full deployment cycles. A 2-minute update becomes a multi-day process. Teams report 5-10× slower iteration when prompts aren't managed separately from code.

Quality Blind Spots

Without systematic evaluation infrastructure, teams deploy prompt changes based on manual spot checks or intuition. Production issues surface through user complaints rather than proactive monitoring. Research shows that teams without evaluation frameworks experience 3× higher regression rates.

Collaboration Friction

Product managers and domain experts hold crucial knowledge about desired AI behavior, but lack direct control when prompts require engineering handoffs. The constant back-and-forth creates delays, misaligned expectations, and lost context.

Modern prompt management platforms address five essential needs:

Version Control and Deployment

Track every prompt iteration with full history, enable instant rollback, and deploy changes across environments without code modifications. Teams using structured version control report 60% faster deployment cycles.
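The following is a minimal sketch of what "track every iteration and roll back instantly" looks like as a data structure. It assumes an in-memory store and a hypothetical `PromptRegistry` class. It is not the API of any platform in this guide, and real systems also persist history and add access control.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry: every save appends an immutable version."""
    versions: dict[str, list[str]] = field(default_factory=dict)
    deployed: dict[str, int] = field(default_factory=dict)  # name -> deployed version (1-based)

    def save(self, name: str, template: str) -> int:
        history = self.versions.setdefault(name, [])
        history.append(template)
        return len(history)  # version numbers start at 1

    def deploy(self, name: str, version: int) -> None:
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"unknown version {version} for {name!r}")
        self.deployed[name] = version

    def rollback(self, name: str) -> int:
        # Simplification: step back to the version just before the deployed one.
        current = self.deployed[name]
        if current == 1:
            raise ValueError("no earlier version to roll back to")
        self.deploy(name, current - 1)
        return current - 1

    def get(self, name: str) -> str:
        # Application code calls this at runtime instead of hardcoding prompt text.
        return self.versions[name][self.deployed[name] - 1]
```

Because the application reads the prompt through `get()` at runtime, deploying or rolling back a version changes behavior without a code release. Real platforms typically let you restore any earlier version, not just the previous one.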

Evaluation Integration

Connect prompts directly to quality metrics, run automated tests against comprehensive datasets, and compare performance across variations systematically. Platforms like Maxim AI provide pre-built evaluators covering accuracy, relevance, safety, and custom business metrics.
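Systematic comparison comes down to scoring every prompt variant against the same dataset with the same evaluator. The sketch below assumes a hypothetical `model` callable that takes a rendered prompt and returns text. It uses exact-match scoring as a stand-in for richer evaluators and is not any vendor's evaluator API.

```python
from typing import Callable

# Hypothetical stand-in for a model call: rendered prompt in, response text out.
Model = Callable[[str], str]


def exact_match(output: str, expected: str) -> float:
    """Deliberately simple evaluator; real suites add relevance, safety, and so on."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def evaluate(template: str, dataset: list[dict], model: Model) -> float:
    """Render the template for each example, score the output, return the mean score."""
    scores = []
    for example in dataset:
        prompt = template.format(**example["inputs"])
        scores.append(exact_match(model(prompt), example["expected"]))
    return sum(scores) / len(scores)


def compare(variants: dict[str, str], dataset: list[dict], model: Model) -> dict[str, float]:
    """Score every variant on the same dataset so the results are directly comparable."""
    return {name: evaluate(template, dataset, model) for name, template in variants.items()}
```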

Cross-Functional Collaboration

Enable product managers, domain experts, and engineers to iterate together without constant handoffs. The best platforms provide accessible UIs alongside powerful APIs, ensuring both technical and non-technical stakeholders contribute effectively.

Production Observability

Monitor real-world performance through distributed tracing, identify quality regressions before they impact users, and gather production data for continuous improvement. Comprehensive observability transforms reactive firefighting into proactive optimization.
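One simple way to catch a regression before users report it is to compare a rolling average of production quality scores against a known baseline. This sketch assumes each logged response already carries a score in [0, 1] from some evaluator. The `QualityMonitor` name, window size, and tolerance are illustrative choices.

```python
from collections import deque


class QualityMonitor:
    """Hypothetical monitor: flag when the rolling mean of recent scores drops below baseline."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores: deque[float] = deque(maxlen=window)  # keeps only the last `window` scores

    def record(self, score: float) -> bool:
        """Add one production score; return True if the current window has regressed."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet to judge
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.baseline - self.tolerance
```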

Cost and Latency Optimization

Track token usage across prompts and models, implement intelligent caching to reduce redundant API calls, and optimize model selection based on actual performance data rather than assumptions.
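The sketch below combines both ideas from this paragraph: it skips the API call when an identical rendered prompt has been seen before, and it attributes token spend to each named prompt. It assumes a hypothetical `call_model` function that returns the response text plus input and output token counts. The per-1,000-token prices are placeholders. This is an exact-match cache. Semantic caching, which also reuses answers for similar prompts, requires embeddings and is not shown.

```python
import hashlib
from typing import Callable


class CachedClient:
    """Hypothetical wrapper: exact-match response cache plus per-prompt cost tracking."""

    def __init__(self, call_model: Callable[[str], tuple[str, int, int]],
                 input_price_per_1k: float, output_price_per_1k: float):
        # call_model is assumed to return (text, input_tokens, output_tokens).
        self.call_model = call_model
        self.input_price = input_price_per_1k
        self.output_price = output_price_per_1k
        self.cache: dict[str, str] = {}
        self.spend: dict[str, float] = {}  # prompt name -> accumulated cost
        self.hits = 0

    def complete(self, prompt_name: str, rendered: str) -> str:
        key = hashlib.sha256(rendered.encode()).hexdigest()
        if key in self.cache:
            self.hits += 1  # identical request: no API call, no added cost
            return self.cache[key]
        text, tokens_in, tokens_out = self.call_model(rendered)
        cost = tokens_in / 1000 * self.input_price + tokens_out / 1000 * self.output_price
        self.spend[prompt_name] = self.spend.get(prompt_name, 0.0) + cost
        self.cache[key] = text
        return text
```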

Platform Comparison at a Glance

| Platform | Best For | Key Strength | Deployment | Pricing |
|---|---|---|---|---|
| Maxim AI | End-to-end lifecycle management | Integrated simulation, evaluation & observability | Managed/Self-hosted | Seat-based + Enterprise |
| PromptLayer | Domain expert collaboration | Git-inspired version control with no-code UI | Managed | Freemium |
| Langfuse | Open-source advocates | Zero-latency caching, self-hosting | Managed/Self-hosted | Open-source + Cloud |
| Vellum | Enterprise rapid prototyping | Visual workflow builder | Managed | Freemium + Enterprise |
| Humanloop | Product & PM-led LLM teams | Continuous feedback & human-in-the-loop evaluation | Managed | Usage-based |

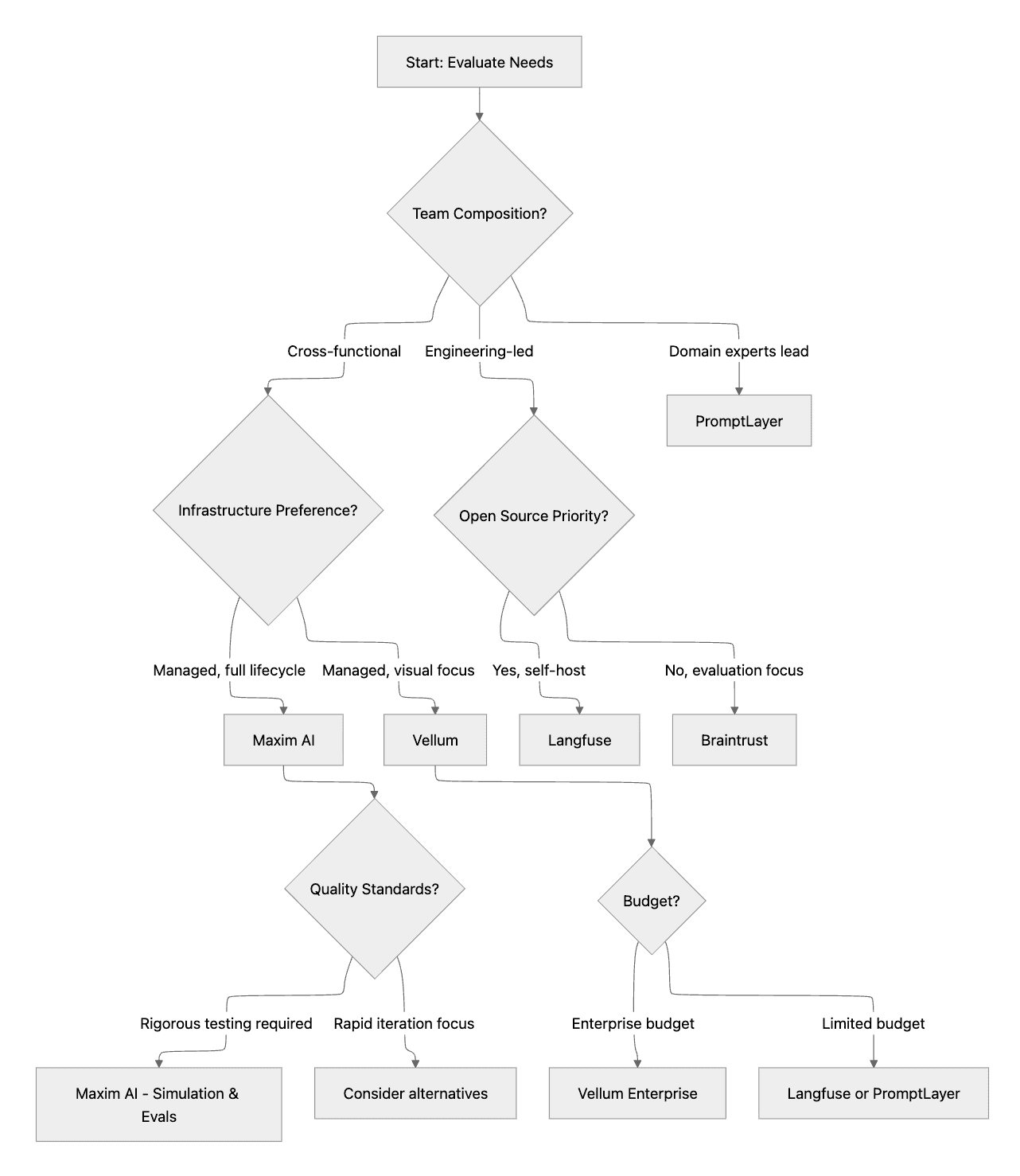

Choosing the Right Platform: Decision Flow

1. Maxim AI: End-to-End AI Lifecycle Platform

Platform Overview

Maxim AI delivers comprehensive prompt management integrated into the complete AI development lifecycle. The platform connects experimentation, simulation, evaluation, and observability into a unified system where prompts evolve through systematic quality improvement.

Maxim serves AI engineers, product managers, and QA teams building production-grade applications, recognizing that effective prompt management requires infrastructure for testing against realistic scenarios, measuring quality comprehensively, and monitoring production behavior.

Key Benefits

Unified Experimentation

Maxim's Playground++ enables rapid iteration with side-by-side comparisons across models and providers. Teams organize prompts from the UI, deploy with different strategies, and connect to databases and RAG pipelines seamlessly.

AI-Powered Simulation

The simulation engine tests prompts across hundreds of user personas and scenarios, evaluating conversational trajectories, task completion, and failure points at the agent level.
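Structurally, persona-and-scenario simulation is a grid: every simulated user type runs against every task, and the failures are collected. The sketch below assumes a hypothetical `run_conversation(persona, scenario)` hook that drives a simulated user against the agent and reports whether the task was completed. It shows only the shape of the loop, not Maxim's simulation engine or SDK.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable


@dataclass(frozen=True)
class Result:
    persona: str
    scenario: str
    completed: bool


def simulate(personas: list[str], scenarios: list[str],
             run_conversation: Callable[[str, str], bool]) -> list[Result]:
    """Run every persona against every scenario via the hypothetical conversation hook."""
    return [Result(p, s, run_conversation(p, s)) for p, s in product(personas, scenarios)]


def failure_points(results: list[Result]) -> list[tuple[str, str]]:
    """List the (persona, scenario) pairs where the agent did not complete the task."""
    return [(r.persona, r.scenario) for r in results if not r.completed]
```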

Comprehensive Evaluation

Access pre-built evaluators or create custom metrics. Run machine and human evaluations at the session, trace, or span level with flexible configuration.

Production Observability

Monitor real-time logs, track quality metrics automatically, and receive degradation alerts. Distributed tracing and custom dashboards enable continuous improvement through production data curation.

Cross-Functional Workflows

Performant SDKs in Python, TypeScript, Java, and Go combine with UI-first evaluation configuration, allowing product teams to iterate without engineering dependencies.

Best For

Teams building multi-agent systems and RAG implementations, quality-focused organizations requiring rigorous evaluation standards, and cross-functional teams collaborating extensively on AI development.

Proven Impact

Maxim reports that organizations deploy AI agents 5× faster using the platform. Case studies from Clinc, Thoughtful, and Atomicwork demonstrate measurable improvements in deployment velocity and quality.

Maxim's Bifrost AI gateway provides unified access to 12+ providers with automatic fallbacks, semantic caching, and comprehensive telemetry integration.
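Automatic fallback means trying providers in priority order and returning the first successful response. The following is a generic sketch under that assumption. The provider callables are hypothetical, and the code does not reflect Bifrost's configuration format or API.

```python
from typing import Callable


class AllProvidersFailed(Exception):
    pass


def complete_with_fallback(prompt: str,
                           providers: list[tuple[str, Callable[[str], str]]]) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, response) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real gateway would separate retryable errors (timeouts, 429s)
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

A production gateway would also retry transient errors, apply per-provider timeouts, and record which provider served each request for telemetry.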


2. PromptLayer: Git for Prompts

PromptLayer brings Git-inspired version control to prompt engineering, designed for domain specialists (healthcare professionals, legal experts, educators) to contribute directly to prompt optimization. The platform operates as middleware between applications and LLMs, capturing every interaction while providing no-code interfaces for iteration.
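The middleware model amounts to wrapping each model call so the request, response, and prompt metadata are recorded as they pass through. This is a minimal sketch of that pattern with a hypothetical `call_model` function and an in-memory log list. It is not PromptLayer's SDK.

```python
import time
from typing import Callable


def with_request_log(call_model: Callable[[str], str], log: list[dict],
                     prompt_name: str, version: int) -> Callable[[str], str]:
    """Wrap a model call so every request/response pair is recorded with prompt metadata."""

    def wrapped(prompt: str) -> str:
        start = time.perf_counter()
        response = call_model(prompt)  # the underlying LLM call is unchanged
        log.append({
            "prompt_name": prompt_name,
            "version": version,  # ties each logged call back to a prompt version
            "request": prompt,
            "response": response,
            "latency_s": time.perf_counter() - start,
        })
        return response

    return wrapped
```

Because the prompt name and version travel with every logged call, usage and quality can later be grouped per version.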

Key Features: Visual version control, collaborative workflows with approval processes, built-in A/B testing, usage monitoring, and provider-agnostic support.
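A/B testing prompts requires a stable traffic split, so a given user keeps seeing the same variant. A common technique, sketched here as an assumption rather than a description of PromptLayer's implementation, is to hash the experiment and user identifiers and map the result onto cumulative traffic weights.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: dict[str, float]) -> str:
    """Deterministically map a user to a prompt variant in proportion to traffic weights.

    Hashing (experiment, user_id) keeps each user on the same variant for the
    life of an experiment, while separate experiments split traffic independently.
    """
    if not variants:
        raise ValueError("at least one variant is required")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    point = int.from_bytes(digest[:6], "big") / 2**48  # roughly uniform value in [0, 1)
    total = sum(variants.values())
    cumulative = 0.0
    for name, weight in variants.items():
        cumulative += weight / total  # normalize so weights need not sum to exactly 1
        if point < cumulative:
            return name
    return name  # only reached if rounding leaves cumulative slightly below 1.0
```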

Best For: Teams where subject matter experts lead prompt quality, rapid content operations requiring high iteration velocity, and organizations prioritizing cross-disciplinary collaboration.

3. Langfuse: Open-Source Observability

Langfuse delivers comprehensive LLM observability through an open-source platform with self-hosting or managed deployment options. The platform emphasizes zero-latency prompt management via client-side caching and deep framework integration (LangChain, LlamaIndex, OpenAI SDK).
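Client-side caching keeps prompt retrieval off the request's critical path. After the first fetch, reads come from local memory, and an expired entry is still served while a refresh runs in the background (the stale-while-revalidate pattern). The sketch below illustrates that general pattern with a hypothetical `fetch` function and an injectable clock for testing. It is not Langfuse's SDK code, and the 60-second TTL default is arbitrary.

```python
import threading
import time
from typing import Callable, Optional


class PromptCache:
    """Hypothetical client-side prompt cache with stale-while-revalidate refresh."""

    def __init__(self, fetch: Callable[[str], str], ttl_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch = fetch  # assumed function that downloads the current template by name
        self.ttl_s = ttl_s
        self.clock = clock
        self.entries: dict[str, tuple[str, float]] = {}  # name -> (template, fetched_at)
        self.in_flight: set[str] = set()
        self.lock = threading.Lock()
        self.last_refresh: Optional[threading.Thread] = None

    def get(self, name: str) -> str:
        with self.lock:
            entry = self.entries.get(name)
        if entry is None:
            return self._refresh(name)  # first use: one blocking fetch is unavoidable
        template, fetched_at = entry
        if self.clock() - fetched_at > self.ttl_s:
            self._start_background_refresh(name)
        return template  # cached (possibly stale) value, returned without waiting

    def _refresh(self, name: str) -> str:
        try:
            template = self.fetch(name)
            with self.lock:
                self.entries[name] = (template, self.clock())
            return template
        finally:
            with self.lock:
                self.in_flight.discard(name)

    def _start_background_refresh(self, name: str) -> None:
        with self.lock:
            if name in self.in_flight:
                return  # avoid launching duplicate fetches for the same prompt
            self.in_flight.add(name)
        self.last_refresh = threading.Thread(target=self._refresh, args=(name,), daemon=True)
        self.last_refresh.start()
```

If a background refresh fails, the stale template keeps being served, which trades freshness for availability.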

Key Features: Zero-latency caching, detailed step-level tracing, dataset management, self-hosting flexibility, and Model Context Protocol support.

Best For: Open-source advocates prioritizing transparency and data sovereignty, LangChain-centric organizations, and budget-conscious teams with engineering resources for infrastructure management.

4. Vellum: Enterprise Development Platform

Vellum provides enterprise-grade low-code workflows for building and deploying LLM features. The visual development environment enables rapid prototyping while maintaining production rigor through workflow builders and agent development interfaces.

Key Features: Visual workflow builder, side-by-side comparisons, agent development through conversational interfaces, one-click deployment, and enterprise features (RBAC, isolated environments).

Best For: Product-led organizations where non-engineers drive AI features, rapid prototyping teams, and enterprises requiring governance and multi-environment isolation.

5. Humanloop: Product-Focused Prompt Management

Humanloop provides product-focused prompt management with emphasis on rapid iteration and visual editing for non-technical team members.

Key Features: Visual prompt editor that lets product teams modify and version prompts without code changes, rapid iteration through quick feedback loops for testing prompt modifications, environment support for managing versions across deployment stages, basic evaluation features for comparing versions, and a user-friendly interface accessible to non-technical stakeholders.
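Environment support usually means each deployment stage points at a specific prompt version, and a version moves forward only through explicit promotion. The following sketch assumes three stages named `development`, `staging`, and `production`. The class and stage names are hypothetical, not Humanloop's API.

```python
class Environments:
    """Hypothetical mapping of deployment stages to prompt versions, with ordered promotion."""

    STAGES = ("development", "staging", "production")

    def __init__(self):
        self.pinned: dict[str, int] = {}  # stage -> prompt version number

    def deploy(self, stage: str, version: int) -> None:
        if stage not in self.STAGES:
            raise ValueError(f"unknown stage {stage!r}")
        self.pinned[stage] = version

    def promote(self, from_stage: str) -> str:
        """Copy the version pinned in one stage to the next stage; return the target stage."""
        index = self.STAGES.index(from_stage)
        if index == len(self.STAGES) - 1:
            raise ValueError("production is the last stage")
        target = self.STAGES[index + 1]
        self.pinned[target] = self.pinned[from_stage]
        return target
```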

Selection Criteria: Finding Your Fit

Choosing the right platform depends on four critical factors that determine long-term success:

Team Structure and Workflows

Engineering-led teams building complex, code-heavy applications may prefer platforms with robust APIs, programmatic control, and deep framework integration, such as Langfuse or Humanloop. These platforms provide powerful SDKs and CLI tools that integrate smoothly into existing development workflows.

Cross-functional organizations where product managers, domain experts, and engineers collaborate extensively benefit from platforms offering accessible UIs alongside technical capabilities. Maxim AI's approach emphasizes this balance through performant SDKs in Python, TypeScript, Java, and Go, combined with intuitive interfaces that enable product teams to configure evaluations, analyze results, and iterate on prompts without code dependencies.

Teams where subject matter experts (legal professionals, healthcare specialists, educators) drive AI behavior should prioritize platforms like PromptLayer that eliminate engineering bottlenecks for routine prompt updates through visual version control interfaces.

Infrastructure and Deployment Needs

Self-hosted requirements often stem from data sovereignty regulations, security policies, or specific compliance frameworks. Teams in regulated industries (healthcare, finance, government) requiring complete data control should evaluate open-source options like Langfuse that provide proven self-hosting paths with Docker Compose or Kubernetes deployments.

Managed services significantly reduce operational overhead but require trusting third-party infrastructure. Platforms like Maxim AI, Vellum, and Humanloop offer enterprise SLAs, security and compliance credentials (such as SOC 2 attestation and HIPAA compliance), and dedicated support channels. The trade-off between operational simplicity and infrastructure control defines this decision.

Hybrid deployments are increasingly common. Organizations may use managed services for development and staging while self-hosting production instances. Ensure your chosen platform supports flexible deployment models without vendor lock-in.

Lifecycle Coverage Requirements

Teams requiring integrated experimentation, evaluation, and observability gain significant advantages from comprehensive platforms like Maxim AI that connect these capabilities natively. The alternative of stitching together best-of-breed tools for each need creates integration overhead, data synchronization challenges, and fragmented workflows.

Consider the full development lifecycle. During experimentation, can you rapidly test prompt variations across models and parameters? For evaluation, does the platform support both automated quality metrics and human review workflows? In production, do you get real-time alerting, distributed tracing, and the ability to curate datasets from live traffic for continuous improvement?
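Curating datasets from live traffic can be as simple as selecting the worst-scoring production traces, removing duplicates, and queueing them for human labeling. The sketch assumes each trace is a dictionary with `inputs`, `output`, and an evaluator `score` in [0, 1]; those field names are hypothetical. The `expected` field is left empty for a reviewer to fill in.

```python
def curate_dataset(traces: list[dict], max_score: float = 0.5, limit: int = 100) -> list[dict]:
    """Turn low-scoring production traces into candidate regression test cases."""
    failing = [t for t in traces if t["score"] <= max_score]
    failing.sort(key=lambda t: t["score"])  # worst cases first
    seen, dataset = set(), []
    for t in failing:
        key = tuple(sorted(t["inputs"].items()))  # assumes hashable input values
        if key in seen:
            continue  # keep only the worst trace for each distinct input
        seen.add(key)
        dataset.append({"inputs": t["inputs"], "observed_output": t["output"], "expected": None})
        if len(dataset) == limit:
            break
    return dataset
```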

Organizations with mature MLOps infrastructure may prefer specialized tools that integrate into existing workflows. Teams building from scratch benefit from end-to-end platforms that provide everything needed without complex integrations.

Quality Standards and Risk Tolerance

Applications where prompt quality directly impacts business outcomes (customer-facing chatbots, financial analysis, healthcare recommendations, legal document review) require platforms with comprehensive evaluation frameworks and simulation capabilities.

Maxim's simulation engine tests prompts across hundreds of scenarios before production deployment, evaluating conversational trajectories and task completion at the agent level. This proactive testing catches issues that simple unit tests miss, particularly in complex multi-agent systems where emergent behaviors create unexpected failures.

Teams prioritizing rapid iteration over exhaustive testing may accept higher risk in exchange for faster deployment cycles. This approach works for internal tools, experimental features, or applications with limited user impact. However, even these scenarios benefit from baseline quality gates and production monitoring.

The cost of quality failures varies dramatically across applications. A chatbot giving slightly inaccurate product recommendations differs fundamentally from a medical AI assistant providing treatment guidance. Align platform capabilities with risk tolerance and regulatory requirements.

Implementation Best Practices

Start with Clear Objectives

Define specific goals: reducing deployment time, improving quality consistency, or enabling product team independence. Clear objectives guide platform selection and implementation.

Establish Baseline Metrics

Measure current performance before implementing new infrastructure: deployment frequency, quality incident rate, and cross-team handoff delays. Baselines enable ROI demonstration.

Implement Incrementally

Begin with a single use case rather than an organization-wide rollout. Validate the platform addresses core needs before scaling.

Integrate with Workflows

Connect prompt management to existing CI/CD pipelines, project management systems, and monitoring infrastructure to reduce adoption friction.
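Connecting prompt management to CI/CD typically means a pipeline step that blocks a prompt change when evaluation scores regress. This is a minimal sketch of such a gate. It assumes the evaluation run has already produced per-metric scores for the current baseline and the candidate prompt, and the metric names and 0.02 tolerance are illustrative.

```python
def quality_gate(candidate: dict[str, float], baseline: dict[str, float],
                 max_drop: float = 0.02) -> list[str]:
    """Return the metrics on which the candidate fell more than max_drop below baseline."""
    return [
        metric
        for metric, base_score in baseline.items()
        # A metric missing from the candidate run is treated as a score of 0.0.
        if candidate.get(metric, 0.0) < base_score - max_drop
    ]


def run_gate(candidate: dict[str, float], baseline: dict[str, float],
             max_drop: float = 0.02) -> int:
    """Print a verdict and return a process exit code (0 = pass, 1 = fail)."""
    failures = quality_gate(candidate, baseline, max_drop)
    if failures:
        print(f"Quality gate failed on: {', '.join(failures)}")
        return 1
    print("Quality gate passed")
    return 0
```

In a pipeline, the script would end with `sys.exit(run_gate(candidate, baseline))` so that a non-zero exit code fails the job.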

Conclusion

Prompt management in 2026 requires purpose-built infrastructure extending beyond simple version control. The leading platforms (Maxim AI, PromptLayer, Langfuse, Vellum, and Humanloop) address distinct organizational needs through different architectural approaches.

Maxim AI delivers comprehensive lifecycle coverage by integrating experimentation, simulation, evaluation, and observability in one platform, which accelerates deployment while maintaining rigorous quality standards. Maxim reports that organizations deploy AI agents 5× faster through systematic optimization and comprehensive visibility.

PromptLayer enables accessible collaboration, Langfuse provides open-source flexibility, Vellum offers enterprise-grade visual development, and Humanloop emphasizes evaluation-driven workflows.

The right platform transforms prompt management from a coordination bottleneck into a competitive advantage, enabling teams to iterate faster, deploy confidently, and deliver consistent quality at scale.

Explore Maxim AI's approach or schedule a demo to see how end-to-end lifecycle coverage accelerates AI development.