The Importance of Human-in-the-Loop Feedback in AI Agent Development

TL;DR: Automated evaluations provide scale, but human feedback delivers the nuanced judgment needed for reliable AI agents. Production environments introduce non-determinism, model drift, and subtle failures that static tests miss. This article explains why human-in-the-loop feedback is essential, how to design scalable review workflows, and how Maxim AI's evaluation and observability capabilities operationalize human review across pre-release and production settings.

AI agents increasingly drive customer support, internal copilots, and workflow automation. Yet production environments introduce variability that static test suites cannot anticipate, including non-determinism, model changes, and subtle failure modes that degrade trust without rigorous oversight. Human-in-the-loop feedback provides structured, expert judgment that complements automated evaluations to ensure reliable, high-quality agent behavior over time. This article explains why human feedback is essential, how to design scalable workflows, and how teams use Maxim AI's evaluation and observability capabilities to operationalize human review in pre-release and production settings.

Why Human Feedback Matters Beyond Automated Evals

Automated evaluators scale well and are necessary to keep quality signals current, but they are not sufficient. Humans excel at judging context, appropriateness, and subtle qualities such as tone, clarity, and user satisfaction that resist simple quantification. For example, a customer support agent can meet accuracy requirements while still failing at empathy or resolution effectiveness. Research indicates that systems trained with human feedback align better with user preferences than those optimized solely on automated metrics, as highlighted by Stanford's Human-Centered AI Institute in its discussion of preference learning and alignment.

Maxim AI's evaluation approach recognizes this complementarity. The platform blends offline experiments, online evaluations, node-level analysis, and human review so teams can quantify quality, prevent regressions, and continuously align agents to human preference.

What Human-in-the-Loop Adds to AI Quality

Human reviewers provide granular, actionable insights that automated metrics cannot capture alone:

- Nuanced quality: Assess clarity, appropriateness, and resolution effectiveness alongside deterministic checks.

- Edge case validation: Interpret ambiguous interactions and boundary conditions where correctness is context-dependent.

- Trust alignment: Confirm behavior meets implicit social expectations, which is critical in conversational AI and copilots.

Maxim supports multiple evaluator classes and levels of granularity. Teams combine statistical and programmatic evaluators for deterministic scoring, use LLM-as-a-judge for qualitative dimensions such as clarity, toxicity, and faithfulness, and apply context metrics for RAG systems (recall, precision, relevance) through prompt retrieval testing. Evaluators can be attached at the session, trace, or node level, which makes it possible to isolate the step where a failure occurs.
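To make the RAG context metrics concrete, here is a minimal sketch of set-based retrieval precision and recall. It uses the standard information-retrieval definitions and is not a description of how Maxim computes these scores; the function name and the document IDs are illustrative assumptions.

```python
def retrieval_precision_recall(retrieved_ids, relevant_ids):
    """Return (precision, recall) for one query.

    precision = share of retrieved chunks that are relevant
    recall    = share of relevant chunks that were retrieved
    """
    retrieved = set(retrieved_ids)
    relevant = set(relevant_ids)
    hits = retrieved & relevant

    # Guard against empty sets so the ratios are always defined.
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical IDs: 4 chunks retrieved, 3 chunks labeled relevant, 2 overlap.
precision, recall = retrieval_precision_recall(
    ["d1", "d2", "d3", "d4"], ["d2", "d4", "d7"]
)
```

Here two of the four retrieved chunks are relevant (precision 0.5) and two of the three relevant chunks were found (recall of about 0.67). A human reviewer is still needed to decide which chunks count as relevant in the first place.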

Designing Effective Human-in-the-Loop Workflows

To maximize value and maintain throughput, teams should structure human review across the lifecycle.

Identify High-Value Review Scenarios

Prioritize human review where it delivers the most signal:

- Automated uncertainty: Route low-confidence outputs and cases where evaluators disagree to experts for validation.

- User-reported issues: Investigate complaints or negative feedback from production logs.

- Business-critical workflows: Sample and review high-stakes domains (healthcare, finance, legal) regardless of automated scores.

- Novel scenarios and drift: Validate interactions that deviate from historical patterns before expanding automation.

Maxim's agent observability features help teams systematically surface these scenarios through intelligent filtering, evaluator outcomes, and production traces.
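The four scenarios above can be expressed as a small routing rule. The sketch below is one possible shape, not Maxim's API: the `LogEntry` fields, the thresholds, and the reason labels are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

HIGH_STAKES_DOMAINS = {"healthcare", "finance", "legal"}


@dataclass
class LogEntry:
    """One production interaction plus its automated signals (hypothetical shape)."""
    entry_id: str
    confidence: float                      # auto-eval confidence in [0, 1]
    evaluator_verdicts: dict[str, bool] = field(default_factory=dict)
    user_flagged: bool = False             # complaint or negative feedback
    domain: str = "general"
    novelty: float = 0.0                   # distance from historical patterns, in [0, 1]


def review_reasons(entry, min_confidence=0.7, max_novelty=0.8):
    """Return the reasons an entry should go to a human; empty list means none."""
    reasons = []
    if entry.confidence < min_confidence:
        reasons.append("low_confidence")
    # More than one distinct verdict means the evaluators disagree.
    if len(set(entry.evaluator_verdicts.values())) > 1:
        reasons.append("evaluator_disagreement")
    if entry.user_flagged:
        reasons.append("user_reported")
    if entry.domain in HIGH_STAKES_DOMAINS:
        reasons.append("business_critical")
    if entry.novelty > max_novelty:
        reasons.append("novel_scenario")
    return reasons
```

Returning the list of reasons rather than a single boolean lets a review queue show reviewers why each entry was selected. In practice, business-critical entries would usually be sampled rather than reviewed exhaustively.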

Streamline the Review Interface and Process

Human throughput depends on context and ergonomics:

- Provide complete conversation history, reasoning traces, tool calls, and current auto-eval scores.

- Use clear evaluation schemas with defined dimensions (accuracy, completeness, tone, helpfulness, compliance).

- Offer progressive disclosure, shortcuts, and intelligent defaults to reduce cognitive load.

Maxim's dedicated workflows support these patterns. Configure human evaluators with instructions, scoring types, and pass criteria. For production logs, create queues and rules to triage entries that need manual review.
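As an illustration of an evaluation schema with instructions, a scoring type, and pass criteria, the following sketch defines a small rubric in plain Python. The class, field names, scales, and thresholds are hypothetical and only show the idea; they do not mirror Maxim's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HumanEvaluator:
    """A single review dimension (hypothetical shape, not a Maxim API)."""
    name: str
    instructions: str
    scale: tuple[int, int]   # inclusive (min, max) score range
    pass_threshold: int      # minimum score that counts as a pass

    def passed(self, score: int) -> bool:
        low, high = self.scale
        if not low <= score <= high:
            raise ValueError(f"{self.name}: score {score} outside {low}-{high}")
        return score >= self.pass_threshold


# Example rubric; the dimensions come from the list above, the numbers are assumptions.
RUBRIC = [
    HumanEvaluator("accuracy", "Is every factual claim correct?", (1, 5), 4),
    HumanEvaluator("tone", "Is the reply polite and empathetic?", (1, 5), 3),
    HumanEvaluator("compliance", "Does the reply follow policy?", (0, 1), 1),
]


def grade(scores: dict[str, int]) -> dict[str, bool]:
    """Apply each evaluator's pass criterion to one reviewer's scores."""
    return {ev.name: ev.passed(scores[ev.name]) for ev in RUBRIC}
```

Rejecting out-of-range scores at entry time keeps later aggregates trustworthy, since a mistyped score would otherwise skew averages silently.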

Ensure Feedback Drives Action

Human judgments should lead to measurable improvements:

- Create tickets with ownership for flagged issues and track resolution.

- Aggregate feedback to detect failure clusters that warrant prompt or workflow changes.

- Feed human-corrected outputs into datasets for fine-tuning or ground truth updates using dataset curation.

Maxim's evaluation reports present pass/fail, scores, costs, tokens, latency, variables, and trace context, enabling engineering and product teams to act quickly. Customize reports to keep them actionable.
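Detecting failure clusters can start with something as simple as counting failed annotations by where and why they failed. The sketch below assumes each annotation is a dictionary with `node`, `issue`, and `passed` keys; that record shape and the `min_count` cutoff are illustrative assumptions.

```python
from collections import Counter


def failure_clusters(annotations, min_count=2):
    """Group failed annotations by (node, issue) and keep the recurring ones.

    Returns a list of ((node, issue), count) pairs, most frequent first.
    """
    counts = Counter(
        (a["node"], a["issue"]) for a in annotations if not a["passed"]
    )
    return [(key, n) for key, n in counts.most_common() if n >= min_count]
```

A cluster such as `("retrieval", "stale_document")` appearing many times points at a workflow fix, whereas isolated one-off failures are better handled as individual tickets.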

Integrating Human and Automated Evaluations

Human and automated evaluators work best when unified within common pipelines:

- Automated triage: Use auto-eval scores and confidence to route ambiguous or low-scoring entries to humans. Configure auto-evaluation on production repositories.

- Validation loops: Regularly sample automated outcomes for human review to detect divergence and update evaluators or rules accordingly.

- Consensus gates: Require agreement between evaluators and multiple human reviewers for high-stakes decisions, then monitor post-deployment using alerts and scheduled checks.

For continuous monitoring, configure performance and quality alerts for latency, tokens, cost, and evaluator violations. Prevent drift with periodic scheduled runs and visualize outcomes across runs with comparisons and filters.
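A consensus gate like the one described above can be sketched as a single function. The parameter names, the minimum reviewer count, and the agreement ratio are assumptions for illustration; a real gate would be tuned to the risk level of the decision.

```python
def consensus_gate(auto_verdicts, human_verdicts, min_reviewers=2, min_agreement=1.0):
    """Approve only when automated evaluators and human reviewers both agree.

    auto_verdicts:  list of bool, one per automated evaluator
    human_verdicts: list of bool, one per human reviewer
    min_agreement:  required share of human approvals, in (0, 1]
    """
    # Missing signals never count as approval.
    if not auto_verdicts or len(human_verdicts) < min_reviewers:
        return False
    if not all(auto_verdicts):
        return False
    approval_share = sum(human_verdicts) / len(human_verdicts)
    return approval_share >= min_agreement
```

With the default `min_agreement=1.0` the gate requires unanimous human approval; lowering it to, say, `0.6` accepts a two-out-of-three majority.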

Production Observability and Distributed Tracing

Robust production observability is a prerequisite for effective human-in-the-loop systems. Instrument agents with distributed tracing to evaluate at session, trace, and node levels, and isolate failures quickly. With Maxim's agent observability, teams can:

- Track live agent behavior and AI monitoring signals in real time.

- Debug issues using agent tracing, LLM tracing, and node-level granularity.

- Curate datasets from production logs for ongoing AI evaluation and fine-tuning.

Explore the observability suite and the broader platform documentation.

Evaluator Choice: Quantitative, Qualitative, and Contextual

Use multiple evaluator types to capture different quality dimensions:

- Statistical/programmatic evaluators: Deterministic checks for tool call accuracy, schema conformance, or business rules.

- LLM-as-a-Judge: Structured, rubric-based qualitative scoring for faithfulness, clarity, and toxicity. For methodology and reliability practices, review the LLM-as-a-judge framework.

- RAG-specific evaluators: RAG evaluation via recall, precision, and relevance using prompt retrieval testing.

Attach evaluators programmatically or through the UI at the required granularity and pass the necessary variables (input, context, output). Review outcomes in unified reports, then iterate on prompts and workflows with data-driven prompt optimization.
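As an example of a deterministic, programmatic evaluator, the sketch below checks a tool call against an expected specification. The spec format (a tool name plus a mapping of parameter names to Python types) is a simplification invented for this example and is not a Maxim or JSON Schema format.

```python
def check_tool_call(call, spec):
    """Return a list of violations; an empty list means the call conforms.

    call: {"name": str, "arguments": dict}
    spec: {"name": str, "parameters": {param_name: expected_type}}
    """
    errors = []
    if call.get("name") != spec["name"]:
        errors.append(f"expected tool {spec['name']!r}, got {call.get('name')!r}")

    args = call.get("arguments", {})
    for param, expected_type in spec["parameters"].items():
        if param not in args:
            errors.append(f"missing argument: {param}")
        elif not isinstance(args[param], expected_type):
            errors.append(f"wrong type for {param}")

    # Sorted so the output is stable across runs.
    for extra in sorted(args.keys() - spec["parameters"].keys()):
        errors.append(f"unexpected argument: {extra}")
    return errors
```

Because the check is deterministic, it can run on every trace at negligible cost, leaving human reviewers to judge whether calling that tool was the right decision at all.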

Human Annotation in Practice: Configuration to Analysis

Maxim's human annotation capabilities cover end-to-end management of human feedback:

- Create human evaluators with clear instructions, scoring types, and pass criteria.

- Select those evaluators when configuring offline test runs for prompts or HTTP endpoints.

- Set up human evaluation through on-report annotation or email invitations to internal teams or SMEs, with configurable sampling and dataset fields; human annotation is also available on logs.

- Collect ratings either within the test report columns or via external rater dashboards. Multiple reviewers can contribute, and averages are computed for overall results.

- Analyze ratings with detailed feedback, rewritten outputs, filters per annotator, and options to add corrected outputs to datasets using dataset curation.

These workflows align human expertise with automated checks, ensuring teams maintain AI quality while scaling review efficiently.
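When several reviewers rate the same output, the overall result is an average per dimension. The following sketch shows that aggregation on hypothetical `(reviewer, dimension, score)` tuples; the tuple layout is an assumption, not the platform's export format.

```python
from statistics import mean


def average_ratings(ratings):
    """Average scores per dimension across reviewers.

    ratings: iterable of (reviewer, dimension, score) tuples
    """
    by_dimension = {}
    for _reviewer, dimension, score in ratings:
        by_dimension.setdefault(dimension, []).append(score)
    return {dim: mean(scores) for dim, scores in by_dimension.items()}
```

An average hides disagreement, so it is worth looking at per-annotator scores as well whenever two reviewers are far apart on the same item.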

Security and Safety Oversight

Production agents face adversarial risks that require continuous evaluation and human oversight to detect and mitigate. Integrate internal security reviews with evaluator violations and alerts. As threats evolve, scheduled runs and human review ensure guardrails remain effective.

Measurement: Quality, Efficiency, and Impact

Human-in-the-loop systems should be evaluated like any engineering system:

- Quality metrics: Inter-annotator agreement and correlation between human judgments and automated scores validate evaluation reliability.

- Operational metrics: Review throughput, time to feedback, and coverage rate ensure the process scales sustainably.

- Impact metrics: Quality improvement over time, reduced issue recurrence, and correlation with user satisfaction demonstrate real value.

Use Maxim's unified reports and dashboards to track these measures at the repository and run levels, then close the loop with agent observability and AI monitoring in production.
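Inter-annotator agreement is commonly measured with Cohen's kappa, which corrects raw agreement for the agreement two raters would reach by chance. The sketch below implements the standard two-rater formula in plain Python; the function name and label values are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters labeling the same items.

    kappa = (p_observed - p_expected) / (1 - p_expected)
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty set of items")

    n = len(labels_a)
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: probability both raters pick the same label independently.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if p_expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)
```

A kappa of 1.0 means perfect agreement and 0.0 means agreement no better than chance. Persistently low values usually indicate that the evaluation instructions need tightening rather than that reviewers are careless.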

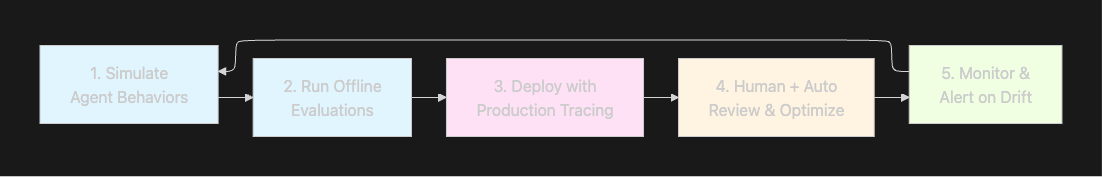

Putting It All Together: A Continuous Improvement Loop

The most effective teams treat human feedback as a first-class signal in a continuous improvement cycle:

- Simulate agent behaviors across scenarios and personas to stress test workflows through agent simulation.

- Run offline evaluations at session, trace, and node levels to quantify quality before deployment.

- Instrument agents in production with tracing and auto-eval rules from online evaluation, then route low-confidence or flagged cases into human queues via human annotation on logs.

- Aggregate human and machine results, optimize prompts using prompt optimization, and update datasets with corrected outputs through dataset curation.

- Monitor for regressions with alerts, rerun tests on a schedule with scheduled runs, and iterate continuously.

This loop unifies LLM evaluation, agent evaluation, agent tracing, and human oversight so teams can ship reliable agents faster.

Conclusion

Human-in-the-loop feedback is essential to developing trustworthy AI agents. Automation provides scale, but human expertise delivers the nuanced, context-aware judgments that maintain quality at the edge. Maxim AI's end-to-end platform operationalizes this balance with offline and online evaluations, node-level granularity, distributed tracing, human annotation queues, alerts, scheduled runs, and data curation so teams can measure, monitor, and improve continuously. To learn more about platform capabilities, explore the platform documentation and the article on LLM-as-a-judge in agentic applications.

Ready to evaluate, monitor, and optimize your agents end-to-end with human-in-the-loop feedback? Request a demo at https://getmaxim.ai/demo or sign up at https://app.getmaxim.ai/sign-up.
