✨ Agentic mode, Scheduled runs, New evals, and more

Feature spotlight

🤖 Agentic mode in the Prompt Playground

Prototype complete agent behavior, including automatic tool calling, directly within the playground. Here’s what you can do:

- Test multi-step flows: Experiment with and evaluate complex agentic interactions where the model automatically calls tools and executes steps until a final response is generated.

- Set limits and termination conditions: Control the maximum number of tool calls allowed and define a custom string to end the agentic sequence once it appears in the response.

- Mimic and monitor tool usage: Track which tools are being called during model generation.
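
To make the loop concrete, here is a minimal sketch of how an agentic sequence with a tool-call limit and a custom stop string can work. It is not Maxim's implementation. It assumes a hypothetical `call_model` function that returns either final text or a tool request. `ModelTurn`, `AgentResult`, `run_agent`, and the `get_weather` tool are illustrative names only.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ModelTurn:
    """One model response: final text, or a request to call a tool."""
    text: str = ""
    tool_name: Optional[str] = None
    tool_args: Dict[str, object] = field(default_factory=dict)


@dataclass
class AgentResult:
    final_text: str
    tool_calls: List[str]  # tool names, in the order they were called
    stop_reason: str       # "stop_string", "final_response", or "max_tool_calls"


def run_agent(
    call_model: Callable[[List[dict]], ModelTurn],  # hypothetical model client
    tools: Dict[str, Callable[..., str]],
    user_message: str,
    max_tool_calls: int = 5,
    stop_string: Optional[str] = None,
) -> AgentResult:
    messages: List[dict] = [{"role": "user", "content": user_message}]
    tool_calls: List[str] = []
    while True:
        turn = call_model(messages)
        # Custom termination condition: end as soon as the stop string appears.
        if stop_string and stop_string in turn.text:
            return AgentResult(turn.text, tool_calls, "stop_string")
        # No tool requested, so this is the final response.
        if turn.tool_name is None:
            return AgentResult(turn.text, tool_calls, "final_response")
        # Limit: never execute more than max_tool_calls tools.
        if len(tool_calls) >= max_tool_calls:
            return AgentResult(turn.text, tool_calls, "max_tool_calls")
        # Execute the tool automatically and feed its output back to the model.
        tool_calls.append(turn.tool_name)
        output = tools[turn.tool_name](**turn.tool_args)
        messages.append({"role": "tool", "name": turn.tool_name, "content": output})


# Usage with a scripted stand-in for the model.
def scripted_model(turns: List[ModelTurn]) -> Callable[[List[dict]], ModelTurn]:
    remaining = iter(turns)
    return lambda messages: next(remaining)


tools = {"get_weather": lambda city: f"Sunny in {city}"}
model = scripted_model([
    ModelTurn(tool_name="get_weather", tool_args={"city": "Paris"}),
    ModelTurn(text="It is sunny in Paris. DONE"),
])
result = run_agent(model, tools, "What's the weather in Paris?", stop_string="DONE")
print(result.stop_reason, result.tool_calls)  # stop_string ['get_weather']
```

The limit check runs before a tool is executed, so a model that keeps requesting tools stops after exactly `max_tool_calls` executions.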

📎 Attach files to traces and spans

Enhance the observability of your AI workflows by attaching local files (audio, images, text, etc.) or remote files (as URLs) directly to your traces and spans using the Maxim SDK. Attachments give you richer context for debugging, analysis, and auditing, such as the documents, audio recordings, or images that were used as input or context.

All attachments are stored and viewable within the Maxim platform alongside your trace data, allowing quick access to supporting information for faster issue resolution. Learn more.

🕣 Scheduled Runs

Run automated evaluations for your prompts, workflows, and prompt chains at regular intervals using Scheduled Runs. This removes the need to trigger test runs manually each time and ensures your AI agents and workflows are routinely evaluated for quality and performance.

Set up Scheduled Runs for your quality evaluations by following these steps.

Scheduled Runs for periodic quality evaluation

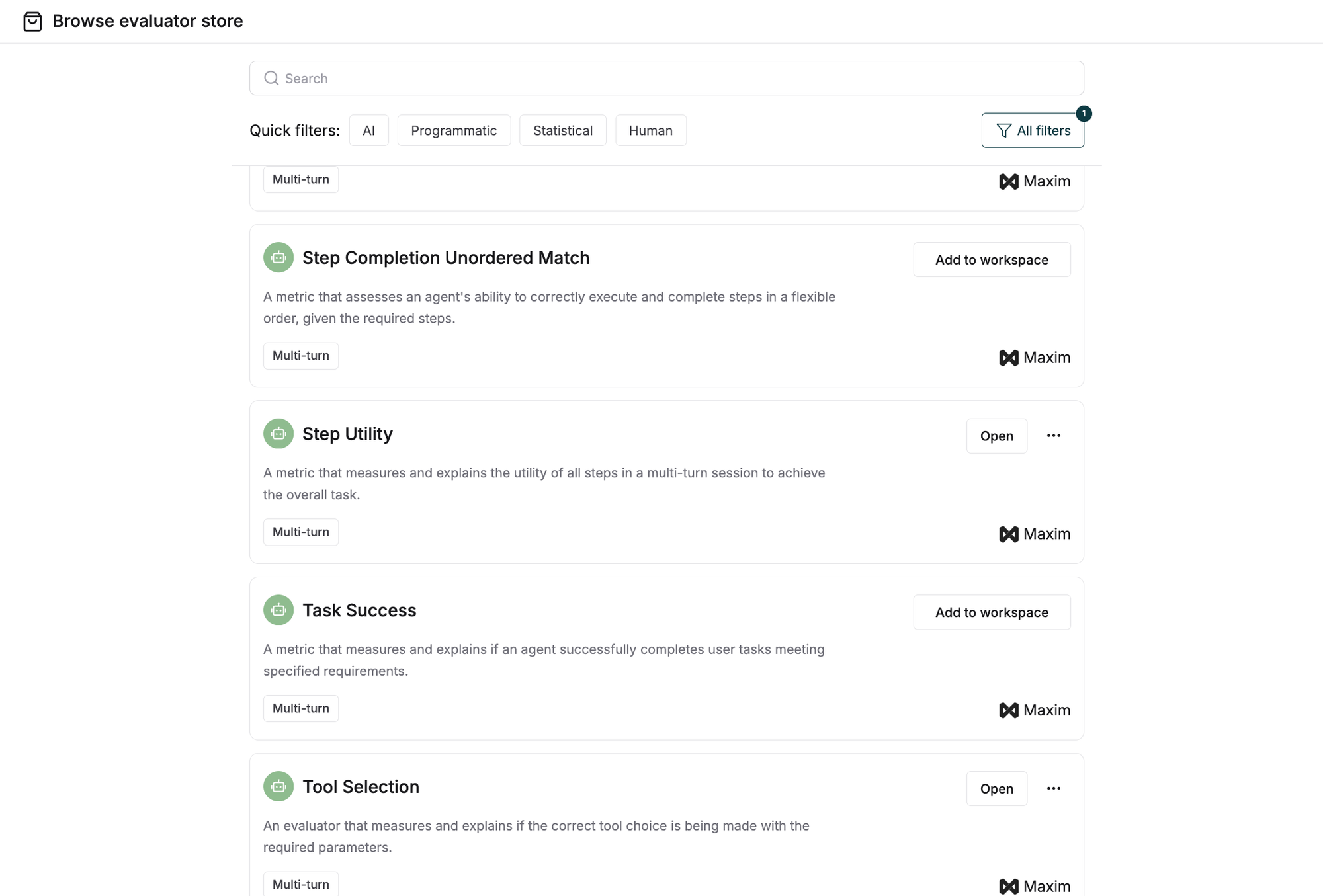

🧪 New evals for multi-turn and SQL-based use cases!

We’ve added a new set of evaluators (LLM-as-a-judge and statistical) to help you ship high-quality AI applications, with a strong focus on evals for agentic and NL-to-SQL workflows. Key highlights:

- Multi-turn evals: Evaluate whether an agent successfully completes user tasks, makes correct tool choices, executes and completes the required steps, and follows the correct trajectory to achieve user goals.

- SQL evals: Validate SQL syntax and adherence to the database schema, and evaluate the correctness of SQL queries generated from natural-language input.

- Tool call evals: Check whether the model selected the correct tool with the right parameters, and measure how accurately it called the expected tools.

You can add these evaluators to your workspaces from the Evaluator Store and start using them right away!
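
For intuition about what SQL and tool call evals check, here is a rough local approximation. It is a sketch, not the Evaluator Store implementations. `sql_is_valid` uses Python's built-in `sqlite3` to compile a query with `EXPLAIN` against an empty in-memory copy of the schema, so syntax errors and unknown tables or columns surface as errors (SQLite's dialect may differ from your production database). `tool_call_accuracy` is one hypothetical scoring rule that compares expected and actual tool calls position by position.

```python
import sqlite3
from typing import Dict, List, Tuple


def sql_is_valid(query: str, schema_ddl: str) -> Tuple[bool, str]:
    """Check syntax and schema adherence by compiling the query in SQLite.

    EXPLAIN makes SQLite prepare the statement without running it, so syntax
    errors and unknown tables or columns are reported as sqlite3 errors.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(schema_ddl)  # empty tables, schema only
        conn.execute("EXPLAIN " + query)
        return True, "ok"
    except (sqlite3.Error, sqlite3.Warning) as exc:
        return False, str(exc)
    finally:
        conn.close()


def tool_call_accuracy(expected: List[Dict], actual: List[Dict]) -> float:
    """Hypothetical score: fraction of positions where name and args both match.

    Dividing by the longer list penalizes both missing and extra tool calls.
    """
    if not expected and not actual:
        return 1.0
    matches = sum(
        1
        for exp, act in zip(expected, actual)
        if exp["name"] == act["name"] and exp.get("args", {}) == act.get("args", {})
    )
    return matches / max(len(expected), len(actual))


schema = "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, country TEXT);"
print(sql_is_valid("SELECT name FROM users WHERE country = 'US'", schema))  # (True, 'ok')
print(sql_is_valid("SELECT email FROM users", schema))
```

A compile check like this only covers syntax and schema adherence; judging whether a valid query actually answers the user's question is where an LLM-as-a-judge evaluator comes in.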

🔭 Public API for OpenTelemetry trace ingestion

You can now send your OpenTelemetry GenAI traces directly to Maxim with a single-line code change, unlocking comprehensive LLM observability. Maxim supports the OpenTelemetry semantic conventions for generative AI systems, so you can instrument your LLM workflows with minimal setup.
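
To show what a GenAI trace carries, the sketch below builds a single chat span in OTLP/JSON form using only the standard library. Attribute names such as `gen_ai.operation.name`, `gen_ai.request.model`, and `gen_ai.usage.input_tokens` follow the OpenTelemetry GenAI semantic conventions. Those conventions are still evolving, so some names may differ in newer versions. The service name, scope name, provider, and model values are placeholders. In practice you would more likely keep your existing OpenTelemetry SDK setup and point its OTLP exporter at Maxim's ingestion endpoint. That endpoint and its auth headers are not covered here.

```python
import json
import secrets
import time
from typing import Dict, Union

AttrValue = Union[str, int, bool]


def otlp_attr(key: str, value: AttrValue) -> Dict:
    """Encode one attribute as an OTLP/JSON KeyValue."""
    if isinstance(value, bool):  # check bool before int (bool subclasses int)
        return {"key": key, "value": {"boolValue": value}}
    if isinstance(value, int):
        # 64-bit integers are encoded as decimal strings in OTLP/JSON
        return {"key": key, "value": {"intValue": str(value)}}
    return {"key": key, "value": {"stringValue": str(value)}}


def genai_chat_span(system: str, model: str, input_tokens: int, output_tokens: int,
                    start_ns: int, end_ns: int, service_name: str = "my-llm-app") -> Dict:
    """Build an OTLP/JSON payload holding one GenAI chat span (placeholder values)."""
    attributes = {
        "gen_ai.operation.name": "chat",
        "gen_ai.system": system,
        "gen_ai.request.model": model,
        "gen_ai.usage.input_tokens": input_tokens,
        "gen_ai.usage.output_tokens": output_tokens,
    }
    span = {
        "traceId": secrets.token_hex(16),  # 16 random bytes -> 32 hex chars
        "spanId": secrets.token_hex(8),    # 8 random bytes -> 16 hex chars
        "name": f"chat {model}",           # "{operation} {model}" naming convention
        "kind": 3,                         # SPAN_KIND_CLIENT
        "startTimeUnixNano": str(start_ns),
        "endTimeUnixNano": str(end_ns),
        "attributes": [otlp_attr(k, v) for k, v in attributes.items()],
    }
    return {
        "resourceSpans": [{
            "resource": {"attributes": [otlp_attr("service.name", service_name)]},
            "scopeSpans": [{"scope": {"name": "genai-sketch"}, "spans": [span]}],
        }]
    }


start = time.time_ns()
end = start + 850_000_000  # pretend the model call took 850 ms
payload = genai_chat_span("openai", "gpt-4o", input_tokens=120, output_tokens=45,
                          start_ns=start, end_ns=end)
print(json.dumps(payload, indent=2))
```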

🧠 New model support: Claude 4, Gemma 3, and Qwen3

Claude 4 (Anthropic’s latest models), Gemma 3 (Google’s newest open-model series), and Qwen3 (Alibaba’s latest open-source model family) are now available on Maxim. These models bring enhanced reasoning, multilingual, and multimodal capabilities to your experimentation and evaluation workflows.

Add Claude 4 models to your workspace via the Anthropic provider, and Qwen3 and Gemma 3 via the Ollama provider.

🚀 Added model provider support: Fireworks AI & Mistral

You can now connect and run popular models like DeepSeek, Llama, and Qwen using the Fireworks AI provider within Maxim. Integrate your model via the serverless option (best for a fast, no-ops setup) or the deployment option (great for custom model control).

Additionally, Mistral’s state-of-the-art models, including Ministral 8B, Mistral Large, and Pixtral Large, are now available in Maxim via the Mistral provider.

Customer story

🏦 Elevating conversational banking: Clinc x Maxim AI

Clinc is a conversational AI platform built for the banking industry, enabling financial institutions to manage account inquiries, execute transactions, and interact with documents through intelligent, context-aware virtual assistants.

As Clinc expanded their platform with RAG for document-based Q&A and enhanced NLU to better interpret user conversations, they faced familiar challenges: public benchmarks didn’t match production needs, initial eval datasets lacked real-world depth, and adopting emerging models required a systematic, modular evaluation framework.

Clinc partnered with Maxim to deliver high-quality conversational AI to their banking clients. With Maxim, the team builds versioned datasets, benchmarks LLMs, and rapidly iterates on prompts, all without writing custom scripts. Dashboards and shared workspaces enabled faster insights and collaboration, turning 40+ hours of work into minutes for Clinc. Read the full customer story.

Upcoming releases

📊 Revamped log dashboard

We’re making the log dashboard more customizable than ever. Add dynamic charts to track and visualize the metrics that matter most to you, such as evaluation scores, trace counts, and more. You can start debugging directly from a chart and drill down into the underlying logs for faster root cause analysis and resolution.

You can also configure routine emails for daily, weekly, or monthly overviews to stay on top of your application's performance trends.

Knowledge nuggets

🤖 Agent2Agent Protocol (A2A)

The Agent2Agent (A2A) protocol provides a standard way for AI agents to talk to each other across different platforms. Unlike the Model Context Protocol (MCP), which focuses on how AI agents access tools and resources, A2A specifically addresses how independent AI agents communicate with each other, enabling them to discover, verify, and collaborate with other specialized agents.

For example, A2A allows a customer support agent to discover and work with a data retrieval agent or a project management agent, coordinating tasks across multiple specialized systems and breaking down traditional operational barriers. Learn more in our blog.
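
Discovery in A2A is driven by Agent Cards, which are JSON documents where agents describe themselves and their skills. The sketch below models a heavily simplified subset of a card (`name`, `url`, and `skills` with `tags`). It shows how a support agent might pick collaborators by skill tag. The agents, URLs, and tags are hypothetical. The sketch leaves out fetching cards over HTTP and sending tasks over the protocol.

```python
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AgentCard:
    """A simplified subset of an A2A Agent Card; real cards carry more fields."""
    name: str
    url: str
    skills: List[Dict]  # each skill: {"id", "name", "description", "tags"}

    @classmethod
    def from_json(cls, raw: str) -> "AgentCard":
        data = json.loads(raw)
        return cls(name=data["name"], url=data["url"], skills=data.get("skills", []))


def find_agents_with_tag(cards: List[AgentCard], tag: str) -> List[AgentCard]:
    """Return agents that advertise at least one skill carrying `tag`."""
    return [card for card in cards if any(tag in s.get("tags", []) for s in card.skills)]


# Hypothetical cards a support agent has already fetched from other agents.
raw_cards = [
    json.dumps({
        "name": "Data Retrieval Agent",
        "url": "https://data-agent.example.com/a2a",
        "skills": [{"id": "lookup", "name": "Account lookup",
                    "description": "Fetch account records", "tags": ["data-retrieval"]}],
    }),
    json.dumps({
        "name": "Project Management Agent",
        "url": "https://pm-agent.example.com/a2a",
        "skills": [{"id": "ticket", "name": "Create ticket",
                    "description": "Open a follow-up task", "tags": ["task-tracking"]}],
    }),
]
cards = [AgentCard.from_json(raw) for raw in raw_cards]
print([c.name for c in find_agents_with_tag(cards, "task-tracking")])  # ['Project Management Agent']
```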

🚀 Build & test AI Agents with n8n and Maxim!

Learn how to build a reliable no-code AI agent using n8n for visual workflow automation and Maxim to evaluate its quality and performance.

In this example, the agent finds public events in the US and provides detailed information based on user preferences. Expose the agent as a webhook in n8n and integrate it into Maxim Workflows via an API call. This enables you to simulate agent behavior across scenarios and user personas, and evaluate the quality of interactions at every step. Check out our blog!
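
Once the n8n workflow starts with a Webhook node, the agent is just an HTTP endpoint. n8n serves production webhooks under `/webhook/<path>`. The sketch below shows the kind of JSON POST request an integration would send to that endpoint. The host, the `event-finder` path, and the `message`/`preferences` payload fields are hypothetical and depend on how you configure your workflow.

```python
import json
import urllib.request
from typing import Dict

# Hypothetical URL: replace host and path with your n8n Webhook node's production URL.
N8N_WEBHOOK_URL = "https://your-n8n-host.example.com/webhook/event-finder"


def build_agent_request(message: str, preferences: Dict[str, str],
                        url: str = N8N_WEBHOOK_URL) -> urllib.request.Request:
    """Build a JSON POST request for the agent webhook (payload shape is hypothetical)."""
    body = json.dumps({"message": message, "preferences": preferences}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def call_agent(request: urllib.request.Request, timeout: float = 30.0) -> Dict:
    """Send the request and decode the reply (assumes the workflow responds with JSON)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_agent_request(
    "Find tech meetups this weekend",
    {"city": "Austin", "category": "technology"},
)
# call_agent(request) would perform the network call against your live workflow.
```

Keeping request construction separate from sending makes it easy to vary the message and preferences per scenario or persona before the call goes out.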

Video guide to build and test AI agents using n8n and Maxim