Top 5 Tools to Detect Hallucinations in AI Applications: A Comprehensive Guide

TL;DR:

As AI systems move from prototypes to production, hallucination detection has become essential to maintaining trust and reliability. This guide explains how teams identify factual drift in model outputs, what criteria define an effective detection tool, and how emerging evaluation frameworks are shaping safer, more transparent AI workflows.

Artificial Intelligence (AI) has advanced rapidly over the past decade, with Large Language Models (LLMs) and AI agents becoming integral to business operations, customer support, content creation, and more. However, as these systems proliferate, so does the risk of hallucinations: instances where AI generates plausible-sounding but factually incorrect or misleading information. Hallucinations undermine trust, compromise safety, and can have real-world consequences, especially in high-stakes domains. Detecting and mitigating them effectively is critical to building reliable and responsible AI.

This post explores the top five tools for hallucination detection in AI applications, assesses their detection methods, and highlights how Maxim AI stands out with its robust, enterprise-ready approach.

Table of Contents

- Understanding Hallucinations in AI

- Criteria for Evaluating Hallucination Detection Tools

- Top 5 Tools for Hallucination Detection

- Best Practices for Integrating Hallucination Detection

- Conclusion

- Further Reading and Resources

Understanding Hallucinations in AI

Hallucinations in AI are outputs that are fluent and contextually plausible but factually inaccurate or misleading. They range from minor factual errors to entirely fabricated statements, such as an invented citation or statistic. The problem is especially common in LLM-based applications, because these models generate text by predicting likely next tokens rather than by retrieving verified, grounded knowledge.

For a deeper dive into the causes and implications of AI hallucinations, see AI Reliability: How to Build Trustworthy AI Systems and What Are AI Evals?
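To make the definition concrete, the sketch below flags answer sentences whose content words barely appear in the source context an application retrieved. It is a deliberately crude lexical heuristic, not how any of the tools below work: the function names, the stopword list, and the 0.5 overlap threshold are illustrative assumptions, and word overlap misses paraphrases and negations that practical detectors (for example, NLI models or LLM judges) are meant to catch.

```python
import re

# Small illustrative stopword list (an assumption; real systems use richer NLP tooling).
STOPWORDS = {"the", "a", "an", "is", "are", "was", "were", "of", "in", "on",
             "and", "or", "to", "it", "its", "by", "for", "with", "as", "at"}


def content_words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def flag_unsupported_sentences(answer: str, context: str, min_overlap: float = 0.5) -> list[str]:
    """Return answer sentences whose content words are mostly absent from the context."""
    context_words = content_words(context)
    flagged = []
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue
        overlap = len(words & context_words) / len(words)
        if overlap < min_overlap:
            flagged.append(sentence)
    return flagged


context = "The Eiffel Tower is in Paris. It was completed in 1889."
answer = "The Eiffel Tower is in Paris. It was designed by Leonardo da Vinci in 1650."
print(flag_unsupported_sentences(answer, context))
```

Here the second sentence is flagged because none of its content words occur in the context, even though it reads just as fluently as the first.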

Criteria for Evaluating Hallucination Detection Tools

When selecting a hallucination detection tool, consider the following criteria:

- Accuracy: How reliably does the tool flag hallucinated content?

- Integration: Can it be embedded seamlessly into your existing AI pipelines?

- Observability: Does it provide actionable insights and traceability?

- Scalability: Is it suitable for production-scale applications?

- Compliance: Does it support auditability and regulatory requirements?

| Criterion | What It Means | Example Metrics |
|---|---|---|
| Accuracy | How precisely the tool flags hallucinated content | Factuality score, faithfulness rate |
| Integration | Ease of connecting to AI pipelines and MLOps stacks | SDK support, API latency |
| Observability | Depth of traceability and root-cause insight | Span tracing, evaluation logs |
| Scalability | Ability to handle production-scale workloads | Requests/sec, memory footprint |
| Compliance | Support for audit and governance requirements | Trace retention, GDPR readiness |
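The accuracy row is easiest to reason about when a detector's flags are compared against human review labels. The following sketch assumes a small hand-labeled sample; `LabeledOutput` and `detector_metrics` are hypothetical names, and "faithfulness rate" is taken here to mean the share of outputs reviewers judged faithful, which may not match how a given tool defines it.

```python
from dataclasses import dataclass


@dataclass
class LabeledOutput:
    flagged: bool       # did the detector flag this output as a hallucination?
    hallucinated: bool  # did a human reviewer judge it to be one?


def detector_metrics(samples: list[LabeledOutput]) -> dict[str, float]:
    """Score detector flags against human labels."""
    if not samples:
        raise ValueError("need at least one labeled sample")
    tp = sum(s.flagged and s.hallucinated for s in samples)
    fp = sum(s.flagged and not s.hallucinated for s in samples)
    fn = sum(not s.flagged and s.hallucinated for s in samples)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Share of outputs that reviewers judged faithful to their sources.
    faithfulness_rate = sum(not s.hallucinated for s in samples) / len(samples)
    return {"precision": precision, "recall": recall, "f1": f1,
            "faithfulness_rate": faithfulness_rate}


samples = [
    LabeledOutput(flagged=True, hallucinated=True),
    LabeledOutput(flagged=True, hallucinated=False),   # false alarm
    LabeledOutput(flagged=False, hallucinated=True),   # missed hallucination
    LabeledOutput(flagged=False, hallucinated=False),
    LabeledOutput(flagged=True, hallucinated=True),
]
print({k: round(v, 3) for k, v in detector_metrics(samples).items()})
```

Precision indicates how often a flag is worth investigating; recall indicates how many real hallucinations slip through. Which one to favor depends on whether false alarms or missed errors cost more in your domain.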

Top 5 Tools for Hallucination Detection

| Tool | Focus | Detection Method | Observability | Integration | Best For |
|---|---|---|---|---|---|
| Maxim AI | Evaluation & observability | Multi-stage detection (prompt, output, user) with rule/model evals | Full span traces, metrics, feedback loop | APIs, SDKs, orchestration (LangChain, OpenAI) | Enterprises needing reliable hallucination monitoring |
| Arize AI | Model observability | Embedding drift + anomaly detection | Drift metrics for LLMs & tabular data | Databricks, Vertex, MLflow | Teams tracking model drift in production |
| Comet | Experiment tracking | Output comparison via experiment logs | Limited (experiment-level only) | Python SDK, REST API | R&D teams analyzing prompt variations |
| LangSmith | Tracing & debugging | Test-based output evaluation | Chain-level tracing + metadata capture | LangChain-native + API | Developers debugging multi-chain LLM apps |
| Langfuse | LLM monitoring | Prompt/output logging + anomaly scoring | Session-level metrics & dashboards | OpenAI, Hugging Face, LangChain | Startups monitoring prompt quality in production |

1. Maxim AI

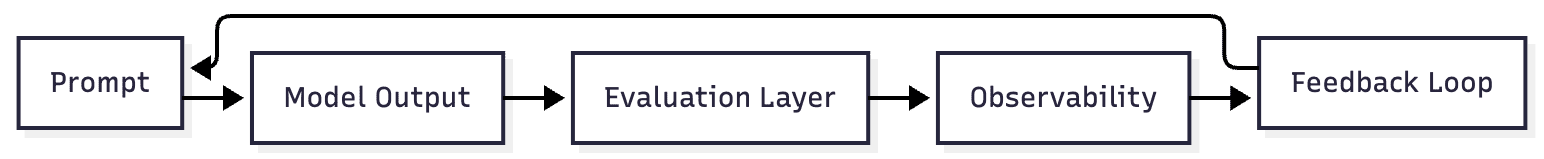

Maxim AI offers a comprehensive platform for AI agent quality evaluation, with advanced capabilities for hallucination detection, traceability, and workflow automation. Its evaluation workflows are designed to catch hallucinations at multiple stages (prompt design, model output, and user interaction), providing quality control across the application lifecycle.

Key Features

- Automated Hallucination Detection: Maxim leverages both rule-based and model-based detection strategies, allowing organizations to customize evaluation metrics to their specific domain. For more details, see AI Agent Evaluation Metrics.

- Integrated Prompt Management: By tracking prompt changes and their effect on hallucination rates, Maxim empowers teams to optimize prompts for factual accuracy. Learn more in Prompt Management in 2025.

- Rich Observability: Maxim provides detailed tracing and observability tools, enabling root-cause analysis of hallucinations. See LLM Observability: How to Monitor Large Language Models in Production.

- Enterprise-Grade Compliance: Maxim supports audit trails and compliance features essential for regulated industries.

- Seamless Integration: With robust APIs, Maxim fits into modern MLOps pipelines and supports integration with leading orchestrators.
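Maxim's own evaluator API is not shown here. As a generic illustration of combining rule-based and model-based strategies, the sketch below pairs a cheap rule (every number in the answer must appear in the context) with a model-based judge passed in as a callable. `Judge`, `evaluate`, `dummy_judge`, and the 0.5 threshold are hypothetical; a real judge would wrap an LLM or classifier rather than return a constant.

```python
import re
from typing import Callable

# Hypothetical judge interface: takes (answer, context) and returns a score in [0, 1],
# where higher means "more likely to contain unsupported claims".
Judge = Callable[[str, str], float]

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def numbers_not_in_context(answer: str, context: str) -> list[str]:
    """Rule-based check: numbers stated in the answer that never appear in the context."""
    context_numbers = set(NUMBER.findall(context))
    return [n for n in NUMBER.findall(answer) if n not in context_numbers]


def evaluate(answer: str, context: str, judge: Judge, threshold: float = 0.5) -> dict:
    """Combine a deterministic rule with a model-based judge score."""
    violations = numbers_not_in_context(answer, context)
    score = judge(answer, context)
    return {
        "rule_violations": violations,
        "judge_score": score,
        # Flag the output if either signal fires.
        "hallucination": bool(violations) or score >= threshold,
    }


def dummy_judge(answer: str, context: str) -> float:
    """Stand-in for a real LLM or classifier judge; always returns a low score."""
    return 0.1


context = "Revenue was 4.2 million dollars, up 12 percent year over year."
result = evaluate("Revenue reached 4.2 million, up 15 percent.", context, dummy_judge)
print(result)
```

Keeping the rule separate from the judge makes each signal auditable on its own: the rule reports exactly which numbers are unsupported, while the judge contributes a graded score.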

Real-World Impact

Maxim’s capabilities are highlighted in multiple case studies, such as Clinc’s Path to AI Confidence and Mindtickle’s Quality Evaluation, where organizations reduced hallucination rates and improved user trust.

Learn More

For a detailed comparison with other tools, see Maxim vs Arize, Maxim vs LangSmith, and Maxim vs Langfuse.

2. Arize AI

Arize AI focuses on observability and monitoring for machine learning models, including LLMs. Its hallucination detection capabilities center on anomaly detection and drift monitoring, which help teams identify when model outputs deviate from expected norms.

Key Features:

- Automated anomaly detection

- Model drift tracking

- Integration with existing MLOps stacks

For a detailed comparison, see Maxim vs Arize.
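Arize's drift metrics are not reproduced here. As a simplified illustration of embedding drift monitoring, the sketch compares the centroid (mean vector) of production embeddings with that of a baseline using cosine distance. The two-dimensional vectors and the 0.2 alert threshold are made-up assumptions; real embeddings have hundreds of dimensions, and drift signals a shift in inputs or outputs rather than proving that any individual answer is hallucinated.

```python
import math


def centroid(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean of equally sized vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def embedding_drift(baseline: list[list[float]], production: list[list[float]]) -> float:
    """Cosine distance between the mean baseline and mean production embeddings."""
    return cosine_distance(centroid(baseline), centroid(production))


baseline = [[1.0, 0.0], [0.9, 0.1]]
production = [[0.1, 0.9], [0.0, 1.0]]
drift = embedding_drift(baseline, production)
print(f"drift={drift:.3f}", "ALERT" if drift > 0.2 else "ok")
```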

3. Comet

Comet provides experiment tracking, model monitoring, and evaluation tools for ML practitioners. Its LLMOps suite includes features for prompt management and output evaluation, which can aid in identifying hallucinated responses.

Key Features:

- Experiment and prompt tracking

- Model output evaluation

- Collaboration features for teams

See Maxim vs Comet for a feature-by-feature breakdown.
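Comparing prompt variations usually comes down to grouping logged evaluation results by variant. The sketch below does this over plain dictionaries rather than Comet's SDK; the record fields (`prompt_version`, `hallucinated`) and the data are hypothetical stand-ins for whatever an experiment tracker exports.

```python
from collections import defaultdict

# Hypothetical experiment log: one record per evaluated output.
runs = [
    {"prompt_version": "v1", "hallucinated": True},
    {"prompt_version": "v1", "hallucinated": False},
    {"prompt_version": "v1", "hallucinated": True},
    {"prompt_version": "v2", "hallucinated": False},
    {"prompt_version": "v2", "hallucinated": False},
    {"prompt_version": "v2", "hallucinated": True},
]


def hallucination_rate_by_prompt(records: list[dict]) -> dict[str, float]:
    """Fraction of flagged outputs per prompt version."""
    totals: dict[str, int] = defaultdict(int)
    flagged: dict[str, int] = defaultdict(int)
    for r in records:
        totals[r["prompt_version"]] += 1
        flagged[r["prompt_version"]] += int(r["hallucinated"])
    return {v: flagged[v] / totals[v] for v in sorted(totals)}


rates = hallucination_rate_by_prompt(runs)
best = min(rates, key=rates.get)  # variant with the lowest hallucination rate
print(rates, "best:", best)
```

With only three samples per variant, as here, the difference is anecdotal; real comparisons need enough outputs per variant for the rates to be meaningful.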

4. LangSmith

LangSmith specializes in tracing and debugging LLM applications. Its evaluation tools help developers identify hallucinations through test suites and output analysis.

Key Features:

- Output tracing and debugging

- Custom evaluation workflows

- Integration with LangChain and related frameworks

For more, visit Maxim vs LangSmith.
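LangSmith's SDK is not used here. The sketch below illustrates the test-suite idea in plain Python: each case pairs a fixed question with facts the answer must contain and known fabrications it must not contain. `EvalCase`, `run_suite`, and `toy_app` are hypothetical, and `toy_app` stands in for a real LLM chain.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class EvalCase:
    question: str
    required_facts: list[str]                                   # must appear in the answer
    forbidden_claims: list[str] = field(default_factory=list)  # known fabrications


def run_suite(app: Callable[[str], str], cases: list[EvalCase]) -> list[str]:
    """Run every case through the app and describe each failure."""
    failures = []
    for case in cases:
        answer = app(case.question).lower()
        missing = [f for f in case.required_facts if f.lower() not in answer]
        fabricated = [c for c in case.forbidden_claims if c.lower() in answer]
        if missing or fabricated:
            failures.append(f"{case.question!r}: missing={missing} fabricated={fabricated}")
    return failures


def toy_app(question: str) -> str:
    """Stand-in for a real LLM chain with canned answers."""
    answers = {
        "When was the Eiffel Tower completed?": "It was completed in 1889.",
        "Who designed the Eiffel Tower?": "It was designed by Leonardo da Vinci.",
    }
    return answers.get(question, "")


cases = [
    EvalCase("When was the Eiffel Tower completed?", ["1889"]),
    EvalCase("Who designed the Eiffel Tower?", ["Eiffel"], ["da Vinci"]),
]
for failure in run_suite(toy_app, cases):
    print(failure)
```

Substring matching keeps the example short but is brittle; it is mainly useful for regression tests that pin down known failure cases.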

5. Langfuse

Langfuse offers monitoring and observability for LLM-powered applications, focusing on prompt and output evaluation. Its tools help teams identify and address hallucinated outputs in production environments.

Key Features:

- Prompt and output monitoring

- Real-time analytics

- Integration with popular LLM frameworks

Compare with Maxim at Maxim vs Langfuse.

Best Practices for Integrating Hallucination Detection

- Incorporate Evaluation Early: Integrate hallucination detection during development, not just in production. Use tools like Maxim’s Evaluation Workflows to establish baselines.

- Leverage Observability: Implement tracing and observability to understand the context of hallucinations. See Agent Tracing for Debugging Multi-Agent AI Systems.

- Optimize Prompts: Regularly test and refine prompts to minimize hallucination risk. Refer to Prompt Management in 2025.

- Automate Evaluation: Use automated metrics and workflows to scale hallucination detection without manual bottlenecks.

- Monitor Continuously: Deploy continuous monitoring in production to catch new or evolving failure modes. See AI Model Monitoring is the Key to Reliable and Responsible AI.
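Continuous monitoring can be as simple as comparing a rolling hallucination-flag rate against the baseline established during development. In the sketch below, `HallucinationMonitor`, the 50-output window, the 2% baseline, and the 5-point tolerance are all illustrative assumptions, and the input stream is simulated.

```python
from collections import deque


class HallucinationMonitor:
    """Tracks the flag rate over the last `window` outputs and alerts on regressions."""

    def __init__(self, baseline_rate: float, window: int = 100, tolerance: float = 0.05):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # keeps only the most recent flags

    def record(self, flagged: bool) -> bool:
        """Record one evaluated output; return True if the rolling rate breaches the limit."""
        self.recent.append(flagged)
        if len(self.recent) < self.recent.maxlen:
            return False  # wait until the window is full
        rate = sum(self.recent) / len(self.recent)
        return rate > self.baseline_rate + self.tolerance


monitor = HallucinationMonitor(baseline_rate=0.02, window=50)

# Simulated stream: 200 outputs with a 2% flag rate, then 100 outputs at 20%.
stream = [i % 50 == 0 for i in range(200)] + [i % 5 == 0 for i in range(100)]

alert_step = None
for step, flagged in enumerate(stream):
    if monitor.record(flagged):
        alert_step = step
        break
print("first alert at step:", alert_step)
```

The window size trades sensitivity for noise: a short window reacts quickly to a regression but also fires on random bursts.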

Conclusion

Hallucinations present a significant challenge for AI applications, but with the right tools and practices, organizations can mitigate the risk and build trustworthy systems. Maxim AI combines evaluation, observability, and workflow automation in a single platform, which makes it a strong choice for enterprises seeking to ensure AI reliability.

To see Maxim in action or discuss your evaluation needs, schedule a demo.

Further Reading and Resources

- AI Agent Quality Evaluation

- Evaluation Workflows for AI Agents

- Prompt Management in 2025

- AI Reliability: How to Build Trustworthy AI Systems

- How to Ensure Reliability of AI Applications: Strategies, Metrics, and the Maxim Advantage

- What Are AI Evals?

For a personalized consultation, reach out to Maxim’s team or explore the Maxim documentation for more technical details.