The Importance of Observability: Why Your AI Agents Need It

The artificial intelligence industry is experiencing a troubling paradox. While AI adoption has reached unprecedented levels, with enterprises investing $30 billion to $40 billion in generative AI pilots in 2024, the failure rate has simultaneously skyrocketed. Recent analysis from S&P Global Market Intelligence reveals that 42% of companies now abandon the majority of their AI initiatives, a dramatic surge from just 17% the previous year.

The root cause? Most organizations lack the observability infrastructure necessary to understand, monitor, and improve their AI agents in production. When AI agents operate as "black boxes," teams struggle to diagnose issues, optimize performance, or ensure reliability. This article explores why observability is not optional for production AI systems and how comprehensive monitoring can transform your AI agents from unpredictable experiments into reliable, production-ready solutions.

The Production AI Reliability Crisis

The statistics paint a sobering picture of AI deployment reality. Gartner research shows that at least 30% of generative AI projects will be abandoned after proof of concept by the end of 2025, primarily due to poor data quality, inadequate risk controls, escalating costs, or unclear business value. Even more concerning, MIT research found that 95% of AI pilots in 2024 delivered zero measurable business return.

The average organization scraps 46% of AI proof-of-concepts before they reach production. This represents roughly $30 billion in destroyed shareholder value in a single year. The reasons enterprises cite include cost overruns, data privacy concerns, and security risks, all challenges that proper observability can help address.

What makes this crisis particularly acute is that traditional monitoring tools, designed for deterministic software systems, cannot adequately address the unique challenges of AI agents. Unlike conventional applications that follow predictable execution paths, AI agents exhibit non-deterministic behavior and make autonomous decisions that can vary significantly across sessions with identical inputs.

Why AI Agents Are Different: The Non-Determinism Challenge

AI agents fundamentally differ from traditional software in ways that make observability critically important. Traditional software follows deterministic logic: given the same input, it produces the same output every time. AI agents, however, use probabilistic models that can produce different results from identical inputs.
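To make the contrast concrete, here is a minimal sketch of temperature-based sampling, one common source of output variation in LLMs. The scores, the `sample_token` helper, and the seed are illustrative assumptions, not taken from any particular model or library.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Pick a token index from raw scores; temperature 0 means greedy (argmax)."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.5, 0.5]
rng = random.Random(7)

# Same input every time: greedy decoding repeats itself, sampling does not.
greedy = {sample_token(logits, 0, rng) for _ in range(200)}
sampled = {sample_token(logits, 1.0, rng) for _ in range(200)}
print("greedy picks:", greedy)
print("sampled picks:", sampled)
```

With greedy decoding the same index comes back on every call, while sampling at temperature 1.0 spreads its picks across several candidates even though the input never changes.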

This non-deterministic nature creates several observability challenges:

Opaque Decision-Making: When an agent fails or produces unexpected output, teams need detailed traces to understand why. According to OpenTelemetry's AI agent observability initiative, without proper monitoring, tracing, and logging mechanisms, diagnosing issues and improving efficiency becomes extremely challenging as agents scale to meet enterprise needs.

Dynamic Execution Paths: Agents often maintain internal memory and make decisions through callbacks that never appear in standard logs. A hiring agent might process thousands of resumes without technical errors, but if it silently favors one demographic group over another through biased decision-making, traditional monitoring would still show green lights across the board.

Multi-Step Workflows: Modern agentic systems involve multiple LLM calls, tool invocations, and conditional logic. Teams building agents with frameworks like LangGraph, CrewAI, or OpenAI's Agents SDK face the challenge of capturing complete execution paths across these complex workflows.

Cost and Latency Complexity: AI agents rely on LLMs and external APIs billed per token or per call, making cost attribution and latency optimization critical concerns that require granular visibility.

What Is AI Agent Observability?

AI agent observability is the practice of monitoring artificial intelligence applications from source to deployment, providing complete end-to-end visibility into both the health and performance of AI systems in production. Unlike traditional software observability that focuses on system metrics, logs, and traces, AI observability must monitor four interdependent components: data, system, code, and model response.

The OpenTelemetry GenAI Special Interest Group is actively working to standardize AI agent observability through semantic conventions that define how telemetry data should be collected and reported. This standardization effort aims to prevent vendor lock-in and ensure interoperability across different frameworks and observability tools.
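As a rough illustration of what such conventions look like, the sketch below builds the attributes for one LLM call as a plain dictionary. The attribute keys follow the `gen_ai.*` naming used in the OpenTelemetry GenAI semantic conventions as best understood here. Those conventions are still evolving, so check the names against the current specification. The `genai_span_attributes` helper and the example values are hypothetical.

```python
def genai_span_attributes(operation, model, input_tokens, output_tokens):
    """Build a flat attribute dict describing one LLM call.

    Keys are assumed to match the gen_ai.* semantic conventions; verify
    them against the published spec before relying on them.
    """
    return {
        "gen_ai.operation.name": operation,
        "gen_ai.request.model": model,
        "gen_ai.usage.input_tokens": input_tokens,
        "gen_ai.usage.output_tokens": output_tokens,
    }

# Hypothetical values for a single chat completion.
attrs = genai_span_attributes("chat", "example-model", 812, 164)
for key, value in attrs.items():
    print(f"{key} = {value}")
```

Because the keys are shared across vendors, any backend that understands the conventions can aggregate token usage without framework-specific parsing.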

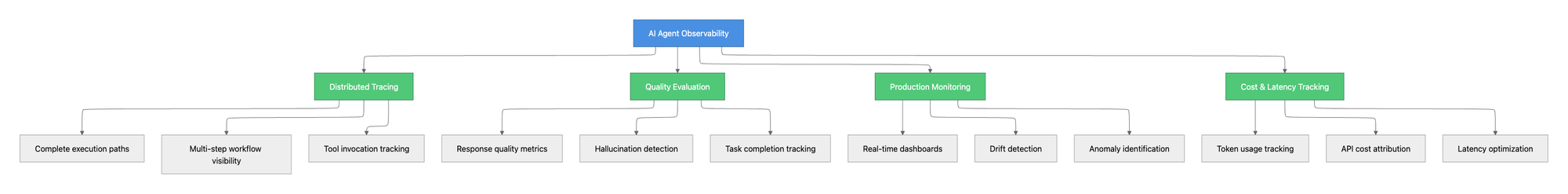

Effective AI observability encompasses several critical capabilities:

The Critical Importance of AI Agent Observability

Debugging and Root Cause Analysis

When an agent fails or produces unexpected output, observability tools provide the traces needed to pinpoint the source of error. This capability is especially important in complex agents involving multiple LLM calls, tool interactions, and conditional logic.

Without observability, debugging becomes a guessing game. Teams can spend hours trying to reproduce issues that occur only under specific conditions or with particular user inputs. With proper observability, engineers can replay traces, examine decision points, and see where and why a failure occurred.
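The idea of a trace can be sketched in a few lines. The `Tracer` class below is a hypothetical, in-memory stand-in for a real tracing SDK: it records nested spans with parent links and keeps any exception on the span where it surfaced. The span names and the simulated failure are assumptions for illustration.

```python
import time
import uuid
from contextlib import contextmanager

class Tracer:
    """Collects spans in memory; a real system would export them to a backend."""

    def __init__(self):
        self.spans = []
        self._stack = []  # ids of currently open spans

    @contextmanager
    def span(self, name, **attributes):
        record = {
            "id": uuid.uuid4().hex,
            "parent_id": self._stack[-1] if self._stack else None,
            "name": name,
            "attributes": attributes,
            "error": None,
        }
        self._stack.append(record["id"])
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record["error"] = repr(exc)  # keep the failure on the span itself
            raise
        finally:
            record["duration_s"] = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(record)

tracer = Tracer()
try:
    with tracer.span("agent.run", user_input="refund order 1234"):
        with tracer.span("llm.plan"):
            pass  # stand-in for a model call
        with tracer.span("tool.lookup_order", order_id="1234"):
            raise KeyError("order not found")  # simulated tool failure
except KeyError:
    pass

# Spans are stored as they close, so the innermost failing span comes first.
failed = [s["name"] for s in tracer.spans if s["error"]]
print(failed)
```

Reading the failed spans from the innermost outward points at the tool call as the origin of the error, rather than at the agent run that merely propagated it.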

Preventing Silent Failures at Scale

The most dangerous failures in AI systems are those that occur without any error signals. Standard monitoring tools would show green lights across the board while an agent silently makes poor decisions that impact business outcomes and user trust.

Consider these real-world scenarios:

- A customer service agent that consistently escalates simple queries unnecessarily, inflating support costs

- A recommendation engine that slowly drifts toward suggesting only high-margin products, damaging customer experience

- A document processing agent that begins extracting incorrect information after a model update

These issues won't trigger traditional alerts based on error rates or system health. They require quality evaluation and behavioral monitoring that observability platforms provide.
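One simple form of behavioral monitoring is to track the rate of a business-level outcome, such as escalations, over a rolling window and compare it with a baseline. The sketch below assumes a hypothetical `RateDriftMonitor` class and illustrative thresholds. Production systems would typically use more robust statistics.

```python
from collections import deque

class RateDriftMonitor:
    """Flags when the recent rate of a boolean outcome drifts above a baseline."""

    def __init__(self, baseline_rate, tolerance, window):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.events = deque(maxlen=window)  # oldest events fall off automatically

    def record(self, outcome):
        self.events.append(bool(outcome))

    def current_rate(self):
        return sum(self.events) / len(self.events) if self.events else 0.0

    def drifted(self):
        # Only judge once the window is full, to avoid noisy early alerts.
        window_full = len(self.events) == self.events.maxlen
        return window_full and self.current_rate() > self.baseline_rate + self.tolerance

# Hypothetical escalation baseline of 10%, with a 5-point tolerance.
monitor = RateDriftMonitor(baseline_rate=0.10, tolerance=0.05, window=100)

for i in range(100):
    monitor.record(i % 10 == 0)  # 10% of queries escalated
healthy = monitor.drifted()

for i in range(100):
    monitor.record(i % 4 == 0)   # 25% of queries escalated, no errors raised
degraded = monitor.drifted()

print(healthy, degraded)
```

Every request in the second batch still succeeds from a system-health point of view. Only the outcome rate reveals that the agent's behavior has changed.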

Cost Management and Optimization

AI agents often accumulate substantial costs through LLM API calls, each billed per token. Research on AI-driven systems shows that organizations need accurate cost attribution across users, features, and workflows to manage expenses effectively.

Observability platforms track token usage at granular levels, enabling teams to:

- Identify workflows consuming disproportionate resources

- Compare costs across different model providers

- Optimize prompt engineering to reduce token consumption

- Set budget limits and receive alerts before overruns occur
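The capabilities listed above can be sketched as a small cost-attribution routine. The prices, model name, workflow names, and budgets below are hypothetical. Real per-token prices vary by provider and model.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens.
PRICES = {"model-a": {"input": 0.50, "output": 1.50}}

def call_cost(model, input_tokens, output_tokens):
    """Cost of one LLM call from its token counts."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000

def cost_by_workflow(calls):
    """Sum call costs per workflow so spend can be attributed."""
    totals = defaultdict(float)
    for call in calls:
        totals[call["workflow"]] += call_cost(
            call["model"], call["input_tokens"], call["output_tokens"]
        )
    return dict(totals)

def over_budget(totals, budgets):
    """Return workflows whose spend exceeds their budget (no budget means no limit)."""
    return sorted(w for w, spent in totals.items() if spent > budgets.get(w, float("inf")))

calls = [
    {"workflow": "support", "model": "model-a", "input_tokens": 2000, "output_tokens": 1000},
    {"workflow": "support", "model": "model-a", "input_tokens": 4000, "output_tokens": 2000},
    {"workflow": "search", "model": "model-a", "input_tokens": 1000, "output_tokens": 0},
]
totals = cost_by_workflow(calls)
alerts = over_budget(totals, {"support": 5.0, "search": 1.0})
print(totals, alerts)
```

Grouping by a different key, such as user or feature, needs only a change to the dictionary key used in `cost_by_workflow`.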

Building Trust Through Transparency

Over 65% of organizations deploying AI systems cite monitoring and quality assurance as their primary technical challenge, according to Stanford's AI Index. This challenge stems from the fundamental need to trust AI systems making autonomous decisions.

Observability transforms AI agents from opaque "black boxes" into transparent "glass boxes," enabling:

- Explainability of agent decisions for compliance and audit requirements

- Validation that agents behave according to defined policies

- Evidence-based confidence in agent reliability for stakeholders

- Continuous improvement through data-driven optimization

The Observability Architecture for AI Agents

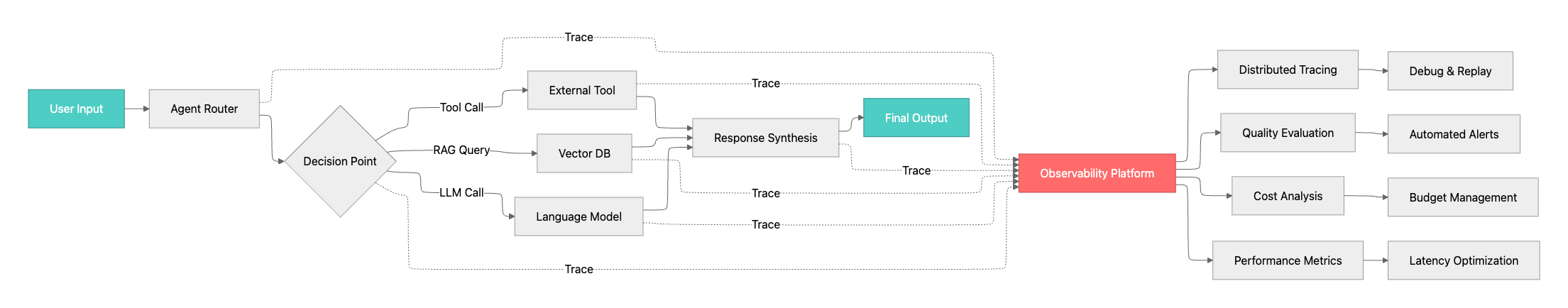

Modern AI agent observability requires a comprehensive architecture that captures data at every stage of the agent lifecycle.

This architecture ensures that every interaction, from initial user input through tool invocations to final response, is captured with sufficient context for debugging, evaluation, and optimization.

How Maxim AI Enables Comprehensive Agent Observability

Maxim AI provides an end-to-end platform that unifies simulation, evaluation, and observability, enabling teams to ship AI agents reliably and up to 5x faster. The platform's agent observability suite addresses the complete spectrum of production monitoring needs.

Distributed Tracing for Multi-Agent Systems

Maxim captures production telemetry through distributed tracing that tracks every step from user input through tool invocation to final response. This tracing capability provides visibility into:

- Complete execution paths across multi-step agent workflows

- Every LLM call with input/output pairs and token counts

- Tool invocations and their results

- Decision points and branching logic

- Context retrieved from RAG pipelines or databases

The platform's trace visualization enables teams to replay specific interactions, identify bottlenecks, and understand exactly how agents reached particular decisions.

Real-Time Quality Monitoring and Evaluation

Maxim's unified framework for machine and human evaluations allows teams to quantify improvements or regressions and deploy with confidence. The platform offers:

Automated Quality Checks: Run custom evaluations tailored to your specific agents and business logic, or leverage built-in templates for metrics like response relevancy, helpfulness, prompt adherence, language match, and task completion.

Flexible Evaluation Framework: Access a variety of off-the-shelf evaluators through the evaluator store or create custom evaluators using AI-based, programmatic, or statistical methods. Evaluations can be configured at session, trace, or span level for fine-grained quality assessment.

Human-in-the-Loop Validation: Conduct human evaluations for last-mile quality checks and nuanced assessments that automated systems cannot fully capture. This hybrid approach ensures agents align with human preferences and business requirements.
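To illustrate what a programmatic evaluator applied at span level might look like, here is a generic sketch. It does not use Maxim's SDK. The function names, the span structure, and the required-terms check are assumptions chosen for brevity.

```python
def required_terms_evaluator(output, required_terms):
    """Programmatic check: fraction of required terms present in the output."""
    if not required_terms:
        return 1.0
    text = output.lower()
    hits = [term for term in required_terms if term.lower() in text]
    return len(hits) / len(required_terms)

def evaluate_trace(spans, threshold):
    """Score each span, then pass the trace only if every span meets the threshold."""
    scores = {
        span["name"]: required_terms_evaluator(span["output"], span["required_terms"])
        for span in spans
    }
    return {"span_scores": scores, "passed": all(v >= threshold for v in scores.values())}

# Hypothetical trace with two spans evaluated against the same requirement.
trace = [
    {"name": "summarize", "output": "Refund approved for order 1234.",
     "required_terms": ["refund", "1234"]},
    {"name": "reply", "output": "Your request is being processed.",
     "required_terms": ["refund", "1234"]},
]
result = evaluate_trace(trace, threshold=0.5)
print(result)
```

Scoring at span level shows which step dropped the required information, which a single trace-level score would hide.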

Production-to-Evaluation Feedback Loops

One of Maxim's key differentiators is the seamless connection between production monitoring and pre-release testing. Teams can:

- Curate datasets from production logs for regression testing

- Use real-world failures to strengthen future simulations

- Test prompt changes against historical production data before deployment

- Create continuous improvement cycles that enhance AI quality throughout the development lifecycle
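The loop described above can be sketched generically: select low-scoring production interactions, then replay them against a candidate agent before release. The log fields, the `candidate_agent` function, and the pass/fail check are hypothetical and do not reflect Maxim's API.

```python
def curate_regression_cases(logs, min_score):
    """Keep low-scoring production interactions, de-duplicated by normalized input."""
    seen = set()
    cases = []
    for entry in logs:
        key = entry["input"].strip().lower()
        if entry["score"] < min_score and key not in seen:
            seen.add(key)
            cases.append({"input": entry["input"], "bad_output": entry["output"]})
    return cases

def run_regression(cases, agent, check):
    """Re-run each case through a candidate agent; return inputs that still fail."""
    return [c["input"] for c in cases if not check(c["input"], agent(c["input"]))]

# Hypothetical production logs with an evaluator score per interaction.
logs = [
    {"input": "Cancel my order", "output": "I cannot help with that.", "score": 0.2},
    {"input": "cancel my order ", "output": "Please contact support.", "score": 0.3},
    {"input": "Track my package", "output": "It arrives Tuesday.", "score": 0.9},
]
cases = curate_regression_cases(logs, min_score=0.5)

def candidate_agent(text):
    """Stand-in for a new prompt or model version under test."""
    return "Your order has been cancelled." if "cancel" in text.lower() else "Sorry?"

def mentions_cancellation(_input, output):
    """Illustrative pass/fail check for the curated cases."""
    return "cancelled" in output

still_failing = run_regression(cases, candidate_agent, mentions_cancellation)
print(len(cases), still_failing)
```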

This integration means that production observability directly informs experimentation and evaluation, creating a virtuous cycle of improvement.

Cost Visibility and Budget Management

Maxim tracks token usage, API costs, and latency at granular levels, providing teams with clear visibility into where expenses accumulate. The platform enables:

- Cost attribution across users, features, and workflows

- Budget alerts before overruns impact business operations

- Comparison of costs across different model providers

- Identification of optimization opportunities

For teams using multiple LLM providers, Maxim's Bifrost LLM gateway provides unified access to 12+ providers through a single OpenAI-compatible API, with automatic failover, load balancing, and semantic caching to reduce costs and latency.
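Automatic failover, in its simplest form, means trying providers in priority order until one responds. The sketch below illustrates that idea only and is not Bifrost's implementation or API. The provider functions and the `call_with_failover` helper are hypothetical.

```python
def call_with_failover(providers, prompt):
    """Try each (name, send) provider in order; return the first successful response."""
    errors = []
    for name, send in providers:
        try:
            return {"provider": name, "response": send(prompt), "errors": errors}
        except Exception as exc:
            errors.append((name, repr(exc)))  # record the failure, then move on
    raise RuntimeError(f"all providers failed: {errors}")

def flaky_primary(prompt):
    """Stand-in for a provider that is currently timing out."""
    raise TimeoutError("primary timed out")

def healthy_secondary(prompt):
    """Stand-in for a working backup provider."""
    return f"echo: {prompt}"

outcome = call_with_failover(
    [("primary", flaky_primary), ("secondary", healthy_secondary)],
    "hello",
)
print(outcome["provider"], outcome["response"])
```

Returning the accumulated errors alongside the response keeps the failed attempts visible, so a fallback that succeeds does not mask a degraded primary provider.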

Cross-Functional Collaboration

While Maxim offers highly performant SDKs in Python, TypeScript, Java, and Go, the platform's user experience is designed for cross-functional collaboration. Product managers can configure evaluations, create custom dashboards, and review agent performance without deep technical expertise, while engineers maintain full programmatic control through SDKs.

This approach addresses a common pain point in AI development: the disconnect between engineering teams who build agents and product teams who define success criteria. Maxim ensures both groups can work together seamlessly throughout the AI lifecycle.

Getting Started with AI Agent Observability

Implementing comprehensive observability for your AI agents requires a systematic approach:

1. Instrument Your Agents: Integrate Maxim's SDKs to begin capturing traces from your production agents. The platform supports all major AI frameworks and follows OpenTelemetry conventions for interoperability.

2. Define Quality Metrics: Establish the evaluators that matter for your specific use cases. Start with foundational metrics like response quality and task completion, then add custom evaluators aligned with your business logic.

3. Set Up Monitoring Dashboards: Create views that surface the most critical information for your team. Maxim's custom dashboard capability allows you to slice data across dimensions relevant to your agents.

4. Establish Alert Policies: Configure alerts for quality degradation, cost spikes, latency increases, or other conditions that require immediate attention. Proactive alerting prevents small issues from becoming major incidents.

5. Create Feedback Loops: Use production data to continuously improve your agents through simulation testing, prompt optimization, and model experimentation.
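As a rough illustration of step 4, alert policies can be expressed as data and evaluated against a snapshot of current metrics. The metric names and thresholds below are hypothetical and would need to be tuned to each agent. This is not a Maxim configuration format.

```python
import operator

OPS = {">": operator.gt, "<": operator.lt}

# Hypothetical policies covering quality, latency, and cost.
POLICIES = [
    {"metric": "quality_score", "op": "<", "threshold": 0.8},
    {"metric": "p95_latency_s", "op": ">", "threshold": 5.0},
    {"metric": "hourly_cost_usd", "op": ">", "threshold": 20.0},
]

def triggered_alerts(metrics, policies):
    """Return a message for each policy whose condition holds on the given metrics."""
    alerts = []
    for policy in policies:
        value = metrics.get(policy["metric"])
        if value is None:
            continue  # metric not reported in this snapshot
        if OPS[policy["op"]](value, policy["threshold"]):
            alerts.append(
                f'{policy["metric"]} {policy["op"]} {policy["threshold"]} (observed {value})'
            )
    return alerts

snapshot = {"quality_score": 0.72, "p95_latency_s": 3.1, "hourly_cost_usd": 25.0}
alerts = triggered_alerts(snapshot, POLICIES)
for alert in alerts:
    print(alert)
```

Keeping policies as data rather than code lets non-engineers review and adjust thresholds without touching the evaluation logic.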

Conclusion: Observability as Competitive Advantage

The AI reliability crisis is real, but it's not insurmountable. While 42% of companies abandon the majority of their AI initiatives, those that succeed have one thing in common: they treat observability as a first-class requirement, not an afterthought.

As AI agents move from experimental prototypes to business-critical applications, the ability to understand their behavior, monitor their performance, and systematically evaluate their outputs becomes essential. Organizations that invest in proper observability infrastructure can harness the transformative power of AI agents while avoiding the pitfalls that have plagued early adopters.

Maxim AI provides the comprehensive observability platform teams need to ship reliable AI agents with confidence. From distributed tracing and automated evaluation to production monitoring and cost management, Maxim addresses every aspect of AI quality throughout the development lifecycle.

Ready to transform your AI agents from unpredictable experiments into reliable, production-ready solutions? Schedule a demo to see how Maxim can help your team ship AI agents reliably and up to 5x faster, or sign up today to start monitoring your agents with comprehensive observability.