Observability for AI Agents: LangGraph, OpenAI Agents, and Crew AI

TL;DR:

This post is a guide to observability for AI agents built with LangGraph, OpenAI Agents, and Crew AI. It explains why observability is essential for reliable, scalable agentic systems, compares the architectures and debugging strategies of each framework, and shows how platforms like Maxim AI support tracing, evaluation, and monitoring. Readers will learn practical approaches to agent tracing, session-level and node-level metrics, and building quality pipelines for single-agent and multi-agent workflows.

Introduction

Modern AI agents have evolved from simple demo scripts into production systems that automate customer support, orchestrate research, and drive business operations. As these agents gain complexity and autonomy, rigorous observability becomes essential. For AI agents, observability means teams can monitor, debug, and optimize agent behavior in real-world scenarios, reducing risk and improving reliability.

In this blog, we explore the core principles of observability for agentic workflows, focusing on three leading frameworks: LangGraph, OpenAI Agents, and Crew AI. We will also highlight how Maxim AI provides a unified platform for agent tracing, evaluation, and monitoring, enabling teams to build trustworthy AI systems.

Why Observability Matters for AI Agents

Observability is the backbone of reliable AI systems. It encompasses:

- Distributed tracing: Capturing granular traces of agent decisions, tool usage, and model outputs.

- Session and node-level evaluations: Measuring quality, latency, and correctness at every stage of agent workflows.

- Real-time monitoring and alerts: Detecting performance regressions, hallucinations, and drift before they impact users.

- Human annotation and feedback: Integrating expert reviews for nuanced quality checks.

For agentic frameworks, observability goes beyond logging: it means understanding decision flows, debugging failures, and verifying that agents stay aligned with business and user goals. Platforms like Maxim AI offer tools for agent monitoring, tracing, and quality evaluation.

Framework Overview

Comparison: Observability Across LangGraph, OpenAI Agents, and Crew AI

| Framework | Observability Scope | Native Debugging | Eval Support | Best Fit For |
|---|---|---|---|---|
| LangGraph | Node + Edge + State | Visual graph trace + checkpoints | External via Maxim | Branching workflows, reasoning pipelines |
| OpenAI Agents | Session + Tool Calls | Built-in call history | Add-on via Maxim evals | Fast prototyping, managed runtime |
| Crew AI | Agent + Role + Task | Message + tool span logs | Node-level + session evals | Multi-agent teams with async roles |

LangGraph: Declarative Graph-Based Agent Workflows

LangGraph extends the LangChain ecosystem with a graph-first approach. Agent steps are modeled as nodes in a directed graph, and edges define transitions and branching logic. This architecture offers:

- Explicit state management: Each node receives the current state and returns updates to it. Because the state is serializable, it can be checkpointed and replayed.

- Branching and recovery: Conditional edges can route errors to retry or compensating nodes, allowing granular failure handling.

- Visual traceability: Graph traces make it easy to debug and optimize complex workflows.
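To make the node/edge/state model concrete, here is a framework-agnostic sketch in plain Python. It is not the LangGraph API: `MiniGraph`, `add_node`, `add_edge`, and the checkpoint format are hypothetical names chosen for illustration. Nodes return partial state updates, a router function plays the role of a conditional edge, and the runner snapshots state after every step. Those snapshots are the raw material for checkpoint-based replay and node-level tracing.

```python
from copy import deepcopy
from typing import Callable, Dict, List, Optional

State = Dict[str, object]
Node = Callable[[State], State]            # returns a partial update, not a full state
Router = Callable[[State], Optional[str]]  # name of the next node, or None to stop


class MiniGraph:
    """Hypothetical toy graph runner; not LangGraph's StateGraph."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, router: Router) -> None:
        # A router acts as a conditional edge: it inspects state and picks the next node.
        self.routers[src] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> List[dict]:
        checkpoints: List[dict] = []
        current: Optional[str] = start
        for step in range(max_steps):
            if current is None:
                break
            update = self.nodes[current](state)
            state = {**state, **update}  # merge the update into a new state dict
            # Snapshot after every node so a run can be inspected or resumed from any step.
            checkpoints.append({"step": step, "node": current, "state": deepcopy(state)})
            router = self.routers.get(current)
            current = router(state) if router else None
        return checkpoints


# Usage: a support flow that escalates refund requests.
g = MiniGraph()
g.add_node("classify", lambda s: {"urgent": "refund" in str(s["query"])})
g.add_node("answer", lambda s: {"reply": "Here is our FAQ."})
g.add_node("escalate", lambda s: {"reply": "Routing you to a human agent."})
g.add_edge("classify", lambda s: "escalate" if s["urgent"] else "answer")

trace = g.run("classify", {"query": "I want a refund"})
for cp in trace:
    print(cp["step"], cp["node"], cp["state"].get("reply"))
# prints:
# 0 classify None
# 1 escalate Routing you to a human agent.
```

Returning updates instead of mutating shared state is what makes each checkpoint an independent, inspectable record of the run.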

Observability Best Practices:

- Instrument each node and edge for agent tracing.

- Use session-level metrics to analyze multi-turn flows.

- Integrate Maxim AI’s tracing SDK for token-level and span-level monitoring.
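Session-level and node-level metrics are two roll-ups of the same span data. The sketch below assumes each span is a flat record with `session_id`, `turn`, `node`, and `latency_ms` fields. That schema is hypothetical and is not Maxim's or LangGraph's format. It also assumes nodes within a session run sequentially, so summing latencies approximates end-to-end time.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical span records; field names are assumptions for illustration.
spans = [
    {"session_id": "s1", "turn": 1, "node": "retrieve", "latency_ms": 120},
    {"session_id": "s1", "turn": 1, "node": "generate", "latency_ms": 900},
    {"session_id": "s1", "turn": 2, "node": "retrieve", "latency_ms": 80},
    {"session_id": "s1", "turn": 2, "node": "generate", "latency_ms": 1100},
    {"session_id": "s2", "turn": 1, "node": "retrieve", "latency_ms": 100},
    {"session_id": "s2", "turn": 1, "node": "generate", "latency_ms": 700},
]


def session_metrics(spans):
    """One row per session: number of turns and summed latency (assumes no overlap)."""
    turns, latency = defaultdict(set), defaultdict(int)
    for s in spans:
        turns[s["session_id"]].add(s["turn"])
        latency[s["session_id"]] += s["latency_ms"]
    return {sid: {"turns": len(turns[sid]), "total_latency_ms": latency[sid]} for sid in turns}


def node_metrics(spans):
    """One row per node across all sessions: call count, mean and median latency."""
    by_node = defaultdict(list)
    for s in spans:
        by_node[s["node"]].append(s["latency_ms"])
    return {n: {"calls": len(v), "mean_ms": mean(v), "median_ms": median(v)}
            for n, v in by_node.items()}


print(session_metrics(spans))
print(node_metrics(spans))
```

Session roll-ups answer "how did this conversation go?", while node roll-ups answer "which step is slow across all conversations?".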

Typical Use Cases:

- Customer support agents with escalation paths.

- Research pipelines with branching based on intermediate scores.

- Multi-step reasoning agents combining retrieval-augmented generation (RAG), function calls, and validators.

OpenAI Agents: Managed Runtime for Rapid Prototyping

OpenAI Agents provide a streamlined environment for agent development, emphasizing ease of use and tight integration with OpenAI models. Key features include:

- Tool-centric orchestration: Simple interfaces for tool registration and invocation.

- Built-in conversation history: Automatic threading of user interactions.

- Managed scaling: Cloud-native runtime abstracts scaling and resource management.

Observability Best Practices:

- Capture traces with metadata such as user ID, persona, and scenario.

- Monitor tool call outputs and latency.

- Pair with Maxim AI’s online evaluations to detect drift and regressions.
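One framework-agnostic way to capture tool outputs, latency, and request metadata together is to wrap each tool function. The sketch below is an illustration only and is not the OpenAI Agents SDK's tracing API. `traced_tool`, `trace_metadata`, `tool_events`, and `lookup_order` are hypothetical names. The in-memory list stands in for whatever trace sink you actually use.

```python
import functools
import time
from contextvars import ContextVar

# Per-request metadata (user ID, persona, scenario); field names are illustrative.
trace_metadata = ContextVar("trace_metadata", default={})
tool_events: list = []  # stand-in for a real trace sink


def traced_tool(fn):
    """Hypothetical decorator: record each tool call's args, output or error, and latency."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        event = {"tool": fn.__name__, "args": args, "kwargs": kwargs, **trace_metadata.get()}
        try:
            result = fn(*args, **kwargs)
            event["output"] = result
            return result
        except Exception as exc:
            event["error"] = repr(exc)
            raise
        finally:
            event["latency_ms"] = (time.perf_counter() - start) * 1000
            tool_events.append(event)
    return wrapper


@traced_tool
def lookup_order(order_id: str) -> str:
    return f"Order {order_id}: shipped"


# Attach metadata for the duration of one request, then restore the previous value.
token = trace_metadata.set({"user_id": "u-123", "persona": "frustrated customer",
                            "scenario": "late delivery"})
try:
    lookup_order("A42")
finally:
    trace_metadata.reset(token)

print(tool_events[0]["tool"], tool_events[0]["user_id"], tool_events[0]["output"])
```

Using a `ContextVar` keeps metadata scoped to the current request, which also works when several requests run concurrently under `asyncio`.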

Typical Use Cases:

- Support assistants that integrate RAG and function calls.

- Sales and scheduling agents with organization-specific tools.

- Internal copilots benefiting from managed runtime features.

Crew AI: Role-Based Multi-Agent Collaboration

Crew AI focuses on multi-agent coordination through roles and tasks. Teams model crews of specialized agents that collaborate asynchronously or in rounds. Key features include:

- Role- and task-centric modeling: Mirrors real-world team structures.

- Shared context stores: Supports both private and shared memory for agents.

- Task-level error boundaries: A failure in one task can be isolated and retried without derailing the rest of the crew.
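The role/task model and task-level error boundaries can be sketched in a few lines of plain Python. This is not CrewAI's API (CrewAI has its own `Agent`, `Task`, and `Crew` classes). `RoleAgent`, `CrewTask`, and `run_crew` are hypothetical names, and the retry policy is an assumption. Each task runs inside its own `try` block, so a failure is recorded and contained rather than aborting the crew.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class RoleAgent:
    role: str
    act: Callable[[str, Dict[str, str]], str]  # (task description, shared context) -> output


@dataclass
class CrewTask:
    name: str
    description: str
    agent: RoleAgent
    max_retries: int = 1


def run_crew(tasks: List[CrewTask]) -> Dict[str, dict]:
    shared: Dict[str, str] = {}    # shared context: outputs of completed tasks
    results: Dict[str, dict] = {}  # per-task status, suitable for logging as spans
    for task in tasks:
        for attempt in range(1, task.max_retries + 2):  # first try plus retries
            try:
                shared[task.name] = task.agent.act(task.description, shared)
                results[task.name] = {"role": task.agent.role, "status": "ok", "attempts": attempt}
                break
            except Exception as exc:  # error boundary: the failure stays inside this task
                results[task.name] = {"role": task.agent.role, "status": "failed",
                                      "attempts": attempt, "error": repr(exc)}
    return results


# Usage: a content crew whose fact-checker fails once and whose SEO step always fails.
attempts = {"check": 0}


def flaky_fact_check(description: str, ctx: Dict[str, str]) -> str:
    attempts["check"] += 1
    if attempts["check"] == 1:
        raise TimeoutError("search tool timed out")
    return "claims verified for: " + ctx["draft"]


def broken_seo(description: str, ctx: Dict[str, str]) -> str:
    raise RuntimeError("keyword service unavailable")


results = run_crew([
    CrewTask("draft", "Write a draft", RoleAgent("writer", lambda d, ctx: "agent observability post")),
    CrewTask("check", "Verify claims", RoleAgent("fact-checker", flaky_fact_check)),
    CrewTask("seo", "Optimize keywords", RoleAgent("seo", broken_seo), max_retries=0),
])
for name, outcome in results.items():
    print(name, outcome["status"], outcome["attempts"])
# prints:
# draft ok 1
# check ok 2
# seo failed 1
```

The per-task `results` dictionary doubles as observability data: attempt counts and error strings are exactly what you would attach to each agent's span.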

Observability Best Practices:

- Log each agent’s messages and tool calls as spans.

- Attach evaluator scores to sessions and nodes for trend tracking.

- Use targeted alerts for spike conditions such as excessive tool calls or low faithfulness.
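Targeted alerts reduce to rules evaluated over per-session summaries. The sketch assumes each session has already been summarized into a `tool_calls` count and a `faithfulness` score. The `AlertRule` class, field names, and thresholds are hypothetical. Static thresholds keep the example short; a production setup might compare against a rolling baseline to catch relative spikes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AlertRule:
    name: str
    condition: Callable[[dict], bool]  # returns True when the session should alert
    severity: str = "warning"


# Illustrative thresholds; tune them against your own traffic.
RULES = [
    AlertRule("excessive_tool_calls", lambda s: s["tool_calls"] > 10),
    AlertRule("low_faithfulness", lambda s: s["faithfulness"] < 0.7, severity="critical"),
]


def evaluate_alerts(sessions: List[dict], rules: List[AlertRule]) -> List[Dict[str, str]]:
    """Return one alert per (session, rule) pair whose condition fires."""
    alerts = []
    for session in sessions:
        for rule in rules:
            if rule.condition(session):
                alerts.append({"session_id": session["session_id"],
                               "rule": rule.name, "severity": rule.severity})
    return alerts


sessions = [
    {"session_id": "s1", "tool_calls": 4, "faithfulness": 0.92},
    {"session_id": "s2", "tool_calls": 17, "faithfulness": 0.88},
    {"session_id": "s3", "tool_calls": 6, "faithfulness": 0.55},
]
for alert in evaluate_alerts(sessions, RULES):
    print(alert)
```

Here `s2` trips the tool-call rule and `s3` trips the faithfulness rule; the resulting records are what you would route to a notification channel.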

Typical Use Cases:

- Content generation workflows with editor, fact-checker, and SEO roles.

- Due diligence pipelines with extraction and validation agents.

- Product research agents combining market scanning and competitive analysis.

Technical Deep Dive: Observability Patterns and Metrics

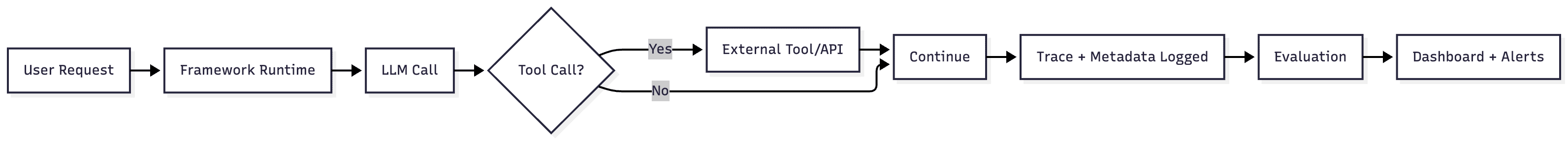

Distributed Tracing

Distributed tracing is essential for debugging and optimizing agent workflows. Maxim AI’s tracing capabilities support:

- Visual trace views: Step through agent interactions and spot issues quickly.

- Token-level tracing: Analyze model outputs at the finest granularity.

- Span-level metrics: Monitor latency, cost, and quality at each agent step.
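The core idea behind distributed tracing is that spans nest: every span knows its parent, so latency and cost can be rolled up per subtree. The toy tracer below illustrates that idea with a stack of open spans. It is a sketch, not Maxim's SDK or OpenTelemetry (which provides the production-grade version of this pattern). `Tracer`, `subtree_cost`, the `cost_usd` attribute, and the model name are hypothetical.

```python
import time
import uuid
from contextlib import contextmanager


class Tracer:
    """Toy tracer: open spans sit on a stack, so each new span records its parent."""

    def __init__(self):
        self.spans = []
        self._stack = []
        self.trace_id = uuid.uuid4().hex

    @contextmanager
    def span(self, name, **attrs):
        record = {"span_id": uuid.uuid4().hex[:16], "trace_id": self.trace_id,
                  "parent_id": self._stack[-1]["span_id"] if self._stack else None,
                  "name": name, "attrs": dict(attrs)}
        self._stack.append(record)
        start = time.perf_counter()
        try:
            yield record
        finally:
            record["latency_ms"] = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(record)  # spans are stored in completion order


def subtree_cost(spans, span_id):
    """Sum the 'cost_usd' attribute of a span and all of its descendants."""
    own = next(s for s in spans if s["span_id"] == span_id)
    children = [s for s in spans if s["parent_id"] == span_id]
    return own["attrs"].get("cost_usd", 0.0) + sum(subtree_cost(spans, c["span_id"]) for c in children)


tracer = Tracer()
with tracer.span("agent_run") as root:
    with tracer.span("llm_call", model="example-model", cost_usd=0.002):
        pass
    with tracer.span("tool_call", tool="search"):
        with tracer.span("llm_call", model="example-model", cost_usd=0.001):
            pass

print(len(tracer.spans), round(subtree_cost(tracer.spans, root["span_id"]), 6))
```

Because every span carries `trace_id` and `parent_id`, the same records can be rendered as a visual tree or aggregated into span-level latency and cost metrics.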

Online Evaluations

Continuous quality monitoring is achieved through online evaluations:

- Automated metrics: Faithfulness, toxicity, helpfulness, and custom criteria.

- Flexible sampling: Choose which logs to evaluate using metadata filters and a sampling rate.

- Real-time alerts: Configure alerts for key metrics and route notifications to Slack or PagerDuty.
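Metadata filtering plus rate-based sampling can be sketched as two steps: filter on exact metadata matches, then sample deterministically by hashing the log ID. Hash-based sampling is one common technique, not necessarily what Maxim uses. `in_sample`, `select_for_eval`, and the log shape are hypothetical. Hashing means the same log always gets the same decision, which keeps evaluation runs reproducible.

```python
import hashlib


def in_sample(log_id: str, rate: float) -> bool:
    """Deterministic sampling: map the ID to [0, 1) and keep it if below the rate."""
    digest = hashlib.sha256(log_id.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < rate


def select_for_eval(logs, filters: dict, rate: float):
    """Keep logs whose metadata matches every filter, then sample at the given rate."""
    matching = [log for log in logs
                if all(log["metadata"].get(k) == v for k, v in filters.items())]
    return [log for log in matching if in_sample(log["id"], rate)]


logs = [{"id": f"log-{i}", "metadata": {"env": "prod" if i % 2 == 0 else "staging"}}
        for i in range(1000)]
picked = select_for_eval(logs, {"env": "prod"}, rate=0.1)
print(len(picked))
```

With 500 matching production logs and a 10% rate, the printed count will be close to, but not exactly, 50.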

Human Annotation

Human-in-the-loop evaluation enables nuanced quality checks:

- Multi-dimensional reviews: Fact-checking, bias detection, and domain-specific assessments.

- Automated queues: Trigger human reviews based on low scores or negative user feedback.
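An automated review queue is essentially a routing rule: send a log to humans when any evaluator score falls below a threshold or the user left negative feedback. The sketch assumes logs carry a `scores` dictionary and an optional `user_feedback` field. Those field names, `maybe_enqueue`, and the 0.6 threshold are hypothetical.

```python
from collections import deque

SCORE_THRESHOLD = 0.6  # assumed cut-off; tune per metric in practice

review_queue = deque()


def maybe_enqueue(log: dict) -> bool:
    """Queue a log for human review if any score is low or feedback is negative."""
    low_scores = {m: v for m, v in log["scores"].items() if v < SCORE_THRESHOLD}
    negative = log.get("user_feedback") == "negative"
    if low_scores or negative:
        reasons = sorted(low_scores) + (["negative_feedback"] if negative else [])
        review_queue.append({"log_id": log["id"], "reasons": reasons})
        return True
    return False


logs = [
    {"id": "a", "scores": {"faithfulness": 0.9, "helpfulness": 0.8}},
    {"id": "b", "scores": {"faithfulness": 0.4, "helpfulness": 0.7}},
    {"id": "c", "scores": {"faithfulness": 0.85, "helpfulness": 0.9}, "user_feedback": "negative"},
]
for log in logs:
    maybe_enqueue(log)
print(list(review_queue))
```

Recording the reasons alongside each queued item tells reviewers which dimension to check first.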

Data Export and Integration

- CSV and API exports: Export trace and evaluation data for audits and external analysis.

- OpenTelemetry (OTel) compatibility: Forward logs to observability platforms such as New Relic, Grafana, or Datadog.
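For the CSV path, flattening trace and evaluation records needs only the standard library. The field names below are assumptions for illustration, not Maxim's export schema. Forwarding to OTel-compatible backends would normally go through OpenTelemetry SDK exporters instead, which this sketch does not cover.

```python
import csv
import io

# Hypothetical flattened span records with an optional evaluator score.
spans = [
    {"trace_id": "t1", "span": "retrieve", "latency_ms": 120, "faithfulness": None},
    {"trace_id": "t1", "span": "generate", "latency_ms": 900, "faithfulness": 0.91},
]


def to_csv(rows, fieldnames):
    """Serialize rows to CSV; missing keys become empty cells and extra keys are dropped."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, restval="", extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)  # the csv module writes None as an empty string
    return buf.getvalue()


print(to_csv(spans, ["trace_id", "span", "latency_ms", "faithfulness"]))
```

Fixing the column list up front keeps exports stable for auditors even as new attributes are added to spans.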

Integrating Observability with Maxim AI

Maxim AI offers a unified platform for agent observability, simulation, and evaluation. Key integration features include:

- SDK support: Robust, stateless SDKs for Python, TypeScript, Java, and Go.

- Framework compatibility: Native integrations with LangGraph, OpenAI Agents, Crew AI, and more.

- Enterprise security: In-VPC deployment, SOC 2 Type 2 compliance, and role-based access controls.

For technical walkthroughs, see the Maxim documentation and API reference.

Best Practices for Agent Observability

- Instrument every agent step: Capture detailed traces for model calls, tool invocations, and decision transitions.

- Monitor both session and node-level metrics: Analyze quality, latency, and correctness at each stage.

- Set up real-time alerts and evaluations: Detect regressions and performance issues before they impact users.

- Integrate human annotation workflows: Validate nuanced criteria and edge cases.

- Export and analyze data regularly: Use dashboards and reports to track trends and share insights with stakeholders.

For more on evaluation workflows, see Evaluation Workflows for AI Agents and AI Agent Evaluation Metrics.

Case Study: Maxim AI in Production

Organizations like Clinc and Thoughtful have leveraged Maxim AI’s observability suite to scale multi-agent systems with confidence. By integrating distributed tracing, online evaluations, and human feedback, these teams have reduced debugging cycles, improved agent reliability, and shipped production-grade AI faster.

Conclusion

Observability is the foundation of trustworthy, reliable AI agent systems. Whether you are building with LangGraph, OpenAI Agents, or Crew AI, integrating robust tracing, evaluation, and monitoring workflows is essential for success. Platforms like Maxim AI provide the tools and infrastructure to instrument, monitor, and optimize agentic workflows at scale.

To get started, explore Maxim’s demo, review the platform overview, and dive into technical guides on agent tracing and LLM observability.

Further Reading

- AI Agent Quality Evaluation

- Agent Evaluation vs Model Evaluation

- Prompt Management in 2025

- AI Reliability: How To Build Trustworthy AI Systems

- Agent Tracing for Debugging Multi-Agent AI Systems

- LLM Observability: How to Monitor Large Language Models in Production

- Evaluation Workflows for AI Agents

- Best AI Agent Frameworks 2025: LangGraph, CrewAI, OpenAI, LlamaIndex, AutoGen