How to Evaluate AI Agents Before Production: A Practical, End-to-End Framework

Pre-production evaluation is the difference between shipping a reliable AI agent and deploying a brittle system that fails under real-world scenarios. Teams that invest in rigorous agent evaluation reduce incident rates, control costs, and accelerate iteration cycles. This guide provides a structured framework (grounded in practical examples and linked to actionable resources) to evaluate your AI agents before they go live.

Why Pre-Production Evaluation Matters

Agentic applications introduce emergent complexity: multi-step reasoning, dynamic tool use, retrieval of external knowledge, and long-running conversations. Without a robust evaluation and observability strategy, you risk:

- Latent failure modes that only appear under certain personas or tasks.

- Poor retrieval behavior in RAG pipelines, leading to hallucination or irrelevant outputs.

- Inconsistent prompt behavior across versions and environments.

- Uncontrolled token usage and latency under realistic production loads.

A disciplined evaluation approach must combine simulation, llm evaluation (LLM-as-a-judge and statistical metrics), deterministic checks, and real-world observability. Maxim AI’s full-stack platform is designed to support this lifecycle (from experimentation to simulation, evaluation, and observability) with deep support for datasets, evaluators, tracing, and prompt management.

- Explore offline evaluation concepts and core primitives: Offline Evaluation Concepts.

- See how evaluations fit across pre-release and production: Offline Evaluation Overview.

- Learn how to test prompts versioned on the platform: Maxim Prompt Testing.

- Retrieve tested prompts safely in production: Prompt Management.

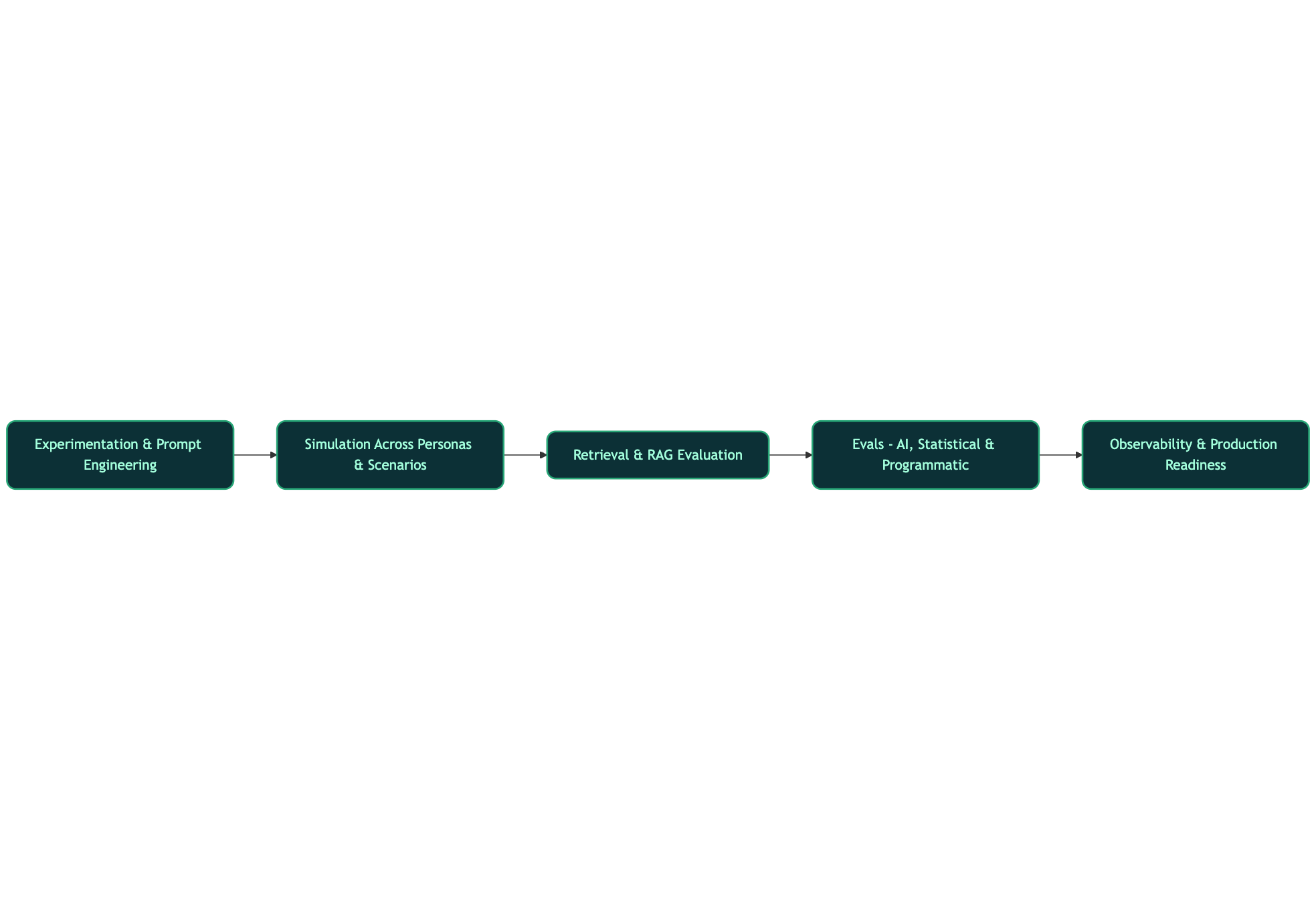

A Structured Framework for Agent Evaluation

A comprehensive approach includes five layers. Apply them sequentially and then iterate as you discover gaps.

1) Experimentation and Prompt Engineering

Start by building high-quality prompts and configurations in a controlled environment. Version prompts, test variables, and compare models, temperature, and max tokens.

- Build and iterate in a high-velocity environment: Experimentation (Playground++).

- Compare prompts systematically and establish baselines: Offline Evaluation Concepts.

- Manage and retrieve prompts with deployment rules and tags: Prompt Management.

Core practices:

- Use descriptive prompt version names and maintain strict version control.

- Record model configuration (model, temperature, system message, variables) as part of the prompt version.

- Establish a golden dataset with expected outputs for regression testing.

2) Simulation Across Personas and Scenarios

Agents are multi-step systems; single-turn tests are insufficient. Simulate real conversations and task trajectories across persona diversity and scenario complexity. This detects path-dependent failures (e.g., an agent picks an inefficient tool chain or fails midway through a task).

- Simulate and evaluate multi-step agent behavior: Agent Simulation & Evaluation.

- Run guided, repeatable tests with no-code agents and SDKs: SDK No-Code Agent Quickstart and Agent on Maxim.

- Evaluate custom agents implemented locally with orchestration libraries: Local Agent Testing.

What to measure:

- Task success rate across steps.

- Trajectory quality: tool choice appropriateness, recovery from errors.

- Latency and cost per step; end-to-end completion time.

- Session-level consistency for long conversations.

3) Retrieval and RAG Evaluation

For RAG systems, poor retrieval quality is the most common failure mode. Evaluate the retriever, the context selection, and the output faithfulness.

- Design evaluators for faithfulness, relevance, and precision: Evaluators.

- Manage context sources and test retrieval pipelines: Context Sources.

- Curate high-quality datasets and input-output mappings: Datasets.

Key metrics:

- Context Relevance (are the retrieved chunks relevant to the query?).

- Faithfulness (does the output align with retrieved content?).

- Context Precision (node-level relevance in the retrieved context).

- Semantic Similarity to expected outputs (statistical alignment).

A practical case in HR assistants demonstrates how these evaluators surface failure patterns and guide improvements: Evaluating the Quality of AI HR Assistants.

4) Evals: AI, Statistical, and Programmatic

Use layered evaluators to measure quality quantitatively and qualitatively:

- LLM-as-a-judge evaluators for tone, clarity, faithfulness, and bias: Evaluator Store.

- Statistical evaluators for semantic similarity and lexical overlap.

- Programmatic evaluators for schema validation, deterministic rules, and domain-specific constraints.

- Human-in-the-loop evaluations for nuanced judgment and alignment to product guidelines.

Run test suites across versions and datasets to quantify regressions and improvements. Visualize runs and compare across configurations to create a trustworthy release process: Prompt Comparisons (concepts + structure) and test run dashboards: Test Runs Comparison Dashboard.

5) Observability and Production Readiness

Even the best pre-release evals cannot foresee all production variation. Ensure you have tracing, logging, and in-production quality checks with real-time alerts.

- Monitor live systems: Agent Observability.

- Understand tracing concepts and span-level detail: Tracing Overview.

- Set up online evaluations for continuous quality checks: Online Evaluation Overview.

Production KPIs:

- Real-time latency and token budgets by endpoint and agent span.

- Error distribution and retriever failure rates.

- Hallucination detection and faithfulness drift under live traffic.

- Cost tracking by team, application, and customer.

Step-by-Step: Running Your First Pre-Production Eval

Follow this workflow to operationalize pre-release quality checks.

Step 1: Prototype and Version Prompts

Use the Playground to configure models and variables, then create prompt versions. Map your dataset columns to prompt variables to ensure consistent evaluation.

- Practical guide to testing prompt versions: Maxim Prompt Testing.

- Retrieval configuration and variable mapping: Context Sources.

- Dataset setup and expected outputs: Datasets.

Step 2: Build Reference Datasets

Create sets of realistic inputs and expected outputs that mirror production traffic. Add adversarial and edge cases (long inputs, ambiguous queries, tool errors).

- How datasets integrate into eval workflows: Offline Evaluation Concepts.

Step 3: Select Evaluators and Run

Combine AI, statistical, and programmatic evaluators based on the agent’s purpose, then run a test suite.

- Configure evaluators and custom judges: Evaluators.

Step 4: Analyze Reports and Iterate

Inspect evaluator scores, model metrics (latency, cost), and span-level behavior. Use the insights to refine prompting, chunking, and tool orchestration.

- Understand evaluation reports and drill into failure modes: Evaluating the Quality of AI HR Assistants.

Step 5: Promote to Staging and Set Observability

Once the agent meets thresholds, move to staging with controlled traffic and alerts. Validate the agent with online evals and tracing before production.

- Continuous monitoring and alerting: Online Evaluation Overview and Tracing Overview.

Practical Patterns: No-Code and SDK-Based Agent Testing

Maxim supports both no-code and local agent testing patterns:

- No-code agents: configure multi-step workflows and run evaluation suites directly: SDK No-Code Agent Quickstart and Agent on Maxim.

- Local custom agents: integrate CrewAI/LangChain orchestration, and use

yields_outputto report outputs, costs, and token usage: Local Agent Testing.

This dual approach ensures product and engineering teams can collaborate without blocking on code changes while still supporting sophisticated logic where necessary.

Prompt Management, Versioning, and Safe Rollouts

Reliability depends on how you deploy prompts. Use deployment variables (Environment, TenantId) and tag-based filtering for fine-grained control. Configure fallbacks and exact matching rules to avoid unintended prompt selection in production.

- Query and filter prompts with deployment variables, folders, and tags: Prompt Management.

Best practices:

- Environment Separation: strict dev/staging/production isolation.

- Graceful Degradation: specify fallback prompts for critical flows.

- Exact Matching: enforce constraints per variable when required.

- Caching: leverage SDK caching for performance.

Evaluating RAG: Example from HR Assistants

An HR assistant powered by RAG must cite relevant policy content, remain unbiased, and use friendly tone. In Maxim, you can attach policy documents as context sources, run dataset-driven tests, and evaluate with bias, faithfulness, and context relevance.

- Full walkthrough of building and evaluating an HR assistant: Evaluating the Quality of AI HR Assistants.

Key insights:

- If context relevance scores are low, refine chunking or add document re-ranking stages.

- Faithfulness checks catch hallucinations where answers deviate from provided policies.

- Tone evaluators confirm politeness and clarity for employee-facing interactions.

Observability and Governance with Bifrost (LLM Gateway)

As you move to production, standardize access to multiple providers and enforce governance with Bifrost, Maxim’s high-performance AI gateway. Bifrost offers automatic fallbacks, semantic caching, usage governance, observability, and vault-backed key management, critical for resilient, cost-effective operations.

- Unified interface and multi-provider support: Unified Interface and Provider Configuration.

- Automatic fallbacks and load balancing: Fallbacks.

- Semantic caching for latency and cost reduction: Semantic Caching.

- Observability and vault support: Observability and Vault Support.

This combination, robust pre-release evals plus production-grade gateway governance, creates a strong reliability foundation.

Common Pitfalls and How to Avoid Them

- Insufficient Persona Coverage: simulate across diverse user behaviors and intents, not just happy paths. Use Agent Simulation & Evaluation.

- Unmapped Variables: ensure dataset columns match prompt variables to avoid silent failures: Maxim Prompt Testing.

- Weak RAG Retrieval: monitor context relevance and precision; refine chunking or add re-ranking: see RAG evaluators in Evaluators and context configuration in Context Sources.

- No Staging Observability: add tracing and online evals before full rollout: Online Evaluation Overview and Tracing Overview.

- Missing Fallbacks: configure fallback prompts and exact matching rules: Prompt Management.

Conclusion

Evaluating AI agents before production requires a disciplined, end-to-end approach, prompt experimentation, simulation across scenarios, layered evaluators, and production observability. By adopting this framework and leveraging Maxim’s platform capabilities, llm evaluation, rag evaluation, agent observability, prompt management, and tracing, teams can ship trustworthy ai systems faster and with greater confidence.

Ready to put this into practice? Schedule a session: Book a Maxim Demo or start immediately: Sign up for Maxim.