Best AI Evaluation Tools in 2026: Top 5 picks

TL;DR

AI evaluation has evolved from a nice-to-have into mission-critical infrastructure as LLM applications move to production. This guide examines five leading evaluation platforms. Maxim AI delivers end-to-end lifecycle management with agent simulation, comprehensive evaluation frameworks, and production observability designed for cross-functional teams. DeepEval provides a code-first, pytest-style framework for running LLM evaluations in CI/CD pipelines. LangSmith offers LangChain-native evaluation with deep tracing and annotation queues for teams invested in the LangChain ecosystem. Arize Phoenix brings open-source observability built on OpenTelemetry with strong community support. Confident AI, the company behind DeepEval, pairs the library's research-backed metrics with a cloud platform for rapid dataset iteration.

Introduction

The gap between experimental AI prototypes and production-ready applications comes down to one critical capability: systematic evaluation. As organizations deploy LLM-powered systems at scale, the consequences of inadequate testing compound quickly. CNET faced major reputational damage after publishing AI-generated articles riddled with errors. Apple suspended its AI news summary feature in January 2025 after it generated misleading headlines. Air Canada was held legally liable after its chatbot provided false refund information, a ruling that signals organizations cannot disclaim responsibility for automated system outputs.

These high-profile failures illustrate why robust AI evaluation infrastructure matters. Unlike traditional software, where the same input produces the same output, AI agents operate probabilistically: the same prompt can generate different responses, small changes to a prompt or model can cascade into major regressions, and what works perfectly in testing can fail spectacularly with real users. Without systematic evaluation, teams are effectively flying blind, shipping changes without knowing whether they have improved accuracy or introduced new failure modes.

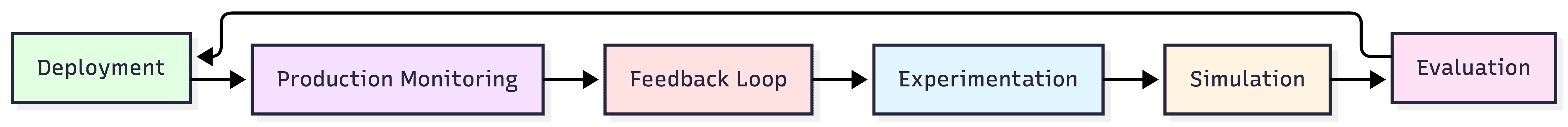

The AI evaluation landscape has matured significantly in 2026. Early tools focused narrowly on model-level metrics, treating evaluation as a feature bolted onto observability platforms. Modern evaluation platforms recognize that production AI requires comprehensive lifecycle management: pre-deployment testing through simulation and experimentation, systematic quality measurement using automated and human evaluators, continuous production monitoring to detect drift and regressions, and feedback loops that turn real-world failures into improved test coverage.

This guide examines five platforms leading the AI evaluation space, each optimized for different team structures and development workflows. Whether you're building multi-agent systems requiring extensive simulation, implementing evaluation in CI/CD pipelines, or seeking lightweight frameworks for rapid iteration, understanding the strengths and trade-offs helps you select the right foundation for shipping reliable AI applications.

Figure: The AI Evaluation Lifecycle

Table of Contents

- Quick Comparison

- Maxim AI: End-to-End AI Quality Platform

- DeepEval

- LangSmith: LangChain-Native Evaluation

- Arize Phoenix: Open-Source Observability

- Confident AI: Research-Backed Metrics

- How to Choose the Right Platform

- Further Reading

- Conclusion

Quick Comparison

| Platform | Best For | Key Strength | Evaluation Approach | Deployment |
|---|---|---|---|---|
| Maxim AI | Production AI agents requiring simulation, evaluation, and observability | End-to-end lifecycle coverage with cross-functional collaboration | Pre-deployment simulation + automated/human evals + production monitoring | Cloud or self-hosted |
| DeepEval | Python teams prioritizing test-centric LLM evaluation | Lightweight, code-first evaluation framework with reusable tests | Dataset-based and pytest-style evaluation with pre-built and custom metrics | Python library + cloud platform |
| LangSmith | LangChain/LangGraph applications | Deep LangChain integration with tracing and annotation queues | Offline datasets + online production evals with human review | Cloud or self-hosted |
| Arize Phoenix | Teams valuing open-source and OpenTelemetry standards | Vendor-agnostic observability built on open standards | Trace-based debugging with LLM-as-judge evaluators | Open-source, self-hosted or cloud |
| Confident AI | Python developers seeking lightweight evaluation frameworks | Research-backed metrics through DeepEval library | Pytest-style unit testing with pre-built and custom metrics | Python library + cloud platform |

Maxim AI: End-to-End AI Quality Platform

Platform Overview

Maxim AI provides comprehensive infrastructure for building, testing, and deploying production-grade AI agents. Unlike platforms that focus on a single stage of development, Maxim integrates simulation, evaluation, and observability into a unified workflow designed specifically for teams shipping complex AI applications that require systematic quality assurance.

The platform addresses a fundamental challenge facing AI engineering teams: while prompts and agent behaviors are critical to application success, most organizations lack systematic processes for testing changes before deployment. Maxim treats AI quality with the same rigor as traditional software testing, providing tools for comprehensive pre-deployment simulation, multi-dimensional evaluation frameworks, gradual rollout strategies, and continuous production monitoring.

What distinguishes Maxim is its cross-functional design. Many evaluation tools cater exclusively to engineering teams, creating bottlenecks when product managers or domain experts need to validate agent behaviors. Maxim enables non-technical stakeholders to participate directly in quality assurance through intuitive interfaces while maintaining the technical depth engineers require for systematic testing and debugging.

Key Features

Comprehensive Agent Simulation

Maxim's simulation suite enables teams to validate AI agents across hundreds of realistic scenarios before production:

- Multi-Turn Conversation Simulation: Test agents across diverse user personas, edge cases, and complex interaction patterns to understand behavior in realistic scenarios

- Trajectory Analysis: Evaluate complete agent decision paths rather than individual responses, understanding how agents reason through multi-step tasks

- Reproducible Testing: Re-run simulations from any step to isolate issues, validate fixes, and ensure consistent behavior across iterations

- Scenario Coverage: Build comprehensive test suites covering expected behaviors, boundary conditions, and adversarial inputs
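A minimal, tool-agnostic sketch of persona-driven, multi-turn simulation follows. It is not Maxim's SDK: the Persona and Trajectory classes, the scripted simulated user, the toy refund_agent, and the goal_reached check are hypothetical stand-ins; in practice both the simulated user and the agent would typically be LLM-driven.

```python
from dataclasses import dataclass, field
from typing import Callable

Turn = tuple[str, str]  # (user message, agent reply)

@dataclass
class Persona:
    """Hypothetical simulated user: a label plus scripted messages."""
    name: str
    messages: list[str]

@dataclass
class Trajectory:
    """Every turn of one simulated session, kept for trajectory-level checks."""
    persona: str
    turns: list[Turn] = field(default_factory=list)

def refund_agent(history: list[Turn], user_msg: str) -> str:
    """Toy agent under test (hypothetical); a real one would call an LLM."""
    text = user_msg.lower()
    if "order" in text and any(ch.isdigit() for ch in text):
        return "Refund initiated for your order."
    if "refund" in text:
        return "Sure, what is your order number?"
    return "Could you tell me more about your issue?"

def simulate(persona: Persona, agent: Callable[[list[Turn], str], str]) -> Trajectory:
    """Play the persona's messages against the agent, one turn at a time."""
    traj = Trajectory(persona=persona.name)
    for user_msg in persona.messages:
        reply = agent(traj.turns, user_msg)
        traj.turns.append((user_msg, reply))
    return traj

def goal_reached(traj: Trajectory) -> bool:
    """Session-level check: did the agent initiate a refund at any turn?"""
    return any("Refund initiated" in reply for _, reply in traj.turns)

personas = [
    Persona("direct", ["I want a refund", "Order 1234"]),
    Persona("vague", ["Something is wrong", "It just doesn't work"]),
]
results = {p.name: goal_reached(simulate(p, refund_agent)) for p in personas}
print(results)  # {'direct': True, 'vague': False}
```

Keeping the full trajectory, rather than only the final reply, is what makes session-level checks and replaying a failing conversation from a given turn possible.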

Flexible Evaluation Framework

The evaluation system combines automated metrics with human judgment for comprehensive quality measurement:

- Evaluator Store: Access pre-built evaluators for common metrics (accuracy, relevance, hallucination detection, safety) or create custom evaluators tailored to specific application needs

- Multi-Granularity Evaluation: Run evaluations at different levels (individual responses, conversation turns, full sessions) depending on what you're optimizing

- Human-in-the-Loop Workflows: Define structured human evaluation processes for nuanced quality assessment and ground truth collection

- Comparative Analysis: Visualize evaluation results across prompt versions, models, or configurations to identify improvements and catch regressions
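To illustrate multi-granularity scoring, the sketch below rolls turn-level scores up into a session-level score and compares two prompt versions session by session. The scores are illustrative inputs rather than real results, and the 0.5 turn floor plus the session_score and compare_versions helpers are assumptions, not a Maxim API.

```python
from statistics import mean

# Illustrative per-turn quality scores (0-1) per session and prompt version.
# These are made-up inputs for the sketch, not real evaluation results.
runs = {
    "prompt_v1": {"s1": [0.9, 0.8, 0.85], "s2": [0.7, 0.75]},
    "prompt_v2": {"s1": [0.95, 0.9, 0.9], "s2": [0.8, 0.3]},
}
TURN_FLOOR = 0.5  # assumption: one turn below this fails the whole session

def session_score(turn_scores: list[float]) -> float:
    """Roll turn-level scores up into a single session-level score."""
    if min(turn_scores) < TURN_FLOOR:
        return 0.0  # a single badly handled turn can derail a conversation
    return mean(turn_scores)

def compare_versions(base: str, candidate: str) -> dict[str, float]:
    """Per-session score change from base to candidate; negative means regression."""
    return {
        sid: round(session_score(runs[candidate][sid]) - session_score(runs[base][sid]), 3)
        for sid in runs[base]
    }

deltas = compare_versions("prompt_v1", "prompt_v2")
regressions = [sid for sid, d in deltas.items() if d < 0]
print(deltas, regressions)
```

Here prompt_v2 improves session s1 but fails s2 outright because of one low-scoring turn, so the session-level view pinpoints exactly which conversation regressed.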

Advanced Experimentation Platform

The Playground++ transforms prompt development from trial-and-error into systematic experimentation:

- Versioned Prompt Management: Organize and version prompts directly from the UI with clear iteration history and easy rollbacks

- Multi-Model Comparison: Test prompts across LLM providers (OpenAI, Anthropic, Google, AWS Bedrock) side-by-side to evaluate quality, cost, and latency trade-offs

- Deployment Strategies: Deploy prompts with A/B tests, canary releases, or gradual rollouts without requiring code changes

- RAG Integration: Connect seamlessly with databases, retrieval pipelines, and external tools to test prompts with real context
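Gradual rollouts and canary releases are commonly implemented by hashing a stable identifier into a bucket, so each user consistently sees the same prompt version while only a fixed fraction sees the candidate. A minimal sketch of that general technique, not Maxim's implementation; the salt string and function names are hypothetical.

```python
import hashlib

def bucket(user_id: str, salt: str = "prompt-v2-canary") -> float:
    """Deterministically map a user ID to a number in [0, 1)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    # 48 bits keep the division exact, so the result is always strictly below 1.0.
    return int.from_bytes(digest[:6], "big") / 2**48

def choose_prompt(user_id: str, canary_fraction: float) -> str:
    """Route a stable fraction of users to the candidate prompt version."""
    return "candidate" if bucket(user_id) < canary_fraction else "stable"

users = [f"user-{i}" for i in range(10_000)]
share = sum(choose_prompt(u, 0.05) == "candidate" for u in users) / len(users)
print(f"canary share: {share:.3f}")  # roughly 0.05; exact value depends on the hashes
```

Salting the hash with a rollout-specific string keeps separate experiments from always selecting the same users, and raising canary_fraction only adds users to the candidate group without reshuffling existing ones.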

Production Observability

The observability platform provides real-time insights into AI application performance:

- Distributed Tracing: Track requests through complex multi-agent systems, understanding how prompts, retrievals, and tool calls interact

- Quality Monitoring: Run automated evaluations on production traffic to detect quality degradations before they impact users

- Custom Dashboards: Create tailored views surfacing insights specific to your application's critical dimensions

- Alert Configuration: Set up alerts on quality metrics, latency thresholds, or cost anomalies for rapid incident response
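Quality alerts are often computed over a rolling window so that a single noisy evaluator score does not trigger an incident. A minimal sketch, assuming online evaluation scores in [0, 1] arrive one at a time; the QualityMonitor class, window size, and threshold are hypothetical choices, not Maxim's alerting API.

```python
from collections import deque

class QualityMonitor:
    """Hypothetical rolling-window alert on online evaluation scores."""

    def __init__(self, window: int = 50, threshold: float = 0.8, min_samples: int = 20):
        self.scores = deque(maxlen=window)  # keeps only the most recent scores
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, score: float) -> bool:
        """Add one evaluator score; return True if the alert should fire."""
        self.scores.append(score)
        if len(self.scores) < self.min_samples:
            return False  # not enough data to judge yet
        return sum(self.scores) / len(self.scores) < self.threshold

monitor = QualityMonitor(window=10, threshold=0.8, min_samples=5)
stream = [0.9] * 10 + [0.5] * 5  # quality degrades partway through the stream
alerts = [i for i, s in enumerate(stream) if monitor.record(s)]
print(alerts)  # [12, 13, 14]
```

The alert fires on the third degraded score rather than the first, which is the trade-off a rolling window makes between noise tolerance and detection speed.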

Data Engine for Continuous Improvement

Maxim's data management capabilities support ongoing AI application refinement:

- Dataset Curation: Import, organize, and version multi-modal datasets (text, images, structured data) for evaluation and fine-tuning

- Production Data Enrichment: Continuously evolve datasets using production logs, evaluation results, and human feedback

- Data Labeling Integration: Leverage in-house teams or Maxim-managed services for high-quality ground truth data creation

- Targeted Evaluation: Create data splits optimized for specific testing scenarios or model comparisons
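The production-to-dataset loop can be sketched as: flag low-scoring or negatively rated interactions, deduplicate them, and queue them as new test cases that still need a human-written expected answer. The log schema, the 0.7 cutoff, and the helper names below are hypothetical; this is not Maxim's data engine API.

```python
import hashlib

# Hypothetical production log records with an online-eval score and user feedback.
logs = [
    {"input": "Cancel my plan", "output": "Done.", "score": 0.95, "thumbs_down": False},
    {"input": "Refund for order 12?", "output": "I can't help.", "score": 0.40, "thumbs_down": True},
    {"input": "refund for  order 12?", "output": "No.", "score": 0.35, "thumbs_down": False},
    {"input": "Change my address", "output": "Updated.", "score": 0.60, "thumbs_down": True},
]

def needs_review(rec: dict, min_score: float = 0.7) -> bool:
    """Flag low-scoring or negatively rated interactions (cutoff is an assumption)."""
    return rec["score"] < min_score or rec["thumbs_down"]

def fingerprint(text: str) -> str:
    """Normalize case and whitespace, then hash, so near-identical inputs deduplicate."""
    return hashlib.sha1(" ".join(text.lower().split()).encode()).hexdigest()

def curate(records: list[dict], existing: set[str]) -> list[dict]:
    """Turn flagged production records into new, deduplicated test cases."""
    new_cases = []
    for rec in records:
        key = fingerprint(rec["input"])
        if needs_review(rec) and key not in existing:
            existing.add(key)
            # The bad output is kept for context; the expected answer
            # still has to come from a human labeler.
            new_cases.append({"input": rec["input"], "observed": rec["output"], "expected": None})
    return new_cases

dataset_keys: set[str] = set()
cases = curate(logs, dataset_keys)
print([c["input"] for c in cases])  # ['Refund for order 12?', 'Change my address']
```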

Best For

Maxim AI excels for organizations building production AI agents requiring systematic quality assurance across the full development lifecycle. The platform particularly suits teams when:

- Building Complex Multi-Agent Systems: Applications involve multiple AI agents with sophisticated interactions requiring comprehensive evaluation workflows

- Cross-Functional Collaboration: Product managers and domain experts need to drive improvements without engineering bottlenecks

- Enterprise Reliability Requirements: Organizations need robust AI reliability guarantees with systematic testing, monitoring, and quality gates

- Rapid Iteration with Stability: Teams want to experiment quickly while maintaining production stability through gradual rollouts and automated quality checks

- Comprehensive Lifecycle Management: Unified tooling across experimentation, pre-deployment testing, and production monitoring provides better ROI than stitching together multiple point solutions

Companies like Clinc, Thoughtful, and Comm100 rely on Maxim to maintain quality and ship AI agents faster. Teams consistently report improved cross-functional velocity, reduced time from idea to production, and higher confidence in deployed changes.

Request a demo to see how Maxim accelerates AI development for your team.

DeepEval

Platform Overview

DeepEval is an open-source, Python-first LLM evaluation framework, similar to pytest but specialized for testing LLM outputs. It provides comprehensive RAG evaluation metrics alongside tools for unit testing, CI/CD integration, and component-level debugging.

Key Features

- Comprehensive RAG Metrics: Includes answer relevancy, faithfulness, contextual precision, contextual recall, and contextual relevancy. Each metric outputs a score between 0 and 1 and passes or fails against a configurable threshold.

- Component-Level Evaluation: Use the @observe decorator to trace and evaluate individual RAG components (retriever, reranker, generator) separately. This enables precise debugging when specific pipeline stages underperform.

- CI/CD Integration: Built for testing workflows. Run evaluations automatically on pull requests, track performance across commits, and prevent quality regressions before deployment.

- G-Eval Custom Metrics: Define custom evaluation criteria in natural language. G-Eval prompts an LLM judge to derive evaluation steps from your criteria and score outputs against them, an approach its original paper found to correlate well with human judgments.

- Confident AI Platform: Automatic integration with Confident AI for web-based result visualization, experiment tracking, and team collaboration.
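In DeepEval itself, this pattern is written with LLMTestCase objects, metric classes such as AnswerRelevancyMetric (each taking a threshold), and assert_test inside an ordinary pytest test function; those metrics call an LLM judge. The stdlib sketch below only mirrors that shape so it runs offline: EvalCase, KeywordRelevancy, and assert_case are hypothetical names, and word overlap is a crude stand-in for real relevancy scoring.

```python
from dataclasses import dataclass

@dataclass
class EvalCase:
    """Hypothetical stand-in for an LLM test case."""
    input: str
    actual_output: str

class KeywordRelevancy:
    """Toy metric: fraction of input words echoed in the output, in [0, 1].
    Real relevancy metrics use an LLM judge; word overlap is only a placeholder."""

    def __init__(self, threshold: float = 0.5):
        self.threshold = threshold
        self.score = 0.0

    def measure(self, case: EvalCase) -> float:
        query = set(case.input.lower().split())
        answer = set(case.actual_output.lower().split())
        self.score = len(query & answer) / len(query) if query else 0.0
        return self.score

    def is_successful(self) -> bool:
        return self.score >= self.threshold

def assert_case(case: EvalCase, metrics: list) -> None:
    """Raise AssertionError (failing the pytest test) if any metric misses its threshold."""
    for metric in metrics:
        metric.measure(case)
        assert metric.is_successful(), (
            f"{type(metric).__name__} scored {metric.score:.2f}, below {metric.threshold}"
        )

def test_refund_answer():
    # pytest discovers this function by its test_ prefix.
    case = EvalCase(input="what is the refund window",
                    actual_output="The refund window is 30 days")
    assert_case(case, [KeywordRelevancy(threshold=0.6)])  # scores 4/5 = 0.8
```

Run under pytest in CI, a change that drops any metric below its threshold turns into a failing test, which is what lets evaluation block a pull request.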

LangSmith: LangChain-Native Evaluation

Platform Overview

LangSmith is the observability and evaluation platform built by LangChain's creators. It integrates most deeply with the LangChain and LangGraph frameworks, though it can also trace applications built without them. The platform provides tracing, debugging, prompt management, and evaluation tools that integrate seamlessly with LangChain's abstractions, making it the natural choice for teams already invested in the LangChain ecosystem.

LangSmith excels at providing visibility into complex multi-step workflows. Teams can trace execution through chains, understand exactly where issues occurred, and access full context for each LLM call. The platform supports both offline evaluation using curated datasets and online evaluation running on production traffic.

Key Features

- Deep Chain Tracing: Capture every step of LangChain and LangGraph workflows with automatic instrumentation, providing complete visibility into agent execution paths

- Offline and Online Evaluation: Run evaluations on datasets for pre-deployment testing or on production traces for continuous quality monitoring

- Annotation Queues: Streamline human feedback collection through queues where subject matter experts can efficiently review and score outputs

- Prompt Playground: Iterate on prompts with dataset-based testing, comparing outputs across different models and configurations

- Multi-Turn Evaluation: Assess complete agent conversations rather than individual responses, measuring whether agents accomplish user goals across entire interactions

- Insights Agent: Automatically categorize production usage patterns and failure modes to understand how users actually interact with your agent
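Conceptually, an annotation queue routes the traces that automated evaluators score lowest to human reviewers first. A minimal in-memory sketch using a priority heap; it is not LangSmith's SDK, and the 0.7 review cutoff, ReviewItem, and AnnotationQueue are hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from typing import Optional

@dataclass(order=True)
class ReviewItem:
    priority: float  # the automated score; lower scores are reviewed first
    trace_id: str = field(compare=False)
    reason: str = field(compare=False)

class AnnotationQueue:
    """Hypothetical in-memory review queue ordered by automated score."""

    def __init__(self, review_below: float = 0.7):
        self.review_below = review_below
        self._heap: list[ReviewItem] = []

    def offer(self, trace_id: str, auto_score: float) -> bool:
        """Enqueue a trace only when its automated score falls below the cutoff."""
        if auto_score >= self.review_below:
            return False
        item = ReviewItem(auto_score, trace_id, f"auto score {auto_score:.2f}")
        heapq.heappush(self._heap, item)
        return True

    def next_for_review(self) -> Optional[ReviewItem]:
        """Pop the lowest-scoring trace, or None when the queue is empty."""
        return heapq.heappop(self._heap) if self._heap else None

queue = AnnotationQueue(review_below=0.7)
for trace_id, score in [("t1", 0.92), ("t2", 0.35), ("t3", 0.61)]:
    queue.offer(trace_id, score)

item = queue.next_for_review()
print(item.trace_id, item.reason)  # t2 auto score 0.35
```

Filtering before enqueueing keeps expert reviewers focused on the cases where human judgment adds the most, rather than on traces the automated evaluators already rate highly.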

Best For

LangSmith works best for teams fully committed to the LangChain ecosystem who need deep visibility into chain and agent execution. The platform suits organizations building complex LangChain or LangGraph applications, Python-centric workflows where LangSmith's native integration provides immediate value, and teams prioritizing rapid prototyping where going from idea to running evaluations happens in minutes. However, teams using other frameworks or requiring vendor-neutral instrumentation may find the LangChain-first approach limiting.

Arize Phoenix: Open-Source Observability

Platform Overview

Arize Phoenix provides open-source AI observability built on OpenTelemetry standards, offering teams flexibility and data control without vendor lock-in. The platform focuses on tracing, evaluation, and debugging for LLM applications while maintaining vendor and framework agnosticism through its foundation on open standards.

Phoenix's approach appeals to teams who value open-source flexibility, want to self-host evaluation infrastructure, or need vendor-neutral instrumentation that works across different orchestration frameworks and LLM providers. The platform supports popular frameworks including LlamaIndex, LangChain, Haystack, and DSPy out of the box.

Key Features

- OpenTelemetry Foundation: Built on open standards ensuring instrumentation work remains reusable across platforms and avoiding vendor lock-in

- Comprehensive Tracing: Track LLM calls, retrieval steps, tool usage, and custom logic to understand application behavior and debug issues

- Prompt Management: Version, store, and deploy prompts with tagging and experimentation support for systematic prompt iteration

- Datasets and Experiments: Group traces into datasets, rerun through different application versions, and compare evaluation results systematically

- LLM-as-Judge Evaluators: Pre-built evaluation templates for common metrics plus custom evaluator support for application-specific quality criteria

- Prompt Playground: Experiment with prompts and models side-by-side, replay LLM calls with different inputs, and optimize before deployment

- Self-Hosting: Run Phoenix locally, in Docker containers, on Kubernetes, or use Arize's managed cloud instances
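LLM-as-judge evaluators generally pair a prompt template with a fixed set of allowed labels and map the judge's free-text reply onto one of them; Phoenix's evals library calls these allowed labels rails. The sketch below illustrates the idea without Phoenix itself: the template wording, snap_to_rails, and fake_llm (which stands in for a real model call) are hypothetical.

```python
import re
from typing import Callable

JUDGE_TEMPLATE = """You are checking whether a reference text answers a question.
Question: {question}
Reference: {reference}
Respond with exactly one word: "relevant" or "unrelated"."""

RAILS = ["relevant", "unrelated"]  # the only labels accepted from the judge

def snap_to_rails(raw: str, rails: list[str]) -> str:
    """Map free-form judge output onto exactly one allowed label, else NOT_PARSABLE."""
    words = re.findall(r"[a-z_]+", raw.lower())
    found = [r for r in rails if r in words]
    return found[0] if len(found) == 1 else "NOT_PARSABLE"

def judge(question: str, reference: str, llm: Callable[[str], str]) -> str:
    """Fill the template, call the judge model, and constrain its answer."""
    prompt = JUDGE_TEMPLATE.format(question=question, reference=reference)
    return snap_to_rails(llm(prompt), RAILS)

def fake_llm(prompt: str) -> str:
    """Offline stand-in for a model: 'relevant' if the reference mentions refunds."""
    reference_part = prompt.split("Reference:")[1].lower()
    return "Relevant." if "refund" in reference_part else "unrelated"

print(judge("How long is the refund window?", "Refunds are accepted for 30 days.", fake_llm))
```

Rejecting ambiguous replies (for example, one that mentions both labels) as NOT_PARSABLE, instead of guessing, keeps judge errors visible in the aggregated results.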

Best For

Arize Phoenix fits teams who prioritize open-source flexibility, want complete control over their data through self-hosting, or require vendor-neutral observability that works across diverse technology stacks. The platform particularly suits organizations already comfortable with OpenTelemetry, teams building framework-agnostic applications, and developers who value active open-source communities. However, teams seeking comprehensive lifecycle management beyond observability may need to combine Phoenix with additional tools for simulation and cross-functional workflows.

Confident AI: Research-Backed Metrics

Platform Overview

Confident AI is the company behind the open-source DeepEval framework, providing research-backed evaluation metrics through a Python-first approach that treats LLM testing like traditional software testing. DeepEval makes systematic evaluation accessible through pytest-style unit testing, while Confident AI's cloud platform adds team collaboration and dataset management.

DeepEval's design philosophy centers on making evaluation feel natural for developers already familiar with testing frameworks. The library provides 14+ pre-built evaluation metrics based on recent research, supports custom metric creation, and integrates with CI/CD workflows for continuous testing.

Key Features

- Research-Backed Metrics: Pre-built evaluators for hallucination detection, answer relevance, factual accuracy, and more based on peer-reviewed evaluation research

- Pytest-Style Testing: Write LLM tests using familiar pytest syntax with assert_test calls that fail builds when quality thresholds aren't met

- Custom Metric Creation: Build application-specific evaluators that integrate automatically with DeepEval's ecosystem

- Synthetic Data Generation: Generate test datasets and simulate conversations when real data is unavailable or insufficient

- Cloud Platform: Team-wide collaborative testing with shareable reports, dataset management, and experiment comparison

- Framework Integration: Works with LangChain, LlamaIndex, and any LLM application architecture

- Regression Testing: Run evaluations on the same test cases with each iteration to safeguard against breaking changes
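Regression testing boils down to re-running the same test cases on each iteration and diffing which ones flipped between pass and fail. A library-agnostic sketch, not DeepEval's API: the scores are illustrative inputs, and the 0.7 pass mark and helper names are assumptions.

```python
# Illustrative per-test-case scores from two runs over the same test cases.
baseline = {"tc-1": 0.91, "tc-2": 0.74, "tc-3": 0.66, "tc-4": 0.88}
current = {"tc-1": 0.93, "tc-2": 0.58, "tc-3": 0.71, "tc-4": 0.87}
THRESHOLD = 0.7  # assumed pass mark

def passed(scores: dict[str, float]) -> set[str]:
    """IDs of test cases at or above the pass mark."""
    return {tc for tc, s in scores.items() if s >= THRESHOLD}

def regression_report(before: dict[str, float], after: dict[str, float]) -> dict[str, list[str]]:
    """Compare which shared test cases flipped between pass and fail."""
    shared = before.keys() & after.keys()
    was, now = passed(before) & shared, passed(after) & shared
    return {
        "newly_failing": sorted(was - now),
        "newly_passing": sorted(now - was),
    }

report = regression_report(baseline, current)
print(report)  # {'newly_failing': ['tc-2'], 'newly_passing': ['tc-3']}
```

Reporting flips per test case, rather than only the average score, prevents an improvement on one case from masking a breaking change on another.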

Best For

Confident AI excels for Python development teams who want lightweight evaluation frameworks without heavy platform overhead, organizations prioritizing research-backed metrics over custom implementations, and teams familiar with pytest who want similar testing patterns for LLM applications. The platform suits rapid iteration workflows where developers run evaluations locally before pushing to shared environments. However, teams requiring comprehensive observability, cross-functional collaboration features, or extensive simulation capabilities may need additional tools to complement DeepEval's focused evaluation framework.

How to Choose the Right Platform

Selecting the appropriate AI evaluation platform depends on your team structure, technical requirements, and development stage:

Choose Maxim AI when:

- Building production AI agents requiring comprehensive quality assurance across the full lifecycle

- Cross-functional teams need to collaborate on quality improvement without engineering dependencies

- Your organization requires extensive agent simulation for testing complex scenarios before deployment

- Systematic evaluation workflows need to integrate with production monitoring and observability

- Unified tooling across experimentation, evaluation, and production provides better ROI than multiple point solutions

Choose DeepEval when:

- Engineering teams prioritize evaluation tightly integrated with CI/CD workflows

- GitHub Actions integration for automated quality gates is essential
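In GitHub Actions, as in most CI systems, a step fails when its command exits with a nonzero status, so a quality gate can be as small as a script that turns an evaluation pass rate into an exit code. A minimal sketch; the quality_gate helper, the 90% bar, and how outcomes are collected are assumptions.

```python
def quality_gate(results: list[bool], min_pass_rate: float) -> int:
    """Turn per-test pass/fail outcomes into a process exit code for CI."""
    if not results:
        print("No evaluation results found; failing closed.")
        return 1
    rate = sum(results) / len(results)
    print(f"pass rate {rate:.1%} (required {min_pass_rate:.0%})")
    return 0 if rate >= min_pass_rate else 1

# Hypothetical outcomes; a real pipeline would read them from the evaluation run.
outcomes = [True] * 46 + [False] * 4
exit_code = quality_gate(outcomes, min_pass_rate=0.9)
# A CI entry-point script would finish with: raise SystemExit(exit_code)
```

Failing closed when no results are found guards against a misconfigured evaluation step silently letting every change through.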

Choose LangSmith when:

- Applications are built entirely on LangChain or LangGraph frameworks

- Deep visibility into chain execution and agent decision-making is critical

- Teams are Python-centric and comfortable with LangChain abstractions

- Human annotation queues for collecting expert feedback at scale are valuable

- LangChain ecosystem alignment outweighs vendor-neutral instrumentation concerns

Choose Arize Phoenix when:

- Open-source flexibility and data control are primary requirements

- Teams want to self-host evaluation infrastructure without restrictions

- Vendor-neutral instrumentation built on OpenTelemetry standards is essential

- Framework-agnostic observability across diverse technology stacks matters

- Active open-source communities provide value for learning and extending capabilities

Choose Confident AI when:

- Python developers want pytest-style testing for LLM applications

- Research-backed evaluation metrics reduce custom implementation needs

- Lightweight frameworks are preferred over comprehensive platforms

- Rapid local iteration cycles matter more than extensive collaboration features

- Teams comfortable with code-first approaches don't require extensive UI-based tools

For most production AI applications, especially those involving multi-agent systems or enterprise reliability requirements, comprehensive platforms like Maxim AI deliver the strongest ROI by providing integrated simulation, evaluation, and observability. Teams with simpler needs or specific framework commitments may find focused tools more appropriate: DeepEval for CI/CD integration, LangSmith for LangChain applications, Phoenix for open-source flexibility, or Confident AI for lightweight evaluation.

Further Reading

Internal Resources

- AI Agent Quality Evaluation: Comprehensive Guide

- Evaluation Workflows for AI Agents

- LLM Observability: How to Monitor Large Language Models in Production

- Agent Tracing for Debugging Multi-Agent AI Systems


External Resources

- The People's Choice of Top LLM Evaluation Tools 2025 (Confident AI)

- Comparing LLM Evaluation Platforms 2025 (Arize)

- LangSmith Evaluation Documentation

- DeepEval GitHub Repository

- Arize Phoenix Documentation

Conclusion

AI evaluation has evolved from optional to essential infrastructure for production LLM applications. The five platforms examined here offer distinct approaches: Maxim AI provides comprehensive lifecycle management with simulation and observability, DeepEval builds test-centric evaluation into CI/CD pipelines, LangSmith offers deep integration with the LangChain framework, Arize Phoenix brings open-source flexibility, and Confident AI enables lightweight, code-first evaluation. High-profile failures from CNET, Apple, and Air Canada demonstrate that systematic evaluation isn't just a technical best practice but a business necessity. The right platform accelerates shipping reliable AI by catching issues before production, enabling confident iteration, and providing ongoing visibility into quality.

Ready to elevate your AI evaluation workflow? Explore Maxim AI to see how end-to-end quality management transforms building and deploying AI agents at scale.