Agent Tracing for Debugging Multi-Agent AI Systems

TL;DR

Multi-agent systems introduce debugging complexity due to emergent behavior, cascading failures, and non-deterministic tool calls. Agent tracing solves this by capturing every decision, message, and state transition across all agents in a workflow. Platforms like Maxim AI provide distributed tracing, visual replay, automated evaluation, and in-context debugging for agent pipelines. Tracing enables faster root-cause analysis, improved reliability, and better optimization of latency, cost, and success rates. This guide explains how agent tracing works, why it matters, and how to implement it in production-grade AI systems.

Introduction

The rapid evolution of large language models has ushered in a new era of multi-agent systems, where teams of autonomous agents collaborate to solve tasks that exceed the capabilities of single-turn workflows and simpler RAG pipelines. These systems have become pivotal in domains ranging from conversational AI and document processing to autonomous decision-making. However, as the complexity and scale of these architectures grow, so do the challenges of debugging, monitoring, and evaluating agent behavior at each step of the process.

Agent tracing has emerged as a foundational technique for understanding, diagnosing, and optimizing multi-agent AI systems. By systematically tracking interactions, decisions, and state changes across agents, developers can pinpoint failures, optimize workflows, and ensure robust performance. This post explores the technical landscape of agent tracing, its importance in debugging multi-agent systems, and how platforms like Maxim AI are transforming the agent development lifecycle with advanced observability and evaluation tools.

The Rise of Multi-Agent AI Systems

Why Multi-Agent Architectures?

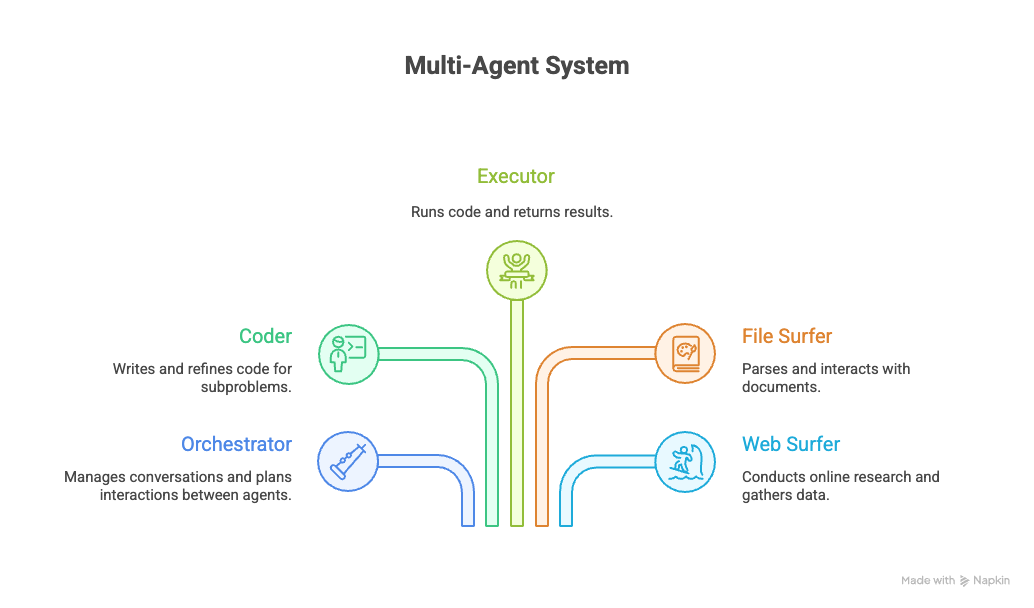

Single-turn AI applications powered by large language models (LLMs) excel at self-contained tasks such as answering a user query or writing a poem. Most real-world problems, however, are messy, multi-turn, and require complex reasoning and actions. Solving them often means orchestrating multiple agents, each specializing in one domain and handling only part of the overall problem. For example, if you were building an agentic system to perform coding tasks, you would need:

- An Orchestrator that creates and manages the plan of action for the entire task.

- A Coder that writes and refines code.

- An Executor that runs code and returns results.

- A File Surfer for parsing, accessing, and storing input and output files.

- A Web Surfer for online research and data gathering.

This distributed approach leverages specialization, parallelism, and dynamic tool usage, enabling systems to tackle complex, multi-turn tasks (Epperson et al., 2025). Yet, it also introduces new debugging challenges, such as cascading errors, emergent behaviors, and non-deterministic outcomes in multi-turn agent interactions.

The Debugging Challenge: Complexity and Scale

Unique Failure Modes

Debugging multi-agent systems is fundamentally different from debugging simpler AI workflows. Key challenges include:

- Long, Multi-Turn Conversations: Errors may emerge deep within extended agent interactions, making root cause analysis non-trivial.

- Emergent Interactions: Agents may exhibit unexpected behaviors due to dynamic collaboration, tool usage, or changing plans.

- Cascading Errors: Fixes for one agent can inadvertently break others, especially when state and context are shared.

- Opaque Reasoning Paths: Without proper tracing, understanding why an agent made a specific decision is difficult.

- Tool Calling Failures: Without tracing that records each LLM tool call and the response returned to the LLM, there is no way to tell whether agents can actually access their tools.
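To make the last point concrete, here is a minimal sketch of tool-call tracing in plain Python. It is not the Maxim SDK: the `traced_tool` decorator, the `TOOL_LOG` list, and the `divide` tool are hypothetical names chosen for illustration, and a real system would send these records to a trace backend instead of an in-memory list.

```python
import functools
import time

# In-memory sink (assumption: a real system would export to a trace backend).
TOOL_LOG = []


def traced_tool(agent_name):
    """Wrap a tool so every call is recorded with its inputs and outcome."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            record = {"agent": agent_name, "tool": fn.__name__,
                      "args": args, "kwargs": kwargs}
            start = time.perf_counter()
            try:
                record["result"] = fn(*args, **kwargs)
                record["status"] = "ok"
                return record["result"]
            except Exception as exc:
                # Record the failure, then re-raise so the agent still sees it.
                record["status"] = "error"
                record["error"] = f"{type(exc).__name__}: {exc}"
                raise
            finally:
                record["duration_s"] = time.perf_counter() - start
                TOOL_LOG.append(record)
        return wrapper
    return decorator


@traced_tool("executor")
def divide(a, b):
    """Hypothetical tool used only to exercise the decorator."""
    return a / b


divide(6, 3)
try:
    divide(1, 0)
except ZeroDivisionError:
    pass

for entry in TOOL_LOG:
    print(entry["agent"], entry["tool"], entry["status"], entry.get("error", ""))
```

Because the failed call is logged before the exception propagates, the trace shows both that the tool was reached and how it failed.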

Before vs After Agent Tracing

| Criteria | Without Tracing | With Agent Tracing (e.g. Maxim AI) |
|---|---|---|
| Root Cause Analysis | Manual, slow, often guesswork | 1-click replay of agent steps with logs |
| Visibility | Partial logs, missing context | Full state + tool call + message history |
| Multi-agent Interactions | Opaque, hard to attribute failures | Visual map of agent-to-agent communication |
| Failure Recovery | Restart full workflow | Reset to checkpoint + edit + rerun |
| Debugging Speed | Hours → days | Minutes |
| Production Monitoring | Basic logs + metrics | Real-time traces, alerts, evals |

Most AI debugging tools focus on model training or prompt engineering and fall short in this context (ACM CHI 2025). To keep AI-powered applications reliable and trustworthy, developers need visibility into the entire agent team: the messages exchanged, actions taken, tools used, and state transitions.

Agent Tracing: Principles and Techniques

What is Agent Tracing?

Agent tracing refers to the systematic logging and visualization of agent interactions, decisions, and state changes throughout the lifecycle of an AI system. Effective tracing provides:

- Step-by-Step Action Logs: Every decision, tool call, and response is recorded.

- Inter-Agent Communication Maps: Visualizations of how agents delegate tasks and share information, backed by detailed logs and traces of inter-agent interactions.

- State Transition Histories: Tracking and logging changes in agent memory, context, and environment.

- Error Localization: Pinpointing where and why failures occur within complex workflows.
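The items above can be sketched as a small data model. This is an illustrative assumption, not any platform's actual schema: each recorded step is a `Span` with a parent pointer, and error localization means finding the earliest failed span and walking back to the root to see which delegation led to it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """One recorded step; field names are hypothetical."""
    span_id: str
    parent_id: Optional[str]
    agent: str
    action: str
    status: str = "ok"  # "ok" or "error"


def first_failure(spans):
    """Return the earliest failed span (spans are assumed chronological)."""
    return next((s for s in spans if s.status == "error"), None)


def path_to_root(spans, span_id):
    """Return the chain of spans from the root down to the given span."""
    by_id = {s.span_id: s for s in spans}
    chain = []
    current = by_id.get(span_id)
    while current is not None:
        chain.append(current)
        current = by_id.get(current.parent_id) if current.parent_id else None
    return list(reversed(chain))


trace = [
    Span("1", None, "orchestrator", "plan task"),
    Span("2", "1", "coder", "write code"),
    Span("3", "1", "executor", "run code", status="error"),
    Span("4", "1", "orchestrator", "report failure", status="error"),
]

failure = first_failure(trace)
chain = path_to_root(trace, failure.span_id)
print(" -> ".join(f"{s.agent}:{s.action}" for s in chain))
```

Picking the earliest error matters here: span 4 also failed, but only as a consequence of span 3, which is the step worth inspecting first.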

Observability and Tracing in Practice

Observability extends tracing by making agent internals transparent and actionable. Observability enables teams to:

- See what steps agents took to reach outputs.

- Understand tool usage and data retrieval logic.

- Identify where reasoning paths diverged or failed.

- Measure performance, cost, and latency impacts.
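As a rough sketch of the last point, per-agent latency and cost can be derived by aggregating recorded spans. The span fields and the token price below are made-up values for illustration, not figures from any provider.

```python
from collections import defaultdict

# Hypothetical price, for illustration only.
PRICE_PER_1K_TOKENS = 0.002

# Hypothetical recorded spans: one entry per agent step.
spans = [
    {"agent": "orchestrator", "latency_ms": 420, "tokens": 900},
    {"agent": "coder", "latency_ms": 1800, "tokens": 2400},
    {"agent": "executor", "latency_ms": 950, "tokens": 0},
    {"agent": "coder", "latency_ms": 1500, "tokens": 2100},
]


def summarize_by_agent(spans, price_per_1k=PRICE_PER_1K_TOKENS):
    """Total calls, latency, tokens, and estimated cost for each agent."""
    totals = defaultdict(lambda: {"calls": 0, "latency_ms": 0, "tokens": 0})
    for span in spans:
        entry = totals[span["agent"]]
        entry["calls"] += 1
        entry["latency_ms"] += span["latency_ms"]
        entry["tokens"] += span["tokens"]
    for entry in totals.values():
        entry["cost_usd"] = entry["tokens"] / 1000 * price_per_1k
    return dict(totals)


summary = summarize_by_agent(spans)
# List the slowest agents first.
for agent, stats in sorted(summary.items(), key=lambda kv: -kv[1]["latency_ms"]):
    print(f"{agent:12s} calls={stats['calls']} "
          f"latency={stats['latency_ms']}ms cost=${stats['cost_usd']:.4f}")
```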

Tracing tools, such as Maxim AI, provide developers with:

- Comprehensive distributed tracing - Tracing that covers both traditional systems and LLM calls

- Visual trace view - See how agents interact step-by-step to spot and debug issues

- Enhanced support - Support for larger trace elements, up to 1MB, compared to the usual 10-100KB

- Data export - Seamless export of data via CSV exports and APIs

This helps teams identify failures, debug issues, improve reliability, and ensure alignment with business and user goals.

Step-by-Step: How to Trace a Multi-Agent Workflow in Maxim

- Enable tracing via SDK or API (maxim.trace(start=True)).

- Run the agent workflow; all tool calls, messages, and states are captured automatically.

- Inspect the trace timeline in the UI to see agent-to-agent decisions.

- Jump to the failure point using filters (latency spike, hallucination, wrong output).

- Edit the prompt or config, then rerun from the checkpoint without re-executing the full flow.

- Export traces or send to the eval pipeline to generate benchmark metrics.
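The "jump to the failure point" step can be illustrated without any SDK. The sketch below shows one possible latency-spike filter over exported spans; the span fields and the "three times the median" rule are assumptions for illustration, not how Maxim's filters are implemented.

```python
from statistics import median

# Hypothetical exported spans from one workflow run.
spans = [
    {"step": 1, "agent": "orchestrator", "latency_ms": 400},
    {"step": 2, "agent": "coder", "latency_ms": 450},
    {"step": 3, "agent": "executor", "latency_ms": 380},
    {"step": 4, "agent": "web_surfer", "latency_ms": 5200},
    {"step": 5, "agent": "coder", "latency_ms": 420},
]


def latency_spikes(spans, factor=3.0):
    """Return spans whose latency exceeds `factor` times the median latency."""
    baseline = median(span["latency_ms"] for span in spans)
    return [span for span in spans if span["latency_ms"] > factor * baseline]


spikes = latency_spikes(spans)
for span in spikes:
    print(f"step {span['step']} ({span['agent']}): {span['latency_ms']} ms")
```

The median is used as the baseline because a single very slow step would pull a mean upward and could hide itself.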

Technical Deep Dive: Tracing Multi-Agent Workflows

Key Components of an Agent Tracing System

- Tracing and Logging: Captures all agent communications, including user inputs, tool calls, and responses.

- Checkpoints and Sessions: Enables resetting workflows to prior states for experimentation and error recovery.

- Visualization Dashboards: Provides graphical representations of agent trajectories, decision trees, and conversation forks.

- Configuration Management: Supports changing prompts and models on the fly.

- Evaluation: Integrates automated and human-in-the-loop evaluation for quality assurance.
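The checkpoint component is the least obvious of these, so here is a minimal sketch of the idea under simplifying assumptions: state is a plain dictionary, each step is a function from state to state, and `CheckpointedRun`, `plan`, and `code` are hypothetical names. A snapshot is taken before each step so a fork can resume from that point with edited inputs.

```python
import copy


class CheckpointedRun:
    """Runs steps in order and snapshots the state before each one."""

    def __init__(self, initial_state):
        self.state = initial_state
        self._checkpoints = []  # list of (step_name, state_before_step)

    def step(self, name, fn):
        self._checkpoints.append((name, copy.deepcopy(self.state)))
        self.state = fn(self.state)

    def fork_from(self, name):
        """Return a new run starting from the snapshot taken before `name`."""
        for index, (step_name, snapshot) in enumerate(self._checkpoints):
            if step_name == name:
                fork = CheckpointedRun(copy.deepcopy(snapshot))
                fork._checkpoints = copy.deepcopy(self._checkpoints[:index])
                return fork
        raise KeyError(name)


def plan(state):
    return {**state, "messages": state["messages"] + ["plan ready"]}


def code(state):
    return {**state, "messages": state["messages"] + ["code for: " + state["prompt"]]}


run = CheckpointedRun({"prompt": "write a sort function", "messages": []})
run.step("plan", plan)
run.step("code", code)

# Fork before the "code" step, edit the prompt, and rerun only that step.
fork = run.fork_from("code")
fork.state["prompt"] = "write a sort function using sorted()"
fork.step("code", code)

print(run.state["messages"])
print(fork.state["messages"])
```

The deep copies keep the original run untouched, so both outcomes remain available for comparison.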

Example: Tracing in Practice

Consider a multi-agent system solving a coding task. The Orchestrator delegates to the Coder, who writes Python code. The Executor runs the code, while the File Surfer parses output files. Tracing reveals:

- Which agent made each decision.

- What tools were used and why.

- Where the workflow deviated from the optimal path.

- How agent messages evolved over time.

If an error occurs (say, incorrect code execution), the tracing system allows developers to backtrack, edit the Coder’s instructions, and rerun the workflow from a specific checkpoint. Visualization tools highlight the forked conversation, making it easy to compare outcomes and find failure modes.
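Comparing a forked conversation with the original comes down to finding where the two message histories first differ. The sketch below assumes each run is a list of (agent, message) pairs; the messages are invented for illustration.

```python
from itertools import zip_longest


def first_divergence(run_a, run_b):
    """Return (index, step_a, step_b) for the first differing step, or None."""
    for index, (a, b) in enumerate(zip_longest(run_a, run_b)):
        if a != b:
            return index, a, b
    return None


# Hypothetical message histories: the original run and a fork with edited
# Coder instructions.
original = [
    ("orchestrator", "plan: parse CSV, then plot"),
    ("coder", "script uses the csv module"),
    ("executor", "error: file not found"),
]
forked = [
    ("orchestrator", "plan: parse CSV, then plot"),
    ("coder", "script uses pandas.read_csv"),
    ("executor", "ok: plot saved"),
]

result = first_divergence(original, forked)
if result is not None:
    index, before, after = result
    print(f"runs diverge at step {index}:")
    print("  original:", before)
    print("  fork:    ", after)
```

`zip_longest` pads the shorter history with `None`, so a fork that ends early or runs longer is also reported as a divergence.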

Maxim AI: End-to-End Agent Observability and Debugging

Maxim AI is at the forefront of multi-agent observability, offering a unified platform for tracing, evaluation, and quality assurance.

Key features include:

Granular Traces

- Visual Trace Logging: Maxim’s trace engine logs every agent action and decision, providing a comprehensive view of multi-agent workflows.

- Multi-Agent Workflow Visualization: Teams can analyze complex interactions, identify bottlenecks, and optimize agent collaboration.

Real-Time Debugging

- Live Issue Tracking: Developers can debug live agent interactions, resolve errors quickly, and monitor system health.

- Iterative Experimentation: Maxim supports resetting workflows, editing prompts, and rerunning scenarios without code changes. You can also run A/B tests on your prompts and use custom evaluators to check agent performance in both pre-production and post-production stages.

Evaluation and Metrics

- Automated and Custom Evaluations: Maxim offers a library of pre-built and custom evaluators, supporting LLM-as-a-Judge, statistical, and programmatic evaluators (Evaluation Metrics).

- Human-in-the-Loop Workflows: Seamless integration of human reviewers for last-mile quality assurance.

- Reporting and Analytics: Generate detailed reports to track progress, share insights, and drive continuous improvement (Quality Evaluation).

Enterprise-Grade Observability

- Security and Compliance: In-VPC deployment, SOC 2 Type II, ISO 27001, HIPAA, and GDPR compliance.

- Role-Based Access Controls: Precise user permissions for collaborative debugging and running evals.

- Priority Support: 24/7 assistance for mission-critical deployments.

Explore detailed documentation and guides in Maxim’s Docs and Blog.

Case Study: Scaling Debugging in Conversational Banking

Clinc, a leader in conversational banking, leveraged Maxim AI’s tracing and evaluation platform to increase confidence in its AI (Clinc Case Study). By implementing granular trace logging and automated evaluation workflows, Clinc reduced debugging cycles, improved agent reliability, and achieved faster time-to-market for new features.

Best Practices for Agent Tracing and Debugging

- Implement Comprehensive Logging: Capture all agent actions, communications, and tool usage.

- Use Interactive Visualization Tools: Leverage dashboards to map agent trajectories and identify failure points.

- Integrate Evaluation Pipelines: Combine automated metrics with human reviews for robust quality assurance.

- Iterate on Agent Configurations: Regularly update prompts and workflows based on trace insights.

- Monitor in Production: Continuously observe agent behavior, set up real-time alerts, and resolve regressions promptly.
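For the production-monitoring practice, a real-time alert can be as simple as a failure rate over a sliding window of recent runs. This is a sketch under assumed parameters (the `FailureRateAlert` class, window size, and threshold are all hypothetical); production systems usually rely on their observability platform's alerting instead.

```python
from collections import deque


class FailureRateAlert:
    """Signals when the failure rate over the last `window` runs exceeds `threshold`."""

    def __init__(self, window=20, threshold=0.2):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.threshold = threshold

    def record(self, success):
        """Record one run outcome; return True if an alert should fire."""
        self.outcomes.append(bool(success))
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait for a full window before alerting
        failure_rate = self.outcomes.count(False) / len(self.outcomes)
        return failure_rate > self.threshold


alert = FailureRateAlert(window=5, threshold=0.4)
results = [alert.record(ok) for ok in [True, True, False, True, False, False]]
print(results)
```

Waiting for a full window avoids firing on the very first failed run, at the cost of a short delay after startup.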

For a deeper dive into evaluation workflows, see Evaluation Workflows for AI Agents.

Resources & Further Reading

- Interactive Debugging and Steering of Multi-Agent AI Systems (arXiv)

- Build and Manage Multi-System Agents with Vertex AI (Google Cloud)

- Debugging Multi-Agent Systems (ScienceDirect)

- Weights & Biases: Debugging CrewAI Multi-Agent Applications

Conclusion

Agent tracing is indispensable for debugging, optimizing, and scaling multi-agent AI systems. As agent teams tackle more complex tasks, visibility into their interactions, decisions, and workflows becomes critical for ensuring reliability and performance. Platforms like Maxim AI empower engineering and product teams with advanced tracing, observability, and evaluation capabilities, making it possible to ship robust AI solutions with speed and confidence.

To learn more, explore Maxim’s documentation, blog, and case studies.