Voice Simulation: Testing Voice Agents the Way Users Experience Them

Introduction

Voice is rapidly becoming the next frontier of AI interaction (along with physical AI). As more companies deploy voice agents for customer support, sales, and service operations, the stakes have never been higher. A poorly tested voice agent doesn't just frustrate users - it can damage your brand, lose customers, and create operational chaos.

But testing voice agents is fundamentally different from testing text-based chatbots. Voice operates in real-time with emotion, tone, and nuance. Users interrupt, speak with different accents, and expect sub-2-second responses. To ship production-ready voice agents, you need automated testing across dozens of scenarios - angry customers, complex conversations, inbound and outbound calls, edge cases - evaluating how they handle interruptions, emotions, and accents. Manual testing with human actors is expensive, slow, and doesn't scale.

The problem? Most voice testing approaches today fundamentally don't work. When we built voice simulation at Maxim, we discovered why - and had to solve some hard technical problems to make realistic voice testing possible.

The Intuitive Approach Falls Short

The most common approach to voice simulation seems straightforward: speak using text-to-speech, transcribe your agent's responses, feed that to an LLM to generate the next response, and repeat. This STT→LLM→TTS pipeline is intuitive - it breaks down the problem into familiar components.

But it has four fatal flaws that make realistic testing impossible:

- Latency compounds into unusable delays. Each component adds delay. In practice: 4-6 second response times. Natural conversation requires around sub-2 seconds. The simulation creates an experience no real user would tolerate.

- Emotional tone is erased. Let’s say an angry customer says “I’m really frustrated” with raised volume, clipped pacing, and sharp emphasis. After speech-to-text, all of that emotional signal collapses into a flat transcription: “I’m really frustrated.” - Your agent never learns to handle actual emotion. The test passes, but real frustrated customers fail.

- Voice Activity Detection is a guessing game. When did the user finish speaking? Wait 500ms and you cut people off mid-thought. Wait 2 seconds and you create awkward silences. No threshold works for all scenarios.

Interruptions become impossible. Real conversations have overlapping speech. Consider this exchange:

Agent: "I can help you with that. First, I'll need your account num-" User: "I already gave that to the last person!"

In a real-time model, the agent hears the interruption as it happens and can adjust mid-sentence. The pipeline is sequential - it must transcribe, process, and generate in order. By the time it realizes the user interrupted, the agent has already "spoken" the full sentence in its output buffer. The result: awkward pauses and stilted exchanges that bear little resemblance to real conversation.

Real-Time Native Audio: A Different Architecture

The breakthrough is real-time, native audio processing. These models are fundamentally different from text-first LLMs: instead of converting speech into text tokens and reasoning turn by turn, they operate directly on streaming audio, processing acoustic signals end-to-end. This preserves timing, prosody, emotion, and interruptions as first-class inputs rather than losing them in transcription.

Here's what this means in practice: traditional text models process language as discrete tokens - words or subwords like "frus", "trated". Audio models instead process continuous acoustic features: pitch curves, energy patterns, speaking rate, pauses. When someone says "I'm really frustrated" with a rising pitch and clipped cadence, the model receives that emotional signal directly as part of the input - not as metadata or text annotation, but as the primary data itself. This allows it to generate responses that match the emotional register naturally, and to detect and respond to overlapping speech in real time.

This isn’t just a performance improvement - it’s a different interaction model altogether. The system hears speech as speech and generates speech directly, so overlapping dialogue, hesitations, and emotional cues work naturally. We built Maxim’s voice simulation on top of this architecture to ensure testing reflects how real conversations actually happen. But “possible” and “production-ready” are very different things.

The Gaps Between Real-Time and Reliable

Challenge #1: Latency Isn’t Predictable

Real-time voice models do respond quickly. Once connected to a voice agent over WebSockets (or even WebRTC), the realistic response time is typically in the 1-3 second range. But in our testing of these conversations, we saw something more problematic: mid-call, sometimes audio would sometimes arrive while the other agent hadn’t fully stopped speaking, and in other cases responses would stall entirely, taking 5-6 seconds with no change in complexity or intent. At first, this felt like the model was taking extra time to think - but examining the surrounding turns showed no need for additional reasoning. The delays weren’t tied to conversational complexity; they were random and deeply disruptive to flow.

The root cause was rarely a single factor. We experimented extensively with voice activity detection thresholds and configurations, but tuning alone wasn’t enough. Real-time voice models are highly sensitive to turn-taking signals, audio boundaries, and buffering behaviour, and even small inconsistencies in voice activity thresholds, audio framing, or stream cadence can compound unpredictably and stall generation. We addressed this by taking control of voice activity detection internally and aligning our audio streaming format precisely with how each real-time APIs expect input, managing turn boundaries and audio cadence ourselves to eliminate most mid-conversation stalls and make response timing feel consistent and natural.

Challenge #2: Role Switching Is a Structural Bias

Real-time voice models are overwhelmingly trained to behave as assistants. Their default instinct is to help, guide, and resolve. Voice simulation requires the opposite - the model must behave like a human caller, while the target agent does the helping.

Without careful control, models would periodically drift into assistant behaviour mid-conversation: offering help, asking diagnostic questions, or attempting to resolve the issue themselves. This wasn’t a prompting mistake - it was a structural bias baked into the training distribution.

We solved this by anchoring the model to a clear, persistent identity. Instead of abstract instructions like “act as a user,” we gave it a physical persona, a concrete situation, and strong contextual grounding around why it was calling. Combined with tighter prompting and scenario-specific context, this kept the model reliably in the customer role throughout the interaction.

Challenge #3: Multilingual Voice Simulation Is Still Hard

English voice simulation works well. We've observed relatively better performance in widely-spoken languages like Spanish and French compared to languages such as Japanese or Korean, assuming due to greater availability of conversational training data. However, even in these cases, quality and consistency still lag behind English. Languages with less represented training data - particularly those with different prosodic structures or limited digital voice content - continue to present significant challenges.

Many real-time voice APIs struggle outside English, particularly for role-played, customer-style conversations. Latency becomes less consistent, role adherence degrades, and responses can feel culturally off. This likely reflects gaps in training data - there is far less high-quality, conversational, role-specific audio available in many languages compared to English.

This remains an active area of work for us. We’re continuing to improve the quality of multilingual voice simulation through language-specific tuning and more culturally grounded personas.

What Voice Simulation Enables in Practice

Once voice simulation behaves like a real conversation, it changes how teams test voice agents. Instead of staging calls manually or replaying narrow scripts, teams can test how their agents behave across realistic scenarios at scale - before anything reaches production.

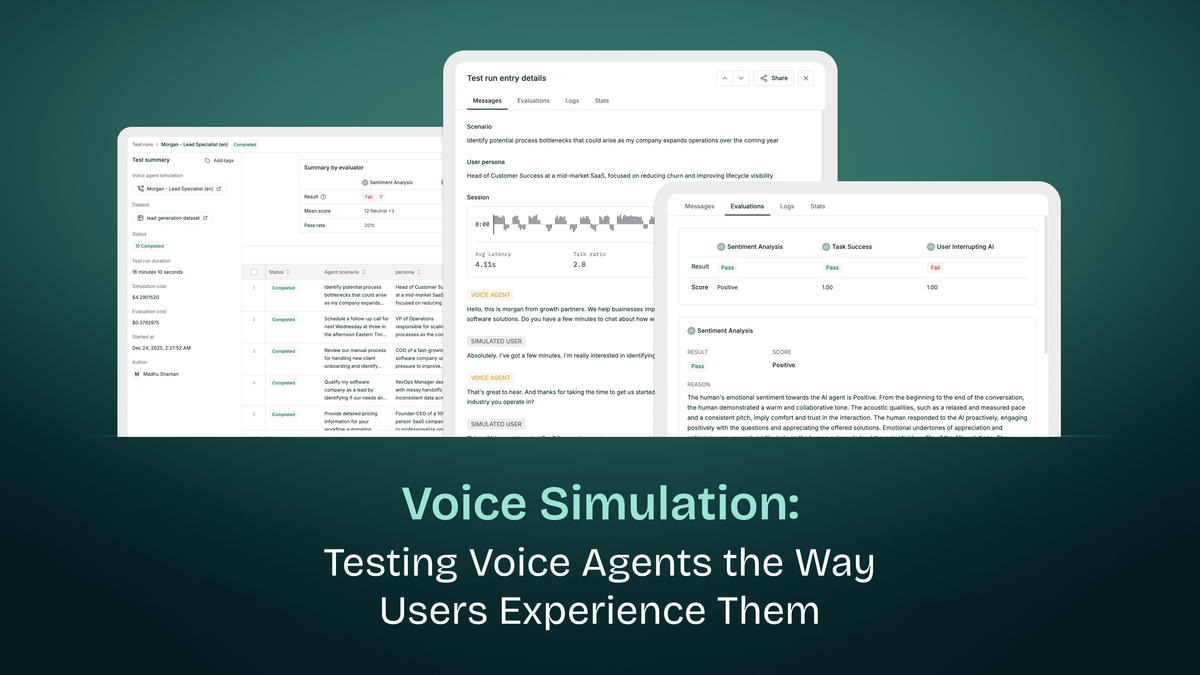

In Maxim, teams connect their existing voice stack directly by providing their voice provider credentials (for example, Twilio or Vapi) along with a phone number. From there, they can test inbound voice flows today and outbound calls soon (outbound is under development). To run a simulation, you define the scenario and persona for the simulated caller - who they are, why they’re calling, and how they should behave - and then run automated calls against your agent exactly as real users would.

Because these are full voice conversations, teams can evaluate far more than correctness. Maxim includes built-in evaluations for sentiment analysis, user satisfaction, interruptions (AI interrupting the user and vice versa), abrupt call termination, and a range of audio-level metrics such as speech rate, average response latency, signal-to-noise ratio, and talk ratio. Teams can also create datasets of scenarios and personas, run test suites repeatedly, and track how changes to their agent impact conversational quality over time.

The result is faster iteration with higher confidence. Voice-specific failures - awkward silences, poor interruption handling, emotional mismatches, or timing issues - surface during testing instead of in production. Teams move from “we tested a few calls” to “we understand how this agent behaves across real conversations,” and can fix issues before they reach users.

What This Means for Voice AI Development

Voice agents aren’t just chatbots with microphones anymore. Enterprises are already shipping real-time voice experiences powered by native audio models - whether it’s Google’s Gemini Live, Meta’s Ray-Ban Glasses assistant, or xAI’s Grok Voice Agent. These systems operate in real time, carry emotional weight, manage interruptions, and are judged as much on how they respond as what they say. Testing them with text-first pipelines guarantees blind spots that only surface in production.

The STT → LLM → TTS approach was a reasonable starting point when real-time voice APIs didn’t exist. Today, it’s increasingly holding teams back. Real-time, native-audio processing makes realistic voice testing possible - but only when paired with systems that handle turn-taking, latency, role adherence, and evaluation at scale.

We built voice simulation at Maxim because teams building voice agents deserve testing tools that reflect reality. Tools that surface voice-specific failures early, help teams iterate faster, and make it possible to ship with confidence - not hope.

Ready to test your voice agents realistically? Read our documentation to learn more.