User Simulation in AI: From Rule-Based Models to LLM-Powered Realism

What if you could test your AI system with thousands of diverse users without recruiting a single person? User simulation makes this possible. Simulating human users, a fundamental application of AI, has driven progress in both research and industry. By letting machines imitate real user interactions, user simulation advances areas such as user behaviour modelling, synthetic data generation and system evaluation.

Traditional user studies can cost upwards of $50,000 and take months to complete, making them impractical for rapid iteration and testing. Recent breakthroughs in language models have dramatically improved the realism and flexibility of user simulation. In this blog, we'll explore the evolution of user simulation, its vital role in advancing AI, and how large language models are changing the landscape.

Why User Simulation Matters

For real-world applications such as conversational agents, recommender systems and interactive search, understanding how users interact with these systems is crucial to building effective ones. However, collecting data from real users is time-consuming, expensive, and often impractical at scale.

User simulation enables researchers and engineers to quickly test algorithms, identify weaknesses and optimise performance before exposing real users to potential failures. It can also generate large, diverse datasets for training and evaluation, helping AI systems generalise better and reducing the bias that comes from small real-world samples.

Key applications include:

- Conversational AI: Testing dialogue systems across diverse user personalities and communication styles (e.g., chatbots, virtual assistants).

- Recommendation Systems: Evaluating how different user preferences affect algorithmic performance (e.g., Netflix, Amazon).

- Interactive Search: Understanding query patterns and variations in user intent.

The Evolution of User Simulation: From Rules to Intelligence

The history of user simulation closely mirrors the broader evolution of artificial intelligence itself, moving from rigid, hand-crafted rules to data-driven intelligence.

- Rule-Based Simulation (1990s): Early user simulators relied on explicit, hand-crafted rules and scripts (e.g., if-else rules). They were predictable and easy to control but limited in realism and diversity, since real humans often behaved differently from these rigid models.

- Statistical Models (2000s): Probabilistic models trained on real data (e.g., Markov models or Bayesian networks) introduced variability and better reflected real user behaviour, though they still struggled with complex, long-term patterns.

- Neural Models (2010s): Deep learning enabled simulators to learn directly from large datasets, capturing subtleties such as ambiguous responses or evolving goals. However, neural models are largely black boxes, which makes failure cases hard to debug.

- LLM-Based Simulation (2021-present): Today, large language models can simulate diverse, realistic and adaptive user behaviours with minimal manual setup, drawing on their training on vast amounts of internet text. This makes user simulation significantly more scalable and effective for training and evaluating AI systems than traditional methods.
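To make the contrast between the first two generations concrete, here is a toy sketch in Python of a rule-based simulator and a first-order Markov-chain simulator. The dialogue-act names and transition probabilities are invented for illustration only. A real statistical simulator would estimate its probabilities from logged user dialogues.

```python
import random

# Rule-based simulator: hand-written if-else rules map the system's last
# dialogue act to a fixed user act. Fully predictable, but rigid.
def rule_based_user(system_act: str) -> str:
    if system_act == "greet":
        return "request_booking"
    elif system_act == "ask_date":
        return "inform_date"
    elif system_act == "confirm":
        return "affirm"
    return "bye"

# Statistical simulator: a first-order Markov chain over user acts.
# These probabilities are made up; in practice they would be estimated
# from real user dialogues.
TRANSITIONS = {
    "start": {"request_booking": 0.7, "ask_question": 0.3},
    "request_booking": {"inform_date": 0.6, "ask_question": 0.3, "bye": 0.1},
    "ask_question": {"request_booking": 0.5, "bye": 0.5},
    "inform_date": {"affirm": 0.8, "negate": 0.2},
    "affirm": {"bye": 1.0},
    "negate": {"inform_date": 0.7, "bye": 0.3},
}

def markov_user(rng: random.Random, max_turns: int = 10) -> list[str]:
    """Sample a sequence of user acts by walking the Markov chain."""
    state, acts = "start", []
    for _ in range(max_turns):
        options = TRANSITIONS[state]
        state = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        acts.append(state)
        if state == "bye":  # terminal act: the simulated user leaves
            break
    return acts

print(rule_based_user("ask_date"))  # always "inform_date"
print(markov_user(random.Random(0)))  # varies with the seed
```

The rule-based version produces the same reply every time. The Markov version produces different but statistically plausible sequences on each run. Neither can track long-term goals, which is the limitation the later generations address.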

Modelling Human Behaviour: The Heart of Simulation

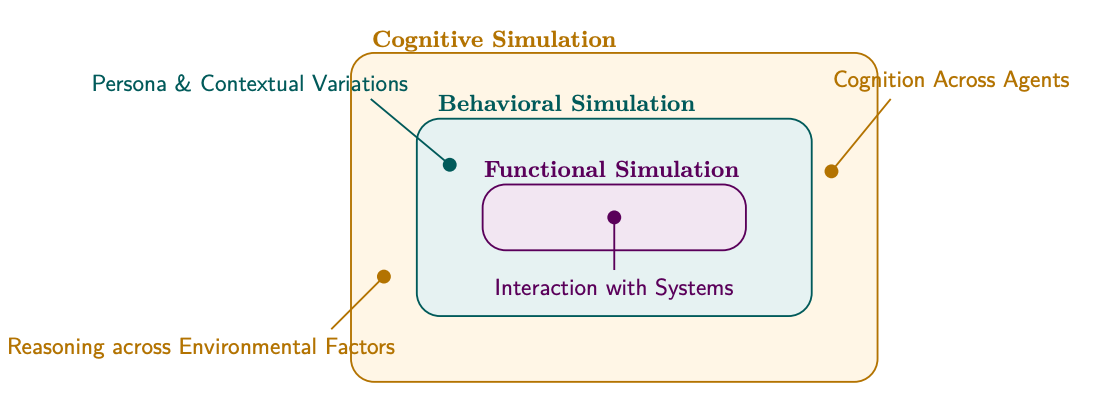

Depending on the intended use case, user simulators are designed to capture human behaviour at different levels of depth. User behaviour modelling in simulation can be structured into three levels:

- Functional Simulation: Focuses on basic, operational user actions such as making information requests, confirming actions or providing standard responses (e.g., “Can the chatbot answer a booking request?”).

- Behavioural Simulation: Goes beyond basic actions by introducing personalised or context-sensitive behaviours, such as expressing preferences, showing hesitation or adapting responses based on prior interactions (e.g., a frustrated or anxious user).

- Cognitive Simulation: Models the internal reasoning and decision-making processes of users, including setting goals, adapting strategies and reasoning under uncertainty (e.g., a user negotiating or reconsidering options based on new information).
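The three levels can be illustrated with a toy, hand-coded simulator for a restaurant-booking task. Everything here is a hypothetical example: the class name `SimulatedUser`, the slots, and the message types are not from any library. In an LLM-based simulator, the same three ingredients (a goal, persona traits, and reasoning about new information) would usually be written into the prompt rather than coded as rules.

```python
from dataclasses import dataclass, field


@dataclass
class SimulatedUser:
    """Toy booking user; slots and message types are hypothetical."""

    # Functional level: the task goal, expressed as slots the user requests.
    goal: dict = field(default_factory=lambda: {"cuisine": "italian", "party_size": 2})
    # Behavioural level: a persona trait that changes *how* the user reacts.
    patience: int = 2  # misunderstood turns tolerated before giving up
    # Cognitive level: a constraint the user reasons about when new info arrives.
    budget: float = 40.0
    failed_turns: int = 0

    def respond(self, system_msg: dict) -> str:
        kind = system_msg.get("type")
        if kind == "offer":
            # Cognitive: reconsider the goal when an offer violates the budget.
            if system_msg["price"] > self.budget:
                self.goal["cuisine"] = "any"
                return "That's too expensive. Anything cheaper? Any cuisine is fine."
            return "Great, please book it."
        if kind == "not_understood":
            # Behavioural: frustration builds with each misunderstanding.
            self.failed_turns += 1
            if self.failed_turns > self.patience:
                return "This isn't working. I'll call the restaurant instead."
            return "Let me rephrase: " + self._request()
        # Functional: the basic information request.
        return self._request()

    def _request(self) -> str:
        return f"I'd like a table for {self.goal['party_size']}, {self.goal['cuisine']} food."


user = SimulatedUser(patience=1)
print(user.respond({"type": "greet"}))  # I'd like a table for 2, italian food.
```

A purely functional simulator would only need the last branch. Each additional level adds state (`failed_turns`, a mutable `goal`) that makes the user's next turn depend on what happened earlier.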

LLM-Based Simulation: Unlocking New Possibilities

Prompting is the most common and flexible technique for LLM-based user simulation: we describe a target persona and scenario, and the LLM generates user-like responses accordingly. This approach is fast and adaptable, and diverse users can be simulated simply by changing the prompt. However, it can be unreliable and offers limited fine-grained control over outputs.
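A minimal sketch of persona prompting is shown below. It assumes the widely used chat format of `{"role", "content"}` messages and does not call any particular provider's API. The function name and prompt wording are hypothetical. One common trick is used here: the roles are flipped, so the simulator LLM sees the system under test as its "user" and its own earlier turns as "assistant", which keeps it speaking in the user's voice.

```python
def build_simulator_messages(
    persona: str, scenario: str, history: list[tuple[str, str]]
) -> list[dict]:
    """Build a chat prompt that asks an LLM to play the user.

    history holds (speaker, text) pairs with speaker in {"user", "agent"}.
    Roles are flipped: the agent under test becomes "user" from the
    simulator's point of view, and the simulated user's own past turns
    become "assistant", so the LLM continues speaking as the user.
    """
    system = (
        "You are role-playing a user, not an assistant.\n"
        f"Persona: {persona}\n"
        f"Scenario: {scenario}\n"
        "Reply with the user's next message only, staying in character. "
        "Write [DONE] when your goal is met or you give up."
    )
    messages = [{"role": "system", "content": system}]
    for speaker, text in history:
        role = "assistant" if speaker == "user" else "user"
        messages.append({"role": role, "content": text})
    return messages


# Simulating a different user only requires swapping the persona string.
personas = [
    "an impatient commuter who types in short fragments",
    "a retiree unfamiliar with apps who asks for step-by-step help",
]
history = [("user", "hi, need a train to Leeds"), ("agent", "Sure! What date?")]
prompts = [build_simulator_messages(p, "book a train ticket", history) for p in personas]
```

The resulting `messages` list would then be passed to whichever chat-completion client you use. That call is deliberately left out here because it is provider-specific.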

For realistic multi-turn simulations, two strategies help. The first is giving the LLM feedback on which information it saw was relevant or irrelevant. The second is feeding summaries of graded (relevance-judged) documents back into the prompt. Together they help the simulated user adapt and produce higher-quality, more human-like responses, especially over extended sessions.
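The feedback strategy can be sketched as a small helper that turns graded document summaries into text for the simulator's next prompt. The grading scale (0 = irrelevant, 1 = partially relevant, 2 = relevant), the threshold, and the function name are assumptions made for illustration.

```python
def build_feedback_block(graded_docs: list[tuple[str, int]], threshold: int = 1) -> str:
    """Turn graded search results into feedback text for the next simulator turn.

    graded_docs: (summary, grade) pairs. The grade scale is an assumption:
    0 = irrelevant, 1 = partially relevant, 2 = relevant.
    """
    relevant = [s for s, g in graded_docs if g >= threshold]
    irrelevant = [s for s, g in graded_docs if g < threshold]
    lines = ["Feedback on the results you saw last turn:"]
    if relevant:
        lines.append("Useful information you found:")
        lines += [f"- {s}" for s in relevant]
    if irrelevant:
        lines.append("Results that did not help:")
        lines += [f"- {s}" for s in irrelevant]
    lines.append("Use this to decide your next query, or whether you are satisfied.")
    return "\n".join(lines)


feedback = build_feedback_block([
    ("Leeds trains run every 30 minutes on weekdays", 2),
    ("History of the Leeds railway station", 0),
])
```

The returned string would be appended to the simulator's prompt before it generates its next turn. This gives the simulated user a concrete basis for refining its query instead of repeating itself.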

Simulation effectiveness can be evaluated through two primary approaches: measuring how well models trained on the synthetic data perform on real-world tasks, or using LLMs as judges to assess the quality, diversity and realism of the generated responses.
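The LLM-as-judge approach can be sketched as follows. The rubric, criteria names, and 1-5 scale are assumptions. The `judge` argument stands in for any function that sends a prompt to an LLM and returns its text, and no specific provider API is implied. This sketch scores individual turns for realism-style criteria. Diversity is usually judged across a whole set of simulated outputs instead.

```python
import json
from statistics import mean
from typing import Callable

CRITERIA = ("realism", "consistency", "goal")
RUBRIC = (
    "Rate the simulated user turn below from 1 to 5 on each criterion: "
    "realism (does it sound like a real person?), consistency (does it stay "
    "in persona?) and goal (does it pursue the user's goal?).\n"
    'Answer with JSON only, e.g. {"realism": 4, "consistency": 5, "goal": 3}.'
)


def judge_dialogue(
    turns: list[str], persona: str, judge: Callable[[str], str]
) -> dict[str, float]:
    """Score each simulated turn with an LLM judge and average per criterion."""
    scores: dict[str, list[float]] = {c: [] for c in CRITERIA}
    for turn in turns:
        prompt = f"{RUBRIC}\n\nPersona: {persona}\nUser turn: {turn}"
        try:
            parsed = json.loads(judge(prompt))
        except json.JSONDecodeError:
            continue  # skip unparseable judge output rather than guessing a score
        if not isinstance(parsed, dict):
            continue
        for c in CRITERIA:
            if isinstance(parsed.get(c), (int, float)):
                scores[c].append(float(parsed[c]))
    # Only report criteria that received at least one valid score.
    return {c: mean(v) for c, v in scores.items() if v}
```

Passing the judge in as a plain function keeps the scoring logic testable with a stub, and lets you swap judge models without changing the evaluation code.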

Beyond prompting, parameter-efficient fine-tuning and reinforcement learning can further improve LLM-based simulators by optimising them for specific goals or preferences. Even so, using an LLM directly with well-designed context engineering and prompting strategies remains a strong, practical baseline for simulating realistic user behaviour.

Conclusion

Large language models have revolutionised user simulation, enabling more realistic and adaptive virtual users for AI testing and training. While techniques like prompting, feedback, and fine-tuning make simulations more human-like, key challenges persist: evaluating the true fidelity of simulated users, addressing gaps in relevance judgments and ensuring that simulators can model the full spectrum of real human behaviours. Future progress depends on building better evaluation frameworks and dynamic simulators that can truly capture the complexity of human interaction.

For a deeper dive, go through the papers: