The Skills vs MCP Debate: Understanding Two Layers of the Same Stack

How coding agents reshaped the tool integration landscape and what actually survived

We're in an interesting moment for AI's application layer. Agents can now write code (better than most programmers), call APIs, query databases, and orchestrate complex workflows. But the infrastructure underneath - how agents actually connect to the world - is still being figured out.

Last year, Anthropic introduced Skills: simple markdown files that teach agents how to do things. Almost immediately, a narrative took hold. "Skills killed MCP." "Shell access is all you need." I've seen this take enough times that I wanted to dig into what's actually happening.

The short version: Skills did replace MCP for some use cases - specifically, the ones that were over-engineered to begin with. But MCP didn't die. It found its proper scope. Getting this wrong means either over-engineering simple integrations or under-engineering complex ones.

The Numbers Don't Show a Replacement

Before we dig into architecture, consider what has happened so far:

MCP's growth:

- 97 million+ monthly SDK downloads by January 2026 (aggregated across TypeScript, Python, and other MCP ecosystem packages, including CI traffic, up from ~8 million cumulative by April 2025)

- 10,000+ MCP servers with first-class client support (ChatGPT, Claude, Cursor, Gemini, Copilot, VS Code)

- Adopted by OpenAI, Google, Microsoft, AWS

- Fortune 500 backing from Block, Bloomberg, Amazon

Skills' rise:

- 112,362+ skills on SkillsMP marketplace

- OpenAI adopted the standard for Codex CLI

- Integrated across Anthropic's product line and products like OpenClaw

If Skills truly “killed” MCP, you’d expect MCP adoption to flatten or decline. Instead, both rose in parallel. That doesn’t mean they don’t compete. They do, especially for simple, stateless integrations. But they compete at the margins, not at the core.

What Each Technology Actually Does

The confusion stems from conflating two different layers.

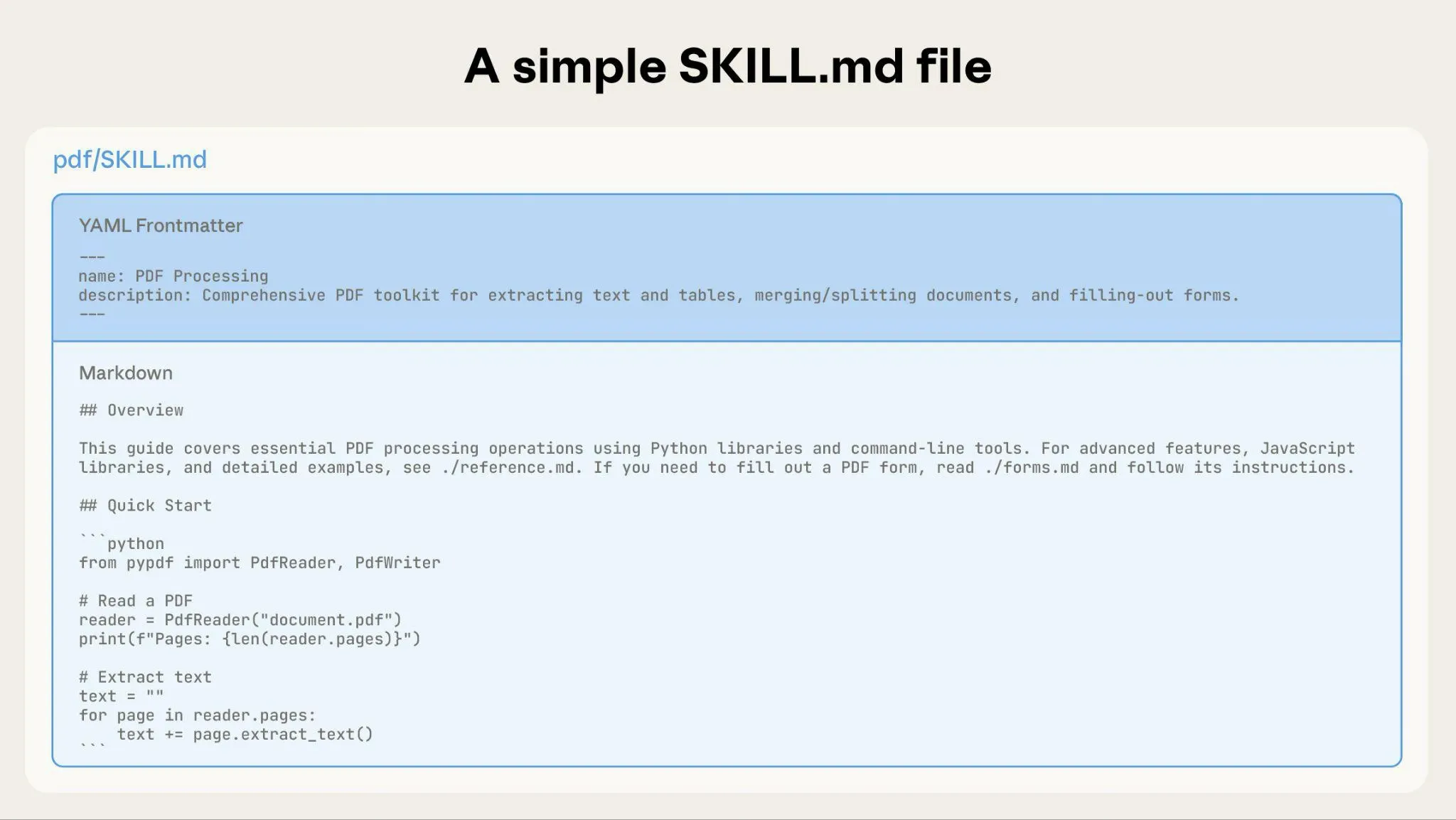

Skills are the guidance layer. They're reusable, filesystem-based resources that combine instructions and optional assets (scripts, templates, examples) into a portable unit.

At its simplest, a Skill is a markdown file with YAML frontmatter. At its richest, a Skill is a folder containing documentation, code snippets, and reference materials. Either way, a Skill tells an agent what to do and how to do it: company conventions, API patterns, workflow steps. But Skills don't execute anything themselves.

No server, no protocol, no infrastructure. The agent reads it and follows the patterns.
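To make the "markdown file with YAML frontmatter" shape concrete, here is a minimal sketch of how a host might split a Skill into metadata and instructions. The Skill contents, the `parseSkill` helper, and the flat `key: value` handling are all assumptions for illustration; a real loader would use a proper YAML parser.

```typescript
// Sketch only: splits a Skill file into frontmatter fields and a markdown body.
// Handles flat `key: value` lines, not full YAML.

interface ParsedSkill {
  meta: Record<string, string>;
  body: string;
}

function parseSkill(source: string): ParsedSkill {
  const match = /^---\r?\n([\s\S]*?)\r?\n---\r?\n?([\s\S]*)$/.exec(source);
  if (!match) {
    // No frontmatter found: treat the whole file as instructions.
    return { meta: {}, body: source.trim() };
  }
  const meta: Record<string, string> = {};
  for (const line of match[1].split(/\r?\n/)) {
    const idx = line.indexOf(":");
    if (idx === -1) continue; // skip lines that are not key: value pairs
    meta[line.slice(0, idx).trim()] = line.slice(idx + 1).trim();
  }
  return { meta, body: match[2].trim() };
}

// Hypothetical Skill contents, invented for this example.
const exampleSkill = [
  "---",
  "name: pr-conventions",
  "description: How our team opens pull requests",
  "---",
  "# Opening a pull request",
  "Use `gh pr create` and prefix the title with the ticket ID.",
].join("\n");

const parsedSkill = parseSkill(exampleSkill);
```

Nothing here executes the instructions; the parsed body is simply text the agent reads, which is the point of the guidance layer.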

MCP is the execution layer. It's an open protocol that standardizes how agents connect to external tools and data. MCP defines three primitives: tools (actions the model can call), resources (data the application can access), and prompts (reusable templates). The protocol handles the plumbing: tool discovery, authentication, persistent connections, and structured communication via JSON-RPC.
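As a rough illustration of the "structured communication via JSON-RPC" part, the sketch below builds the two requests a client typically sends for tools: one to discover them and one to invoke one. The `tools/list` and `tools/call` method names come from the MCP specification; the tool name `query_retention` and its arguments are hypothetical, and transport, initialization, and responses are left out.

```typescript
// Sketch only: shapes of JSON-RPC 2.0 requests for MCP tool discovery and invocation.

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextRequestId = 1;

function makeRequest(method: string, params?: Record<string, unknown>): JsonRpcRequest {
  const req: JsonRpcRequest = { jsonrpc: "2.0", id: nextRequestId++, method };
  if (params !== undefined) req.params = params;
  return req;
}

// Discovery: ask the server which tools it exposes.
const listToolsRequest = makeRequest("tools/list");

// Invocation: call one tool by name (hypothetical tool and arguments).
const callToolRequest = makeRequest("tools/call", {
  name: "query_retention",
  arguments: { cohort: "2025-01", metric: "d30" },
});

// What would go over the wire.
const wirePayload = JSON.stringify(callToolRequest);
```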

Skills are the recipe. MCP is the kitchen.

A Skill might say "query our analytics database for user retention metrics." MCP is what maintains the connection pool, handles authentication, executes the query, and returns results.

What Skills Actually Replaced

Skills didn’t replace MCP broadly. They effectively supplanted many naive MCP servers that never needed to exist.

Three categories stand out:

1. Simple API wrappers

Many early MCP servers were glorified curl commands with extra infrastructure. A Skill that documents the API endpoint and expected parameters does the same job with zero maintenance overhead.

2. CLI tool wrappers

Building an MCP server for GitHub, AWS, or Docker commands was always over-engineered. Coding agents typically handle `gh pr create` and `aws s3 ls` well - they've encountered these patterns extensively. A Skill documenting your team's conventions is simpler and more effective.

3. Stateless integrations

If your integration doesn't need persistent connections, complex auth, or state management, an MCP server adds complexity without benefit.

Skills didn’t eliminate MCP. They pushed it down the stack. Instead of sitting at the agent boundary for every integration, MCP increasingly handles infrastructure concerns like persistence, authentication, and enterprise SDK complexity, while agents interact through lighter-weight surfaces. That shift is what opened the door to newer patterns focused on reducing context load and simplifying agent ergonomics.

The Token Efficiency Factor

There's a quantitative argument here too. Traditional MCP loads all tool definitions into the agent's context window upfront:

| Approach | Context Cost | Scaling |
|---|---|---|
| 50 MCP tools with full schemas | ~8,000 tokens | Grows linearly with tools |
| Skills file teaching 10 CLI patterns | ~400 tokens | Stays flat |
| Code Mode (agent writes code) | ~200 tokens base | Only imports what's needed |

When you're paying per token and context windows have limits, this difference compounds. Agents complete tasks faster when they're not wading through thousands of tokens of tool definitions they won't use.
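The scaling difference can be worked through with the table's own figures. This is back-of-the-envelope arithmetic, not a measurement: it assumes every tool schema costs the average implied by the table (8,000 / 50 = 160 tokens) and that the Skills file stays at ~400 tokens regardless of tool count. Real schemas vary widely in size.

```typescript
// Sketch only: linear vs. flat context cost, using the figures from the table above.

const TOKENS_PER_TOOL_SCHEMA = 8000 / 50; // 160, the average implied by the table
const SKILL_FILE_TOKENS = 400;            // flat cost from the table

function mcpContextCost(toolCount: number): number {
  // Upfront loading: every tool definition is placed in context.
  return toolCount * TOKENS_PER_TOOL_SCHEMA;
}

function skillContextCost(): number {
  // Assumed flat: the file does not grow with the number of available tools.
  return SKILL_FILE_TOKENS;
}

const contextCosts = [10, 50, 200].map((tools) => ({
  tools,
  mcpTokens: mcpContextCost(tools),
  skillTokens: skillContextCost(),
}));
```

Under these assumptions, 200 tools cost 32,000 tokens before the agent has done any work, which is why the later patterns focus on loading definitions lazily.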

Code Mode: A Middle Path

The token efficiency problem hints at why a third pattern emerged. If Skills are too lightweight for complex integrations and MCP tool schemas are too heavy for context windows, what sits in between?

Code Mode threads this needle: instead of loading hundreds of tool definitions, agents write TypeScript that orchestrates tools programmatically inside a sandbox.

The core insight: LLMs are better at writing code to call tools than at calling tools directly. They've seen vastly more TypeScript during training than tool-calling examples, so a sandbox that accepts code plays to that strength.

Cloudflare popularized this pattern. Rather than loading all tool schemas into the context window, the agent is given a small set of meta-tools and writes code that runs inside an isolated sandbox, with no network access except through bound integrations. For example, Bifrost implements this with four meta-tools: listToolFiles, readToolFile, getToolDocs, and executeToolCode.

The agent writes TypeScript inside the sandbox. Tool bindings provide already-authorized clients, so there are no API keys in prompts and no massive schemas in context. Early adopters report meaningful gains: roughly 50% lower token usage and noticeably faster execution compared to classic MCP tool calling. The sandbox also constrains blast radius if something goes wrong.
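To give a feel for what "the agent writes TypeScript" means in practice, here is a hedged sketch. The `ToolBindings` interface, the `crm` and `billing` clients, and the stub data are all hypothetical; in a real sandbox the bindings would be injected, pre-authorized, and almost certainly asynchronous. The point is that intermediate results stay inside the sandbox and only a compact summary returns to the model.

```typescript
// Sketch only: hypothetical tool bindings and the kind of code an agent might write.

interface Invoice {
  accountId: string;
  amountUsd: number;
  paid: boolean;
}

interface ToolBindings {
  crm: { listAccounts(): string[] };
  billing: { getInvoices(accountId: string): Invoice[] };
}

// Stub bindings standing in for pre-authorized clients (invented data).
const stubBindings: ToolBindings = {
  crm: { listAccounts: () => ["acme", "globex"] },
  billing: {
    getInvoices: (accountId) =>
      accountId === "acme"
        ? [
            { accountId, amountUsd: 1200, paid: false },
            { accountId, amountUsd: 300, paid: true },
          ]
        : [{ accountId, amountUsd: 500, paid: false }],
  },
};

// Agent-written orchestration: loops and filtering happen in code,
// so raw invoices never pass through the model's context.
function unpaidTotals(tools: ToolBindings): Record<string, number> {
  const totals: Record<string, number> = {};
  for (const account of tools.crm.listAccounts()) {
    totals[account] = tools.billing
      .getInvoices(account)
      .filter((inv) => !inv.paid)
      .reduce((sum, inv) => sum + inv.amountUsd, 0);
  }
  return totals;
}

const unpaidSummary = unpaidTotals(stubBindings);
```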

Importantly, Code Mode doesn’t replace MCP. It reframes it. MCP still handles connections, auth, and SDK complexity, but agents interact with it through code instead of JSON-RPC calls. MCP becomes programmable infrastructure rather than an explicit tool surface.

This shift, from “call tools” to “navigate abstractions,” is what enables the next idea.

Filesystem-First Agents (FUSE-style)

One recent blog post by Jakob Emmerling proposed a more radical idea: instead of tools or code bindings, give agents a sandboxed filesystem view of backend systems, often implemented using FUSE.

In this model, APIs, databases, and object stores appear as directories and files, and agents use familiar commands like ls, cat, and grep to explore and operate on them. Under the hood, filesystem operations are translated into real backend calls: listing a directory becomes a query, reading a file fetches data, and writes map to updates.
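The translation from filesystem operations to backend calls can be sketched without FUSE at all. The example below is an in-memory stand-in, not the approach from the blog post: the `backend` object, the `/table/id.json` path layout, and the `ls` and `cat` helpers are assumptions chosen to show the mapping.

```typescript
// Sketch only: path-based operations mapped onto a fake backend.

type Row = Record<string, string | number>;

// In-memory stand-in for real services; each lookup would be a real query.
const backend: Record<string, Row[]> = {
  users: [
    { id: 1, name: "Ada" },
    { id: 2, name: "Linus" },
  ],
  orders: [{ id: 10, userId: 1, total: 42 }],
};

// `ls /` lists tables; `ls /users` becomes a "list rows" query.
function ls(path: string): string[] {
  const parts = path.split("/").filter(Boolean);
  if (parts.length === 0) return Object.keys(backend);
  const rows = backend[parts[0]];
  if (!rows || parts.length > 1) throw new Error(`not a directory: ${path}`);
  return rows.map((row) => `${row["id"]}.json`);
}

// `cat /users/2.json` becomes a "fetch one row" call.
function cat(path: string): string {
  const [table, file] = path.split("/").filter(Boolean);
  const id = Number((file ?? "").replace(/\.json$/, ""));
  const row = (backend[table ?? ""] ?? []).find((r) => r["id"] === id);
  if (!row) throw new Error(`no such file: ${path}`);
  return JSON.stringify(row);
}
```

Calling `ls("/users")` and then `cat("/users/2.json")` mirrors how an agent would explore with shell commands; writes would map to updates in the same way.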

The appeal is conceptual simplicity. Rather than teaching an agent dozens of tools, you give it a structured tree to navigate. Partial results can live on disk instead of inside the model’s context window.

This doesn’t replace MCP. MCP would typically still sit underneath as the adapter to real services. The filesystem is just a higher-level interface. I haven’t seen this pattern in production yet, but it’s an interesting way to think about collapsing tools and resources into a single navigable surface.

Where MCP Remains Essential

Here's what Skills and Code Mode can't replace - the use cases where MCP genuinely adds value:

1. Persistent connections and state

When your agent needs to run multiple queries against a database, you don't want to open a new connection each time. MCP servers maintain connection pools, handle transactions, and manage session state across operations. This is why you see dedicated MCP servers for PostgreSQL, MongoDB, and Snowflake rather than Skills wrapping SQL commands.

2. Complex authentication

OAuth 2.0 with token refresh, SAML, enterprise SSO - these flows are genuinely hard to get right. Salesforce, for example, launched hosted MCP servers in beta last year specifically because their OAuth implementation has enough quirks that centralizing it makes sense.

3. Enterprise SDK complexity

Some systems are simply painful to integrate directly. Salesforce spans multiple APIs, SAP requires specialized connection handling, and platforms like Zapier wrap thousands of integrations behind a single surface. These aren’t “just use curl” cases - MCP absorbs complexity that would otherwise leak into every agent interaction.

4. Performance-critical operations

Starting a Python interpreter on every call adds ~2 seconds of latency. An MCP server loads once and stays resident. For agents making hundreds of calls in a workflow - think data pipelines or batch operations - this difference compounds quickly.

5. Non-coding environments

Browser extensions, mobile apps, voice assistants, no-code platforms. These can't execute shell commands.
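The performance point (item 4) is easy to quantify. The sketch below uses the ~2 second startup figure quoted above and assumes a resident server pays that cost once; it ignores the per-request work, which is the same in both cases. The `ResidentServer` class is a toy model, not an MCP implementation.

```typescript
// Sketch only: startup overhead for per-call processes vs. a resident server.

const STARTUP_SECONDS = 2; // figure quoted in the text above

function perCallOverheadSeconds(calls: number): number {
  // A fresh interpreter is started for every call.
  return calls * STARTUP_SECONDS;
}

function residentOverheadSeconds(calls: number): number {
  // The server starts once, then stays loaded.
  return calls > 0 ? STARTUP_SECONDS : 0;
}

// Toy model of a resident process: initialization happens on first use only.
class ResidentServer {
  startups = 0;
  private ready = false;

  handle(request: string): string {
    if (!this.ready) {
      this.startups++;
      this.ready = true;
    }
    return `handled:${request}`;
  }
}

const residentServer = new ResidentServer();
for (let i = 0; i < 300; i++) {
  residentServer.handle(`query-${i}`);
}
```

For a 300-call workflow, that is 600 seconds of pure startup overhead versus 2 under these assumptions. The same reuse argument applies to connection pools and session state in item 1.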

The Security Trade-offs: Architecture Matters

Last year's security landscape revealed that both approaches have vulnerabilities, but different ones, each rooted in its architecture.

MCP centralizes risk. Tool poisoning exploits self-describing tools - malicious instructions hidden in tool descriptions can manipulate agent behavior. Permission sprawl happens because scoping requires explicit design. A compromised MCP server affects every agent using it. Real vulnerabilities like CVE-2025-6514 in mcp-remote affected 437,000+ environments.

Shell access distributes risk. No single point of compromise, but also no built-in guardrails or audit trails. Agents have whatever permissions the shell has. Exfiltration is trivial - agents can write scripts uploading files anywhere.

AI gateways address much of this. Many of the security challenges above aren't inherent to MCP - they're symptoms of running MCP servers without governance. Instead of connecting agents directly to MCP servers, route through a gateway that filters exposed tools, requires approval for dangerous operations, and logs everything. Bifrost, for example, lets teams scope tool access (a server exposes 50 tools, you allow 5), choose execution modes (manual approval vs. auto-execute), and generate audit logs for compliance.
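The three gateway responsibilities (filter, approve, log) fit in a small sketch. This is not Bifrost's API or any real gateway's; the `ToolGateway` class, the mode names, and the tool names are hypothetical and only illustrate the separation of concerns.

```typescript
// Sketch only: a toy gateway that scopes tools, gates execution, and records an audit trail.

type ExecutionMode = "auto" | "manual";
type Decision = "executed" | "pending_approval" | "blocked";

interface AuditEntry {
  tool: string;
  decision: Decision;
}

class ToolGateway {
  readonly audit: AuditEntry[] = [];
  private readonly allowed: Map<string, ExecutionMode>;
  private readonly serverTools: string[];

  constructor(allowed: Map<string, ExecutionMode>, serverTools: string[]) {
    this.allowed = allowed;
    this.serverTools = serverTools;
  }

  // Only allowlisted tools are ever shown to the agent.
  exposedTools(): string[] {
    return this.serverTools.filter((tool) => this.allowed.has(tool));
  }

  // Every request is logged, whatever the outcome.
  request(tool: string): Decision {
    const mode = this.allowed.get(tool);
    const decision: Decision =
      mode === undefined ? "blocked" : mode === "auto" ? "executed" : "pending_approval";
    this.audit.push({ tool, decision });
    return decision;
  }
}

// Hypothetical server exposing four tools; policy allows two of them.
const gateway = new ToolGateway(
  new Map<string, ExecutionMode>([
    ["read_record", "auto"],
    ["delete_record", "manual"],
  ]),
  ["read_record", "delete_record", "drop_table", "export_all"]
);
```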

The key insight: MCP provides capability, the gateway provides governance. Production teams are converging on this separation.

What Mature Teams Actually Do

Most production teams end up using both.

A typical production setup:

| Layer | Technology | Examples |
|---|---|---|
| Guidance | Skills | Git conventions, API documentation, workflow patterns |
| Simple execution | Shell access | CLI tools, one-off API calls, data transformations |
| Complex execution | MCP servers | Databases, OAuth integrations, enterprise systems |

The decision framework:

- Stateless + simple auth? → Skills + shell

- Persistent connections needed? → MCP

- Complex OAuth or enterprise SDK? → MCP

- High-frequency operations? → MCP

- Non-coding environment? → MCP

- Standard CLI tools? → Skills + shell
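The checklist above can be read as a short function. This is one possible encoding, with assumed field names and an assumed precedence: any MCP-leaning answer wins, and Skills plus shell is the default otherwise.

```typescript
// Sketch only: the decision framework as code, under an assumed precedence.

interface IntegrationProfile {
  canRunShell: boolean;            // false for browser extensions, mobile apps, voice, no-code
  persistentConnections: boolean;  // connection pools, transactions, session state
  complexAuthOrSdk: boolean;       // OAuth with refresh, SSO, enterprise SDKs
  highFrequency: boolean;          // hundreds of calls per workflow
}

type Recommendation = "MCP" | "Skills + shell";

function recommend(profile: IntegrationProfile): Recommendation {
  if (!profile.canRunShell) return "MCP";
  if (profile.persistentConnections || profile.complexAuthOrSdk || profile.highFrequency) {
    return "MCP";
  }
  return "Skills + shell";
}

// A standard CLI tool in a coding environment.
const cliToolChoice = recommend({
  canRunShell: true,
  persistentConnections: false,
  complexAuthOrSdk: false,
  highFrequency: false,
});

// A database integration that needs a connection pool.
const databaseChoice = recommend({
  canRunShell: true,
  persistentConnections: true,
  complexAuthOrSdk: false,
  highFrequency: true,
});
```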

Conclusion

The "Skills killed MCP" narrative contained a kernel of truth: many MCP servers were unnecessary complexity. Skills and shell access exposed that.

But the full story is about right-sizing. MCP didn’t die. It moved down the stack. Skills didn’t replace MCP. They handled guidance MCP was never designed for. Code Mode reframed MCP as programmable infrastructure. Filesystem-style interfaces hint at ways to simplify how agents interact with systems. Underneath it all, MCP continues to do what it does best: persistent connections, authentication, and enterprise integration.

Anthropic's decision to donate MCP to the Linux Foundation wasn't a retreat. It was recognition that MCP's value lies in complex, stateful integrations - exactly where open governance and vendor neutrality matter most.

This isn't a technology dying. It's an ecosystem maturing from "MCP for everything" to a layered architecture where each component does what it does best.

Skills teach agents how to work. MCP gives agents the infrastructure to work.

Both add value. Both survived. And the teams building production agents are using both.