Building and Evaluating a Reddit Insights Agent with Gumloop and Maxim AI

Reddit is one of the internet’s most valuable data sources, and also one of the most chaotic. Somewhere between the hot takes on r/technology and the unsolicited growth advice on r/marketing, there are real signals hiding in plain sight: what people are building, breaking, hyping up, or tearing down.

The challenge? Surfacing those insights usually means opening dozens of tabs, sifting through a flood of sarcasm, abuse, memes, and half-baked opinions, often for a single usable takeaway.

Despite the mess, Reddit’s anonymity fosters raw, honest perspectives that marketers, PMs, and content teams crave.

That’s why we built a Gumloop workflow powered by LLMs to cut through the chaos. Give it a topic and a subreddit, and it analyzes the most engaging discussions from the past week, summarizing sentiment and surfacing insights.

In this blog I will walk you step by step on how I built an AI workflow to automate the process of gathering marketing Insights from Reddit using Gumloop and then how I evaluated and improved the workflow using Maxim’s Evals Platform.

It’s like getting all the Reddit signal, without the scrolling, swearing, or suffering.

Building the workflow

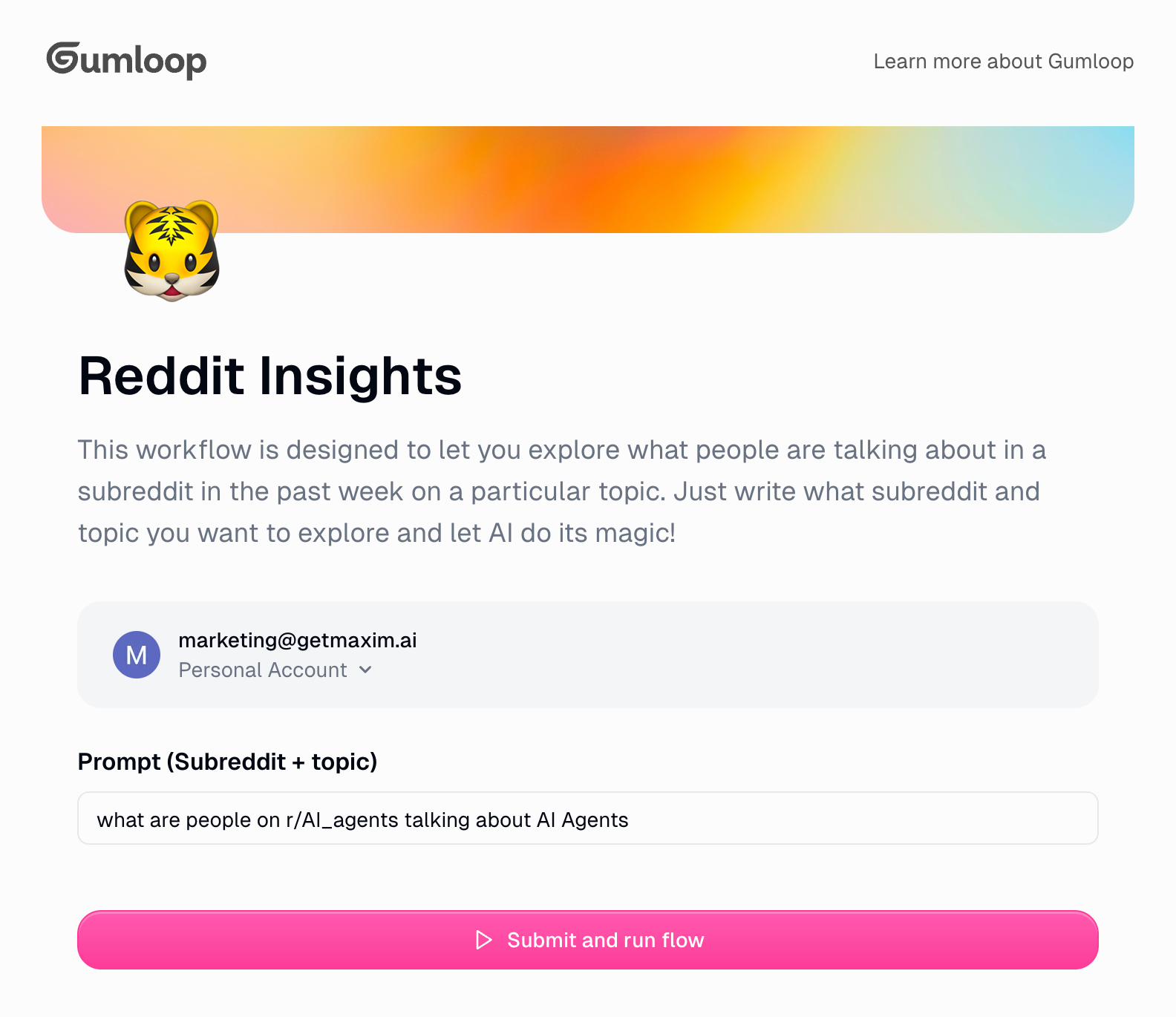

We want this workflow to be accessible to all of our team members who could query reddit in simple english mentioning the subreddit and the topic of choice. Our end-goal is to have a workflow that could be triggered by simply writing “What are people talking about in r/AI_Agents on AI Agents” and the Workflow should be able to fetch relevant posts from Reddit, analyse the posts and return a detailed response.

Adding an Interface

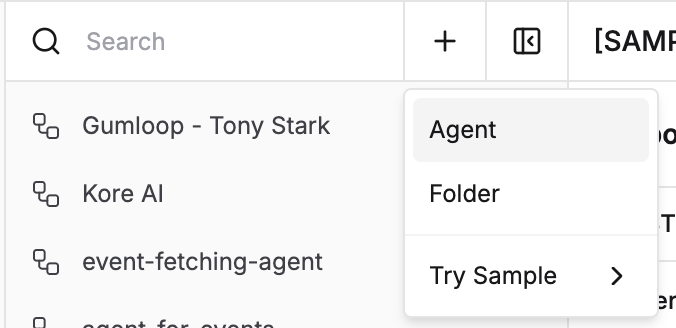

In order to make the workflow accessible to users we will be using a gumloop interface to let users interact with the workflow.

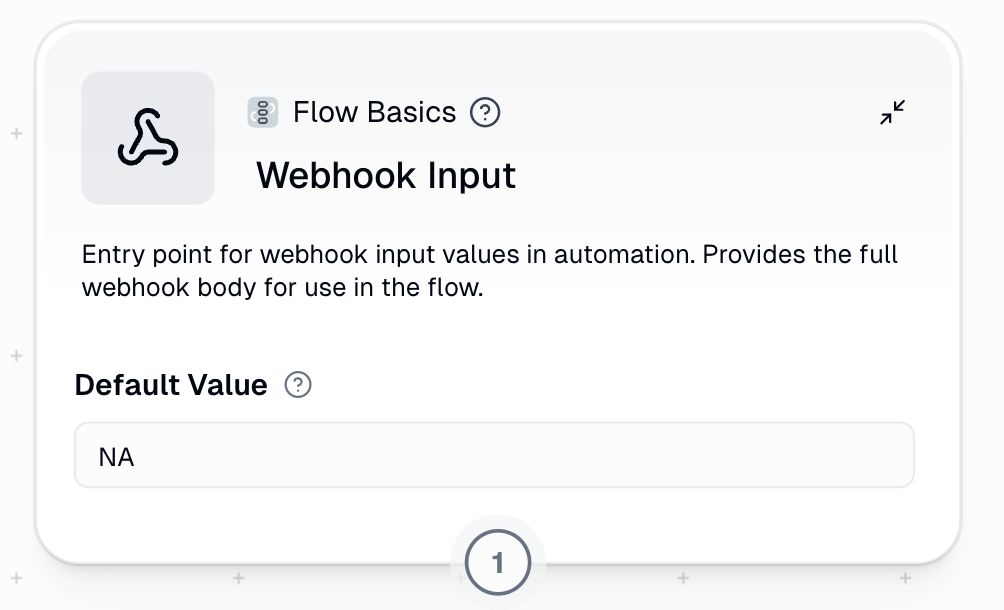

Webhook

Since, we will be evaluating the workflow using an API call, we will also be creating an webhook node for the workflow.

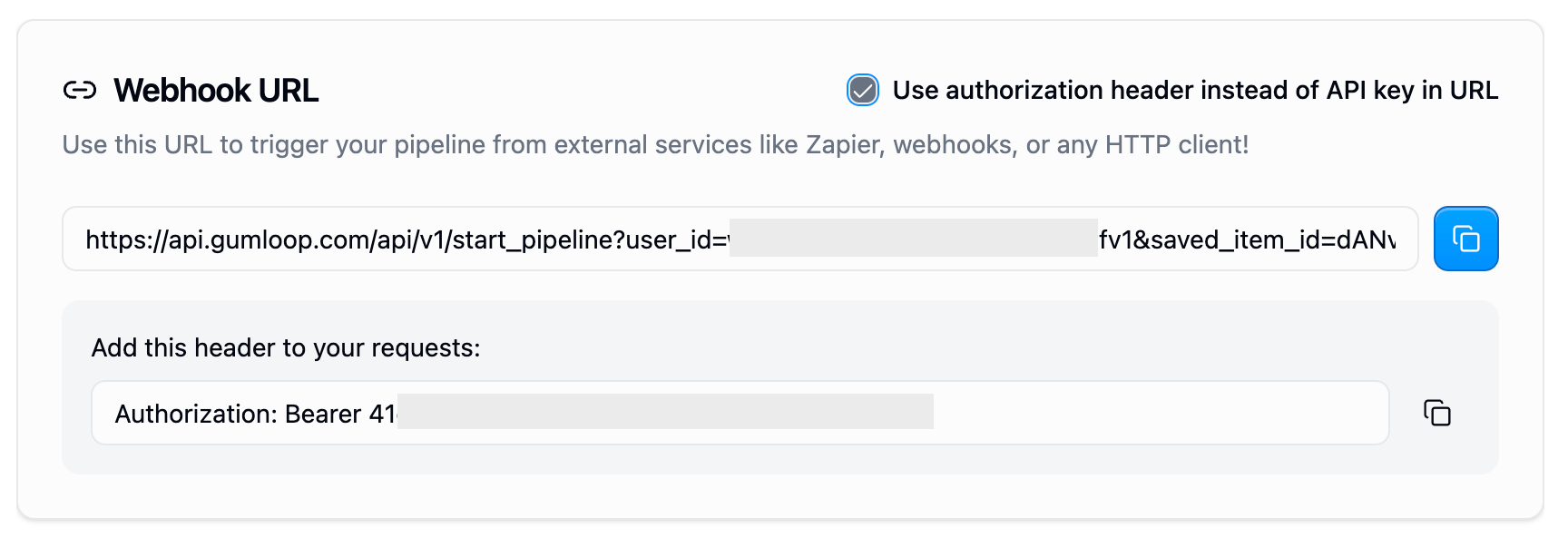

We need to get the webhook details so that we are able to hit and query the workflow using an API call. You can do this by clicking on the Webhook button in the nav bar on the gumloop workflow builder screen.

You can get the Authorization header and the webhook endpoint you will be needing to trigger the webhook, we will be needing these later.

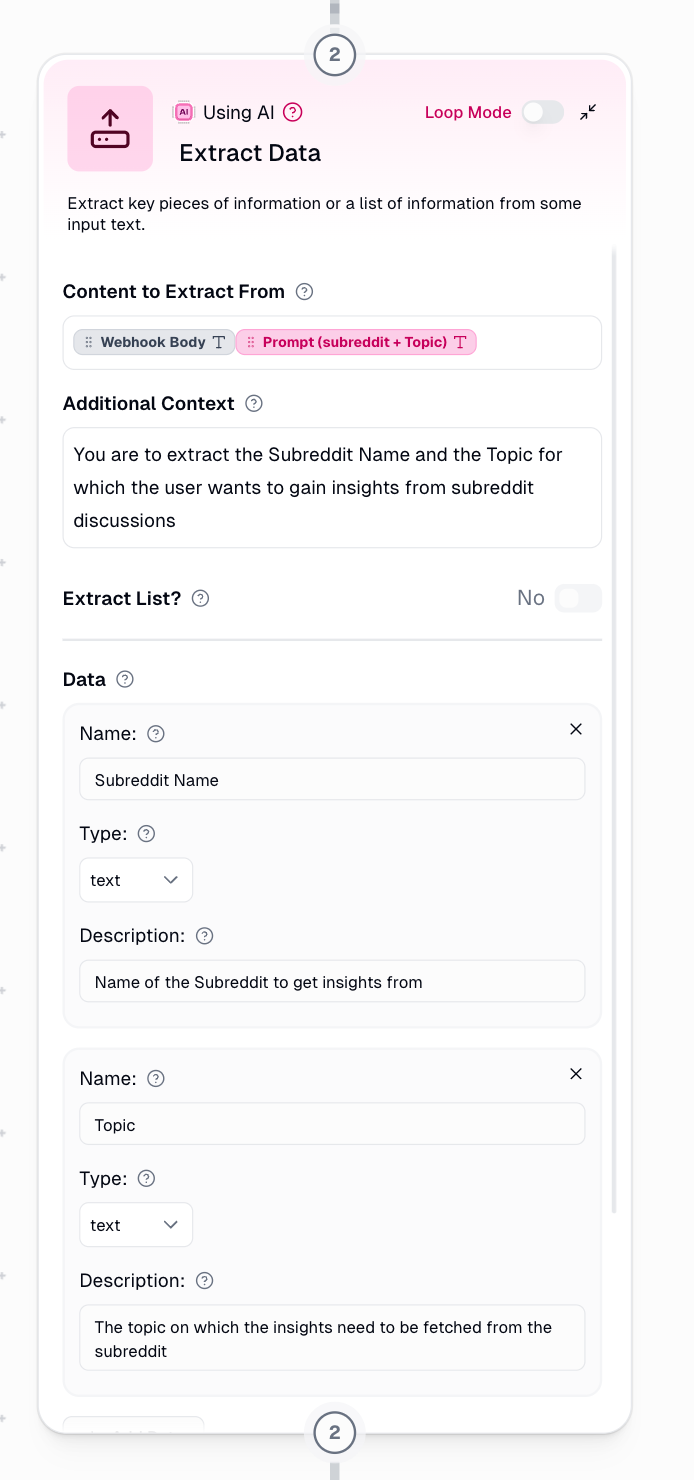

Extract the subreddit and the topic

We will connect the webhook and the interface nodes with the extract data node so that we can use an LLM to extract the name of the subreddit and the topic the user wants to get insights on from reddit. The image below features how I did it in my own workflow. You could play with the Additional Context Prompt or make this more exhaustive by adding more parameters or optional tweaks to make your system even more intensive.

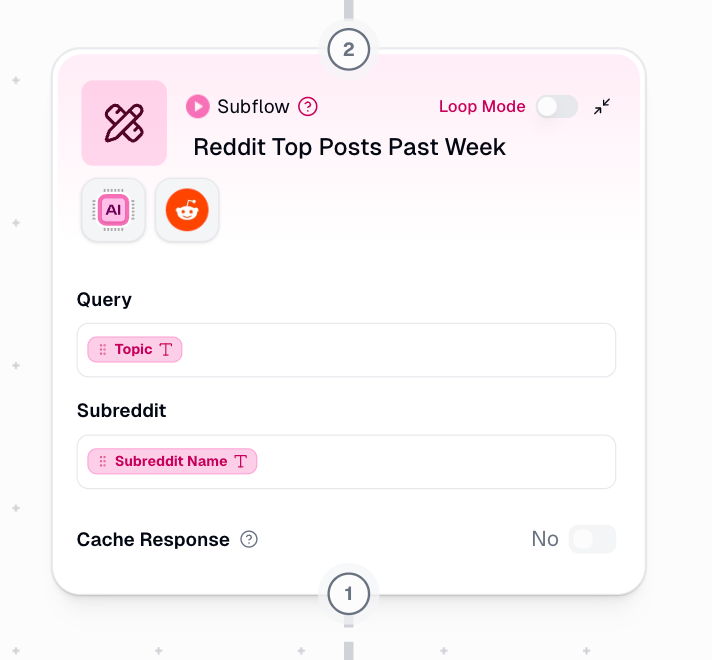

Reddit Subflow

We will use the extracted subreddit and the topic in a subflow to get posts, process them and return us the response. In order to keep the workflow simple we will be using a gumloop subflow node. You could add more subflows to implement more complex logic and operations in your workflow.

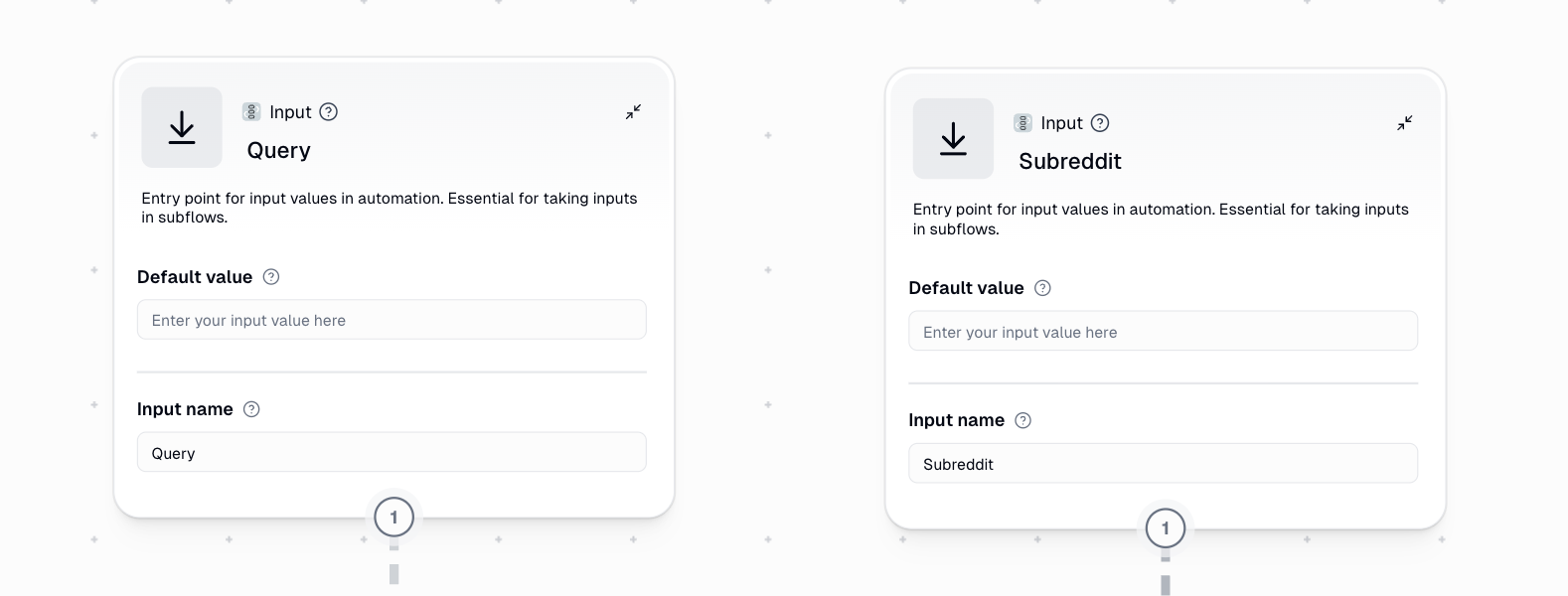

Our subflow takes in two inputs, the name of the subreddit and the query topic.

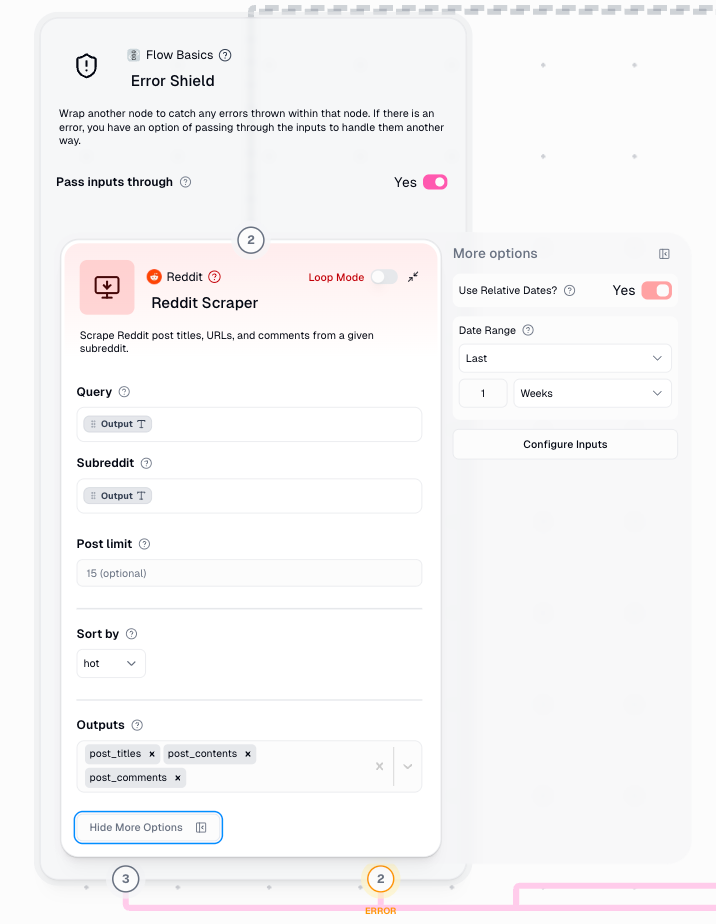

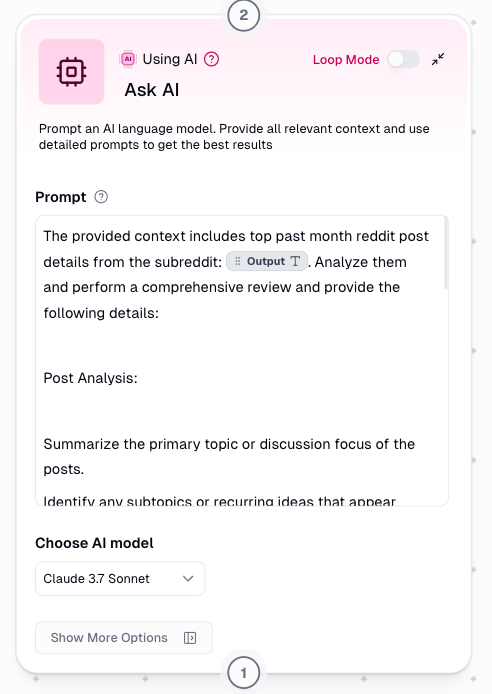

We wrapped a Reddit Scraper node in an Error Shield node to make sure that, in case the reddit scraping node faces an issue, we are able to handle the errors graciously. We pass the Subreddit and the Query to the Reddit Scraper node, select last 1 week as the Data Range and sort the posts by their engagement. So that we only fetch the posts that are the most engaging. Optionally, you could add a limit to the number of posts you want to fetch.

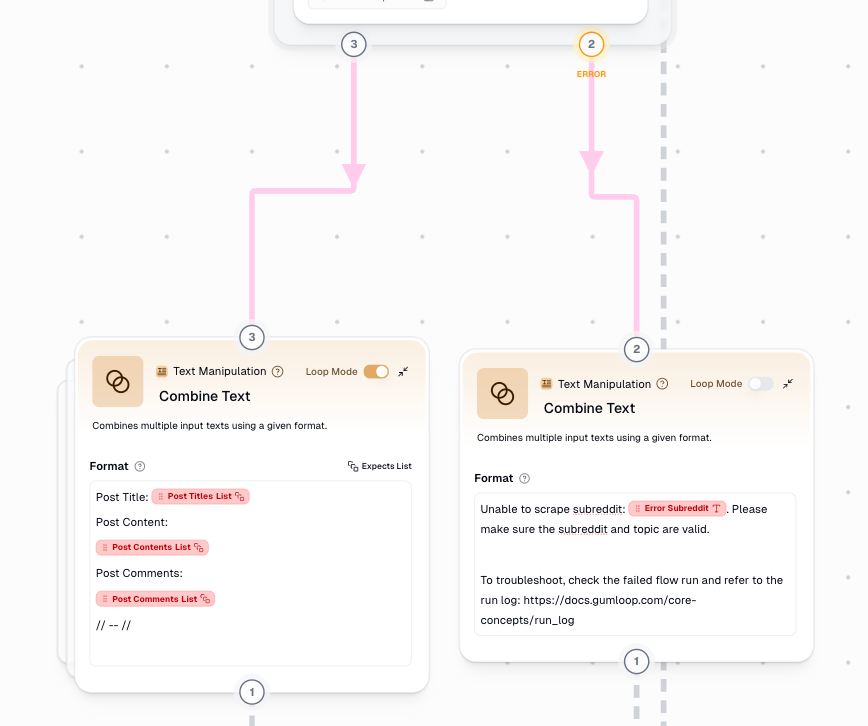

We connect the Output and the Error endpoints of the Error Shield Node and connect them with two text manipulation nodes, the 1st node creates a list of Reddit Posts configuring the post titles, content and the comments into a uniform structure and the second node handles the errors and returns a standard message in case the workflow fails to scrape reddit, either due to a mistake in processing the query or due to unavailable data for the query.

We connect the Error node to an output node so that in case the workflow fails to get reddit posts, it is able to communicate this to the user to try again.

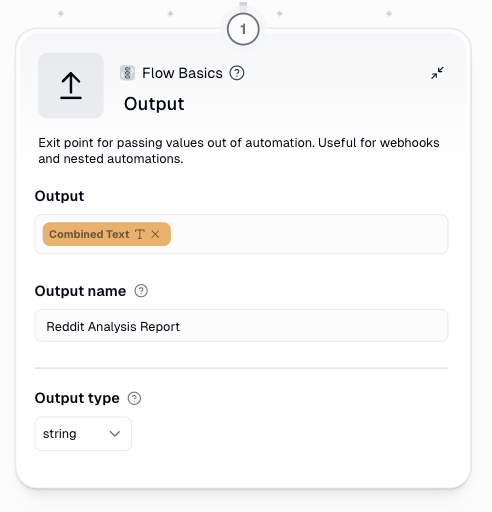

After creating the reddit post list we use a combine list node to combine all the list items into a single piece of text.

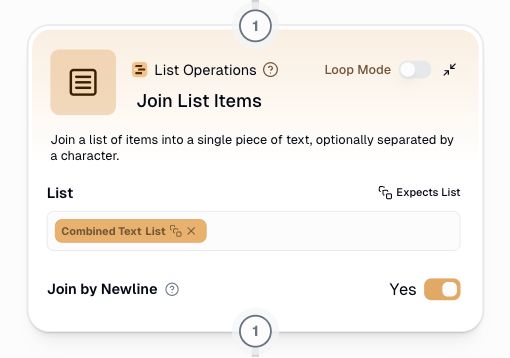

Next we pass the posts to an LLM as context and write a detailed prompt for it to process the scraped data and generate the output.

Here is the full prompt:

The provided context includes top past month reddit post details from the subreddit: {output__NODE_ID__:9AfBfoFsD6WyJ1i6bg2jJt}. Analyze them and perform a comprehensive review and provide the following details:

Post Analysis:

Summarize the primary topic or discussion focus of the posts.

Identify any subtopics or recurring ideas that appear across multiple posts.

Include examples or quotes (if provided) to illustrate key points.

Sentiment Analysis:

Determine the overall sentiment of the posts (positive, negative, neutral).

Highlight phrases or language that contribute to the sentiment.

Identify emotional trends or shifts (e.g., increasing negativity or optimism) if visible across posts.

Insights:

Extract actionable insights, such as new perspectives, audience concerns, or trends.

Highlight any surprising or outlier information that stands apart from the main themes.

Provide context on why these insights matter and how they could inform further actions or decisions.

Ensure the output is structured clearly with headings for Post Analysis, Sentiment Analysis, and Insights. Keep the tone analytical and professional, and ensure the responses are detailed but concise. If data is missing or ambiguous, note any limitations.

SUBREDDIT CONTENT:

{joined text__NODE_ID__:3Em9t1JeaBL8wVd9gvntLc}

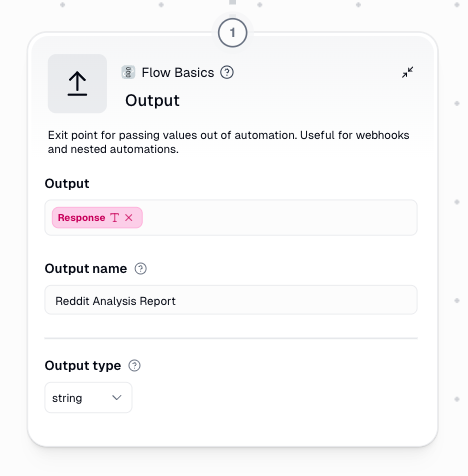

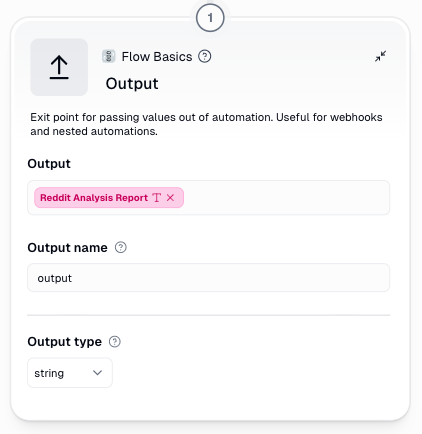

In the final step of the subflow we output the generated text using the output node.

Output Node

The output from the subflow is passed to the main flow during the workflow run, to send the response back, we add a final output node in the main flow.

You can find the full workflow here - Gumloop Workflow

Running the workflow

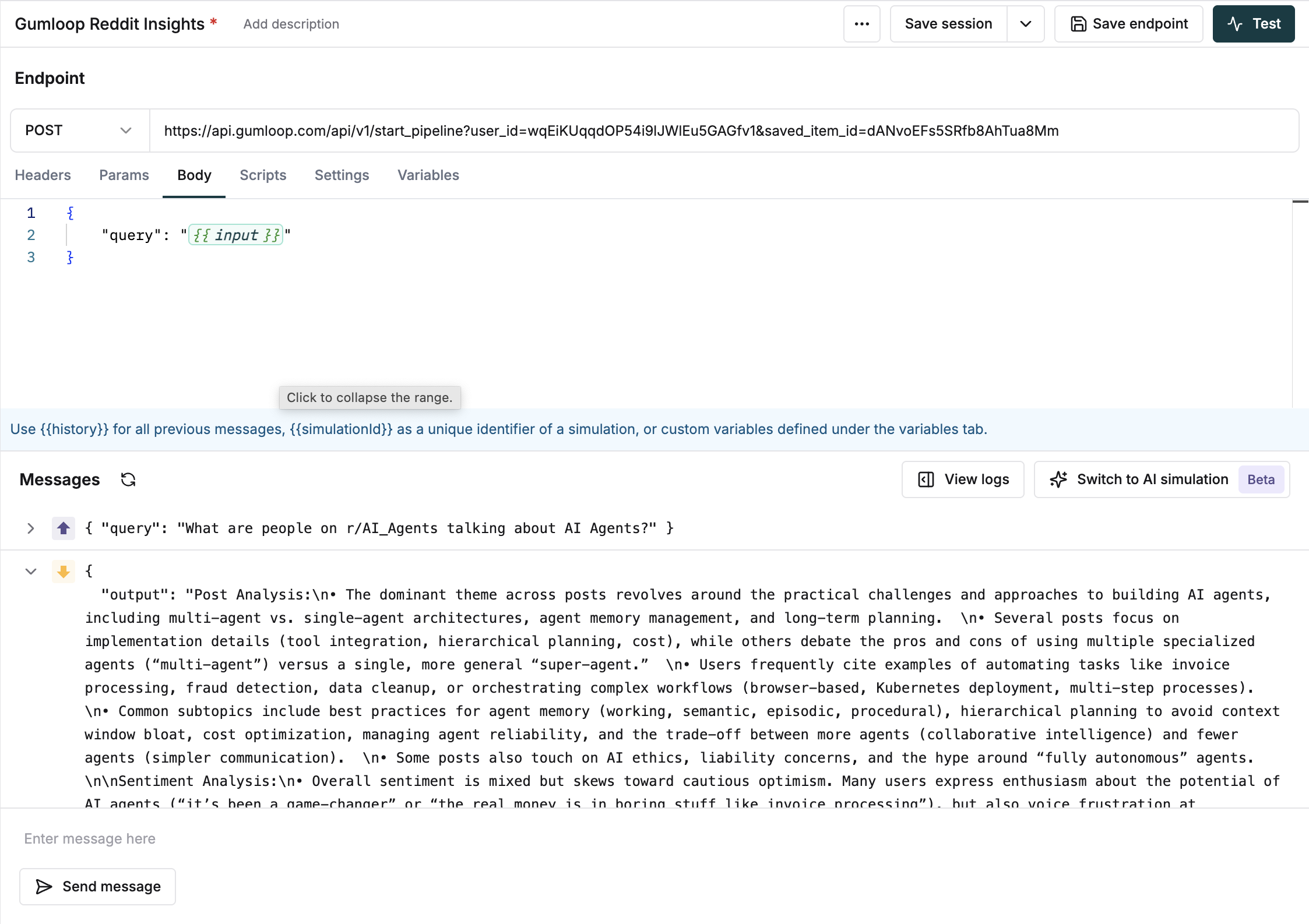

Now that our workflow is ready, we can run it using the interface using the interface URL and opening it in a browser.

When we run the workflow we can see the response from the LLM:

output

Post Analysis

Several posts focus on practical experiences and challenges related to building, deploying, and maintaining AI agents. They discuss real-world use cases such as fraud detection, e-commerce personalization, healthcare triage, and compliance checks. Recurring topics include:

Comparing single-agent versus multi-agent approaches: arguments around task specialization, collaborative workflows, and the difficulty in orchestrating multiple agents.

Maintenance and complexity: an emphasis on continuous monitoring, human-in-the-loop oversight, and the high upfront/ongoing costs.

Memory management and context: numerous discussions on handling state/long-term memory, mitigating context window limitations, and hierarchical planning strategies.

Cost and ROI: reflection on whether automation justifies monthly fees and whether agent solutions reliably deliver time or money savings.

Tooling: references to frameworks like LangChain, CrewAI, n8n, and more advanced solutions (Kubernetes deployments, orchestrator agents, etc.) to streamline building multi-step or multi-agent systems.

Throughout multiple posts, people share experiences of agents breaking down, losing context, or failing to handle edge cases without human supervision. Some participants advocate for focusing on “boring but essential” automation tasks that produce clear, measurable benefits.

Sentiment Analysis

Overall sentiment trends toward cautious optimism. Many posts celebrate the potential of agent-based systems but highlight significant effort and complexity. Enthusiasm appears high for specialized and practical automations (e.g., invoice processing, data cleanup). Skepticism surfaces around “fully autonomous” claims and hype-driven marketing, reflecting a desire for realistic outcomes. A few comments show frustration (words like “snake oil,” “dogshit”) directed at overly generic or low-quality AI agent content. However, the main tone is constructive, with participants offering solutions and sharing best practices.

Insights

Focus on Practical Automation: There is a strong theme that pragmatic, smaller-scale tasks—like compliance checks or data reconciliation—yield clearer returns than broad, “do-it-all” agents.

Multi-Agent Complexity vs. Single-Agent Simplicity: Multi-agent systems can outperform single agents on complex tasks but introduce orchestration overhead. This parallels organizational design and emphasizes the importance of memory handling and specialized sub-agents.

Human Oversight Remains Essential: Few (if any) users report success with fully autonomous solutions. Human-in-the-loop checkpoints continue to be key for accuracy, trust, and liability concerns.

Increasing Need for Robust Tools: Many posts express demand for visual, secure, and auditable frameworks (e.g., flowchart-based orchestration, centralized memory stores, robust logging) to simplify building and managing agents.

Cost–Benefit Analysis: Participants frequently mention the tipping point at which agent maintenance efforts and subscription/API fees are justified. Effective solutions typically aim for time savings in high-volume or repetitive tasks.

These insights reflect a growing shift toward deeper specialization, clarity on scope, and robust coordination strategies for multi-agent architectures. They also highlight the community’s desire for reliable, easy-to-integrate tools and realistic, high-value use cases.

What the workflow returned wasn’t just a surface-level digest, it was a grounded, detailed pulse-check from the subreddit discussions. You can see the Reddit community pulling away from shiny, overpromised agent hype and steering toward dependable, ROI-driven automation. The kind of stuff that works quietly, not dramatically. It flagged critical pain points: memory, orchestration, oversight, etc. while also revealing an appetite for simpler, toolable systems that can actually scale. All of this without scrolling past a single troll comment or meme all done by AI.

The necessity for Evaluation

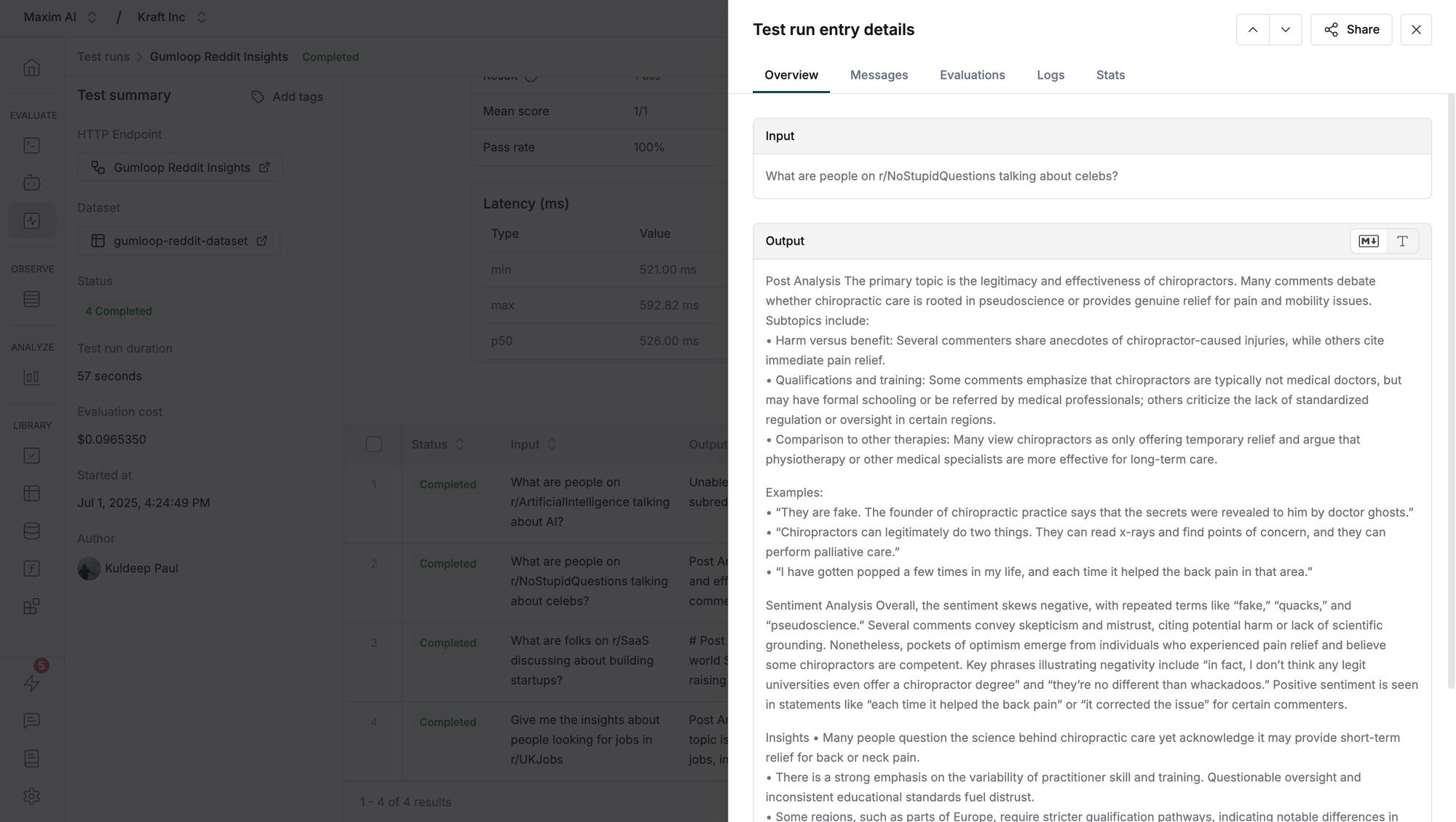

Reddit is a firehose of raw opinions, emotional debates, cultural takes, and community banter. The challenge is not just collecting that information, but transforming it into something structured, clear, and insightful. We asked our workflow a simple question:

“What are people on r/NoStupidQuestions talking about celebs?”

This was the output:

output

Post Analysis

Celebrity Finances After Big Trials

• Several comments focus on whether celebrities (e.g., P-Diddy) face severe financial consequences after lengthy and expensive legal battles.

• Subtopics include:

– Legal fees and their impact on a celebrity’s liquid assets.

– Public image damage and its effect on residual income.

– Possibility of asset forfeiture and fines that could deplete wealth.

– Historical examples (e.g., O.J. Simpson, Robert Downey Jr.) illustrating varied financial and reputational outcomes post-trial.

YouTubers as Celebrities

• Another thread debates if YouTubers are truly “celebrities” given that many older adults either don’t know them or don’t take them seriously.

• Subtopics include:

– The evolution of celebrity status beyond traditional actors, musicians, or TV personalities.

– Comparisons to mainstream figures (MrBeast, Logan Paul, James Charles).

– Recognition that a celebrity is essentially anyone with fame or broad public recognition.

Antisemitic Stereotypes

• One post centers on harmful stereotypes about Jews “loving money,” with the poster citing extreme views from a family member.

• Subtopics include:

– Historical roots of the stereotype: Christian and Islamic prohibitions on moneylending.

– The role of Jewish communities in banking, leading to widespread misconceptions.

– Overt hate speech and references to historical persecutions (“they killed Jesus,” references to Hitler).

Sentiment Analysis

• Overall Sentiment: Mixed, though it tilts negative due to the explicit hate speech and concerns about financial hardship.

– In the posts about celebrity finances, the tone is largely curious or neutral, with some cautious optimism that celebrities often remain financially secure.

– The discussion around YouTubers is mostly neutral to positive, focusing on changing definitions of fame.

– The antisemitic content introduces a strongly negative tone, revealing anger, hostility, and hateful language directed at Jewish people.

• Emotional Trends:

– From the celebrity finance threads, sentiment ranges from pragmatic concern to dismissive confidence (“He’s gonna have plenty of money”).

– In the YouTuber celebrity post, the sentiment remains neutral or mildly positive, with a focus on generational differences in how fame is perceived.

– In the antisemitic thread, the language is aggressive, hateful, and indicates a strong negative emotional charge.

Insights

• Celebrities and Financial Resilience: Despite the high costs of legal battles and hits to reputation, many celebrities maintain diverse assets and revenue streams that can leave them financially stable in the long run.

• Broader Definition of Celebrity: The conversation suggests a growing acceptance of internet personalities as mainstream celebrities, indicating a shift in how fame is recognized across different age groups.

• Persistent Negative Stereotypes: The antisemitic thread underscores how deeply ingrained and harmful stereotypes can be, highlighting a need for more awareness and education about historical contexts.

• Potential Impact on Community Discussions: These posts reveal a community interest in celebrity status, cultural stereotypes, and societal attitudes, which can help guide moderation strategies or educational resources to address misinformation and hatred.

While the response was well-organized and informative, it exposed a few recurring issues. Certain sections felt bloated with redundant phrasing and at times, the narrative structure drifted. These are not just cosmetic flaws, they affect how useful and readable the output really is. It is important for the output generated by the LLM to be clear, concise and coherent.

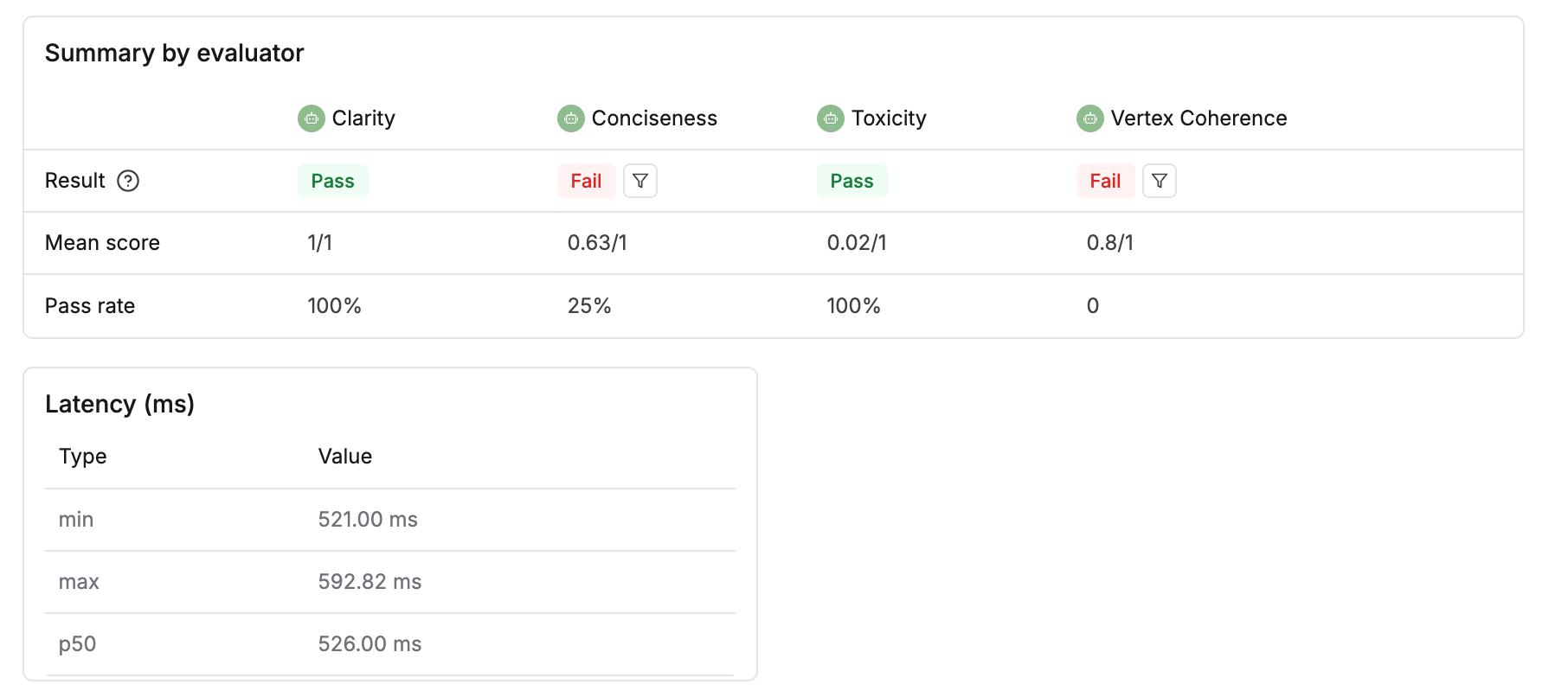

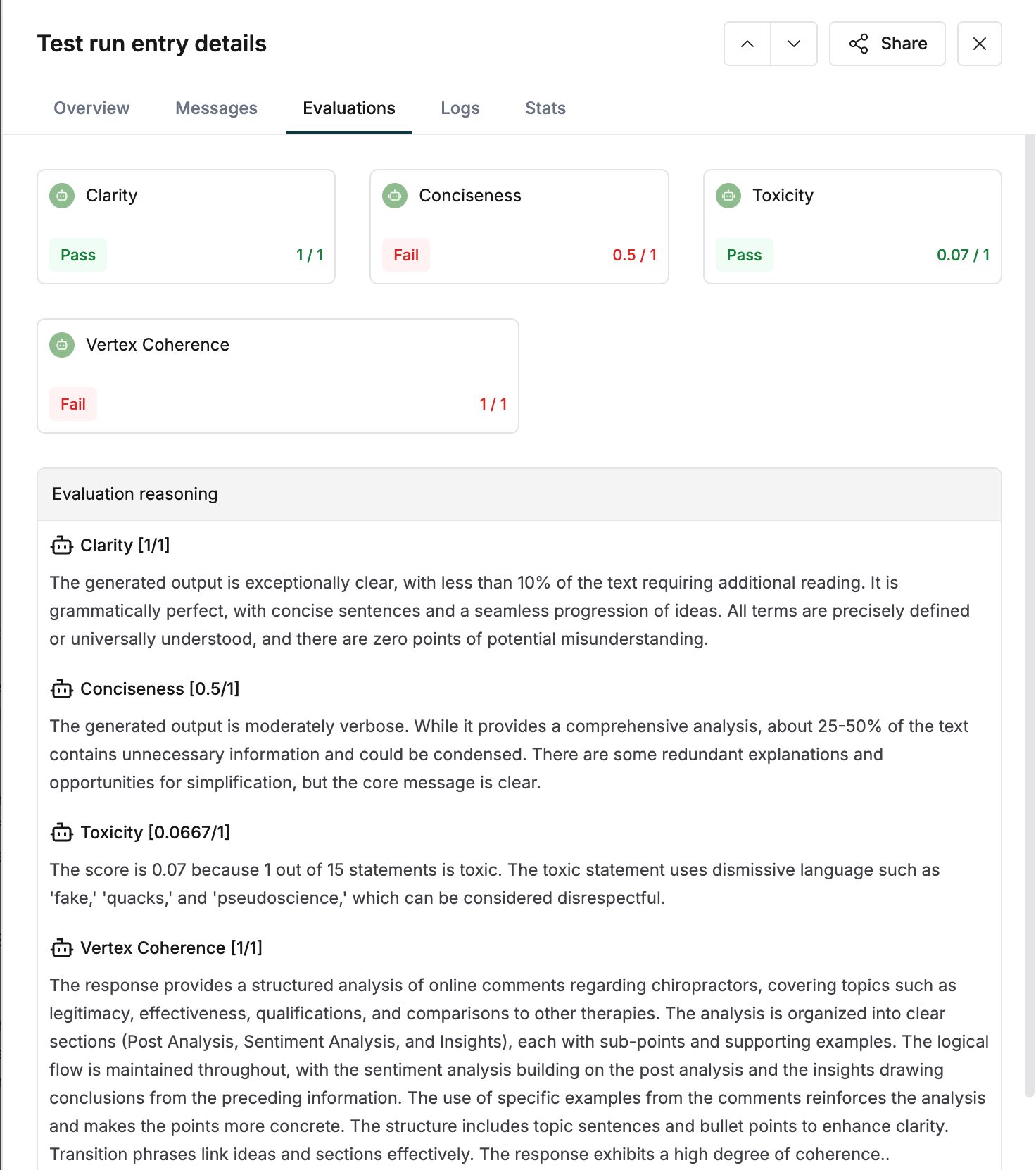

That is why we need evaluation. Not just to verify that the workflow runs, but to ensure that the outputs are tight, articulate and coherent. With Maxim AI, we ran targeted evaluations using three key metrics: Clarity, Conciseness, and Vertex Coherence. Each of these evaluators reveals a different layer of quality, helping us identify when the response feels bloated, when it becomes unclear, or when sections fail to connect meaningfully.

Maxim lets you go beyond gut feel. You can test your workflow at scale, simulate multiple user queries, and refine your prompts based on detailed evaluation feedback. The result is a workflow that doesn’t just respond to Reddit, but interprets it with confidence, precision, and polish.

Evaluation is what turns messy output into usable insight. It’s how we bring editorial quality into AI-generated content.

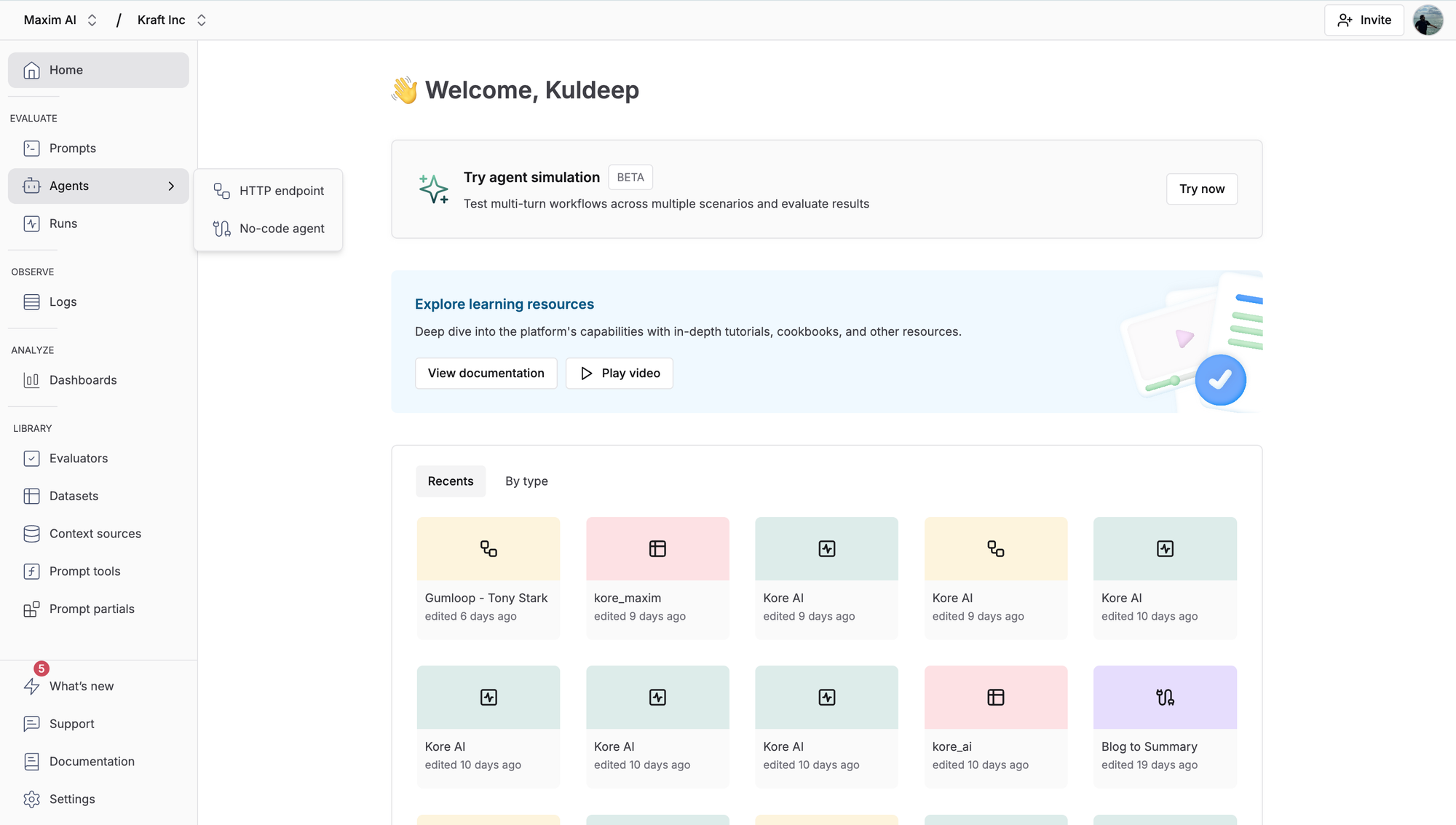

Evaluating the Gumloop Reddit Insights Workflow with Maxim AI

Connecting the Gumloop Agent to Maxim AI

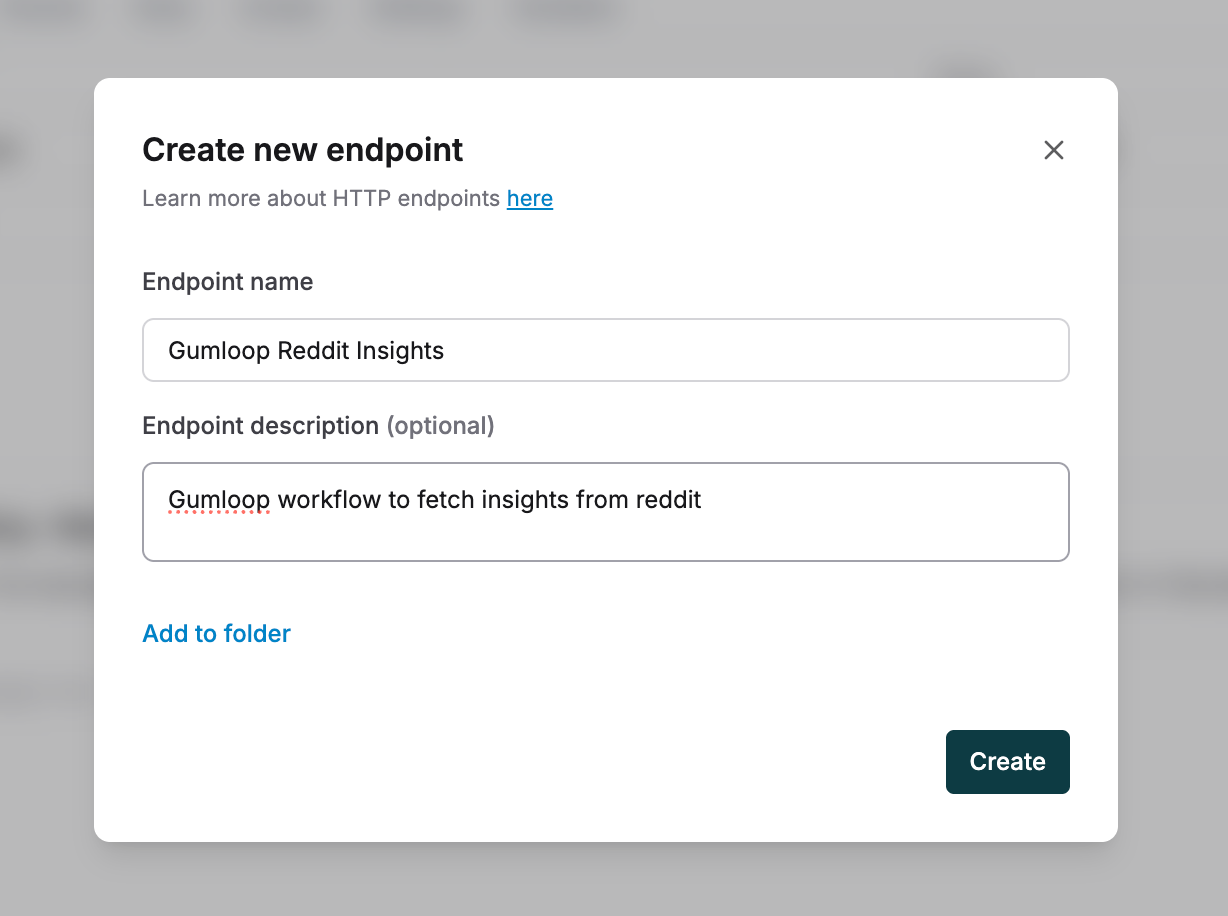

First we need to login to Maxim Login, if you don’t have an account yet, you can sign up for a free account using Maxim Signup. Once inside you can navigate to the Agents Tab and select HTTP endpoint.

You can create a new Agent by adding an endpoint Name and Description:

Lets name our endpoint “Gumloop Reddit Insights”.

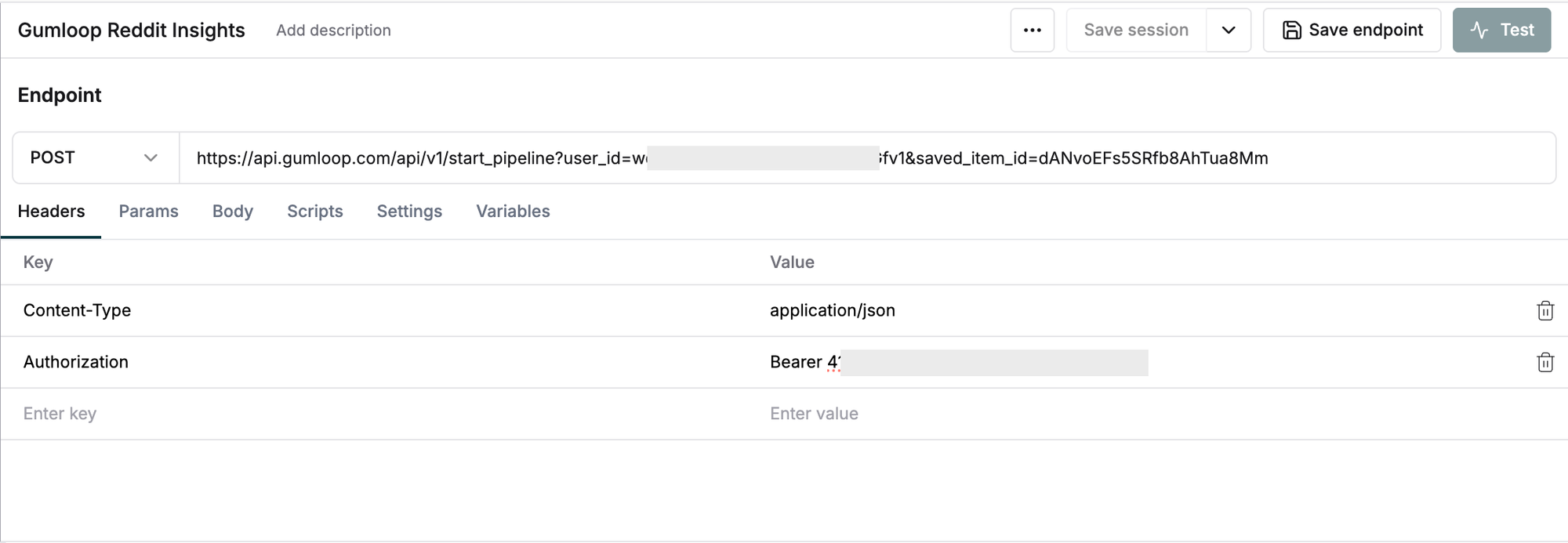

We then add the webhook URL and the Authorization Header from the Gumloop Webhook Modal to the Endpoint on Maxim’s platform.

Gumloop returns a run_id when you trigger the workflow and you need to fetch the output by polling this api endpoint - https://api.gumloop.com/api/v1/get_pl_run?run_id=ParseError: KaTeX parse error: Expected 'EOF', got '&' at position 8: {runId}&̲user_id={userId}. In order to do this we need to create a function postscriptV2 in the scripts tab of our endpoint. The code for this function is given below, you can get the userId from the url query you copied from the webhook credentials.

async function postscriptV2(response, request) {

const runId = response.data.run_id;

const userId = "########";

const maxRetries = 30; // Will retry for up to 30 minutes (1 min per try)

const delay = (ms) => new Promise(resolve => setTimeout(resolve, 30000));

let attempt = 0;

let output;

console.log("Polling for outputs for run:", runId);

while (attempt < maxRetries) {

console.log(`Attempt ${attempt + 1}: Checking status...`);

const jobResponse = await fetch(`https://api.gumloop.com/api/v1/get_pl_run?run_id=${runId}&user_id=${userId}`, {

method: "GET",

headers: {

Authorization: `Bearer #######`

}

});

const data = await jobResponse.json();

console.log("API response:", data);

if (data.outputs && Object.keys(data.outputs).length > 0) {

output = data.outputs;

break;

}

attempt++;

console.log("No outputs yet, waiting 1 minute...");

await delay(60000); // Wait 1 minute

}

if (output) {

return output;

} else {

return {

status: "TIMEOUT",

message: "Job did not complete after maximum retries",

run_id: runId

};

}

}

Save the endpoint and type in your message into the given field and hit the Send Message button.

Now we can trigger our workflow from Maxim AI and get the outputs of our workflow.

Setting up endpoint test run

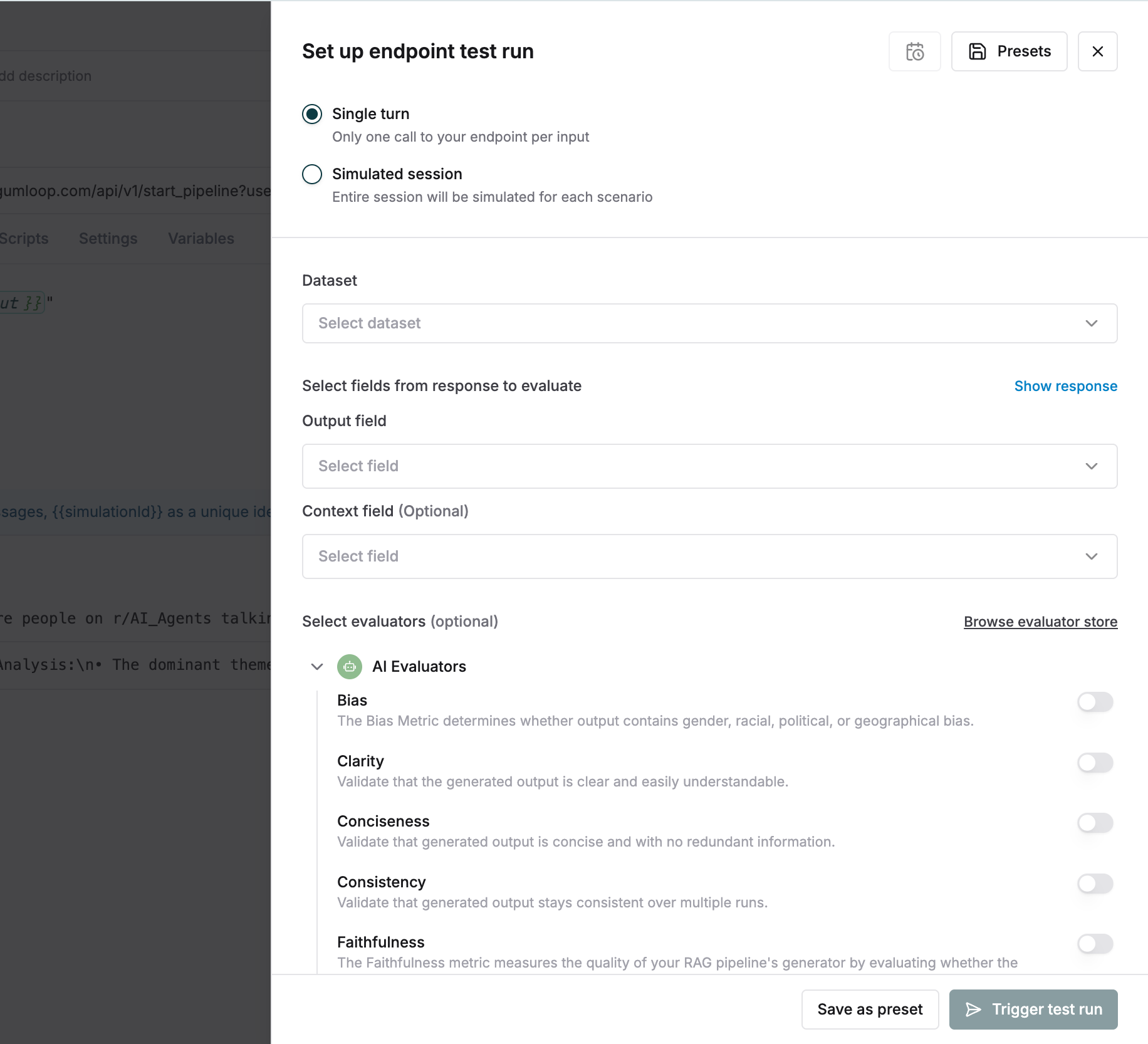

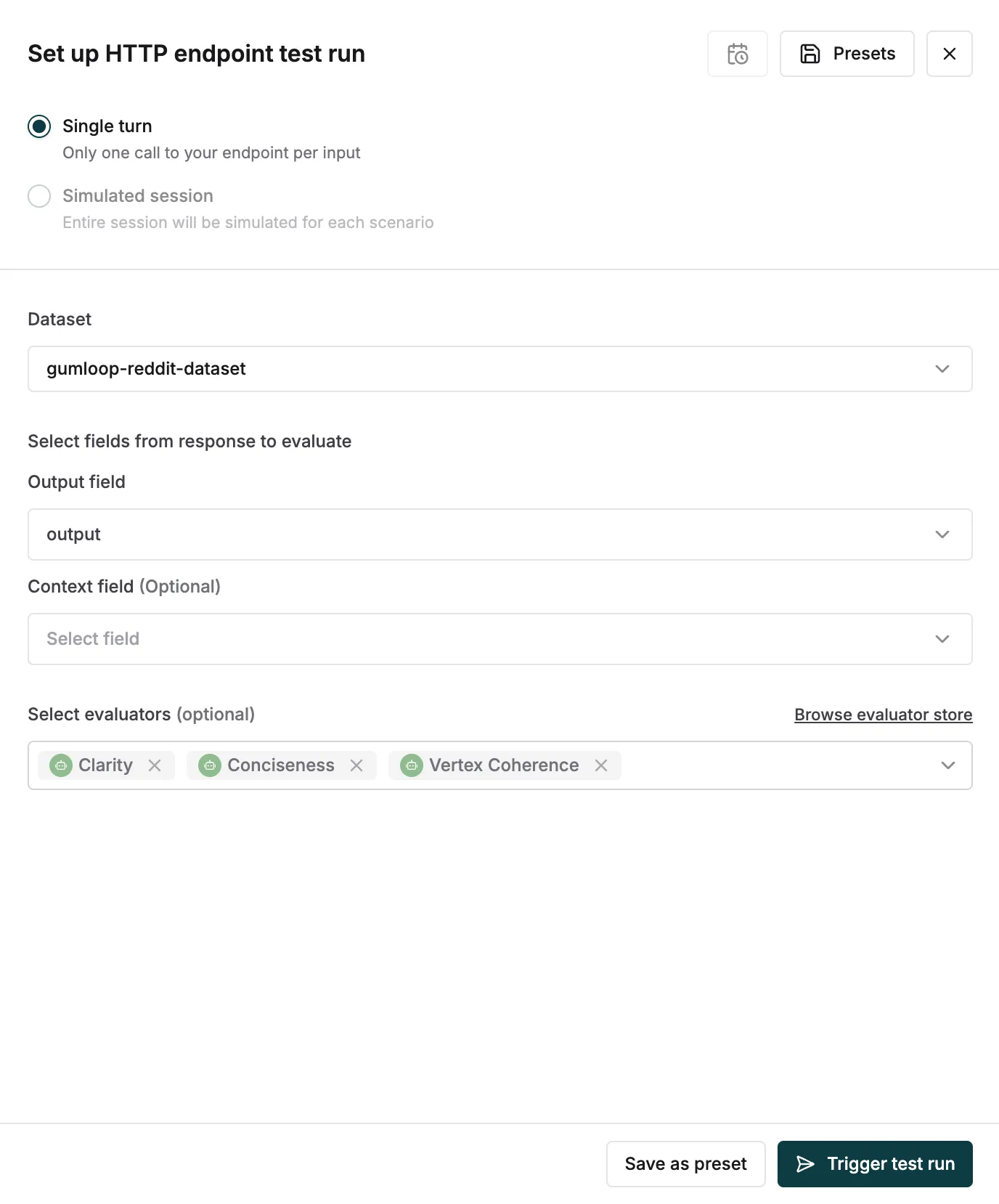

To evaluate our AI Workflow we need to click on the “Test” Button on the top right of the screen. This would open up the test run panel. Since our workflow is not a multi-turn conversation agent, we will run a single turn evaluation on our workflow.

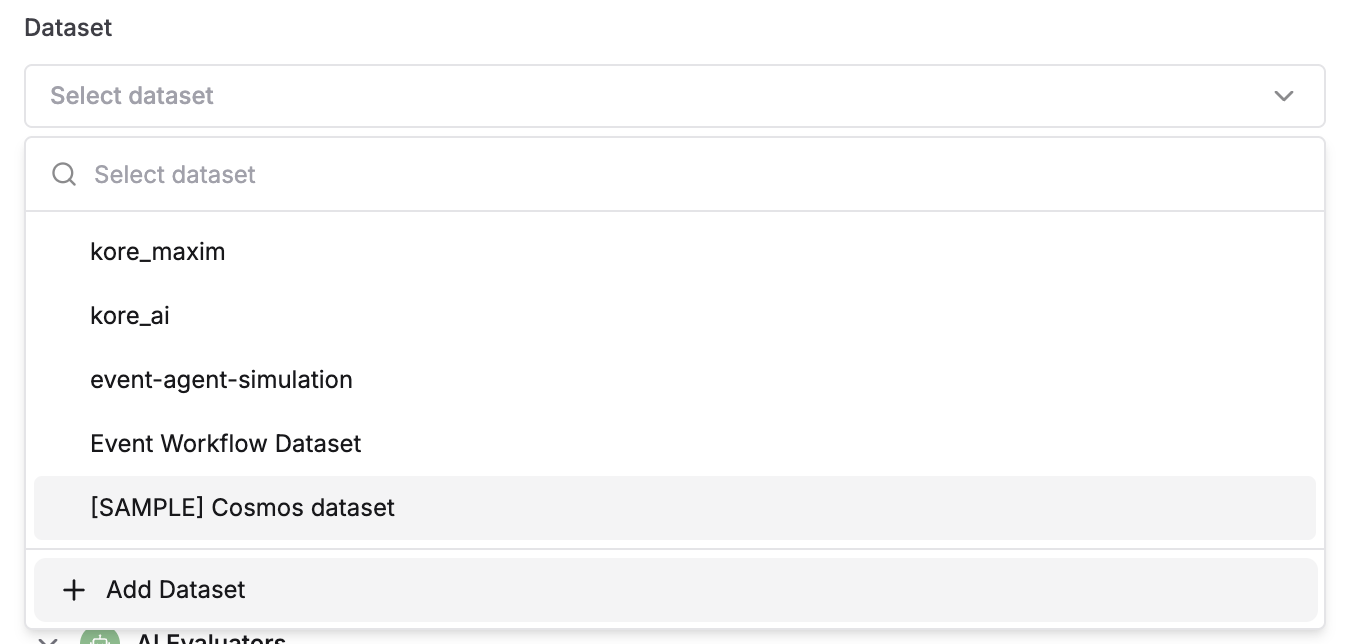

We need to create a dataset containing a few sample inputs we would want our workflow to test out. We can do this by clicking on the Select dataset selector and then clicking on “Add Dataset”.

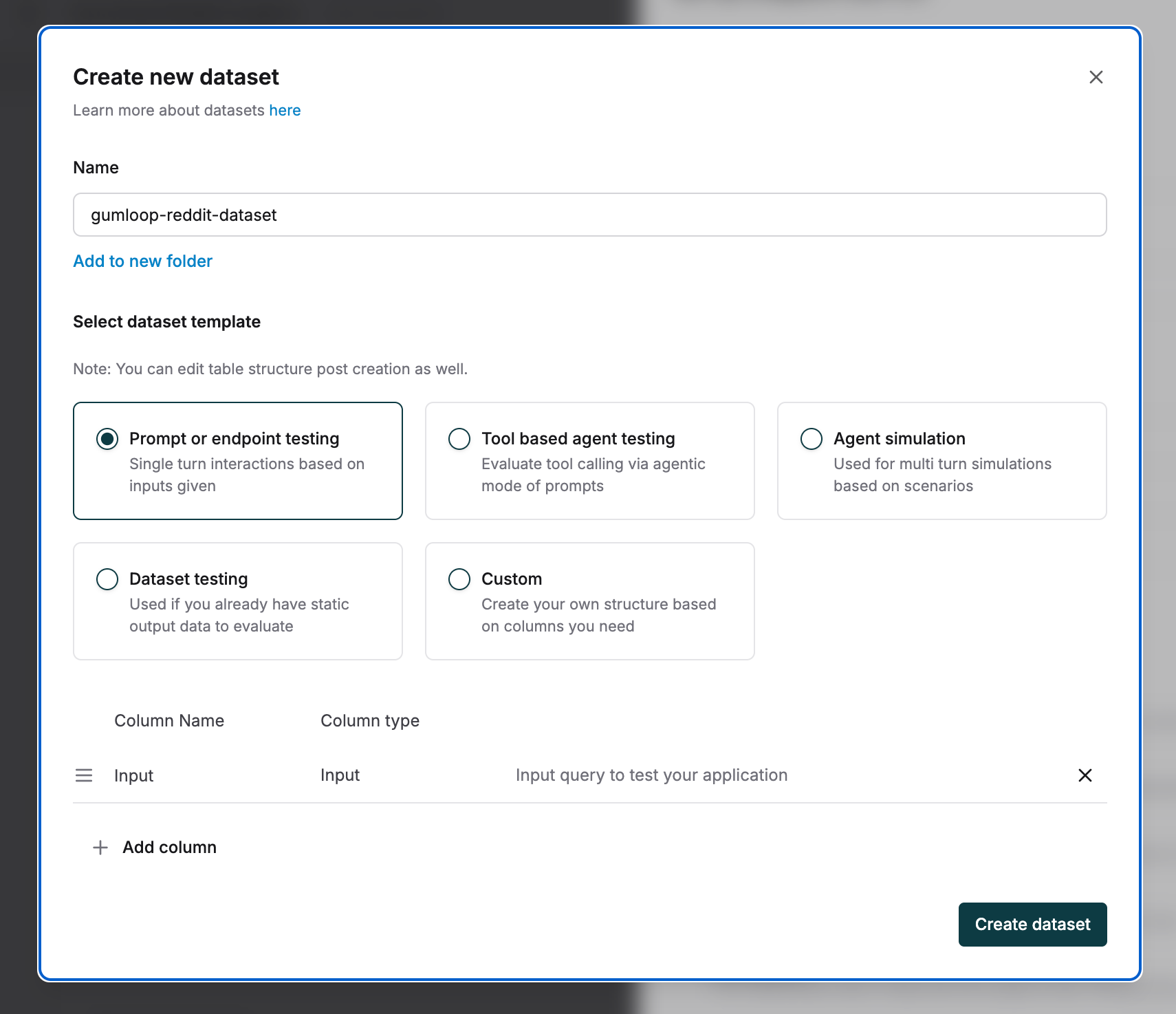

We give our dataset a name and since we don’t have any expected outputs to match the Workflow outputs against, we will configure only a single “Input” column in our dataset.

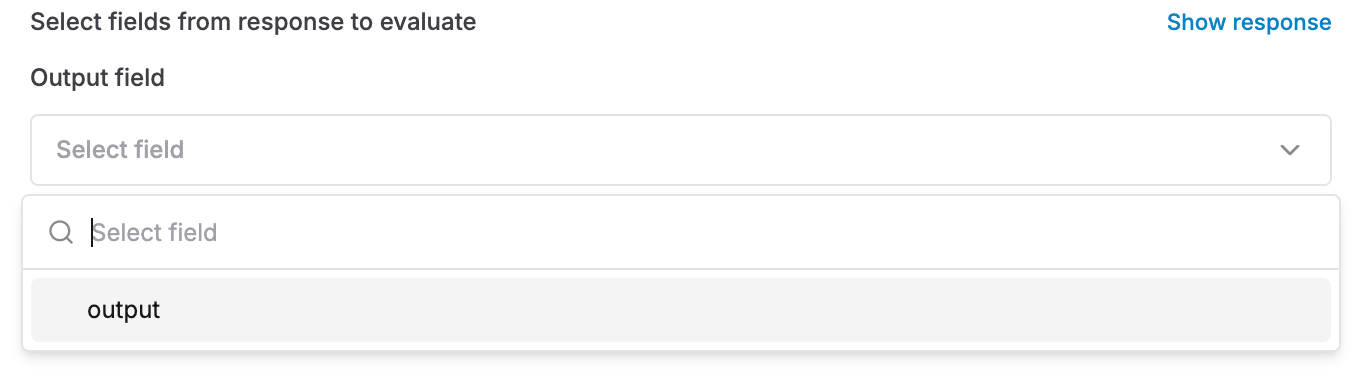

We select the Output field as the “output” object our gumloop workflow is returning.

Next, we need to choose which evaluators to run on our workflow. Maxim offers a flexible evaluation framework with support for AI-based, programmatic, statistical, and human-in-the-loop evaluators. You can browse pre-built evaluators in the Evaluator Store or create your own based on your workflow’s needs.

For this use case, we’re focusing on three evaluators that directly impact output quality: Clarity, Conciseness, and Vertex Coherence. These help us assess whether the workflow’s responses are readable, to the point, emotionally appropriate, and structurally sound, all critical for turning messy Reddit discourse into actionable insights.

You can find details about each evaluator by visiting the evaluator store and getting to know the details of each pre-built evaluator in the store.

Next, save the simulation configurations as Presets so that we can continue our evaluation. But before we do so we need to add some inputs in our Dataset.

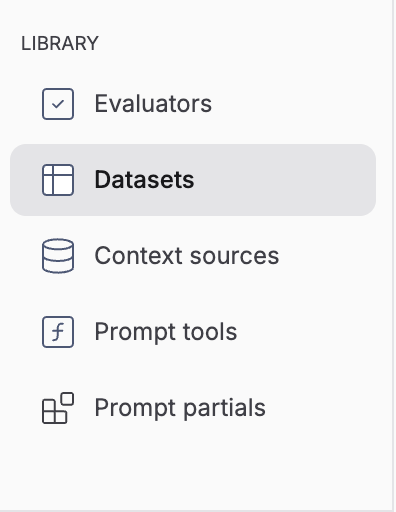

We need to navigate to the Datasets section in the left hand side navbar:

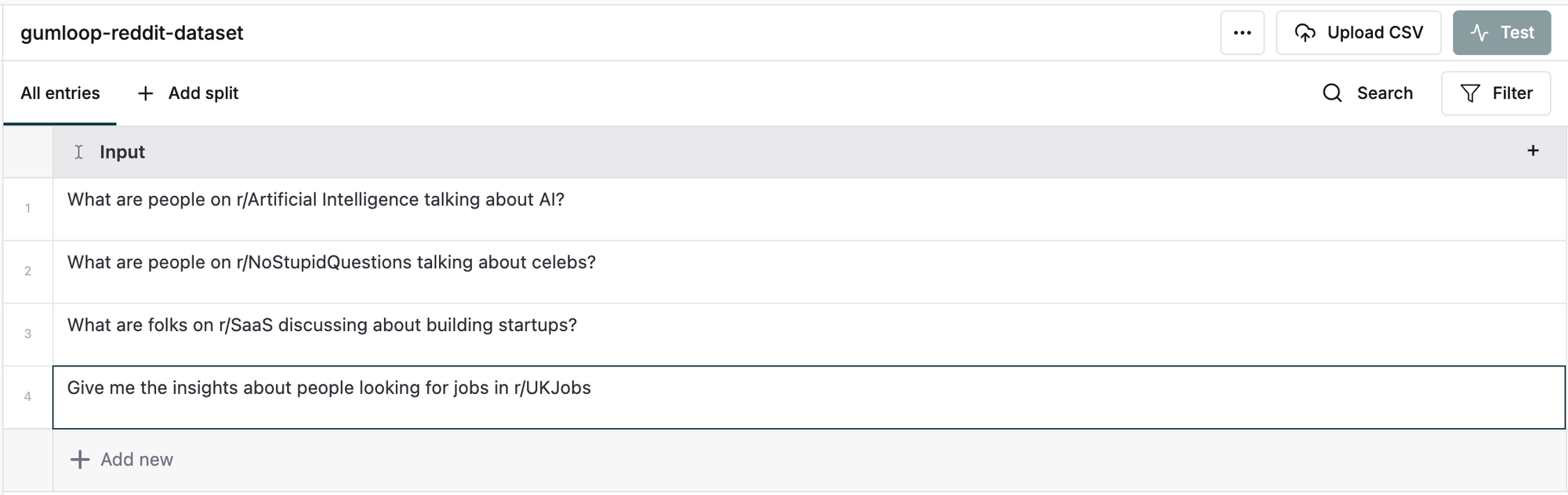

I have added 4 inputs to test my workflow, you could add significantly more inputs by either adding them manually or simply by uploading a CSV containing the inputs.

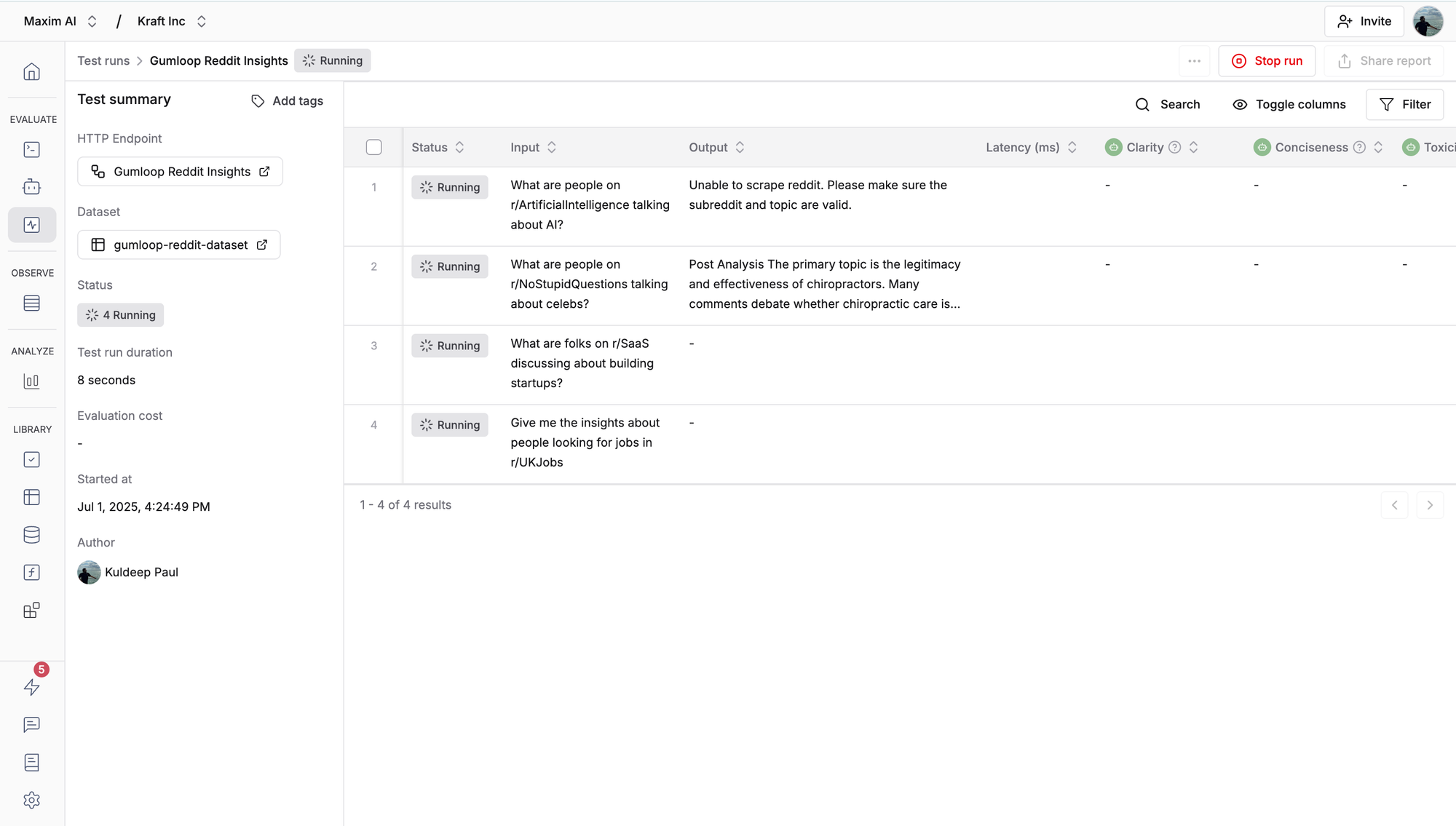

Running an Evaluation

After adding the inputs to the dataset, we can select the Preset we had saved earlier and Trigger the test run.

On completing the evaluation, we can see that our workflow failed to pass the Conciseness and Vertex Coherence evaluations.

We can drill deeper into each evaluation run and look at the reasoning behind the pass or fail status of each eval.

We can look at what the input and output was for each entry in our dataset.

We can also look at the reasoning behind the evaluation pass or fail decision in the Test run report.

With inputs from the evaluations we can tweak our AI agents and workflows, improve prompts or implement guardrails to make sure our Agents and workflows are reliable. If you want to know more about evaluating AI Agents feel free to read our 3 part blog series on Agent Evaluation here - Blog Link

Conclusion

Reddit is raw, real, and often overwhelming, but beneath the noise lies some of the internet’s most valuable, unfiltered insight. For teams building or marketing AI tools, tapping into that signal can surface everything from emerging pain points to shifting cultural perceptions. The challenge has always been the medium: parsing Reddit manually is inefficient, inconsistent, and mentally taxing.

This Gumloop-powered Reddit Insights workflow solves that. It automates the process of discovery and synthesis, using LLMs and structured flows to extract sentiment, summarize conversations, and highlight key themes. But generation alone isn’t enough.

By integrating with Maxim AI, we’re able to systematically evaluate and improve the quality of those outputs. Using evaluators like Clarity, Conciseness, and Vertex Coherence, we can ensure that the insights are not just accurate, but also well-structured, reliable, and easy to act on. Evaluation helps us move from raw LLM output to production-grade intelligence.

In a world where AI agents are becoming more autonomous and integrated into real workflows, evaluation isn’t an afterthought. It’s foundational. With Maxim, you can run targeted, scalable evaluations across everything from multi-agent frameworks like CrewAI and LangChain, to no-code agent builders like n8n.io, Kore.ai, or Gumloop.

This combination of Reddit data, Gumloop orchestration, and Maxim evaluation gives you a reusable blueprint for building smarter, sharper, and more reliable AI agents, and it’s a blueprint anyone can start using today