Are Small Language Models the Future of Agentic AI?

Recent research from NVIDIA presents a compelling argument that small language models (SLMs) represent the future of agentic artificial intelligence systems. The paper challenges the current industry paradigm of deploying large language models for all agent tasks, proposing instead that smaller, specialized models offer superior operational characteristics for most agentic workflows.

Defining the Small vs Large Model Distinction

The researchers establish clear working definitions: SLMs are language models capable of running on consumer devices with practical inference latency for single-user applications, while LLMs encompass all models that exceed these constraints. In practical terms, the authors treat most models below 10 billion parameters as SLMs as of 2025.

This definition anchors the discussion in deployment reality rather than arbitrary parameter counts, recognizing that the boundary between "small" and "large" will shift as hardware capabilities advance.
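To make the deployment-based definition concrete, the following is a rough back-of-the-envelope sketch, not something taken from the paper. It estimates how much memory a model's weights occupy at a given precision and checks that against a device budget. The 16 GB device size and the 20% overhead allowance for activations and cache are assumptions chosen purely for illustration.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights alone, in GB (1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_on_device(params_billions: float, bits_per_weight: int,
                   device_memory_gb: float, overhead_fraction: float = 0.2) -> bool:
    """True if weights plus an assumed overhead allowance fit in device memory."""
    needed = weight_memory_gb(params_billions, bits_per_weight) * (1 + overhead_fraction)
    return needed <= device_memory_gb


# Hypothetical consumer device with 16 GB of usable memory.
DEVICE_GB = 16
for size in (2.7, 7, 70):
    for bits in (16, 4):
        gb = weight_memory_gb(size, bits)
        ok = fits_on_device(size, bits, DEVICE_GB)
        print(f"{size}B params at {bits}-bit: ~{gb:.1f} GB of weights, fits: {ok}")
```

Under these assumptions, quantization moves a 7-billion-parameter model from borderline to comfortable on the example device, which illustrates why the small/large boundary depends on hardware and precision rather than on parameter count alone.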

The Capability Argument

The paper presents substantial evidence that modern SLMs have achieved performance levels previously exclusive to much larger models. Several examples demonstrate this capability convergence:

Microsoft's Phi-2 model, with 2.7 billion parameters, matches the commonsense reasoning and code generation performance of 30-billion-parameter models while running approximately 15 times faster. The Phi-3 small variant (7 billion parameters) achieves language understanding comparable to models of up to 70 billion parameters from the same generation.

NVIDIA's Nemotron-H family demonstrates that hybrid Mamba-Transformer architectures can achieve instruction-following accuracy comparable to dense 30-billion-parameter models while requiring only a fraction of the inference computation. Similarly, the Hymba-1.5B model outperforms larger 13-billion-parameter models on instruction-following tasks while delivering 3.5 times greater token throughput.

The same pattern appears in reasoning. The DeepSeek-R1-Distill series shows that reasoning capabilities can be effectively distilled into smaller models, with the 7-billion-parameter variant outperforming proprietary models like Claude-3.5-Sonnet and GPT-4o on specific reasoning tasks.

Economic Efficiency in Agentic Systems

The economic argument centers on the fundamental cost structure differences between small and large model deployment. Serving a 7-billion-parameter SLM is typically 10-30 times cheaper than serving a 70-175-billion-parameter LLM, whether measured in latency, energy consumption, or compute (FLOPs).

This efficiency advantage becomes particularly pronounced in agentic workflows, where systems may perform hundreds or thousands of model invocations per user session. The cumulative cost savings can be substantial, especially when considering the repetitive nature of many agent tasks.
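The effect of per-call savings compounding over a session can be sketched with simple arithmetic. The call count, token count, and price below are placeholders invented for illustration, and the sketch assumes that the price charged per token tracks the serving cost ratio discussed above, which may not hold for any particular provider.

```python
def session_cost(calls_per_session: int, tokens_per_call: int,
                 price_per_million_tokens: float) -> float:
    """Cost of one user session, in the same currency unit as the price."""
    return calls_per_session * tokens_per_call * price_per_million_tokens / 1_000_000


# Hypothetical numbers chosen only for illustration.
CALLS = 500            # model invocations in one agent session
TOKENS = 1_000         # tokens processed per invocation
LLM_PRICE = 10.0       # placeholder price per million tokens
COST_RATIO = 20        # a point inside the 10-30x range cited above
SLM_PRICE = LLM_PRICE / COST_RATIO

llm_cost = session_cost(CALLS, TOKENS, LLM_PRICE)
slm_cost = session_cost(CALLS, TOKENS, SLM_PRICE)
print(f"LLM session: {llm_cost:.2f}")
print(f"SLM session: {slm_cost:.2f}")
print(f"Saved per session: {llm_cost - slm_cost:.2f}")
```

Because the saving is multiplied by the number of invocations, the gap scales linearly with how chatty the agent is, which is the point the paragraph above makes about repetitive agent tasks.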

Fine-tuning represents another economic advantage. Parameter-efficient techniques like LoRA (Low-Rank Adaptation) enable SLM customization in a few GPU-hours rather than the weeks required for large model adaptation. This agility allows rapid specialization and behavioral modification as requirements evolve.
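Part of why LoRA is cheap can be seen from a parameter count. LoRA freezes a weight matrix of shape d_out x d_in and trains two low-rank factors of shapes d_out x r and r x d_in instead. The sketch below compares trainable parameters for a single layer; the 4096 dimension and rank 8 are illustrative choices, not values reported in the paper.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for the two low-rank LoRA factors (d_out x r and r x d_in)."""
    return rank * (d_in + d_out)


# Illustrative layer size and rank (assumptions, not from the paper).
D_IN, D_OUT, RANK = 4096, 4096, 8

full = full_finetune_params(D_IN, D_OUT)
lora = lora_trainable_params(D_IN, D_OUT, RANK)
print(f"Full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {RANK}): {lora:,} trainable parameters")
print(f"LoRA trains {100 * lora / full:.2f}% of the layer")
```

For this layer, the low-rank factors amount to well under one percent of the original matrix, which reduces optimizer state and gradient memory accordingly.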

Edge deployment capabilities further enhance the economic proposition. Modern inference systems enable local execution of SLMs on consumer-grade hardware, providing real-time responses with lower latency and stronger data control while eliminating ongoing API costs.

Operational Flexibility and Specialization

The research emphasizes that agentic systems naturally expose only narrow subsets of language model capabilities. Most agent interactions involve structured tasks: parsing specific data formats, generating templated responses, or executing predetermined workflows. This mismatch between narrow task demands and broad model capability suggests that general-purpose LLMs may be overqualified for typical agentic use cases.

SLMs offer superior operational flexibility through easier specialization. Organizations can maintain multiple expert models tailored for different agent functions, each optimized for specific interaction patterns. This modular approach enables rapid iteration and adaptation to changing requirements.

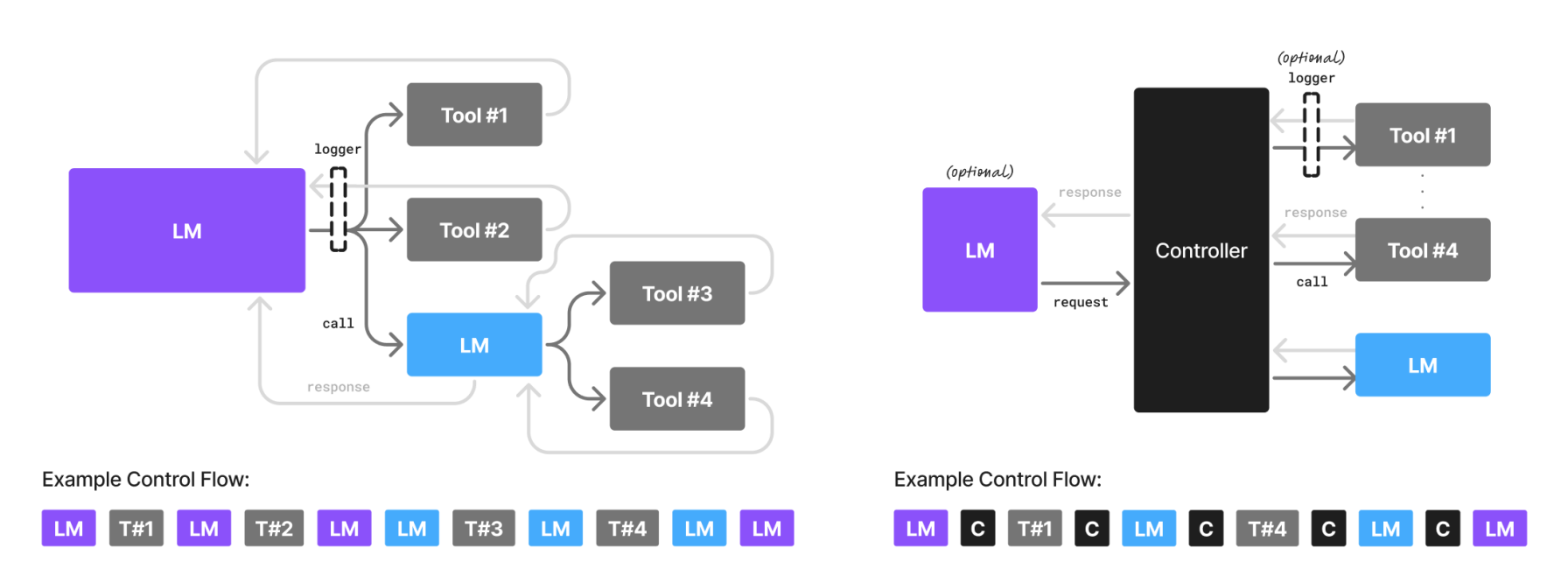

The paper advocates for heterogeneous agentic systems in which models of different sizes handle tasks of varying complexity. Simple routing logic can direct routine queries to specialized SLMs while escalating complex reasoning tasks to larger models when necessary.
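The routing idea can be sketched in a few lines. This is a minimal illustration rather than the paper's design: the keyword classifier, the task names, and the stand-in handler functions are all hypothetical, and a production router would more likely use a trained classifier or an SLM to pick the route.

```python
from typing import Callable, Dict, Tuple

Handler = Callable[[str], str]


def classify_task(query: str) -> str:
    """Toy keyword classifier (hypothetical); returns a task label or 'unknown'."""
    text = query.lower()
    if "json" in text or "parse" in text:
        return "parsing"
    if "summarize" in text or "summary" in text:
        return "summarization"
    return "unknown"


def route(query: str, slm_handlers: Dict[str, Handler],
          llm_handler: Handler) -> Tuple[str, str]:
    """Send the query to a specialized SLM if one exists, else escalate to the LLM."""
    task = classify_task(query)
    handler = slm_handlers.get(task)
    if handler is None:
        return "llm", llm_handler(query)
    return f"slm:{task}", handler(query)


# Stand-in handlers; real ones would call model endpoints.
def parsing_slm(query: str) -> str:
    return f"[parsing SLM] {query}"


def summarization_slm(query: str) -> str:
    return f"[summarization SLM] {query}"


def general_llm(query: str) -> str:
    return f"[general LLM] {query}"


SLM_HANDLERS: Dict[str, Handler] = {
    "parsing": parsing_slm,
    "summarization": summarization_slm,
}

for q in ("Parse this JSON payload",
          "Summarize this support ticket",
          "Plan a multi-step migration of our billing system"):
    print(route(q, SLM_HANDLERS, general_llm))
```

Defaulting unknown tasks to the larger model keeps the system conservative: a query is only handled by an SLM when a specialist for that task has been registered.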

Addressing Counter-Arguments

The research acknowledges several alternative perspectives that challenge the SLM-centric approach.

The scaling laws argument contends that larger models will always outperform smaller ones on language understanding tasks. However, the authors counter that recent architectural innovations demonstrate performance benefits from size-specific design choices rather than simple parameter scaling. Additionally, fine-tuning and test-time compute scaling can bridge performance gaps for specific applications.

Economic centralization concerns suggest that distributed SLM deployment may lack the economies of scale achieved by centralized LLM services. The paper acknowledges this trade-off while noting improvements in inference scheduling and infrastructure modularization that support more flexible deployment patterns.

Implementation Framework

The research provides a practical six-step conversion algorithm for migrating from LLM-based to SLM-based agent systems:

Data collection involves logging all agent interactions with appropriate privacy controls. The logged data is then curated and filtered to remove sensitive content before it is used to build training datasets for specialized models. Task clustering employs unsupervised techniques to identify recurring patterns suitable for SLM specialization.
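As a rough illustration of the early pipeline steps, the sketch below redacts email addresses from logged prompts and then groups similar prompts together. The greedy token-overlap grouping is a simple stand-in chosen to keep the example dependency-free; it is not the clustering method used in the paper, and the regular expression, threshold, and sample prompts are all assumptions.

```python
import re
from typing import List, Set, Tuple

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Replace email addresses with a placeholder before the prompt is stored."""
    return EMAIL.sub("<EMAIL>", text)


def tokens(text: str) -> Set[str]:
    """Lowercased set of alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity between two token sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_prompts(prompts: List[str], threshold: float = 0.5) -> List[List[str]]:
    """Greedy grouping: join the first cluster whose representative is similar enough."""
    clusters: List[Tuple[Set[str], List[str]]] = []
    for prompt in prompts:
        toks = tokens(prompt)
        for representative, members in clusters:
            if jaccard(toks, representative) >= threshold:
                members.append(prompt)
                break
        else:
            clusters.append((toks, [prompt]))
    return [members for _, members in clusters]


# Hypothetical logged prompts.
raw_log = [
    "Extract the invoice total from this document",
    "Extract the invoice date from this document",
    "Write a polite reply to alice@example.com",
    "Write a polite reply to bob@example.com",
]
logged = [redact(p) for p in raw_log]
clusters = cluster_prompts(logged)
for i, members in enumerate(clusters):
    print(f"cluster {i}: {members}")
```

Each resulting cluster is a candidate task for a specialized SLM. In practice, embedding-based clustering would likely separate tasks more reliably than word overlap.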

Model selection considers inherent capabilities, benchmark performance, licensing constraints, and deployment requirements. Fine-tuning leverages task-specific datasets with parameter-efficient techniques to create specialized models.
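The selection criteria above can be expressed as a filter followed by a ranking. The sketch below is one possible reading, not the paper's procedure: the candidate names, sizes, and scores are invented placeholders, and preferring the smallest model that clears the quality bar is an assumed policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    params_billions: float
    task_score: float      # score on an in-house evaluation set, from 0 to 1
    license_ok: bool       # whether the license permits the intended use


def select_model(candidates: List[Candidate], max_params_billions: float,
                 min_score: float) -> Optional[Candidate]:
    """Return the smallest eligible model, breaking ties by higher score."""
    eligible = [
        c for c in candidates
        if c.license_ok
        and c.params_billions <= max_params_billions
        and c.task_score >= min_score
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda c: (c.params_billions, -c.task_score))


# Placeholder candidates; none of these correspond to real models or results.
CANDIDATES = [
    Candidate("model-a", 1.5, 0.71, True),
    Candidate("model-b", 3.0, 0.84, True),
    Candidate("model-c", 7.0, 0.90, False),
    Candidate("model-d", 13.0, 0.93, True),
]

choice = select_model(CANDIDATES, max_params_billions=10, min_score=0.8)
print(choice.name if choice else "no eligible model; keep using the LLM")
```

Returning `None` when nothing qualifies makes the fallback explicit: the task stays on the larger model until a suitable SLM is available.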

The framework emphasizes iterative refinement, with continuous data collection enabling periodic model updates and performance improvements.

Critical Limitations

While the research presents compelling arguments, several limitations warrant consideration. The capability assessment relies heavily on benchmark performance, which may not capture the nuanced reasoning required in production environments. Real-world agent tasks often involve handling unexpected inputs and edge cases where larger models' broader knowledge bases provide advantages.

Context window constraints in most SLMs may limit their effectiveness in complex, multi-turn interactions that require maintaining substantial conversation history or processing lengthy documents.

The operational complexity of maintaining multiple specialized models, routing systems, and fallback mechanisms may offset some claimed efficiency gains. Organizations must weigh the benefits of specialization against the overhead of managing heterogeneous model deployments.

Conclusion

The NVIDIA research presents a thoughtful analysis of efficiency opportunities in agentic AI systems. The evidence supporting SLM capabilities for specific tasks is compelling, and the economic arguments for selective deployment have merit. However, the future likely involves sophisticated orchestration rather than wholesale replacement of large models.

The most practical approach appears to be hybrid architectures that match model capabilities to task requirements: specialized SLMs for routine, well-defined operations and larger models for complex reasoning and edge case handling. This balanced approach can capture efficiency gains while maintaining system robustness.

The research contributes valuable insights to ongoing discussions about optimal resource allocation in AI systems. As the technology landscape continues evolving, the principles of matching model capabilities to task requirements and considering total cost of ownership will remain crucial for building effective agentic systems.