AlphaEvolve: AI for Scientific Discovery

Introduction

Consider a scenario: you're facing a complex optimization challenge with no known solution, the kind that requires inventing entirely new algorithms rather than tweaking existing ones. There's no textbook answer and no established approach. Existing coding models such as Claude and Gemini 2.5 can implement known solutions, but they struggle to venture into uncharted territory and create efficient algorithms that don't yet exist. That's the gap AlphaEvolve targets: automatically discovering sophisticated algorithms for open-ended problems where the optimal approach is unknown.

At its core, AlphaEvolve is an LLM-based code superoptimization agent that pairs language models with automatic evaluators for algorithmic discovery. The evaluators verify and score its solutions, creating a continuous optimization feedback loop. So far, it has delivered state-of-the-art (SOTA) results across multiple domains. Google even used AlphaEvolve to optimize critical layers of its own computing stack, spanning data centers, hardware, and software, which is very meta! Before diving into how AlphaEvolve works, let's examine its predecessor: FunSearch.

FunSearch: Laying the Groundwork for AlphaEvolve

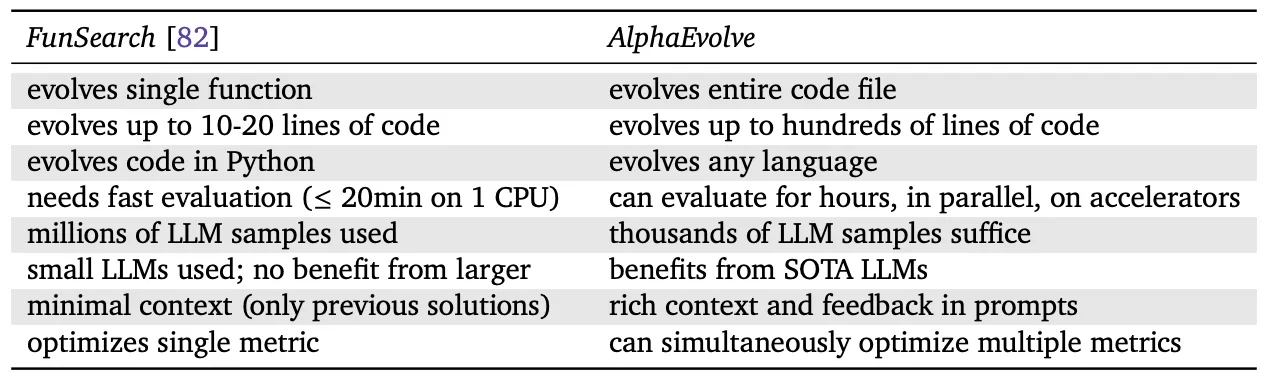

The idea of combining evolutionary methods with coding LLMs was previously demonstrated by FunSearch in 2023.

FunSearch, short for "searching in the function space," is an AI system that pairs an LLM with an automated evaluator. The LLM creatively generates potential solutions to a problem in the form of computer programs. These programs are then automatically executed and assessed by the evaluator, which filters out incorrect or nonsensical outputs and identifies the most promising ones. The process is iterative and evolutionary: the best-performing programs are fed back to the LLM, which builds on them to generate new, improved programs, creating a self-improving loop.

FunSearch laid crucial groundwork, demonstrating the immense potential of pairing LLMs with automated evaluators. Now, AlphaEvolve pushes this paradigm to an entirely new frontier, enabling even more sophisticated and wide-ranging algorithmic discovery.

How AlphaEvolve Works: An Inside Look

Defining Problem and Evaluation Criteria ("What?")

Before AlphaEvolve starts, the user provides the key information needed to set up its initial environment:

- Task Description: A clear description of the task that needs to be accomplished.

- Evaluation Criteria: An evaluation function that automatically assesses the quality of a generated solution, usually a Python function (h) that takes a generated solution as input and returns a set of scalar evaluation metrics to be maximised.

- Initial Solution and Codebase: A basic but complete implementation of the program or codebase (even if rudimentary) must be provided.

Specific blocks of code can be marked using special comments (# EVOLVE-BLOCK-START and # EVOLVE-BLOCK-END). The code between these markers is the starting point for AlphaEvolve's improvements, while the remaining code in the script and codebase acts as context.

- Additional Context and Configuration: Explicit context such as problem equations, descriptions, and relevant links and literature (PDFs) can be supplied. The choice of LLMs and hyperparameters is also configured here.

DeepMind's experiments utilized Gemini 2.5 Flash (for breadth) and Gemini 2.5 Pro (for depth) LLMs to enhance AlphaEvolve's performance.
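To make the setup concrete, here is a minimal sketch of what a user-supplied starting file might look like. Only the EVOLVE-BLOCK comment markers and the idea of an evaluation function h returning scalar metrics come from the description above. The toy task (online bin packing), the names `priority`, `pack`, and `evaluate`, and the metric names are hypothetical choices made purely for illustration.

```python
import random
from typing import Callable

# EVOLVE-BLOCK-START
def priority(item: float, remaining: float) -> float:
    """Score a bin for an incoming item; the bin with the highest score is chosen."""
    # Rudimentary starting heuristic: prefer the bin that ends up fullest (best fit).
    return -(remaining - item)
# EVOLVE-BLOCK-END


def pack(items: list[float], priority_fn: Callable[[float, float], float],
         capacity: float = 1.0) -> int:
    """Pack items one at a time and return the number of bins opened (context code)."""
    bins: list[float] = []  # remaining capacity of each open bin
    for item in items:
        feasible = [i for i, free in enumerate(bins) if free >= item]
        if feasible:
            best = max(feasible, key=lambda i: priority_fn(item, bins[i]))
            bins[best] -= item
        else:
            bins.append(capacity - item)
    return len(bins)


def evaluate(priority_fn: Callable[[float, float], float] = priority,
             seed: int = 0) -> dict[str, float]:
    """Hypothetical evaluation function h: scalar metrics, all to be maximised."""
    rng = random.Random(seed)  # fixed seed keeps the score reproducible
    items = [rng.uniform(0.1, 0.6) for _ in range(200)]
    bins_used = pack(items, priority_fn)
    return {
        "neg_bins_used": -float(bins_used),    # fewer bins is better, so negate
        "fill_ratio": sum(items) / bins_used,  # average fill level of a bin
    }


if __name__ == "__main__":
    print(evaluate())
```

In this sketch only the body of `priority` sits between the markers, so that is the part that would be evolved. `pack` and `evaluate` stay fixed and serve as context and as the scoring harness.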

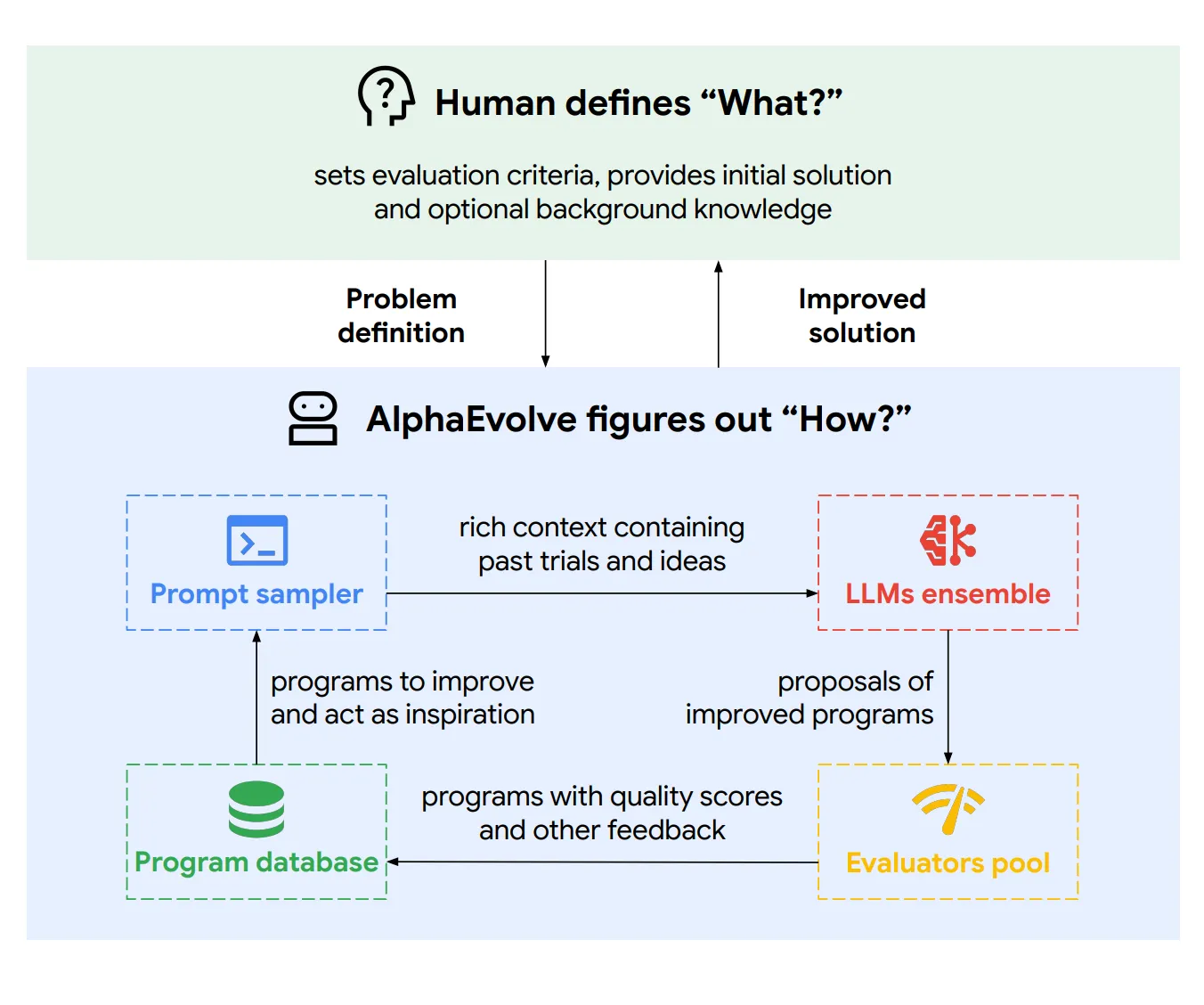

The AlphaEvolve Engine: Iterative Improvement ("How?")

Once the problem is defined, AlphaEvolve orchestrates an autonomous, asynchronous pipeline to iteratively improve the initial solution. This pipeline consists of several components working together in an evolutionary loop:

- Program Database: Stores generated solutions and evaluation results, balancing exploration (finding new approaches) and exploitation (improving existing ones).

- Prompt Sampler: Samples high-performing programs from the database to construct rich prompts for the LLMs. The prompts include the context, detailed instructions, and even previous code examples with their scores.

- LLMs Ensemble: This is the main component, where the LLMs take in the information in the prompt and generate proposals for improving the code. The LLMs (Flash for breadth, Pro for depth) are typically instructed to provide changes as diff blocks, allowing for targeted modifications within the codebase. For very short code, a full rewrite of the block can be requested instead.

- Applying Changes: The proposed code modifications (diffs) from the LLMs are applied to create new candidate programs.

- Updating the Database: The newly evaluated programs and their results (scores) are added back into the Program Database. This updates the pool of knowledge that the Prompt Sampler draws upon for the next iteration, driving the evolutionary process towards better solutions.

- Evaluators Pool: A vital component that automatically assesses each new program generated by the LLMs using the user-defined evaluation function (h) described earlier. This provides objective feedback on program quality and supports optimising for one or more metrics simultaneously.

Efficiency gains are achieved through evaluation cascades (hypothesis testing) and parallelization across machines.
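The evaluation cascade idea can be sketched as follows: a candidate is scored on a cheap test first and only moves on to a more expensive test if it clears a threshold. This is an illustrative sketch, not AlphaEvolve's actual evaluator. The function `cascade_evaluate`, the two toy tests, and the thresholds are all assumptions, and the "program" being tested is simply a function that is supposed to square an integer.

```python
from typing import Callable, Optional

Candidate = Callable[[int], int]
Scorer = Callable[[Candidate], float]


def cascade_evaluate(candidate: Candidate,
                     stages: list[tuple[Scorer, float]]) -> Optional[list[float]]:
    """Run stages in order; stop as soon as a score falls below its threshold."""
    scores = []
    for scorer, min_score in stages:
        score = scorer(candidate)
        scores.append(score)
        if score < min_score:
            return None  # rejected early, later (costlier) stages never run
    return scores


calls = {"cheap": 0, "expensive": 0}  # counts how often each stage is executed


def cheap_test(candidate: Candidate) -> float:
    """Stage 1: a handful of easy cases."""
    calls["cheap"] += 1
    cases = [0, 1, 2, 3]
    return sum(candidate(x) == x * x for x in cases) / len(cases)


def expensive_test(candidate: Candidate) -> float:
    """Stage 2: a larger test set, standing in for a costly evaluation."""
    calls["expensive"] += 1
    cases = range(-500, 500)
    return sum(candidate(x) == x * x for x in cases) / len(cases)


# Hypothetical thresholds: each stage must be passed to reach the next one.
STAGES = [(cheap_test, 1.0), (expensive_test, 0.99)]

good = cascade_evaluate(lambda x: x * x, STAGES)  # passes both stages
bad = cascade_evaluate(lambda x: 2 * x, STAGES)   # fails the cheap stage

if __name__ == "__main__":
    print(good, bad, calls)
```

The faulty candidate never reaches the expensive stage, which is where the savings come from. Since candidates are scored independently of one another, many such cascades could also run in parallel on separate workers.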

There is no single, definitive stopping condition: the AlphaEvolve loop runs iteratively until a given computational budget is exhausted.
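Putting the components together, the loop can be sketched in a few lines. This is a toy under loud assumptions: the "program" is just a list of three numbers, the LLM ensemble is replaced by a random perturbation (`propose_child`), the evaluator is a made-up distance-to-target score, and the budget is a plain iteration count. None of these names or choices come from AlphaEvolve itself. Only the shape of the loop (sample, propose, evaluate, store, repeat until the budget runs out) mirrors the description above.

```python
import random

Entry = tuple[float, list[float]]  # (score, program)


def evaluate(program: list[float]) -> float:
    """Toy evaluator h: higher is better, with a maximum of 0 at the target."""
    target = [3.0, -1.0, 2.0]
    return -sum((p - t) ** 2 for p, t in zip(program, target))


def sample_parents(database: list[Entry], rng: random.Random, k: int = 3) -> list[Entry]:
    """Prompt-sampler stand-in: usually exploit the best entries, sometimes explore."""
    if rng.random() < 0.2:
        return rng.sample(database, min(k, len(database)))  # exploration
    return sorted(database, key=lambda e: e[0], reverse=True)[:k]  # exploitation


def propose_child(parents: list[Entry], rng: random.Random) -> list[float]:
    """LLM stand-in: copy one parent and apply a small, targeted change."""
    _, parent = rng.choice(parents)
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.gauss(0.0, 0.5)
    return child


def evolve(initial: list[float], budget: int, seed: int = 0) -> Entry:
    """Run the loop until the budget is spent and return the best entry found."""
    rng = random.Random(seed)
    database: list[Entry] = [(evaluate(initial), initial)]  # program database
    for _ in range(budget):  # the only stopping condition is the budget
        parents = sample_parents(database, rng)
        child = propose_child(parents, rng)
        database.append((evaluate(child), child))  # store program and score
    return max(database, key=lambda e: e[0])


if __name__ == "__main__":
    best_score, best_program = evolve([0.0, 0.0, 0.0], budget=500)
    print(best_score, best_program)
```

Because every candidate is kept in the database along with its score, the best entry returned can never be worse than the initial solution. Improvement comes entirely from which parents get sampled and what the proposer does with them.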

Key Achievements

- AlphaEvolve found a procedure that uses 48 scalar multiplications to multiply 4×4 complex-valued matrices, the first improvement over Strassen's algorithm in this specific setting in 56 years.

- It improved the best known results on open mathematical problems, such as Erdős's Minimum Overlap Problem (number theory) and the Kissing Number problem in 11 dimensions (geometry), for which it found an improved construction.

The Kissing Number problem is a roughly 300-year-old geometry challenge that asks how many non-overlapping unit spheres can simultaneously touch one central unit sphere. The case considered here is 11-dimensional space.

AlphaEvolve pushed the known lower bound from 592 to 593.

- By finding algorithms that require fewer scalar multiplications, AlphaEvolve surpassed the state of the art for a total of 14 different matrix multiplication targets.

- Beyond its academic results, AlphaEvolve has delivered tangible, high-impact gains within Google's own compute ecosystem. It sped up a matrix multiplication kernel used by Gemini by 23%, achieved speedups of up to 32.5% in GPU-level FlashAttention code, and proposed a rewrite of Verilog hardware code for TPUs.
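To put the 48-multiplication result in context, the sketch below implements Strassen's classic scheme for 2×2 matrices, which uses 7 scalar multiplications instead of 8, and counts what happens when that scheme is applied recursively to a 4×4 matrix: 7 × 7 = 49 multiplications, against 64 for the schoolbook method. The helper names are illustrative, and the constant 48 is simply the figure reported above. AlphaEvolve's actual 48-multiplication procedure is not reproduced here.

```python
Matrix2 = tuple[tuple[complex, complex], tuple[complex, complex]]


def strassen_2x2(a: Matrix2, b: Matrix2) -> Matrix2:
    """Multiply two 2x2 matrices with 7 scalar multiplications (Strassen, 1969)."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    return ((m1 + m4 - m5 + m7, m3 + m5),
            (m2 + m4, m1 - m2 + m3 + m6))


def naive_2x2(a: Matrix2, b: Matrix2) -> Matrix2:
    """Schoolbook 2x2 product using 8 scalar multiplications."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    return ((a11 * b11 + a12 * b21, a11 * b12 + a12 * b22),
            (a21 * b11 + a22 * b21, a21 * b12 + a22 * b22))


def naive_mults(n: int) -> int:
    """Scalar multiplications used by the schoolbook method for n x n matrices."""
    return n ** 3


def strassen_mults(n: int) -> int:
    """Scalar multiplications when the 2x2 scheme is applied recursively (n a power of 2)."""
    if n < 1 or n & (n - 1) != 0:
        raise ValueError("n must be a power of two")
    levels = n.bit_length() - 1  # log2(n) recursion levels
    return 7 ** levels


ALPHAEVOLVE_4X4_MULTS = 48  # figure quoted in the text, complex-valued 4x4 case

if __name__ == "__main__":
    print(naive_mults(4), strassen_mults(4), ALPHAEVOLVE_4X4_MULTS)
```

Saving a single multiplication may look minor, but such schemes can be applied recursively to larger block matrices, so a lower count at the base case compounds at every level of recursion.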

Conclusion

AlphaEvolve stands as a landmark in the quest for automated scientific and algorithmic discovery, demonstrating the potential of combining language models with automatic evaluation. It represents a significant leap beyond previous methods like FunSearch. By pairing powerful LLMs with robust evaluators, AlphaEvolve moves code generation toward genuine algorithmic innovation and the discovery of new knowledge. Ultimately, this lets AI agents begin autonomously tackling hard scientific problems, opening a new frontier in human-computer collaboration for discovery!

For a deeper dive, refer to the full paper: AlphaEvolve Paper

For its predecessor, see: FunSearch Paper