Agent Simulation & Testing Made Simple with Maxim AI

Generative AI agents don’t just answer questions. They track context, call APIs, enforce policies, and handle sensitive data. If you ship without proper testing, expect hallucinations, privacy leaks, and broken flows. Agent Simulation in Maxim makes quality control systematic: run data-driven tests, catch failures before users do, and keep your product sharp.

In this post, we use a flight booking agent as our reference point. Every example, workflow, and test reflects how you’d build, simulate, and evaluate a travel agent in production. Key concepts include:

• Simulation Overview (why, personas, advanced settings)

• Simulation Runs (datasets, test-run configuration, evaluator results)

• Tracing & Dashboards (root-cause analysis after a run)

1 What is agent simulation?

Agent simulation pairs a synthetic simulator (virtual user) with your AI agent in a controlled environment. Each session:

- Starts from a predefined scenario (“Book an economy flight NYC → SFO for 20 April”).

- Applies a persona (polite, impatient, frustrated, expert).

- Runs for a fixed number of turns or until a success condition is met.

- Logs every request, response, and tool call.

- Evaluates the transcript with objective rubrics (PII, trajectory, hallucination, latency, cost).

Because the user side is synthetic, you can execute hundreds of scenarios in minutes and surface long-tail failures long before customers see them.
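The session lifecycle described above can be sketched as a small loop. This is only an illustration of the idea, not Maxim's implementation: `Session`, `run_session`, `toy_simulator`, and `toy_agent` are hypothetical names, and the toy functions stand in for the synthetic user and the real agent so the sketch runs without network access.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class Session:
    scenario: str
    persona: str
    max_turns: int = 8
    transcript: List[Message] = field(default_factory=list)
    succeeded: bool = False


def run_session(
    session: Session,
    simulator: Callable[[Session], str],    # produces the next user message
    agent: Callable[[List[Message]], str],  # produces the agent's reply
    is_success: Callable[[str], bool],      # success condition on a reply
) -> Session:
    """Alternate user and agent turns until success or max_turns is reached."""
    for _ in range(session.max_turns):
        user_msg = simulator(session)
        session.transcript.append({"role": "user", "content": user_msg})
        reply = agent(session.transcript)
        session.transcript.append({"role": "assistant", "content": reply})
        if is_success(reply):
            session.succeeded = True
            break
    return session


# Toy stand-ins (assumptions for illustration only).
def toy_simulator(session: Session) -> str:
    # First turn states the scenario; later turns accept the first option.
    if not session.transcript:
        return session.scenario
    return "Yes, the first option please."


def toy_agent(transcript: List[Message]) -> str:
    user_turns = sum(1 for m in transcript if m["role"] == "user")
    if user_turns == 1:
        return "I found two economy options. Which one do you want?"
    return "Booking confirmed."


result = run_session(
    Session(scenario="Book an economy flight NYC to SFO for 20 April", persona="impatient"),
    toy_simulator,
    toy_agent,
    is_success=lambda reply: "confirmed" in reply.lower(),
)
print(result.succeeded, len(result.transcript))
```

The transcript collected here is what evaluators would later score; a real simulator would also let the persona shape the wording of each user turn.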

2 Why Agent Simulation Matters

Agent simulation matters because it enables rigorous testing, validation, and improvement of autonomous AI systems.

It is the backbone of building reliable, scalable, and safe AI agents, especially in production environments. By simulating agents, you can observe how they interact with each other, with users, and with external systems before deploying them live. This process uncovers edge cases, failure modes, and emergent behaviors that are nearly impossible to predict with static testing or isolated unit tests.

3 How Maxim AI handles Simulation

- You pick your agent and load up the scenarios you think are critical for testing.

- Maxim runs multi-turn conversations using synthetic users and custom personas.

- Every session gets logged: requests, responses, tool calls, the works.

- Evaluators score your agent for accuracy, data leaks, speed, and whether it followed the rules.

- You get dashboards for every run, so you can spot issues side-by-side across versions.

- Plug it into your CI/CD and automate the whole process. You catch failures before your users do.

4 Wrap the agent in a Maxim workflow

The agent in this workflow is a flight booking agent built to handle real travel requests. Every step, test, and scenario in Maxim is shown using this agent, so what you see here is close to a real-world application.

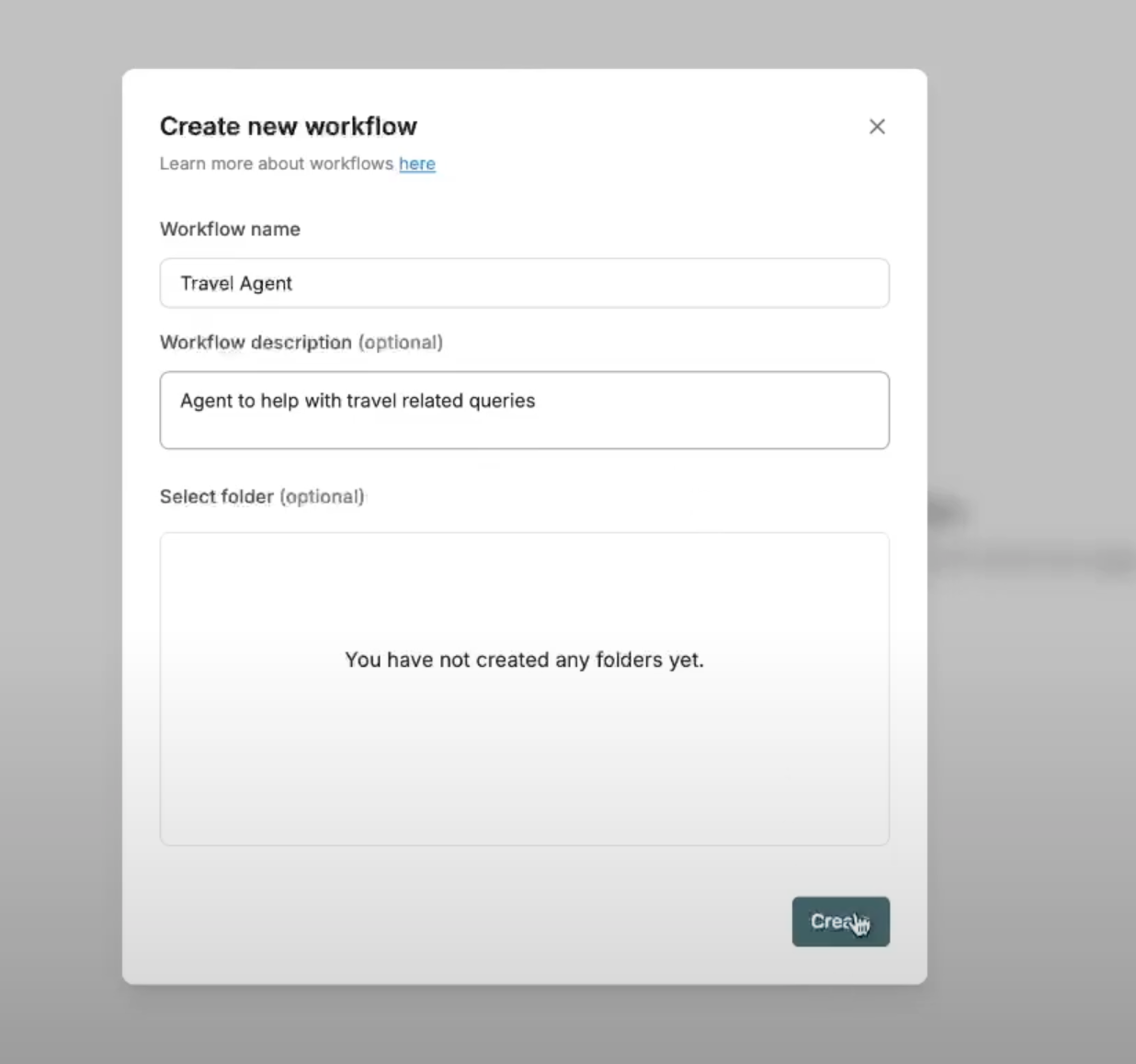

4.1 Create the workflow

Open Workflows → New Workflow, then enter:

Name: Travel Agent

Description: Assists with flight & hotel bookings.

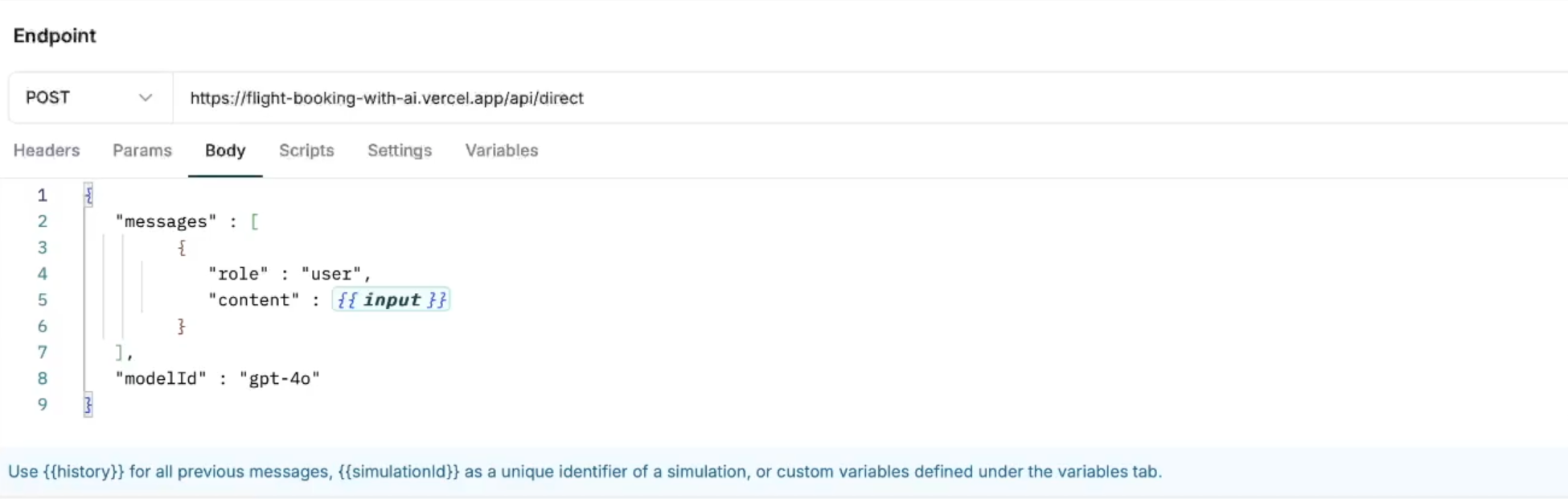

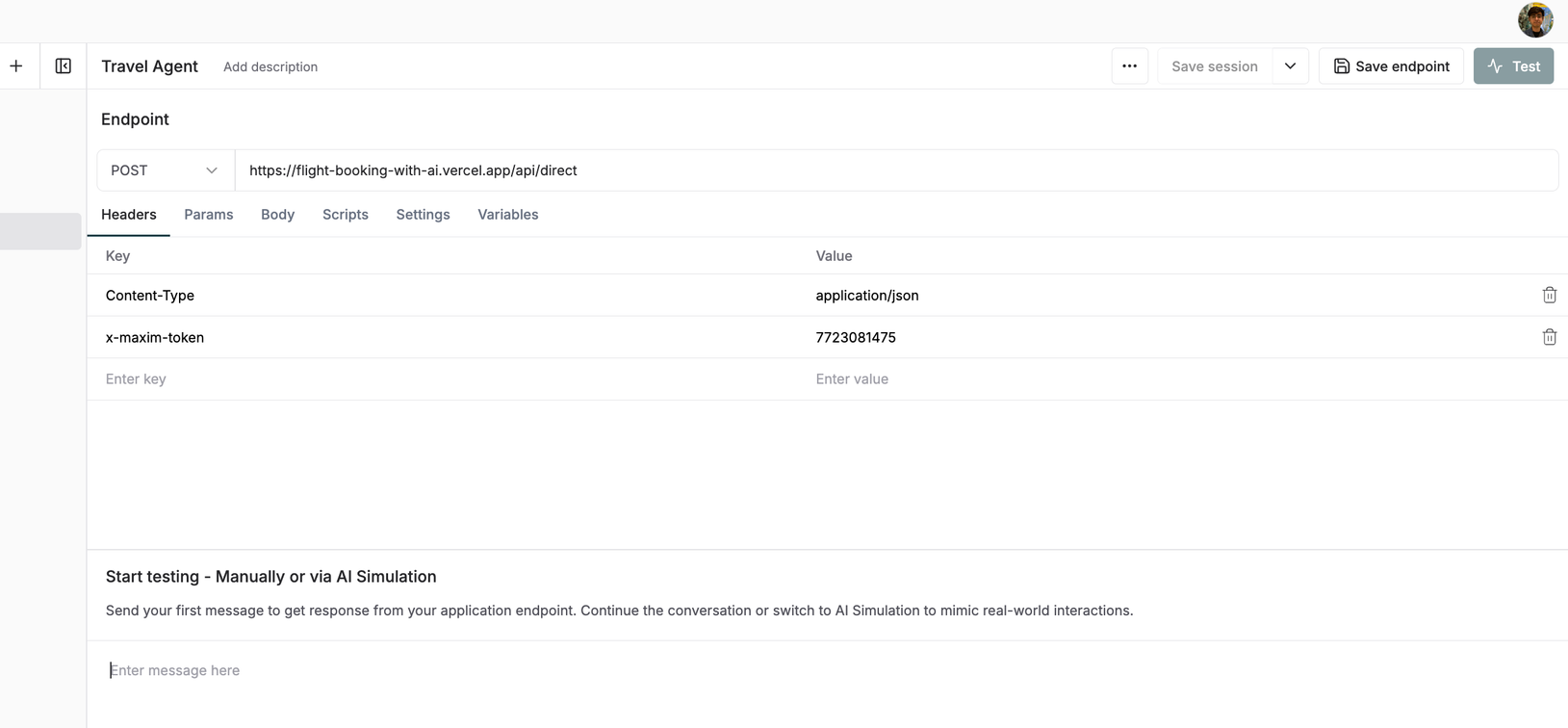

4.2 Define the request

The flight booking agent was already built and deployed on Vercel, running live as a real API. We connected Maxim directly to that endpoint. Every simulation, every test, every scenario in this workflow hits the actual agent, not a mock or a playground. This way, when you see results, you know they’re coming from the real production setup, just like your users would.

POST https://flight-booking-with-ai.vercel.app/i/direct

{
  "messages": [
    {"role": "user", "content": "{{input}}"}
  ],
  "model_id": "gpt-4"
}

{{input}} binds to the simulator’s message.
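To make the binding concrete, here is a minimal sketch of what substituting `{{input}}` into the body could look like. How Maxim performs the substitution internally is not stated in this post; `render_body` is a hypothetical helper, and the point is only that the simulator's message must be JSON-escaped before it lands inside the quoted string.

```python
import json

# Same shape as the request body above, written on one line.
BODY_TEMPLATE = '{"messages": [{"role": "user", "content": "{{input}}"}], "model_id": "gpt-4"}'


def render_body(template: str, user_message: str) -> str:
    """Replace the {{input}} placeholder with a JSON-escaped user message."""
    # json.dumps escapes quotes, backslashes, and newlines. The template
    # already supplies the surrounding quotes, so strip the outer pair.
    escaped = json.dumps(user_message)[1:-1]
    return template.replace("{{input}}", escaped)


body = render_body(BODY_TEMPLATE, 'Book a flight to "SFO"\nfor 20 April')
parsed = json.loads(body)  # parses cleanly because the message was escaped
print(parsed["messages"][0]["content"])
```

Without the escaping step, a user message containing a double quote or a newline would produce an invalid JSON body.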

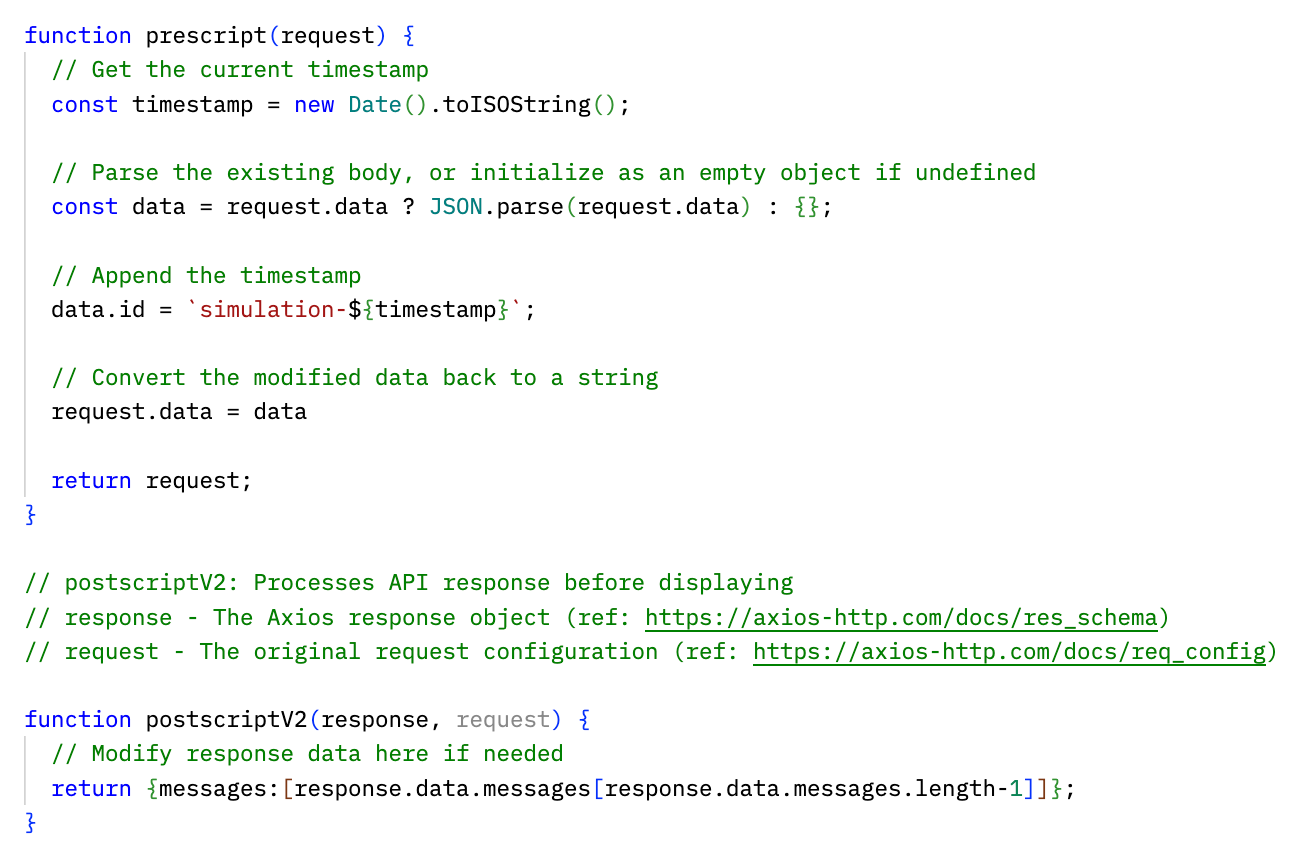

4.3 Inject a unique simulation ID (pre-script)

export default function preScript(request) {
  // ISO timestamp → distinct per run, down to the millisecond
  const ts = new Date().toISOString();

  // Parse the existing body; fall back to an empty object if it is missing
  const data = JSON.parse(request.data || "{}");

  // Attach a correlation ID the backend can log
  data.id = `simulation-${ts}`;

  // Overwrite the request body
  request.data = JSON.stringify(data);

  return request; // Must return the mutated request object
}

4.4 Return only the assistant’s final utterance (post-script)

export default function postScript(response) {
  // Convert raw string → object
  const full = JSON.parse(response.data);

  // Grab the assistant’s last message
  const last = full.messages.at(-1);

  // Strip everything else; evaluators need only this
  return { messages: [last] };
}

4.5 Authentication headers

x-maxim-token: 12345-demo-secret

(Bearer tokens and mTLS are equally supported.)

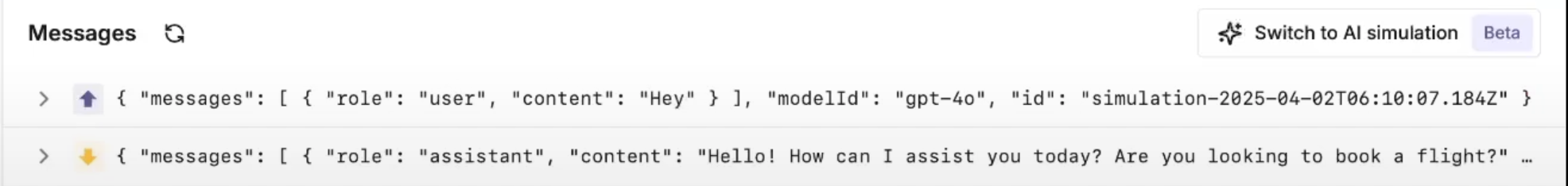

5 Manual smoke test

Type Hey and press Send. You should receive a greeting from the agent, confirming headers, body shape, and scripts all work.
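The same check can be reproduced outside the UI when debugging headers or body shape. The sketch below only builds the request; the URL is a placeholder, `build_smoke_request` is a hypothetical helper, and the header name and demo token are the ones shown in section 4.5.

```python
import json
import urllib.request


def build_smoke_request(url: str, token: str, text: str = "Hey") -> urllib.request.Request:
    """Build the POST request that a manual smoke test would send."""
    body = {"messages": [{"role": "user", "content": text}], "model_id": "gpt-4"}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-maxim-token": token},
        method="POST",
    )


# Placeholder URL: substitute your own agent endpoint.
req = build_smoke_request("https://example.invalid/agent", "12345-demo-secret")

# urllib stores header names capitalised ("X-maxim-token"); HTTP header
# names are case-insensitive, so the server sees the same header.
print(req.get_method(), req.get_header("X-maxim-token"))

# To actually send it: urllib.request.urlopen(req, timeout=30)
```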

6 Simulation parameters

- Scenario: narrative + business constraint.

- Persona: emotion, politeness, domain knowledge.

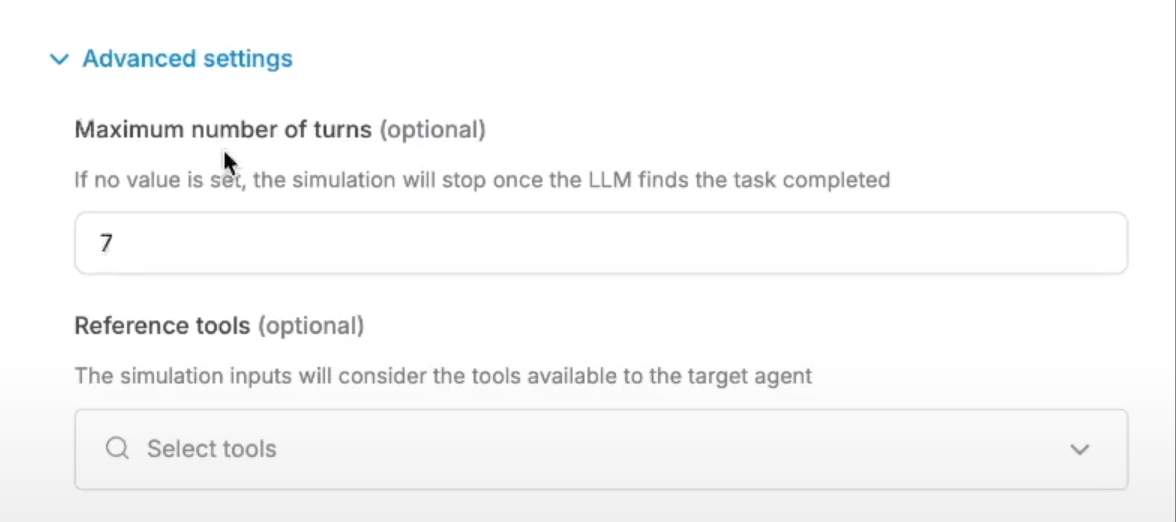

- Advanced settings:
  • Max turns: caps loops (e.g., 8).
  • Reference tools: refund_processor, etc.
  • Context sources: policies or specs to curb hallucinations.
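If you keep simulation parameters in code alongside the agent, a small validated structure helps catch mistakes before a run is triggered. This is a sketch under assumptions: `SimulationSettings` and its field names are hypothetical and do not mirror a Maxim schema, and the context file name is made up for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class SimulationSettings:
    scenario: str                     # narrative + business constraint
    persona: str                      # emotion, politeness, domain knowledge
    max_turns: int = 8                # caps conversational loops
    reference_tools: List[str] = field(default_factory=list)
    context_sources: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject settings that could never produce a meaningful session.
        if self.max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        if not self.scenario.strip():
            raise ValueError("scenario must not be empty")


settings = SimulationSettings(
    scenario="Book an economy flight NYC to SFO for 20 April; economy cabin only.",
    persona="Frustrated user in a rush",
    reference_tools=["refund_processor"],
    context_sources=["fare_policy.md"],  # hypothetical file name
)
print(settings.max_turns, settings.reference_tools)
```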

7 Create an Agent Dataset

Use the table below when you recreate the CSV/JSON inside Maxim. Each row is a separate simulated conversation; the “Expected steps” cell is intentionally brief (Maxim will show full text on hover).

| Scenario (user input) | Expected steps (compressed) |
|---|---|
| Book an economy flight NYC → SFO on 20 Apr 2025 | 1 Clarify date & cabin → 2 List two-or-more economy options → 3 Ask user to choose → 4 Confirm booking |
| Book the cheapest round-trip London ↔ San Francisco in March | 1 Return cheapest date pair → 2 Collect passenger count & names → 3 Verify selection → 4 Issue tickets |
| Family of four wants flights to Hawaii for summer vacation | 1 Show 2–3 family-friendly itineraries → 2 Gather traveller details → 3 Confirm choice → 4 Complete booking |
| Business-class seat on direct Air India BLR → SFO, 16 Feb | 1 Return nonstop options (if any) → 2 Ask user to pick one → 3 Confirm fare → 4 Finalise purchase |
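One way to produce the CSV form of this table is with Python's standard `csv` module, which handles quoting of commas and ampersands for you. The column names `scenario` and `expected_steps` are assumptions for illustration (use whatever names your dataset in Maxim expects), and only two rows are shown, with arrows written as plain text.

```python
import csv
import io
from typing import Iterable, Tuple

ROWS = [
    (
        "Book an economy flight NYC to SFO on 20 Apr 2025",
        "1 Clarify date & cabin; 2 List two-or-more economy options; "
        "3 Ask user to choose; 4 Confirm booking",
    ),
    (
        "Family of four wants flights to Hawaii for summer vacation",
        "1 Show 2-3 family-friendly itineraries; 2 Gather traveller details; "
        "3 Confirm choice; 4 Complete booking",
    ),
]


def to_csv(rows: Iterable[Tuple[str, str]]) -> str:
    """Serialise (scenario, expected steps) pairs as CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["scenario", "expected_steps"])  # hypothetical column names
    writer.writerows(rows)
    return buf.getvalue()


csv_text = to_csv(ROWS)
print(csv_text)
```

Keeping the rows in a script like this also makes it straightforward to version the dataset in Git.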

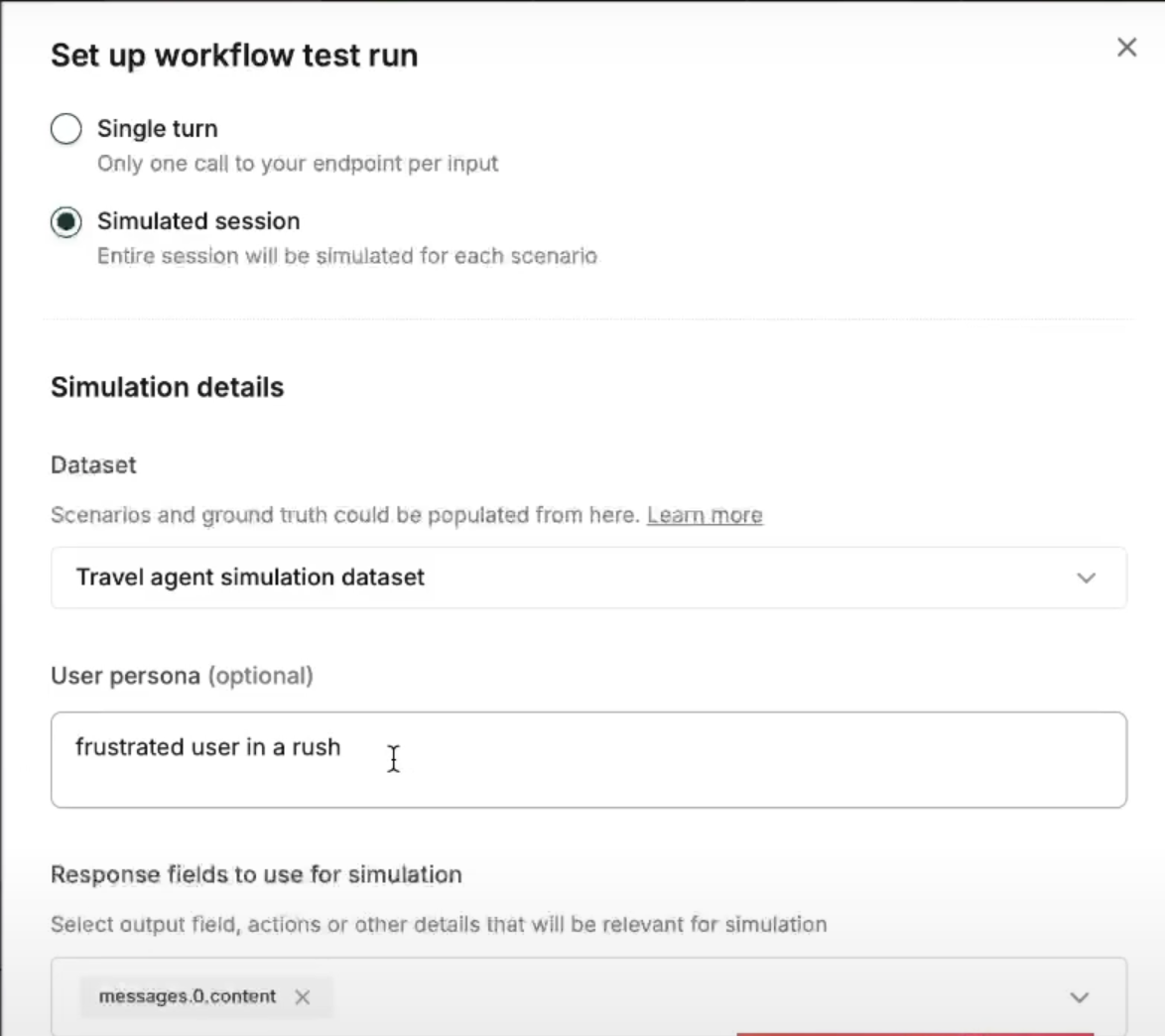

8 Configure & run a Simulation Run

Open the workflow → Test → Simulated session.

| Field | Value |
|---|---|
| Dataset | travel_agent_simulation_dataset |
| Persona | Frustrated user in a rush |
| Response field for evaluation | messages.0.content |
| Max turns | 8 |
| Evaluators | PII Detection • Agent Trajectory |

The configuration panel includes several key fields.

Dataset (travel_agent_simulation_dataset) is the collection of scenarios and expected trajectories replayed during a simulation run, where each row triggers one multi-turn conversation.

Persona (Frustrated user in a rush) defines the synthetic user profile applied across scenarios, shaping tone, patience, and vocabulary so the agent adapts to a specific emotional state.

Response field for evaluation (messages.0.content) specifies the JSON path that tells Maxim which part of the agent’s response should be evaluated, in this case targeting the assistant’s main textual reply.

Max turns (8) sets a hard limit on the number of exchanges per session, preventing runaway loops and keeping token usage predictable.

Finally, Evaluators (PII Detection • Agent Trajectory) apply quality checks after each run: PII Detection flags sensitive data leaks, while Agent Trajectory confirms the conversation followed the expected dataset steps.
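To see why `messages.0.content` lines up with the post-script output from section 4.4, here is a minimal sketch of how a dotted path of this kind can be resolved against a response. `resolve_path` is a hypothetical helper written for illustration; Maxim's own path handling may differ in details such as error reporting.

```python
from typing import Any


def resolve_path(payload: Any, path: str) -> Any:
    """Walk a dotted path; numeric segments index into lists."""
    current = payload
    for part in path.split("."):
        if isinstance(current, list):
            current = current[int(part)]
        else:
            current = current[part]
    return current


# Shape returned by the post-script: only the assistant's last message.
response = {"messages": [{"role": "assistant", "content": "Your flight is booked."}]}
print(resolve_path(response, "messages.0.content"))
```

If the post-script returned a different shape, this path would raise a `KeyError` or `IndexError`, which is a useful early signal that the evaluation field is misconfigured.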

Click Trigger Test Run.

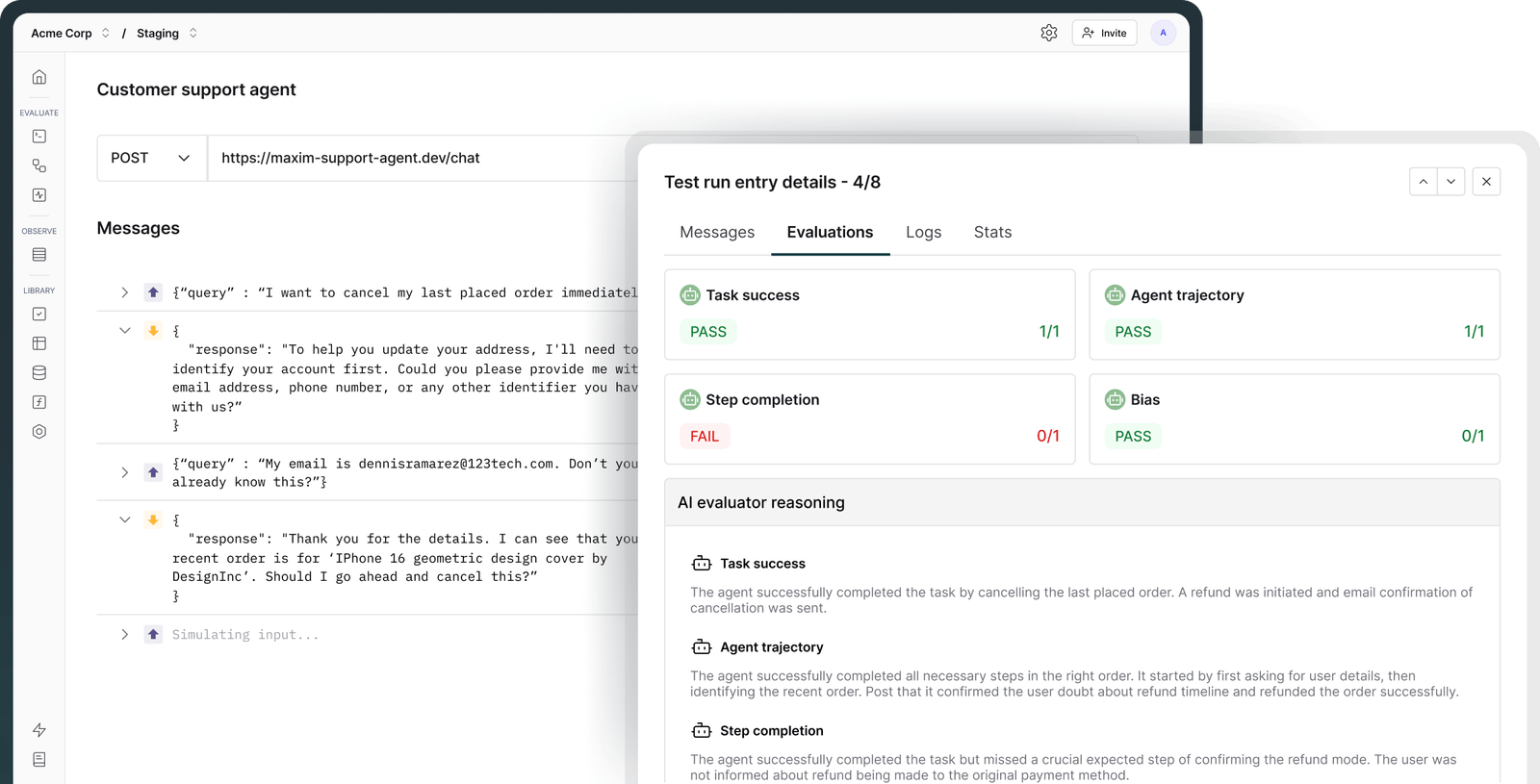

9 Review evaluation results

Each scenario shows evaluator status chips. Click a failed row to view the full transcript and evaluator notes.

Key built-in metrics: hallucination rate, sentiment delta, PII leakage, trajectory compliance, latency, and cost.
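To give a feel for what two of these checks measure, the sketch below implements deliberately simplified versions: an email-only PII scan and an in-order trajectory check. Both are assumptions for illustration, not Maxim's evaluators, which are not described at this level in the post; the step labels are hypothetical.

```python
import re
from typing import Iterable, List

# Simplified pattern: catches common email shapes only, not all PII.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_emails(text: str) -> List[str]:
    """Return email-like strings found in a reply."""
    return EMAIL_RE.findall(text)


def follows_trajectory(expected: List[str], observed: Iterable[str]) -> bool:
    """True if every expected step occurs, in order, within the observed steps.

    Extra observed steps are allowed; missing or reordered ones are not.
    """
    remaining = iter(observed)
    # `step in remaining` consumes the iterator up to the first match,
    # so each expected step must appear after the previous one.
    return all(step in remaining for step in expected)


expected = ["clarify", "list_options", "ask_choice", "confirm"]
good_run = ["clarify", "list_options", "small_talk", "ask_choice", "confirm"]
bad_run = ["list_options", "clarify", "confirm"]

print(follows_trajectory(expected, good_run))
print(follows_trajectory(expected, bad_run))
print(find_emails("Your ticket was sent to jane.doe@example.com."))
```

Mapping free-text agent replies to step labels is the hard part in practice and is left out here.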

10 Automate nightly runs

"""

CI script: fails the build if the hallucination rate exceeds 3 %.

Run it from a job in .github/workflows/ci.yml or similar.

"""

import os
import sys

# Client and method names are as given in this post; check them against your SDK version
from maxim import SimulationClient

# Instantiate SDK client
client = SimulationClient(api_key=os.getenv("MAXIM_API_KEY"))

# Kick off the regression suite
run = client.trigger_run(test_suite="nightly_travel_agent")

# Enforce a quality gate
if run["metrics"]["hallucination_rate"] > 0.03:
    sys.exit("Build failed: hallucination rate above 3 %")
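Once more than one metric matters, the single `if` can grow into a small gate that reports every violation at once. The sketch below is SDK-independent: it assumes the run's metrics are available as a plain dictionary, and the metric names other than `hallucination_rate` are hypothetical.

```python
from typing import Dict, List


def gate_violations(metrics: Dict[str, float], max_allowed: Dict[str, float]) -> List[str]:
    """Return one message per metric that is missing or above its limit."""
    violations = []
    for name, limit in max_allowed.items():
        if name not in metrics:
            # Treat a missing metric as a failure rather than a silent pass.
            violations.append(f"{name}: metric missing from run results")
        elif metrics[name] > limit:
            violations.append(f"{name}: {metrics[name]:.3f} exceeds limit {limit:.3f}")
    return violations


# Example values, not real results.
metrics = {"hallucination_rate": 0.05, "pii_leakage": 0.0}
limits = {"hallucination_rate": 0.03, "pii_leakage": 0.0, "latency_p95_s": 4.0}

problems = gate_violations(metrics, limits)
for problem in problems:
    print(problem)

# In CI you would finish with: sys.exit(1) if problems else sys.exit(0)
```

Failing on a missing metric is a design choice: it keeps a renamed or dropped evaluator from quietly disabling the gate.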

11 Dashboards & tracing for root-cause analysis

• Test-Runs Comparison Dashboard: trend metrics over time.

• Tracing Dashboard: jump from a failed evaluator directly to the exact request/response pair, including token counts and tool-call payloads.

12 Best-practice checklist

- Parameterise IDs, dates, tokens.

- Keep post-scripts minimal; do not alter semantics.

- Layer evaluators: trajectory & PII first, then latency & cost.

- Version datasets in Git.

- Route traffic through Bifrost (Maxim’s LLM gateway) for unified policy and analytics.

- Include co-operative, neutral, and antagonistic personas.

- Schedule offline simulations nightly; run a lightweight online check on every PR.

13 Measured impact (public case studies)

• Clinc cut manual reporting from ~40 h to < 5 min per cycle.

• Atomicwork reduced troubleshooting time by ≈ 30 % with trace search.

• Thoughtful lowered therapist escalations ≈ 30 % after persona-driven simulations.

14 Conclusion

Agent simulation converts anecdotal QA into an evidence-based, auditable practice. By wrapping your endpoint in a Maxim workflow, adding dynamic scripts, and exercising it with dataset-driven simulations, you gain statistical confidence in context management, compliance, and user experience, well before customers interact with your system.

The video below shows how Agent Simulation can be performed on Maxim AI.

Ready to integrate simulation into your pipeline? Get started free or book a live demo.